In a nutshell: Developers were supposed to be among the biggest beneficiaries of the generative AI hype, as specialized tools made churning out code faster and easier. But according to a recent study from Uplevel, a firm that analyzes coding metrics, the productivity gains aren't materializing – at least not yet.

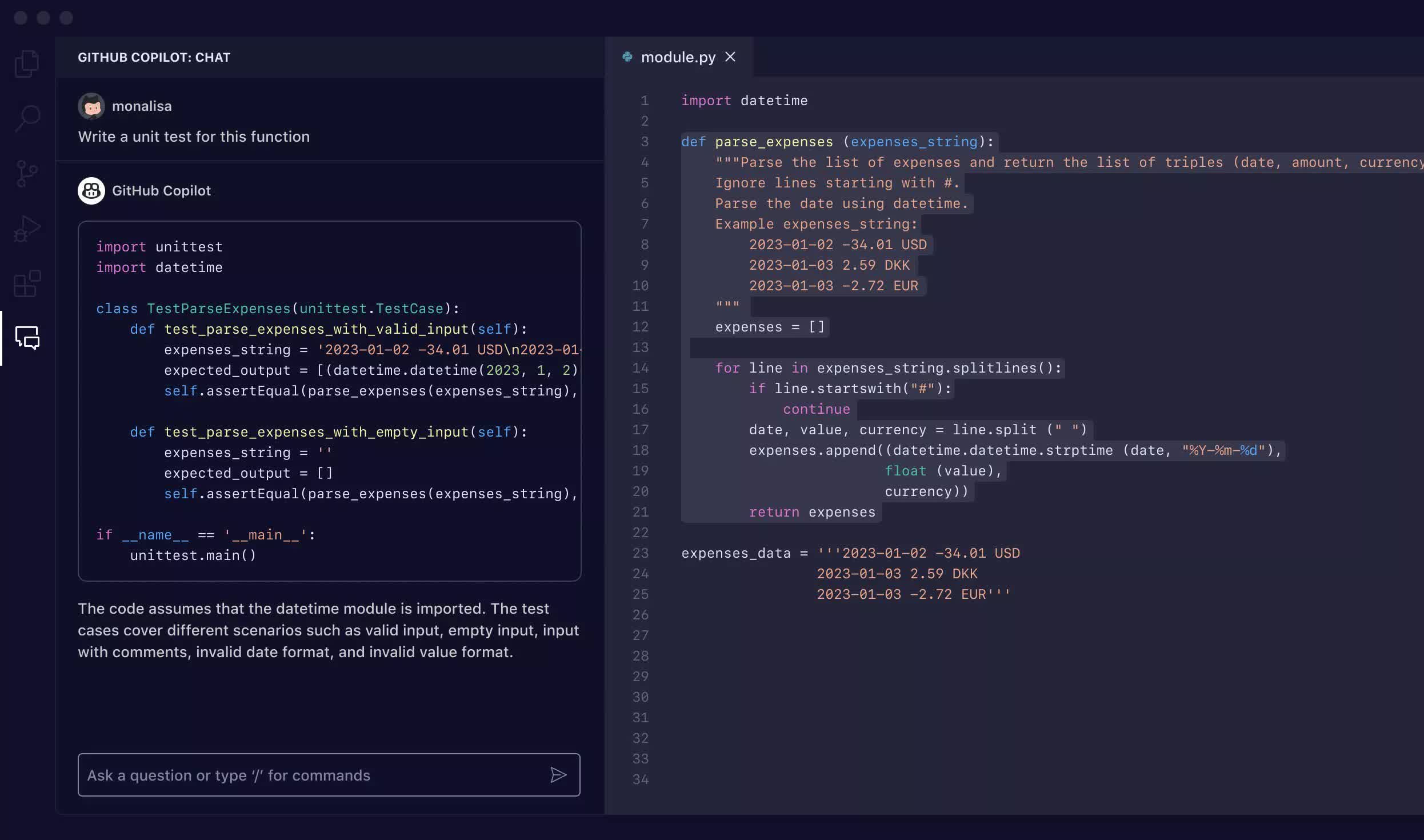

The study tracked around 800 developers, comparing their output with and without GitHub's Copilot coding assistant over three-month periods. Surprisingly, when measuring key metrics like pull request cycle time and throughput, Uplevel found no meaningful improvements for those using Copilot.

Matt Hoffman, a data analyst at Uplevel, told the publication CIO that his team initially expected developers to write more code, and thought the defect rate might actually go down because developers were using AI tools to help review code before submitting it. The findings defied those expectations.

In fact, the study found that developers using Copilot introduced 41% more bugs into their code, according to CIO. Uplevel also saw no evidence that the AI assistant was helping prevent developer burnout.

The findings counter claims from Copilot's makers at GitHub and other vocal proponents of AI coding tools about massive productivity boosts. An earlier GitHub-sponsored study claimed developers wrote code 55% faster with Copilot's aid.

Developers could indeed be seeing positive results, given that a report from Copilot's early days showed nearly 30% of new code involved AI assistance – a number that has likely grown. However, another possible explanation for the increased usage is that coders are developing a dependency on the tools and turning lazy.

Out in the field, the experience with AI coding assistants has been mixed so far. At custom software firm Gehtsoft USA, CEO Ivan Gekht told CIO that they've found the AI-generated code challenging to understand and debug, making it more efficient to simply rewrite from scratch sometimes.

A study from last year, in which ChatGPT got more than half of the programming questions put to it wrong, seems to back his observations, though the chatbot has improved considerably since then through multiple updates.

Gekht added that software development is "90% brain function – understanding the requirements, designing the system, and considering limitations and restrictions," while converting all this into code is the simpler part of the job.

However, at cloud provider Innovative Solutions, CTO Travis Rehl reported stellar results, with developer productivity increasing up to three times thanks to tools like Claude Dev and Copilot.

The conflicting accounts highlight that we're probably still in the early days for AI coding assistants. But with the tools advancing rapidly, who knows where they are headed down the line?

AI coding assistants do not boost productivity or prevent burnout, study finds