It's the Software, Stupid

The year is coming to a close, and AMD had hoped its powerful new MI300X AI chips would finally help it gain ground on Nvidia. But an extensive investigation by SemiAnalysis suggests the company's software challenges are letting Nvidia maintain its comfortable lead.

SemiAnalysis pitted AMD's Instinct MI300X against Nvidia's H100 and H200 and found several notable differences between the chips. For the uninitiated, the MI300X is a GPU accelerator built on AMD's CDNA 3 architecture and designed for high-performance computing, particularly AI workloads.

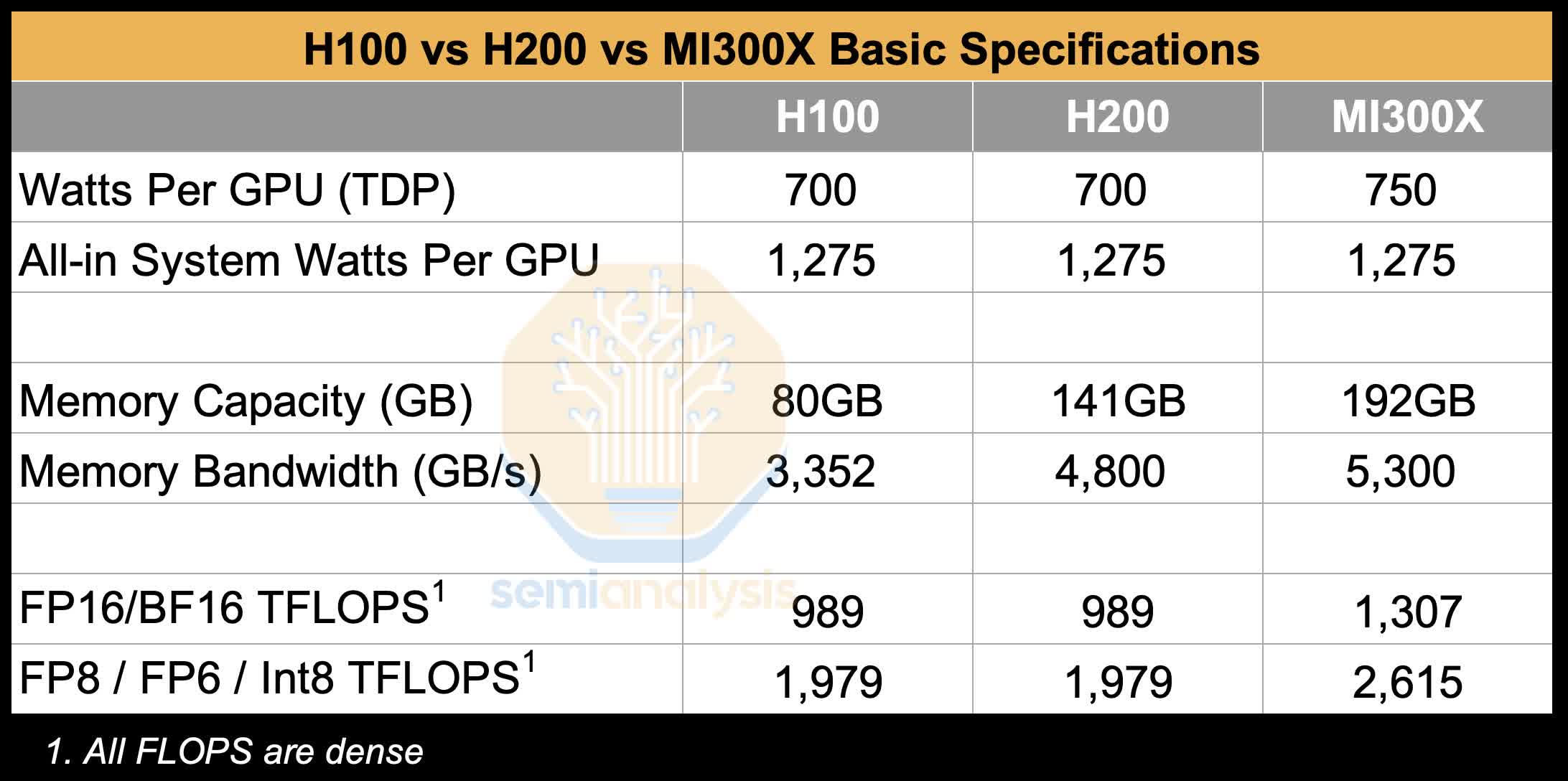

On paper, the figures look excellent for AMD: the chip offers 1,307 teraflops of FP16 compute and a massive 192GB of HBM3 memory, outclassing both of Nvidia's rival offerings. AMD's solutions also promise a lower total cost of ownership than Nvidia's pricey chips and InfiniBand networking.

However, as the SemiAnalysis crew discovered over five months of rigorous testing, raw specs are not the whole story. Despite the MI300X's impressive silicon, AMD's software ecosystem took significant effort to use effectively. SemiAnalysis had to rely heavily on AMD engineers to fix bugs and other issues throughout its benchmarking and testing.

This is a far cry from Nvidia, whose hardware and software, the analysts noted, tend to work smoothly out of the box without any handholding from Nvidia staff.

Moreover, the software woes weren't limited to SemiAnalysis' testing; AMD's customers were feeling the pain too. For instance, TensorWave, the largest cloud provider built on AMD's GPUs, had to give AMD engineers access to the very MI300X chips it had purchased, just so AMD could debug its own software.


The troubles don't end there. From integration problems with PyTorch to subpar scaling across multiple chips, AMD's software consistently fell short of Nvidia's proven CUDA ecosystem. SemiAnalysis also noted that many of AMD's AI libraries are essentially forks of Nvidia's, which leads to suboptimal results and compatibility issues.

"The CUDA moat has yet to be crossed by AMD due to AMD's weaker-than-expected software Quality Assurance (QA) culture and its challenging out-of-the-box experience. As fast as AMD tries to fill in the CUDA moat, Nvidia engineers are working overtime to deepen said moat with new features, libraries, and performance updates," reads an excerpt from the analysis.

The analysts did find a glimmer of hope in pre-release BF16 development branches of the MI300X software stack, which showed much better performance. But by the time that code reaches production, Nvidia will likely have its next-gen Blackwell chips available (though Nvidia is reportedly having some growing pains with that rollout).

Taking these issues into account, SemiAnalysis listed a series of recommendations for AMD, starting with giving Team Red's engineers more compute and engineering resources to fix and improve the ecosystem.

> Met with @LisaSu today for 1.5 hours as we went through everything
>
> She acknowledged the gaps in AMD software stack
>
> She took our specific recommendations seriously
>
> She asked her team and us a lot of questions
>
> Many changes are in flight already!
>
> Excited to see improvements coming https://t.co/38aAwwIdEI
>
> – Dylan Patel (@dylan522p) December 23, 2024

SemiAnalysis founder Dylan Patel even met with AMD CEO Lisa Su for an hour and a half. He posted on X that she acknowledged the gaps in AMD's software stack and took the firm's recommendations seriously, adding that many changes are already in flight.

Still, AMD faces an uphill climb after years of apparently neglecting this critical component. As much as the analysts want AMD to compete legitimately with Nvidia, the "CUDA moat" looks set to keep Nvidia firmly in the lead for now.

AMD's poor software optimization is letting Nvidia maintain an iron grip over AI chips