Forward-looking: A new technology developed at the University of California, Davis, is offering hope to people who have lost their ability to speak due to neurological conditions. In a recent clinical trial, a man with amyotrophic lateral sclerosis was able to communicate with his family in real time using a brain-computer interface (BCI) that translates his neural activity into spoken words, complete with intonation and even simple melodies.

Unlike previous systems that convert brain signals into text, this BCI synthesizes actual speech almost instantaneously. In effect, it is a digital recreation of the vocal tract, enabling natural conversation in which the user can interrupt, ask questions, and express emotion through changes in pitch and emphasis. The system translates brain activity into speech in about one-fortieth of a second (roughly 25 milliseconds), so the user experiences little to no conversational delay. That is a significant improvement over older text-based approaches, which often felt more like sending text messages than having a voice call.

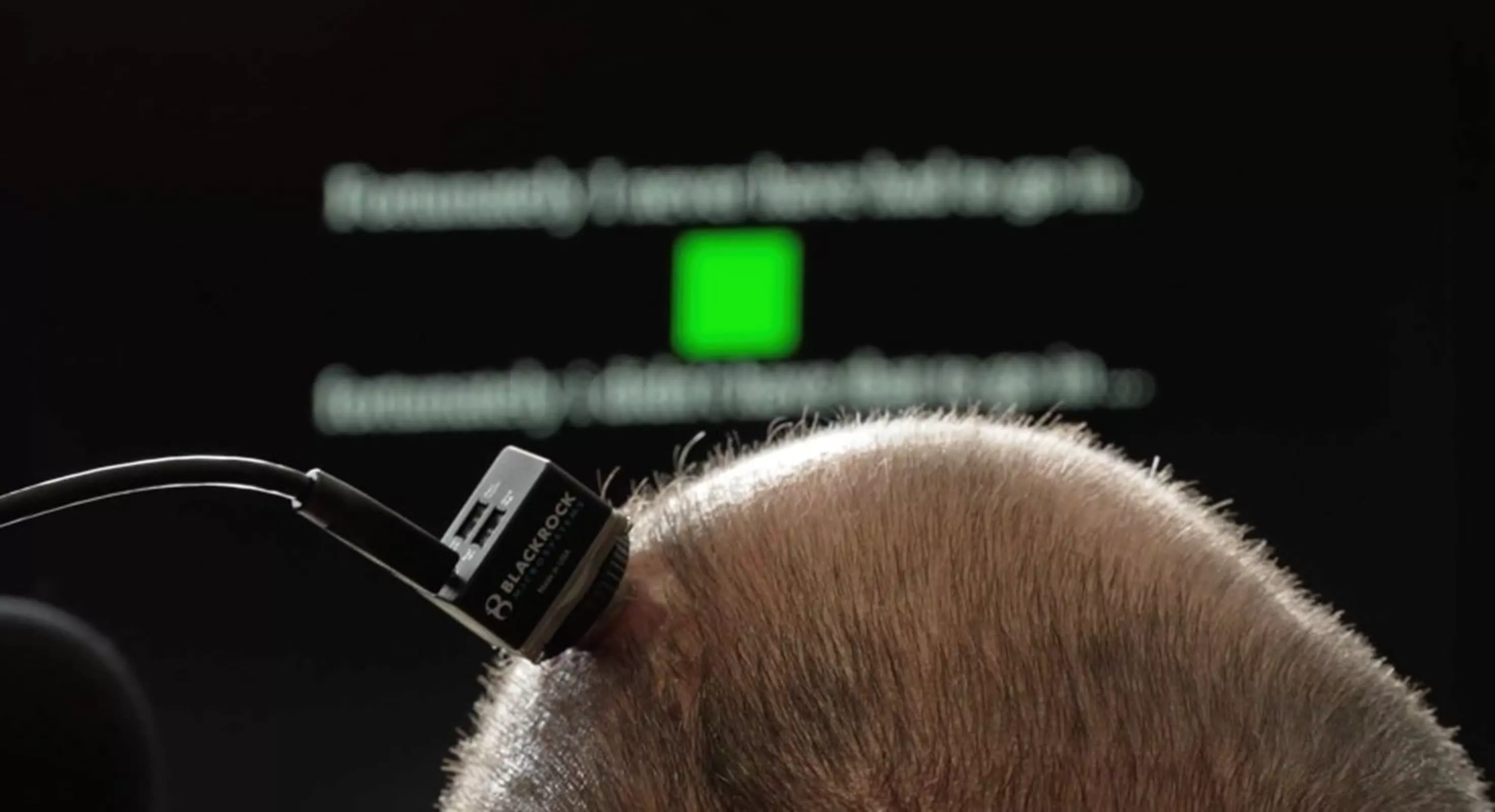

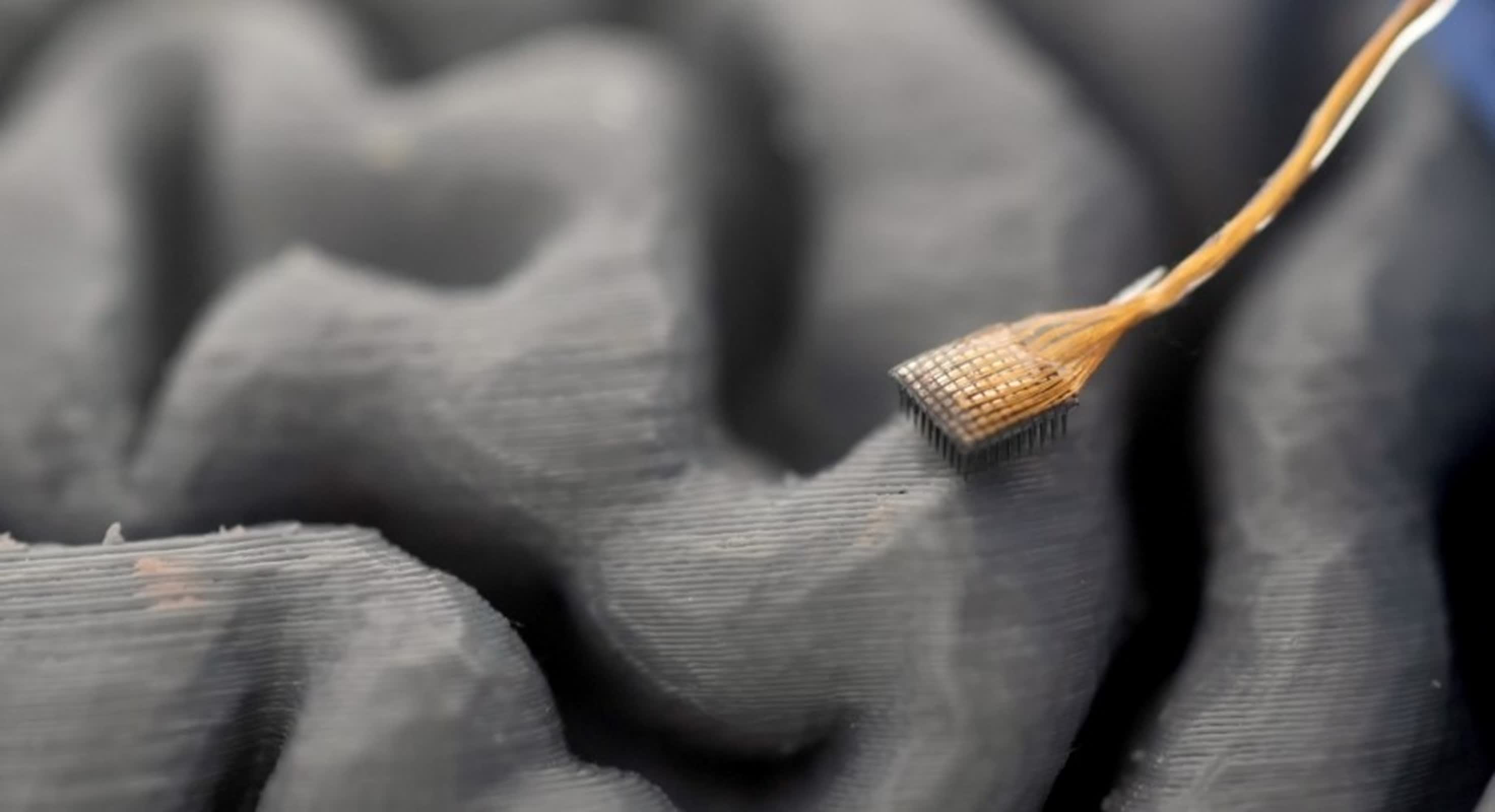

The technology works by implanting four microelectrode arrays into the region of the brain responsible for speech production. These arrays record the electrical activity of hundreds of individual neurons as the participant attempts to speak. The neural data is then transmitted to external computers equipped with advanced artificial intelligence algorithms. These algorithms have been trained using data collected while the participant tried to say specific sentences displayed on a screen. By matching patterns of neural firing to the intended speech sounds at each moment, the system learns to reconstruct the user's voice from brain signals alone.
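To make the idea of matching neural firing patterns to speech sounds more concrete, here is a minimal sketch in Python. It is not the UC Davis team's method: it swaps in a plain ridge regression on simulated data for whatever models the researchers actually used. The channel count, the number of speech features, and the function names `fit_ridge_decoder` and `decode_frame` are all assumptions made for illustration.

```python
import numpy as np


def fit_ridge_decoder(neural, speech, alpha=1.0):
    """Fit a linear map W so that neural @ W approximates the speech features.

    neural: (n_frames, n_channels) firing-rate features, one row per time window
    speech: (n_frames, n_speech) target speech features for the same windows
    alpha:  ridge penalty that keeps the solution stable
    """
    n_channels = neural.shape[1]
    gram = neural.T @ neural + alpha * np.eye(n_channels)
    return np.linalg.solve(gram, neural.T @ speech)


def decode_frame(weights, neural_frame):
    """Predict speech features for a single window of neural activity."""
    return neural_frame @ weights


# Simulated stand-in for training data (hypothetical sizes, not from the study).
rng = np.random.default_rng(0)
n_frames, n_channels, n_speech = 2000, 256, 20

# Pretend there is a hidden relationship between firing and intended sounds.
true_map = rng.normal(size=(n_channels, n_speech))
neural_train = rng.normal(size=(n_frames, n_channels))
speech_train = neural_train @ true_map + 0.1 * rng.normal(size=(n_frames, n_speech))

# "Training": learn the mapping from cued-sentence recordings.
weights = fit_ridge_decoder(neural_train, speech_train)

# "Use": decode a new, unseen window of neural activity.
new_frame = rng.normal(size=n_channels)
predicted = decode_frame(weights, new_frame)
target = new_frame @ true_map
error = np.linalg.norm(predicted - target) / np.linalg.norm(target)
print(f"relative decoding error: {error:.4f}")
```

A real system would feed the decoded features to a speech synthesizer and would have to cope with noisy, drifting recordings. The sketch only shows the core step described above: learning a mapping from paired examples of neural activity and intended speech, then applying it moment by moment.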

One of the remarkable features of the UC Davis system is its expressiveness. The participant was not only able to generate new words that the system had not encountered before, but also to modulate the tone of his synthesized voice to indicate questions or emphasize specific words.

The technology could even detect when he was trying to sing, allowing him to produce short melodies. In tests, listeners could understand nearly 60 percent of the synthesized words, a dramatic improvement over the 4 percent intelligibility when the participant attempted to speak unaided.

This BCI represents a major step toward restoring natural communication for people with paralysis or severe speech impairments. Previous assistive technologies, such as eye trackers or devices that convert neural signals to text, have been slow and cumbersome, often demanding considerable effort from both the user and caregivers.

A model of a human brain showing a microelectrode array. The arrays are designed to record brain activity.

The system is still in its early stages. So far, it has been tested with a single participant, and researchers acknowledge the need to replicate these results with more individuals and across different causes of speech loss. There are also technical challenges to overcome, such as improving the intelligibility of synthesized speech and adapting the system for long-term, everyday use.

Nonetheless, the results mark a significant milestone in the field of neuroprosthetics. As clinical trials continue, researchers and participants are hopeful that this technology will soon offer a solution for those who have been silenced by neurological disease.

Brain implant at UC Davis translates thoughts into spoken words with emotion