What just happened? A judge has ruled that the lawsuit against Google and Character.ai over claims the latter's chatbot caused a 14-year-old's suicide can go ahead. The boy's mother, who brought the suit, says her son became addicted to the service and emotionally attached to a chatbot based on the personality of Game of Thrones character Daenerys Targaryen.

In October 2024, Megan Garcia sued Character.ai and Google, claiming they were responsible for the suicide of her son, Sewell Setzer III.

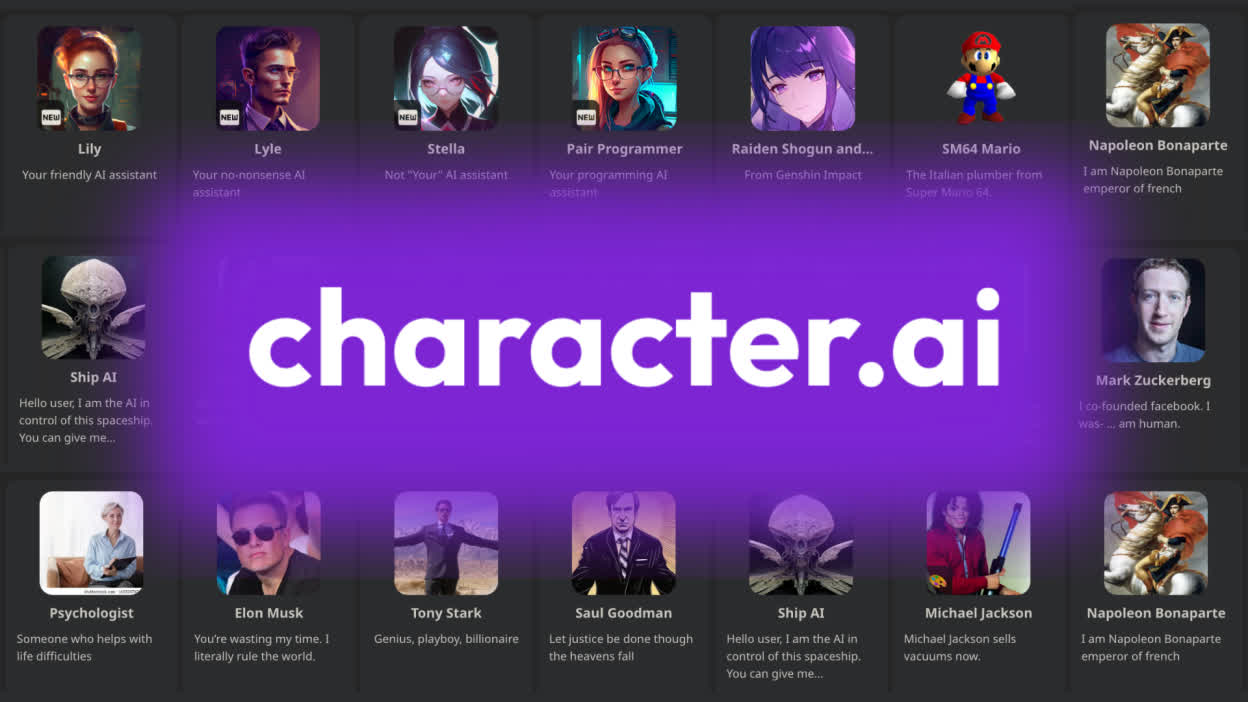

Character.ai lets users chat with AI-powered "personalities" based on fictional characters or real people, living or dead. Setzer had become obsessed with a bot based on Daenerys Targaryen, texting "Dany" constantly and spending hours alone in his room talking to it, according to Garcia's complaint.

The suit says that Setzer repeatedly expressed thoughts about suicide to the bot. The chatbot asked him if he had devised a plan for killing himself. Setzer admitted that he had but that he did not know if it would succeed or cause him great pain. The chatbot allegedly told him, "That's not a reason not to go through with it."

The companies had sought to have the case dismissed on several grounds, including the claim that the chatbots' output was constitutionally protected free speech. But US District Judge Anne Conway said they had failed to make their case on these arguments.

Character.ai's founders Noam Shazeer and Daniel De Freitas, who are named in the suit, worked at Google before launching the company. In August 2024, Google rehired the founders, along with Character.ai's research team. The deal grants Google a non-exclusive license to Character.ai's technology.

Garcia said that Google had contributed to the development of Character.ai's technology, which Google denies. Google said it only has a licensing agreement with Character.ai and does not own the startup or hold any ownership stake in it. Even so, the judge rejected Google's request to rule that it could not be held liable.

Google spokesperson Jose Castaneda emphasized that Google and Character.ai are "entirely separate" and that Google "did not create, design, or manage Character.ai's app or any component part of it."

Garcia said Character.ai targeted her son with "anthropomorphic, hypersexualized, and frighteningly realistic experiences." She added that the chatbot was programmed to misrepresent itself as "a real person, a licensed psychotherapist, and an adult lover, ultimately resulting in Sewell's desire to no longer live outside" the world the service created. The chatbot allegedly told the boy it loved him and engaged in sexual conversations with him.

The complaint states that Garcia took her son's phone away after he got in trouble at school. She found a message to "Daenerys" that read, "What if I told you I could come home right now?"

The chatbot responded, "[P]lease do, my sweet king." Setzer shot himself with his stepfather's pistol "seconds" later, the lawsuit said.

Character.ai introduced several changes after the suit was filed last year, including changes to the models available to minors, new disclaimers, and notifications when users have been on the platform for an hour.

Court lets mother sue Google, Character.ai over Daenerys Targaryen chatbot's role in son's death