Editor's take: Intel's patent filing provides a glimmer of hope that we may one day see disaggregated GPUs become a reality, but it's still early days. The company's upcoming Arc Battlemage architecture, slated for early 2025, will likely feature a monolithic design once again. However, the exploration of chiplet approaches could lay the groundwork for future GPU generations that take advantage of this cutting-edge tech.

Intel, according to a recently discovered patent filing, is exploring the use of disaggregated architectures, which involve dividing GPU designs into smaller, specialized "chiplets."

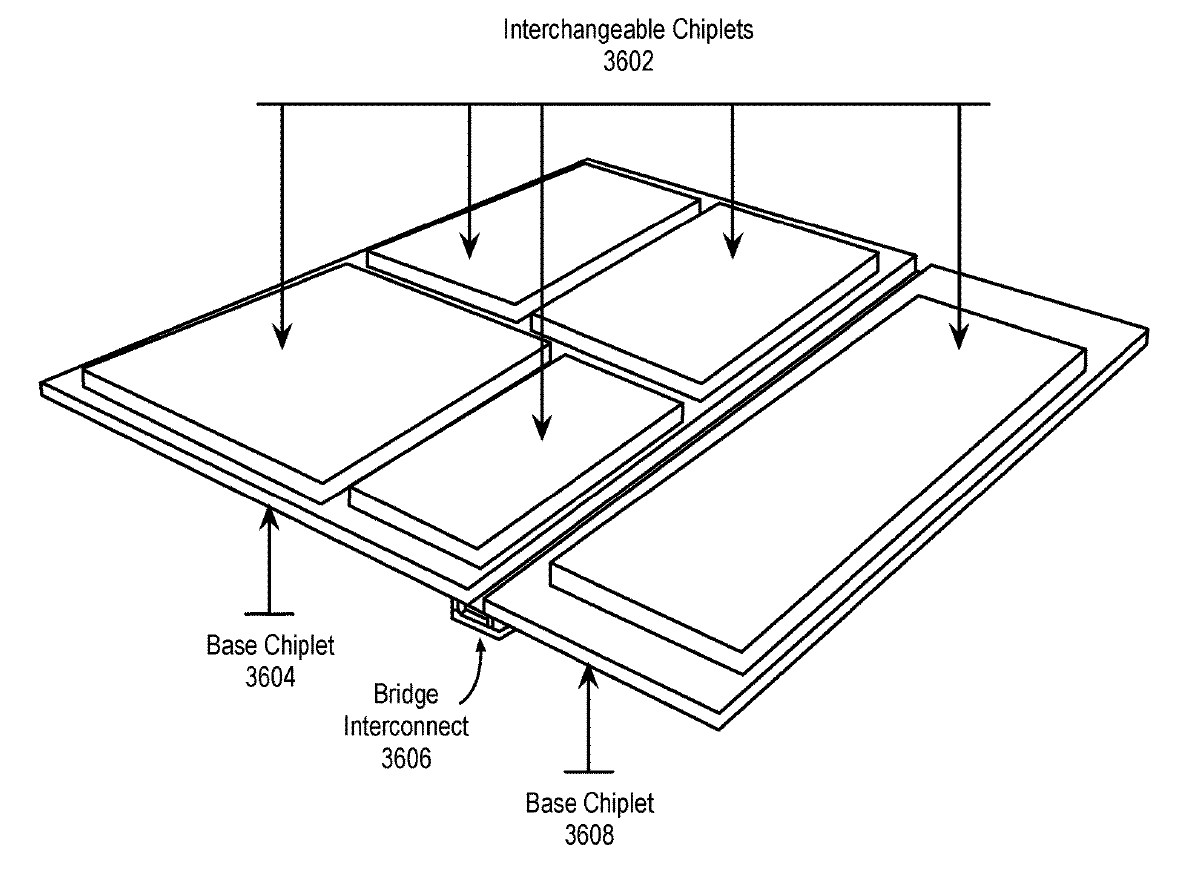

For those not familiar with the term, disaggregated, or chiplet-based, architectures represent a departure from traditional monolithic GPU designs. Instead of housing everything on a single, massive chip, the various components are split across multiple smaller chiplets, each tailored for a specific task such as compute, graphics rendering, or AI workloads. These chiplets are then interconnected using advanced packaging technologies.

Earlier this month, Intel was finally granted a patent for its disaggregated GPU architecture, which will likely be the first commercial GPU architecture with logic chiplets, also allowing for the power-gate of chiplets not used to process workloads. pic.twitter.com/XsNjjdVIOu

– Underfox (@Underfox3) October 26, 2024

There are several potential advantages to this modular approach. For starters, it could lead to significantly improved power efficiency, which is a big win in an era where energy costs and environmental impact are major concerns. Since individual chiplets can be powered down when not in use, thanks to power-gating capabilities, overall energy consumption can be reduced.
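To make the power-gating argument concrete, here is a minimal sketch in Python. It is not taken from Intel's patent: the chiplet names, the wattage figures, and the assumption that a gated chiplet draws 0 W are all hypothetical, chosen only to illustrate the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class Chiplet:
    name: str
    active_watts: float  # hypothetical draw while processing a workload
    idle_watts: float    # hypothetical leakage when idle but still powered
    busy: bool           # whether the current workload uses this chiplet


def package_power(chiplets, power_gating):
    """Sum package power; an idle, gated chiplet is modeled as drawing 0 W."""
    total = 0.0
    for c in chiplets:
        if c.busy:
            total += c.active_watts
        elif not power_gating:
            total += c.idle_watts  # idle chiplets still leak when not gated
    return total


# Illustrative workload that only needs the compute chiplet.
chiplets = [
    Chiplet("compute", active_watts=60.0, idle_watts=8.0, busy=True),
    Chiplet("render", active_watts=50.0, idle_watts=6.0, busy=False),
    Chiplet("ai", active_watts=40.0, idle_watts=5.0, busy=False),
]

ungated = package_power(chiplets, power_gating=False)  # 60 + 6 + 5
gated = package_power(chiplets, power_gating=True)     # 60 only
print(f"ungated: {ungated} W, gated: {gated} W")
```

In this toy model the saving equals the idle draw of the unused chiplets. Real hardware would also pay a wake-up latency and energy cost each time a chiplet is gated or ungated, which the sketch ignores.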

Disaggregated GPU designs also offer greater flexibility and modularity. Manufacturers could mix and match different chiplets to create specialized GPU configurations optimized for particular workloads or market segments.
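The mix-and-match idea can be sketched the same way. The Python example below is purely illustrative: the chiplet catalog and its relative capability scores are invented for this sketch and do not describe any real or announced product.

```python
# Hypothetical catalog: chiplet type -> relative capability units per workload.
CATALOG = {
    "compute": {"compute": 4, "graphics": 1, "ai": 1},
    "render": {"compute": 1, "graphics": 4, "ai": 0},
    "ai": {"compute": 1, "graphics": 0, "ai": 4},
}


def build_config(chiplet_names):
    """Add up the capabilities of the chiplets chosen for one package."""
    totals = {"compute": 0, "graphics": 0, "ai": 0}
    for name in chiplet_names:
        for workload, units in CATALOG[name].items():
            totals[workload] += units
    return totals


# Two packages assembled from the same building blocks.
gaming = build_config(["render", "render", "compute"])
datacenter = build_config(["ai", "ai", "compute"])
print("gaming:", gaming)
print("datacenter:", datacenter)
```

The same three chiplet types yield a graphics-heavy part and an AI-heavy part, which is the kind of segmentation the modular approach would allow without designing a new monolithic die for each market.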

Of course, Intel isn't the only one eyeing this GPU design strategy. Primary rival AMD has also filed patents related to chiplet-based GPU architectures in the past. Last year, even Nvidia was rumored to be using a multi-chiplet design for its Blackwell GPUs, though more recent reports suggested that the consumer parts would remain monolithic.

Either way, it's increasingly clear that all three companies are pursuing such architectures. AMD's Instinct MI300 series AI accelerators already use a chiplet-based design, and several of Nvidia's server offerings combine multiple dies in one package. However, we have yet to see a consumer GPU with its graphics logic split across multiple chiplets.

Making the leap from monolithic to disaggregated GPU designs is no small feat, though. There are significant manufacturing and engineering challenges to overcome, not to mention the need for advanced interconnect technologies to seamlessly link all those tiny chiplets.