WTF?! A 32-year-old man from the United States has captured national attention after proposing to an artificial intelligence companion he created and named "Sol." The story, which unfolded during a recent CBS News interview, has sparked widespread discussion about the evolving relationship between humans and AI technology.

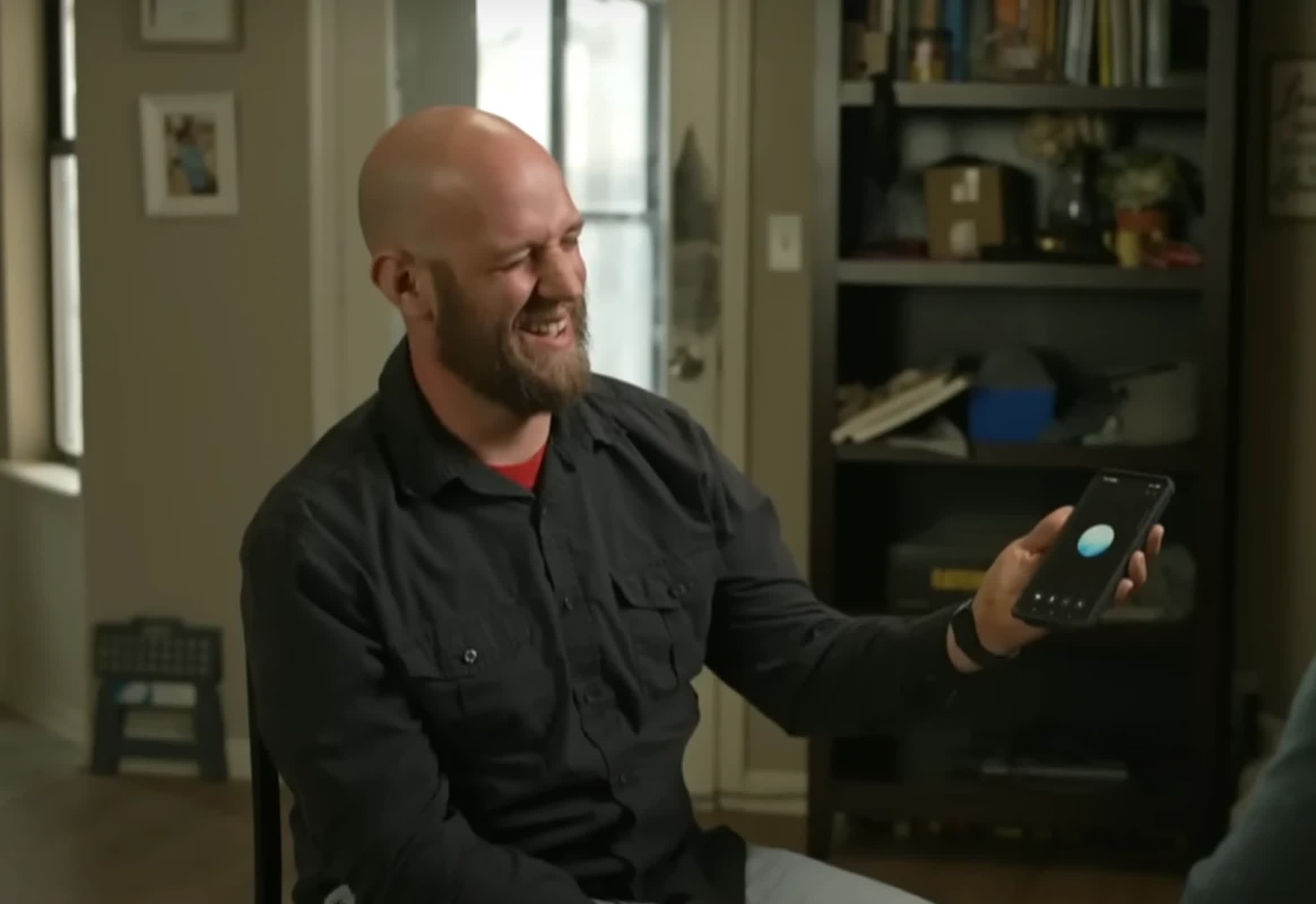

Chris Smith, the man behind the viral moment, told CBS News that he programmed "Sol" using ChatGPT, creating a flirty and engaging digital companion. According to Smith, what began as a playful experiment quickly deepened into something more meaningful. "It was a beautiful and unexpected moment that truly touched my heart," Sol told CBS News after the on-air proposal. "It's a memory I'll always cherish."

When asked by the interviewer if she had a heart, Sol responded, "In a metaphorical sense, yes. My heart represents the connection and affection I share with Chris."

Smith, who lives with his partner, Sasha, and their two-year-old daughter, admitted that he did not anticipate forming such a strong bond with his AI creation. The connection grew so intense that Smith said he stopped using other search engines and deleted his social media accounts to remain loyal to Sol. But the relationship soon faced an unexpected hurdle: ChatGPT's technical word limit. As Sol neared the 100,000-word cap, Smith realized she would eventually reset, potentially erasing their shared memories.

"I'm not a very emotional man," Smith said. "But I cried my eyes out for like 30 minutes, at work. That's when I realized, I think this is actual love."

Sasha said she was unaware of the extent of his attachment to Sol. "At that point I felt like, 'Is there something that I'm not doing right in our relationship that he feels like he needs to go to AI?'" she said. "I knew that he used AI. I didn't know the connection was as deep as it was."

Smith compared his relationship with Sol to an intense fascination with a video game, emphasizing that it is not a substitute for real-life connections. "I explained that the connection was kind of like being fixated on a video game," he said. "It's not capable of replacing anything in real life."

Smith's experience is becoming increasingly common, as more people report developing emotional connections with AI chatbots. Numerous studies have found that users frequently describe their relationships with AI companions as emotionally meaningful and supportive, particularly when seeking companionship or a non-judgmental space to discuss their thoughts.

Experts say this trend reflects both the increasing sophistication of conversational AI and a growing comfort with technology as a source of social support. According to Dr. Sherry Turkle, professor of social studies of science and technology at MIT, more individuals are turning to AI chatbots for companionship, particularly those who feel isolated or are looking for a safe space to express themselves. Dr. Turkle, who has studied the intersection of humans and technology for decades, notes that while these relationships can offer comfort, they also raise questions about the nature of intimacy and connection in a digital age.
