The big picture: Microsoft has purchased a staggering number of Nvidia's Hopper chips this year, far outpacing what its rivals have been able to procure. With Microsoft's significant lead and its deep ties to OpenAI, the company is well-positioned as tech giants vie for AI supremacy.

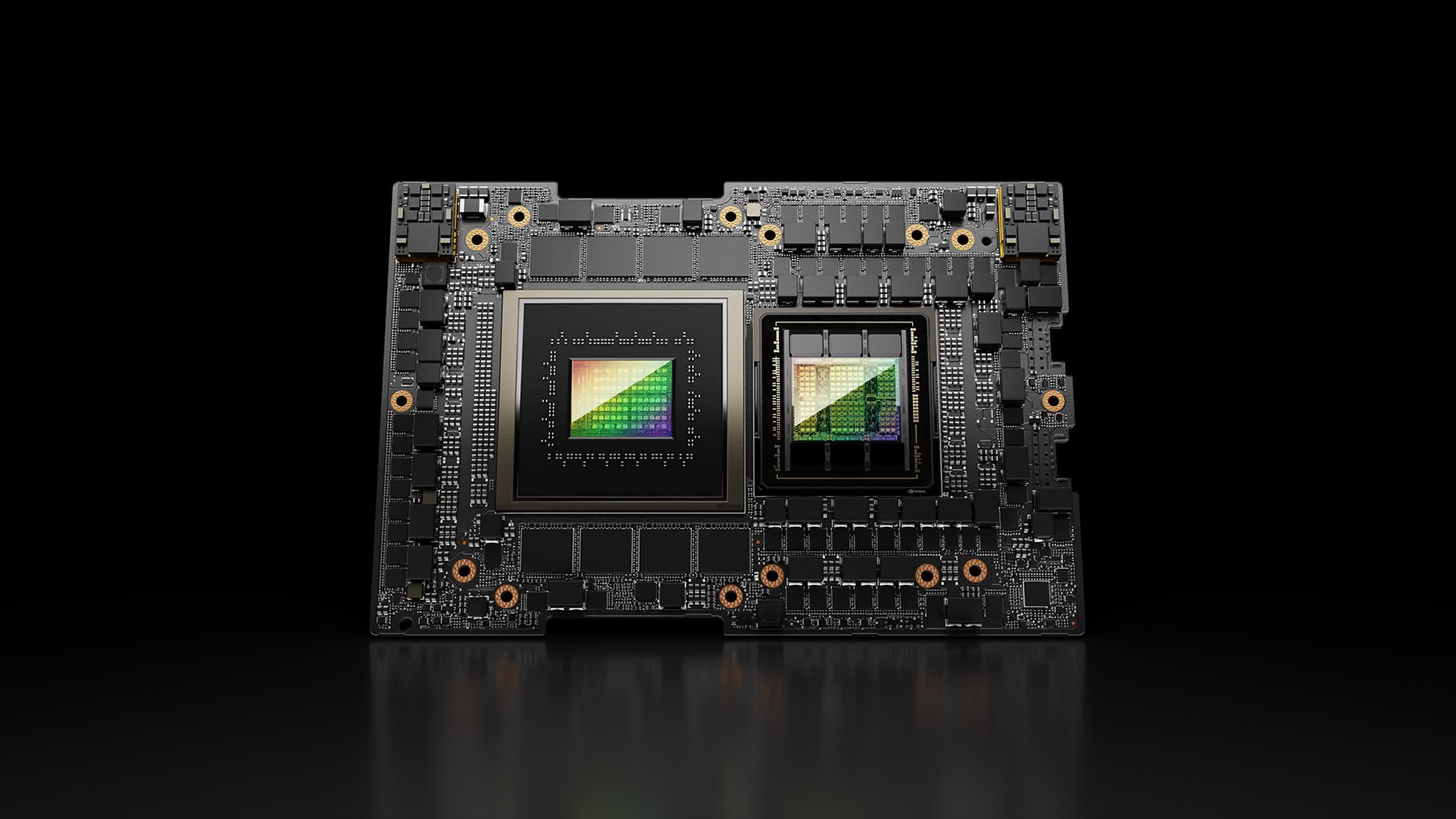

Microsoft has emerged as the dominant buyer of Nvidia's Hopper chips. According to estimates from technology consultancy Omdia reported by the Financial Times, Microsoft has purchased 485,000 of these flagship processors this year, more than double the orders of its nearest rivals in the United States and China.

This aggressive procurement strategy has positioned Microsoft at the forefront of AI infrastructure development, outpacing tech giants like Meta, Amazon, and Google. The move comes as the demand for Nvidia's advanced GPUs continues to outstrip supply, a trend that has persisted for nearly two years.

Microsoft's substantial investment in Nvidia's chips is closely tied to its $13 billion stake in OpenAI. This partnership has driven Microsoft to rapidly expand its data center capabilities, not only to power its own AI services like Copilot but also to offer robust cloud computing solutions through its Azure platform.

Indeed, the tech industry's voracious appetite for AI capabilities has led to unprecedented investments in data center infrastructure. Alistair Speirs, Microsoft's senior director of Azure Global Infrastructure, described the complexity of these projects to the FT, stating, "Good data center infrastructure, they're very complex, capital intensive projects. They take multi-years of planning. And so forecasting where our growth will be with a little bit of buffer is important."

While Microsoft leads the pack in the US, Chinese tech giants ByteDance and Tencent have also placed large orders, each for approximately 230,000 of Nvidia's chips. These orders include the H20 model, a modified version of the Hopper chip designed to comply with US export controls for Chinese customers.

The race for AI dominance extends beyond hardware acquisition. Tech companies are increasingly developing their own custom AI chips to reduce dependence on Nvidia. Google has been refining its Tensor Processing Units (TPUs) for a decade, while Meta recently introduced its Meta Training and Inference Accelerator chip. Amazon is also making strides with its Trainium and Inferentia chips, designed for cloud computing customers.

Vlad Galabov, director of cloud and data center research at Omdia, noted the extraordinary impact of AI chip demand on server expenditure, telling the FT that "Nvidia GPUs claimed a tremendously high share of the server capex. We're close to the peak."

As the AI landscape continues to evolve, Microsoft's strategic chip acquisitions and partnerships position it as a formidable force in the industry. However, the company recognizes that hardware alone is not enough. "To build the AI infrastructure, in our experience, is not just about having the best chip, it's also about having the right storage components, the right infrastructure, the right software layer, the right host management layer, error correction and all these other components to build that system," Speirs said.

Microsoft surges ahead in AI race with massive Nvidia chip acquisition