Something to look forward to: Pricing for Nvidia's Blackwell platform has emerged in dribs and drabs, from analyst estimates to CEO Jensen Huang's comments. Simply put, it's going to cost buyers dearly to deploy these performance-packed products. Morgan Stanley estimates that Nvidia will ship 60,000 to 70,000 B200 server cabinets in 2025, translating to at least $210 billion in annual revenue. Despite the high costs, the demand for these powerful AI servers remains intense.

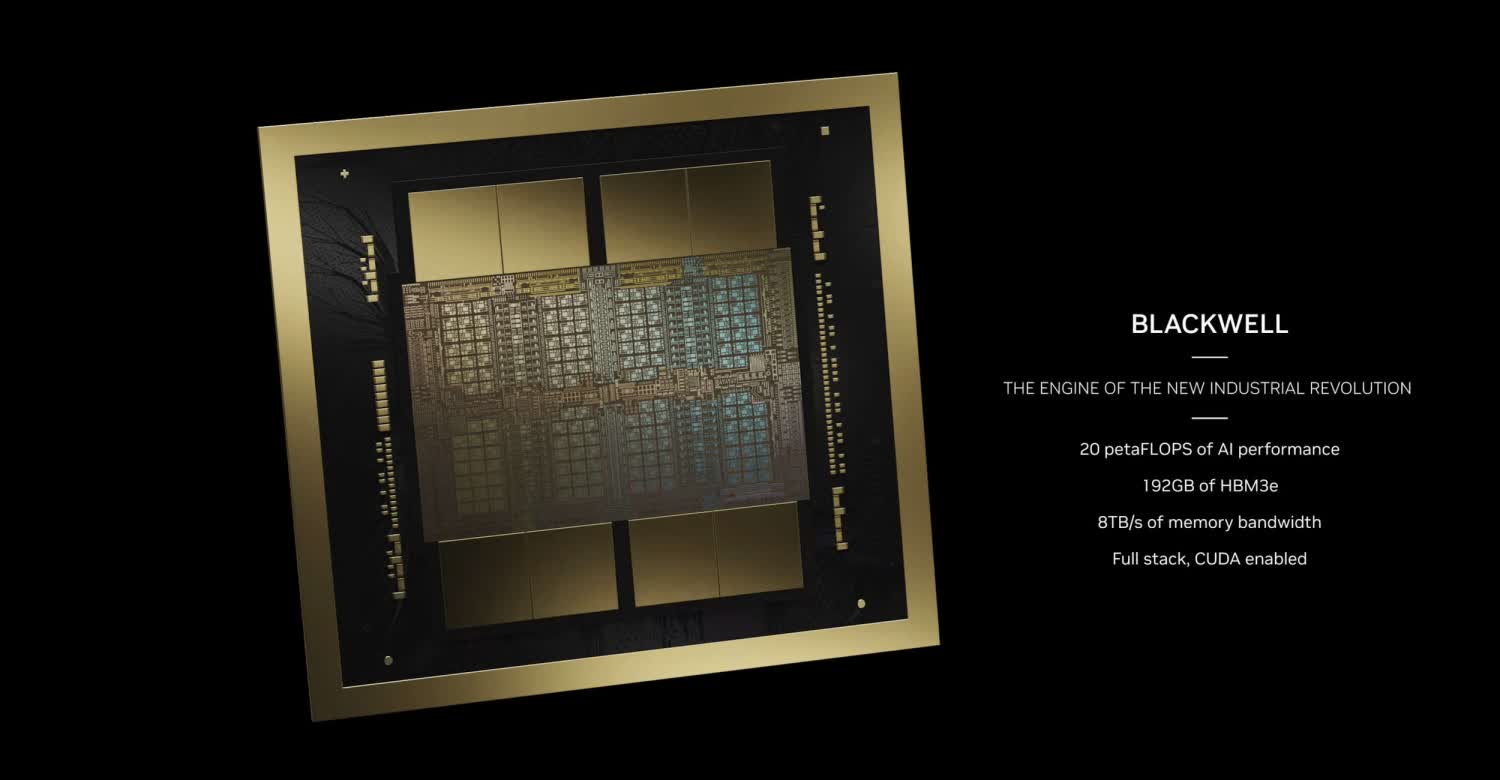

Nvidia has reportedly invested some $10 billion developing the Blackwell platform – an effort involving around 25,000 people. With all the performance packed into a single Blackwell GPU, it's no surprise these products command significant premiums.

According to HSBC analysts, Nvidia's GB200 NVL36 server rack system will cost $1.8 million, and the NVL72 will be $3 million. The GB200 Superchip, which combines a CPU and GPUs, is expected to cost $60,000 to $70,000 each. Each Superchip pairs two B200 GPUs with a single Grace CPU, accompanied by a large pool of high-bandwidth memory (HBM3E).

Earlier this year, CEO Jensen Huang told CNBC that a Blackwell GPU would cost $30,000 to $40,000, and based on this information Morgan Stanley has calculated the total cost to buyers. With each AI server cabinet priced at roughly $2 million to $3 million, and Nvidia planning to ship between 60,000 and 70,000 B200 server cabinets, the estimated annual revenue is at least $210 billion.
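As a rough check on that arithmetic, here is a minimal sketch that multiplies the shipment and price ranges quoted above. The variable and function names are illustrative only. On these inputs, $210 billion corresponds to the top of both ranges (70,000 cabinets at $3 million each), so the "at least" framing presumably rests on assumptions that are not spelled out here.

```python
# Shipment and price ranges as quoted in the article (low, high).
CABINETS = (60_000, 70_000)           # cabinets shipped in 2025
PRICE_USD = (2_000_000, 3_000_000)    # price per cabinet in dollars


def revenue_range(units, price):
    """Return (low, high) revenue from (low, high) unit counts and unit prices."""
    return units[0] * price[0], units[1] * price[1]


low, high = revenue_range(CABINETS, PRICE_USD)
print(f"${low / 1e9:.0f}B to ${high / 1e9:.0f}B")  # prints "$120B to $210B"
```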

But will customer spending ever justify this? Sequoia Capital analyst David Cahn estimated that the AI revenue the industry needs to generate to pay for its infrastructure investments has climbed to $600 billion a year.

HSBC estimates pricing for NVIDIA GB200 NVL36 server rack system is $1.8 million and NVL72 is $3 million. Also estimates GB200 ASP is $60,000 to $70,000 and B100 ASP is $30,000 to $35,000.

– tae kim (@firstadopter) May 13, 2024

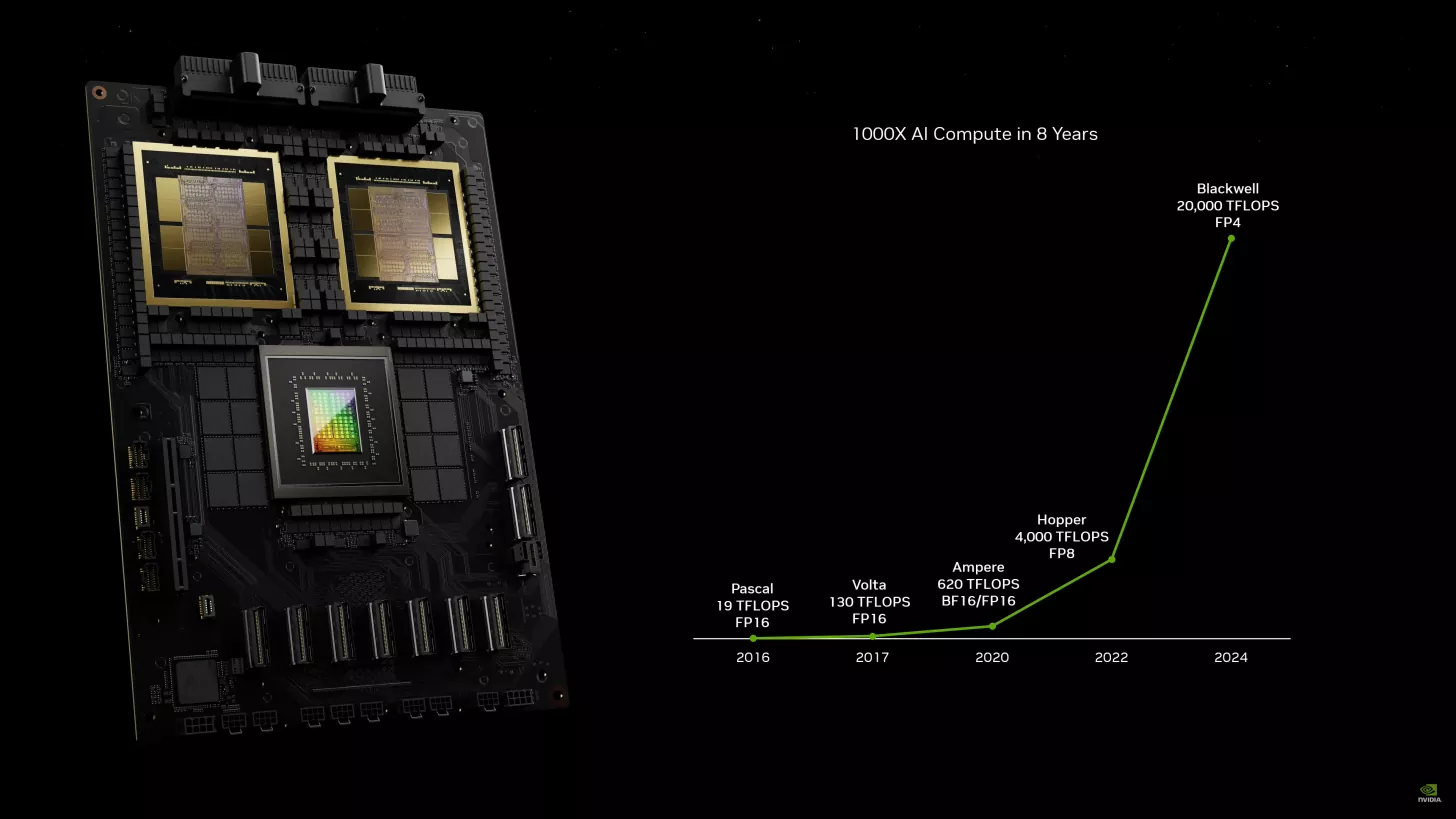

But for now there is little doubt that companies will pay the price, no matter how painful. The B200, with 208 billion transistors, can deliver up to 20 petaflops of FP4 compute power. It would take 8,000 Hopper GPUs consuming 15 megawatts of power to train a 1.8 trillion-parameter model.

The same task would require only 2,000 Blackwell GPUs, consuming four megawatts. According to Nvidia, the GB200 Superchip offers up to 30 times the performance of an H100 GPU for large language model inference workloads while significantly reducing power consumption.
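To put the Hopper and Blackwell training figures side by side, the following sketch computes the ratios implied by the numbers quoted above. It assumes the megawatt figures cover each cluster as a whole, so the per-GPU value is a cluster-wide average rather than a chip power rating. The dictionary keys and helper name are illustrative.

```python
# Figures quoted in the article for training a 1.8 trillion-parameter model.
hopper = {"gpus": 8_000, "megawatts": 15}
blackwell = {"gpus": 2_000, "megawatts": 4}

# How many times fewer GPUs and how much less power Blackwell needs.
gpu_ratio = hopper["gpus"] / blackwell["gpus"]
power_ratio = hopper["megawatts"] / blackwell["megawatts"]


def kw_per_gpu(cluster):
    """Average cluster power per GPU in kilowatts (assumes whole-cluster megawatts)."""
    return cluster["megawatts"] * 1_000 / cluster["gpus"]


print(f"GPU count reduction: {gpu_ratio:.2f}x")    # 4.00x
print(f"Power reduction:     {power_ratio:.2f}x")  # 3.75x
print(f"Hopper:    {kw_per_gpu(hopper):.3f} kW per GPU")     # 1.875
print(f"Blackwell: {kw_per_gpu(blackwell):.3f} kW per GPU")  # 2.000
```

On these figures the average power per GPU is roughly the same for both generations; the saving comes from needing a quarter as many GPUs.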

Due to high demand, Nvidia is increasing its orders with TSMC by approximately 25%, according to Morgan Stanley. It is no stretch to say that Blackwell – designed to power a range of next-gen applications, including robotics, self-driving cars, engineering simulations, and healthcare products – will become the de facto standard for AI training and many inference workloads.

Nvidia Blackwell server cabinets could cost somewhere around $2 to $3 million each