What just happened? Some of Nvidia's top enterprise customers are reportedly delaying orders of the latest Blackwell chip racks due to overheating issues and glitches in chip connectivity. The news has sent ripples through the tech industry and financial markets, with Nvidia's shares experiencing a sharp four-percent decline in early trading.

According to The Information, Blackwell GB200 racks have exhibited problems during initial deployments. The report attributes them to the unprecedented power consumption of these cutting-edge GPUs: each rack draws 120-132 kW, and that power density has pushed traditional cooling systems to their limits.

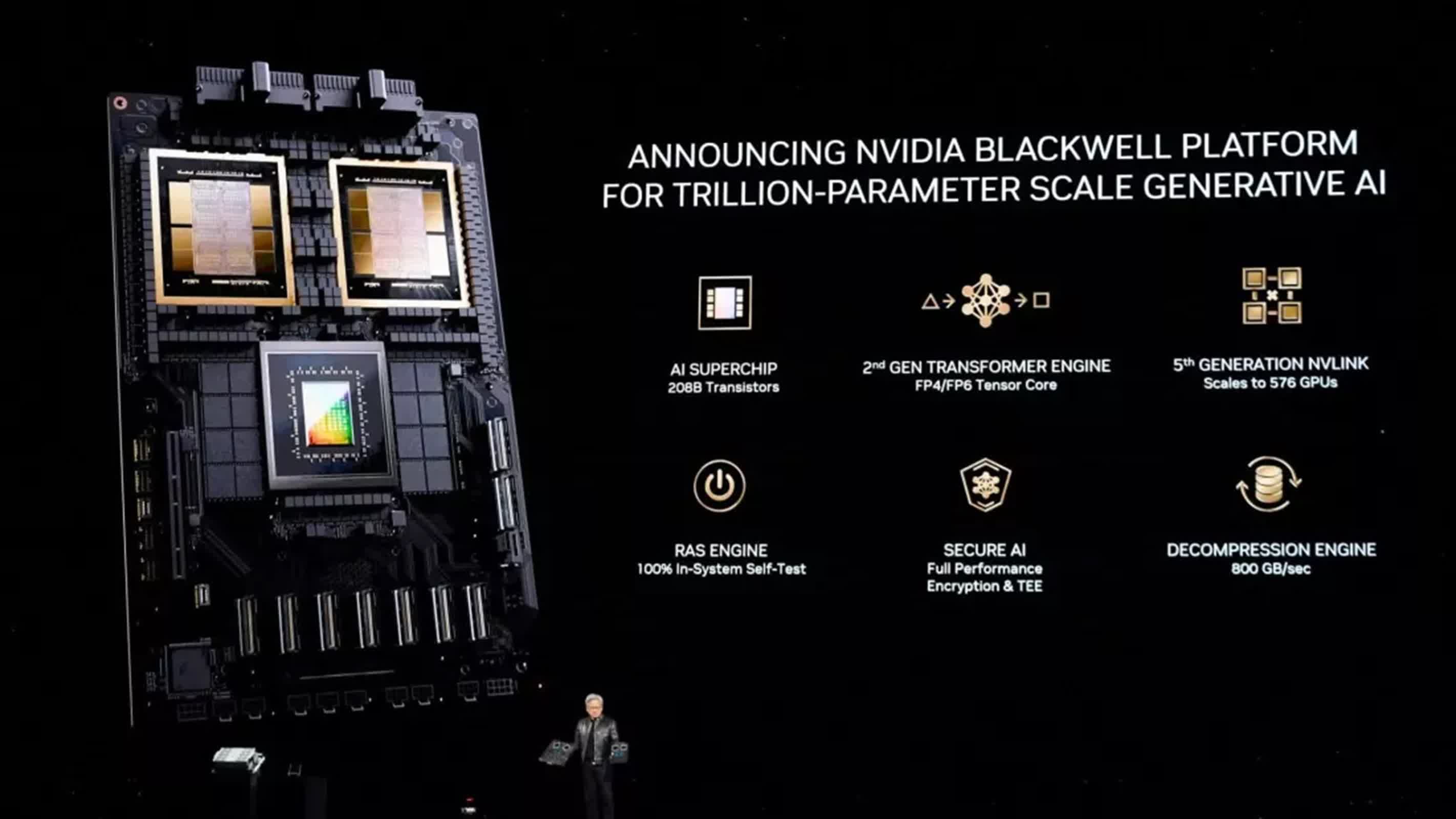

Additionally, initial shipments of Blackwell racks revealed interconnect glitches, hampering efficient heat distribution and creating problematic hotspots. The complex multi-chip module design, which integrates two large GPU dies on a single package, further exacerbates the heat management challenges.

As deployments scale, with configurations featuring up to 72 Blackwell chips per rack, these thermal inefficiencies compound dramatically. The current server rack designs have proven insufficient to handle the extreme thermal output, prompting Nvidia to request multiple design modifications from its suppliers. Resolving these issues will likely require a combination of chip-level optimizations, the development of more advanced cooling solutions, and a complete overhaul of server rack infrastructure.
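To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It simply divides the reported rack power by the 72-chip configuration. The even split is an assumption made for illustration: a rack also powers CPUs, networking, and other components, so the result is an average power budget per chip rather than a measured GPU figure.

```python
# Back-of-the-envelope: average rack power budget per Blackwell chip.
# The rack power range and chip count come from the article; splitting
# the rack's draw evenly across chips is an illustrative assumption.

RACK_POWER_KW = (120.0, 132.0)  # reported per-rack draw, low and high end
CHIPS_PER_RACK = 72             # largest configuration cited in the article


def power_per_chip_kw(rack_kw: float, chips: int = CHIPS_PER_RACK) -> float:
    """Return rack power divided evenly across the chips in the rack."""
    return rack_kw / chips


low, high = (power_per_chip_kw(kw) for kw in RACK_POWER_KW)
print(f"{low:.2f}-{high:.2f} kW per chip")  # prints "1.67-1.83 kW per chip"
```

Under that assumption, each chip position accounts for roughly 1.7 to 1.8 kW of the rack's draw, all of which ends up as heat the cooling system has to remove.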

Some of Nvidia's biggest buyers, including Microsoft, Amazon Web Services, Google, and Meta Platforms, have reduced their orders for the Blackwell GB200 racks. These hyperscalers had placed orders worth $10 billion or more for the new technology. The impact of these order reductions could be significant.

For instance, Microsoft had initially planned to install GB200 racks with at least 50,000 Blackwell chips in one of its Phoenix facilities. However, as delays emerged, Microsoft's key partner, OpenAI, requested Nvidia's older-generation 'Hopper' chips instead.

How these order reductions will ultimately affect Nvidia's sales remains unclear, as other buyers for the GB200 server racks may exist even with the reported issues.

Nvidia CEO Jensen Huang has denied media reports that a flagship liquid-cooled server containing 72 of the new chips was overheating during initial testing. In November, Huang also stated that the company was on track to exceed its earlier target of recording several billion dollars in revenue from Blackwell chips in its fourth fiscal quarter.

Nvidia and Amazon have declined to comment on the situation, while Microsoft, Google, and Meta have not yet responded to requests for comment.