Why it matters: Nvidia introduced CUDA in 2006 as a proprietary API and software layer that eventually became the key to unlocking the immense parallel computing power of GPUs. CUDA plays a major role in fields such as artificial intelligence, scientific computing, and high-performance simulations. But running CUDA code has remained largely locked to Nvidia hardware. Now, an open-source project is working to break that barrier.

By enabling CUDA applications to run on third-party GPUs from AMD, Intel, and others, this effort could dramatically expand hardware choice, reduce vendor lock-in, and make powerful GPU computing more accessible than ever.

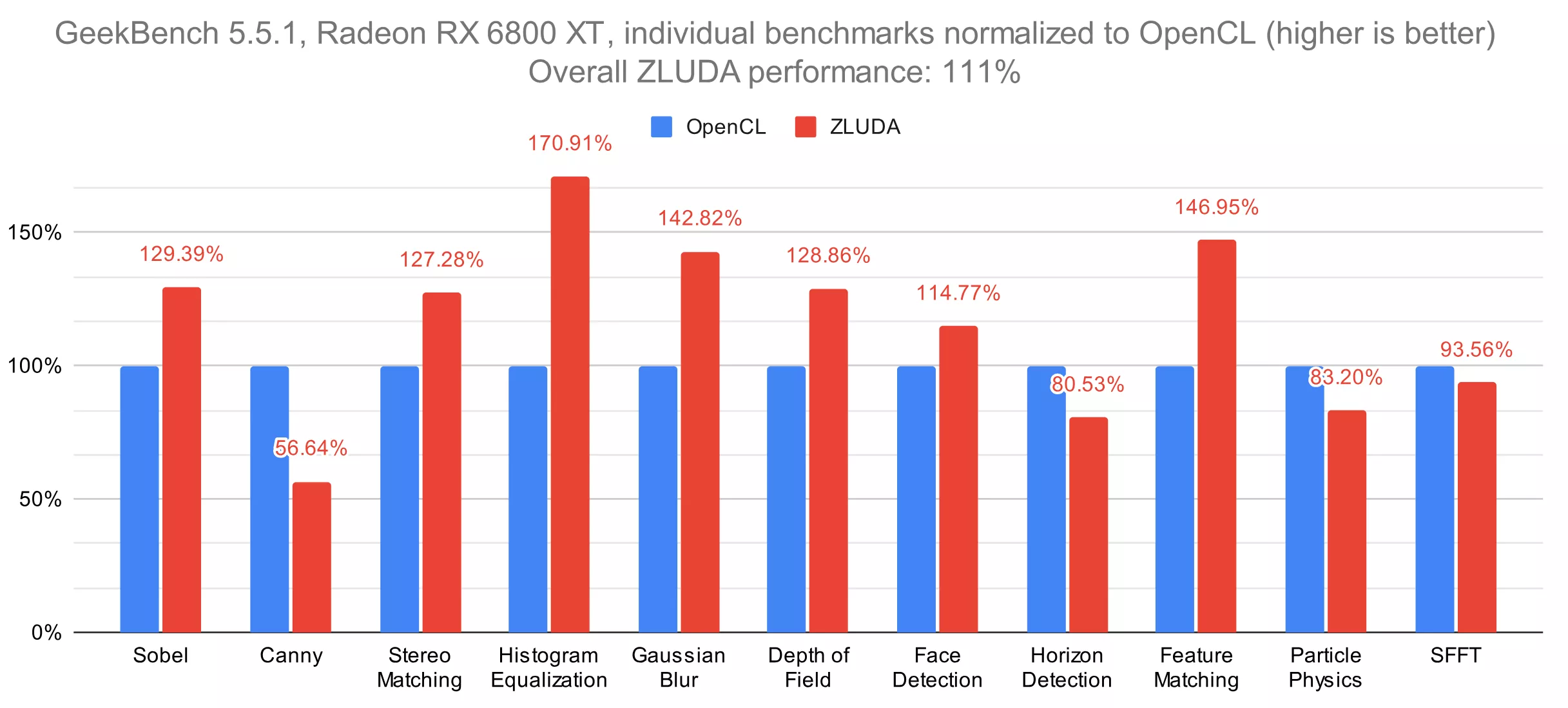

The Zluda team recently shared its latest quarterly update, confirming that the project remains focused on fully implementing CUDA compatibility on non-Nvidia graphics accelerators. Zluda's stated goal is to offer a drop-in replacement for CUDA on AMD, Intel, and other GPU architectures – allowing users and developers to run unmodified CUDA-based applications with "near-native" performance.

One of the most promising changes is that the Zluda team has doubled in size: two full-time developers now work on the project. The newly added developer, known as "Violet," has already made notable contributions to the tool's official open-source repository on GitHub.

Other important updates improve Zluda's compatibility with the ROCm/HIP GPU runtime, so the tool should now function reliably on both Linux and Windows. GPU runtimes like CUDA and ROCm are designed to compile GPU code at runtime, so that code developed for older hardware can usually compile and run on newer GPU architectures with minimal issues.

Zluda is also now significantly better at executing unmodified CUDA binaries on non-Nvidia GPUs. Previously, the tool either ignored certain instruction modifiers or failed to execute them with full precision. Now, the improved code can handle some of the trickiest cases – such as the cvt instruction – with bit-accurate precision.
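To see why instruction modifiers matter, consider the integer-rounding modifiers that PTX defines for cvt: .rni (nearest, ties to even), .rzi (toward zero), .rmi (toward negative infinity), and .rpi (toward positive infinity). The following is a minimal Python sketch of the idea, not Zluda's code; the function name `cvt_to_int` is hypothetical, and the sketch ignores NaN and out-of-range inputs.

```python
import math

# PTX integer-rounding modifiers for cvt, mapped to Python equivalents.
ROUNDERS = {
    ".rni": round,       # round to nearest integer, ties to even
    ".rzi": math.trunc,  # round toward zero
    ".rmi": math.floor,  # round toward negative infinity
    ".rpi": math.ceil,   # round toward positive infinity
}


def cvt_to_int(value, modifier):
    """Emulate a float-to-integer conversion under the given rounding modifier.

    Hypothetical helper for illustration only; NaN and values outside the
    destination range are not handled.
    """
    return ROUNDERS[modifier](value)


if __name__ == "__main__":
    # The same input yields different integers depending on the modifier,
    # so a translator that drops the modifier silently changes results.
    for value in (2.5, -2.5, 3.5):
        results = {m: cvt_to_int(value, m) for m in ROUNDERS}
        print(value, results)
```

A translation layer that ignored the modifier and always truncated, for example, would turn 3.5 into 3 where the original program expected 4 under .rni.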

A key step in fully supporting CUDA applications is tracking how code interacts with the API through detailed logging. Zluda has improved in this area as well. It can now capture previously overlooked interactions and even handle intermediate API calls.
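The general idea behind this kind of call tracing can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how Zluda implements its logging: `traced`, `alloc_buffer`, and `create_workspace` are hypothetical names, and the two "API functions" are stand-ins rather than real CUDA calls. The nesting depth shows how a call made from inside another call (an intermediate call) can be recorded alongside the top-level one.

```python
import functools

call_log = []  # one dict per traced call, in the order the calls started
_depth = 0     # current nesting level of traced calls


def traced(func):
    """Wrap func so each call is recorded with its arguments, result, and depth."""
    @functools.wraps(func)
    def wrapper(*args):
        global _depth
        entry = {"depth": _depth, "name": func.__name__, "args": args, "result": None}
        call_log.append(entry)
        _depth += 1
        try:
            entry["result"] = func(*args)
        finally:
            _depth -= 1
        return entry["result"]
    return wrapper


@traced
def alloc_buffer(size):
    # Hypothetical stand-in for a low-level allocation call.
    return {"size": size}


@traced
def create_workspace(size):
    # Hypothetical higher-level call that triggers an intermediate call.
    return {"buffer": alloc_buffer(size)}


create_workspace(256)
for entry in call_log:
    indent = "  " * entry["depth"]
    print(f'{indent}{entry["name"]}{entry["args"]} -> {entry["result"]}')
```

Recording the entry before the wrapped function runs keeps the log in call order, so nested calls appear directly under the call that triggered them.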


The developers also made meaningful progress in supporting llm.c, a test implementation of language models like GPT-2 and GPT-3 written in plain C and CUDA. Zluda currently implements 16 of the 44 CUDA functions that llm.c calls (about 36 percent), and the team hopes to run the test in full soon.

Finally, Zluda has advanced slightly in its potential support for 32-bit PhysX code. Nvidia dropped support for 32-bit CUDA applications, and with it GPU-accelerated 32-bit PhysX, on the Blackwell-based GeForce 50 series GPUs, leaving fans of old(ish) games with a broken or subpar experience.

The minor update that landed in the past quarter focuses on efficiently collecting CUDA logs to identify potential bugs, some of which may also affect 64-bit PhysX code. However, the developers caution that full 32-bit PhysX support will likely require significant contributions from third-party coders.

Open-source project is making strides in bringing CUDA to non-Nvidia GPUs