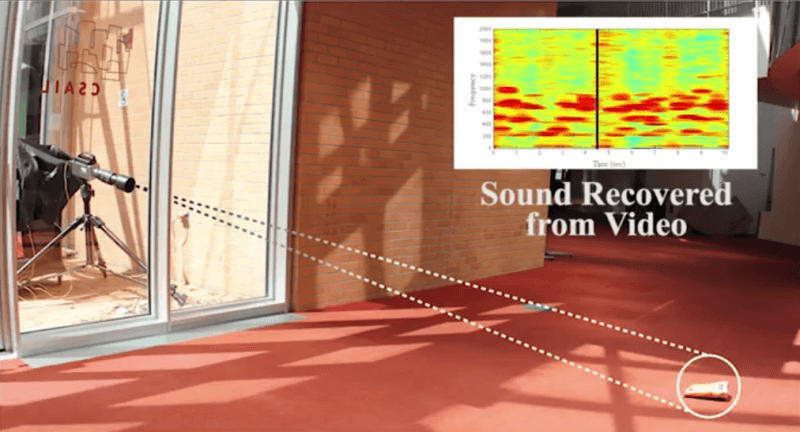

A group of researchers from Microsoft, MIT and Adobe have developed technology which turns everyday objects into visual microphones.

Sounds of any kind, whether voices, music or anything else, cause surrounding objects to vibrate. Normally these vibrations are so subtle that they are invisible to the naked eye. The team has developed an algorithm that analyzes these microscopic vibrations in video clips to reconstruct the sound that created them. In one demonstration, the team placed a plant in a room where the song "Mary Had a Little Lamb" was playing. By analyzing the plant's vibrations in the footage, the team was able to reproduce the song with fairly impressive fidelity.
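The basic idea can be illustrated with a toy example. If each video frame yields one number describing how far an object has moved, then the sequence of those numbers is a waveform, and the camera's frame rate plays the role of the audio sample rate. The sketch below is a deliberately crude stand-in, not the team's algorithm. It assumes a grayscale clip stored as a NumPy array of shape (frames, height, width), uses each frame's average brightness as the motion proxy, and checks itself on a hypothetical synthetic clip of a soft edge wobbling at 440 Hz, filmed at 6,000 frames per second. All function names are illustrative.

```python
import numpy as np


def frames_to_signal(frames: np.ndarray) -> np.ndarray:
    """Turn a (T, H, W) grayscale clip into one audio-like sample per frame.

    Crude proxy (an assumption, not the researchers' method): when an edge
    shifts by a fraction of a pixel, the frame's average brightness shifts
    roughly in proportion, so that average is used as the sample.
    """
    per_frame = frames.reshape(frames.shape[0], -1).mean(axis=1)
    signal = per_frame - per_frame.mean()  # remove the constant brightness
    peak = np.abs(signal).max()
    return signal / peak if peak > 0 else signal  # scale to [-1, 1]


def dominant_frequency(signal: np.ndarray, fps: float) -> float:
    """Strongest frequency in the signal; the frame rate is the sample rate."""
    spectrum = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fps)
    spectrum[0] = 0.0  # ignore the zero-frequency (DC) term
    return float(freqs[np.argmax(spectrum)])


def synthetic_edge_clip(tone_hz: float, fps: float, n_frames: int,
                        amplitude_px: float = 0.05,
                        height: int = 8, width: int = 32) -> np.ndarray:
    """Hypothetical test clip: a soft vertical edge nudged sideways by a tone."""
    t = np.arange(n_frames) / fps
    shift = amplitude_px * np.sin(2 * np.pi * tone_hz * t)  # sub-pixel motion
    x = np.arange(width)
    # Dark on the left, bright on the right, edge centred mid-frame.
    edge = 1.0 / (1.0 + np.exp(-(x[None, :] - width / 2 - shift[:, None]) / 1.5))
    return np.repeat(edge[:, None, :], height, axis=1)  # shape (T, H, W)


if __name__ == "__main__":
    fps = 6000
    clip = synthetic_edge_clip(tone_hz=440, fps=fps, n_frames=fps)  # 1 second
    audio = frames_to_signal(clip)
    print(round(dominant_frequency(audio, fps)))  # 440
```

Running it prints 440, the pitch of the synthetic tone, even though the edge never moves more than a twentieth of a pixel. In this simple one-sample-per-frame model, a clip shot at 6,000 frames per second can only represent frequencies up to 3,000 Hz (half the frame rate), which is one way to see why frame rate matters so much. Real footage would need a far more robust motion estimate than average brightness, along with noise handling.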

Many readers may immediately assume the technology is best suited to spying. In another of the team's examples, it picks up a human voice through a soundproof window from the vibrations of a bag of potato chips. While the result is not perfect, you can fairly easily make out the words, and authorities using something like this in a criminal case would likely have other software tools to enhance the audio after the fact.

While all you need is the algorithm and a camera, recovering usable data does, for the most part, require a very high-speed recording device capable of capturing up to 6,000 frames per second. A standard consumer camera can only make out basic audio without much detail, as you'll see in the demonstration video below: