FreeSync 2 is AMD's monitor technology for the next generation of HDR gaming displays. In a previous article we explained everything you need to know about it, and now we'll be giving our impressions of actually gaming on one of these monitors, along with whether it's worth buying a FreeSync 2 monitor right now.

The monitor I've been using to test FreeSync 2 is the Samsung C49HG90, a stupidly wide 49-inch double 1080p display with a total resolution of 3840 x 1080. It's got a 1800R curve, uses VA technology, and it's certified for DisplayHDR 600. This means it sports up to 600 nits of peak brightness, covers at least 90% of the DCI-P3 gamut, and has basic local dimming.

While this panel doesn't meet the full DisplayHDR 1000 tier, with its 1000 nits of peak brightness for optimal HDR, the Samsung CHG90 does provide more than just an entry-level HDR experience.

There are plenty of supposedly HDR-capable panels that cannot push their brightness above 400 nits and do not support a wider-than-sRGB gamut, but Samsung's latest Quantum Dot monitors do provide higher brightness and a wider gamut than basic SDR displays.

Although FreeSync 2 support is advertised on Samsung's website, the CHG90 does not support it out of the box. You need to download and install a firmware update for the monitor, which isn't a great experience. As explained before, AMD announced FreeSync 2 at the beginning of 2017, but this is the first generation of products to actually support the technology.

There will likely be many cases where people purchase this monitor, hook it up to their PC without performing any firmware updates, and just assume FreeSync 2 is working as intended. The fact that you might need to update the firmware is not well advertised on Samsung's website (it's hidden in a footnote at the bottom of the page), and updating a monitor's firmware isn't exactly common practice.

If you buy a supported Samsung Quantum Dot monitor, make sure it's running the latest firmware that introduces FreeSync 2 support; if it is running the right firmware, the Information tab in the on-screen display will show a FreeSync 2 logo.

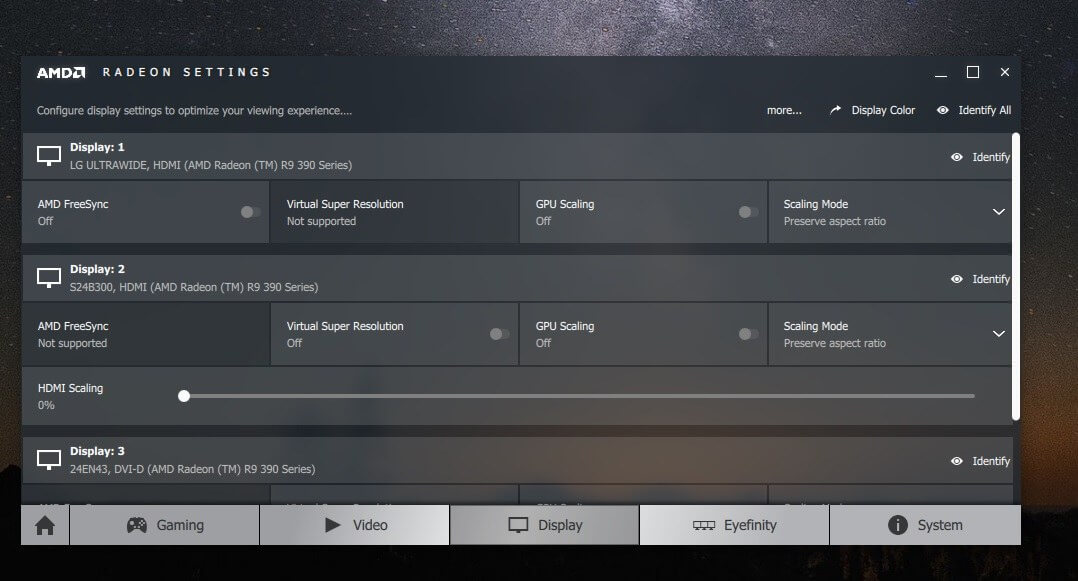

AMD's graphics driver and software utility exacerbate this issue with FreeSync 2 display firmware. While Radeon Settings does indicate when your GPU is hooked up to a FreeSync display, it does not distinguish between FreeSync and FreeSync 2. There is no way to tell within Radeon Settings or any part of Windows that your system is attached to a FreeSync 2 display, so there's no way to check if FreeSync 2 is working, whether your monitor supports FreeSync 2, or if it is enabled.

There was no change to the way Radeon Settings indicated FreeSync support after we upgraded our monitor to the FreeSync 2 firmware, either. This is bound to confuse users and needs attention from AMD.

So how does FreeSync 2 actually work and how do you set it up?

Provided FreeSync is enabled in Radeon Settings and in the monitor's on-screen display (both are enabled by default), it should be ready to go. There is no magic toggle to get everything working and no real configuration options; instead, the key features are either permanently enabled, like low latency and low framerate compensation, or ready to be used when required, like HDR.

Using the HDR capabilities of FreeSync 2 does require you to enable HDR when you want to use it. In the case of Windows 10 desktop applications, this means going into the Settings menu, heading to the display settings, and enabling "HDR and WCG". This switches the Windows desktop environment to an HDR environment, and any apps that support HDR can pass their HDR-mapped data straight to the monitor through HDR10. For standard SDR apps, which currently are most Windows apps, Windows 10 attempts to tone map the SDR colors and brightness to HDR as it can't automatically switch modes on the fly.

While Windows 10 has been improving its HDR support with each major Windows update, it's still not at a point where SDR is mapped correctly to HDR. With HDR and WCG enabled, SDR apps look washed out and lack brightness. Some apps, like Chrome, are straight up broken in the HDR mode. There is a slider within Windows for changing the base brightness of SDR content; however, with our Samsung test monitor, the maximum supported brightness for SDR in this mode is around 190 nits, which is well below the monitor's maximum of 350 nits when HDR and WCG are disabled.

Now, 190 nits of brightness is probably fine for a lot of users, but it's a bit strange that the slider does not span the full brightness range of the monitor. It's also separate from the display's on-screen brightness control: if the monitor's brightness is set below 100, you'll get less than 190 nits when displaying SDR content.

If this all sounds confusing to you, that's because it is. In fact, the whole Windows desktop HDR implementation is a bit of a mess, and if you can believe it, earlier versions of Windows 10 were even worse.

This applies not just to FreeSync 2 monitors but to all HDR displays hooked up to Windows 10 PCs. At the moment, our advice is to disable HDR and WCG when using the Windows 10 desktop and only enable it when you want to run an HDR app. That way you'll get the best SDR experience in the vast majority of apps, which currently don't support HDR.

So how about games? Surely this is the area where FreeSync 2 monitors and HDR really shine, right? Well... it depends. We tried a range of games that currently support HDR on Windows, and we were largely disappointed with the results. HDR implementations differ from game to game, and it seems that right now a lot of game developers have no idea how to correctly tone map their games for HDR.

The worst of the lot are EA's games. Mass Effect Andromeda's HDR implementation was famously shamed when HDR monitors were first shown off, but the curse of poor HDR continues into Battlefield 1 and the newer Star Wars Battlefront 2. Both games exhibit washed-out colors in the HDR mode that look far worse than the SDR presentation, compounded by a generally dark tone to the image and weak use of HDR's spectacular bright highlights. In all three of EA's newer games that support HDR, there is no reason whatsoever to enable it, as they look so much better in SDR.

We're not sure why these games look so bad, because reports seem to suggest EA titles also look bad on TVs with better HDR support, and on consoles. We think there is something fundamentally broken with the way EA's Frostbite engine manages HDR and hopefully it can be resolved for upcoming games.

Hitman is one of the older games to support HDR, and it too does not handle HDR well. While the presentation isn't as washed out as in EA's titles, colors are still dull and the image in general is too dark, with little (if any) use of impressive highlights. The idea of HDR is to expand the color gamut and increase the range of brightness levels used, but in Hitman it just seems like everything has become darker and less intense. Again, this is a game you should play in the SDR mode.

Assassin's Creed Origins has an interesting HDR implementation, as it allows you to adjust the brightness ranges to match the exact specifications of your display. We're torn as to whether the game looks better in the HDR or SDR mode. HDR appears to have better highlights and a wider range of colors during the day, but suffers from a strange lack of depth at night that oddly makes night scenes feel less like night. The SDR mode looks better at night and is perhaps only slightly behind the HDR presentation during the day.

On a display with full array local dimming, which this Samsung monitor does not have, Assassin's Creed Origins would look better but it's not the best HDR implementation we've seen in any case.

The best game for HDR by far is Far Cry 5. AMD tells me this is the first game that will support FreeSync 2's GPU-side tone mapping in the coming weeks, although right now the game does not support this HDR pipeline. Instead, as with most HDR games, Far Cry 5 uses HDR10 that is passed on to the display for further tone mapping.

Unlike most other games, though, Far Cry 5's HDR10 output is actually quite good. The color gamut is clearly expanded to produce more vivid colors, and we don't get the washed-out look of many other HDR titles. Bright highlights truly are brighter in the HDR mode, with great dynamic range, and in general this is one of the few titles that looks substantially better in HDR. Nice work, Ubisoft.

Middle-earth: Shadow of War is another game with a decent HDR implementation. When HDR is enabled, this game utilizes a noticeably wider range of colors and highlights are brighter. Again, there is no issue with dull colors or a washed out presentation, which allows the HDR mode to improve on the SDR presentation in basically every way.

How you enable HDR in these games isn't always the same. Most of the titles have a built-in HDR switch that overrides the Windows HDR and WCG setting, so you can leave the desktop in SDR and enable HDR only in the games you want to play in HDR.

Hitman is an interesting case: it has an HDR switch in the game settings but displays a black screen if the Windows HDR switch is also enabled. Shadow of War has no HDR switch at all, instead deferring to the Windows HDR and WCG setting. This is annoying, as you'll have to toggle that setting manually to get the optimal HDR experience in the game and a decent SDR experience on the desktop.

While the HDR experience in a lot of games right now is pretty bad and actually a lot worse than the basic SDR presentation, we think there is reason to be optimistic about the future of HDR gaming on PC. Some more recent games like Far Cry 5 and Shadow of War have pretty decent HDR implementations which improve upon the SDR mode in noticeable ways, while many of the games that have poor HDR implementations are somewhat older.

As the HDR ecosystem matures, we should see more Far Cry 5s and fewer Mass Effect Andromedas in terms of their HDR implementation.

We're also not at the stage where any games use FreeSync 2's GPU-side tone mapping. As we mentioned before, Far Cry 5 will be the first to do so in the coming weeks, with AMD claiming more games scheduled for release later this year will include FreeSync 2 support right out of the box.

It'll be interesting to see how GPU-side tone mapping turns out, but it definitely has the scope to improve the HDR implementation for PC games.

However, as it stands right now, we see little reason to buy a FreeSync 2 monitor until more games include decent HDR. It's just too hit or miss (mostly miss) to be worth the significant investment in a first-generation FreeSync 2 HDR monitor. This isn't a technology worth being an early adopter of at the moment, as later in the year we should have a wider range of HDR monitors to choose from, potentially with better HDR support through higher brightness levels, full array local dimming, and wider gamuts. By then we should also have a better picture of the HDR game ecosystem, hopefully with more games that sport decent HDR implementations.

That's not to say you should avoid these Samsung Quantum Dot FreeSync 2 monitors; in fact, they're pretty good as far as gaming monitors go. Just don't buy them specifically for their HDR capabilities, or you might find yourself a bit disappointed right now.