Ever since Intel's Arc GPUs launched, the processor giant has promised regular driver updates. So far it has kept its word, and there's been no shortage of new releases, citing bug fixes and support for new games, but also lots of performance gains.

Marketing claims and reality are often very different beasts, though, and there's only one way to see what's really going on: benchmarks and a thorough analysis of the results.

The Arc of Driver Progress So Far

When the Arc A770 and A750 graphics cards first launched, their specifications suggested that they would be more than a match for any model that AMD or Nvidia had in the same price category. And for the first games we tested the cards on, that was very much the case.

It soon transpired that they had a serious Achilles' heel: drivers. Some DX12 games had multiple issues and others simply ran slowly, but older titles using DX9 were catastrophically bad.

Intel's approach to DX9 was to forgo native support and rely on an open-source mapping layer (D3D9On12) that translates DX9 API calls into DX12 ones. With each new driver release, Intel has continued to hone its software.

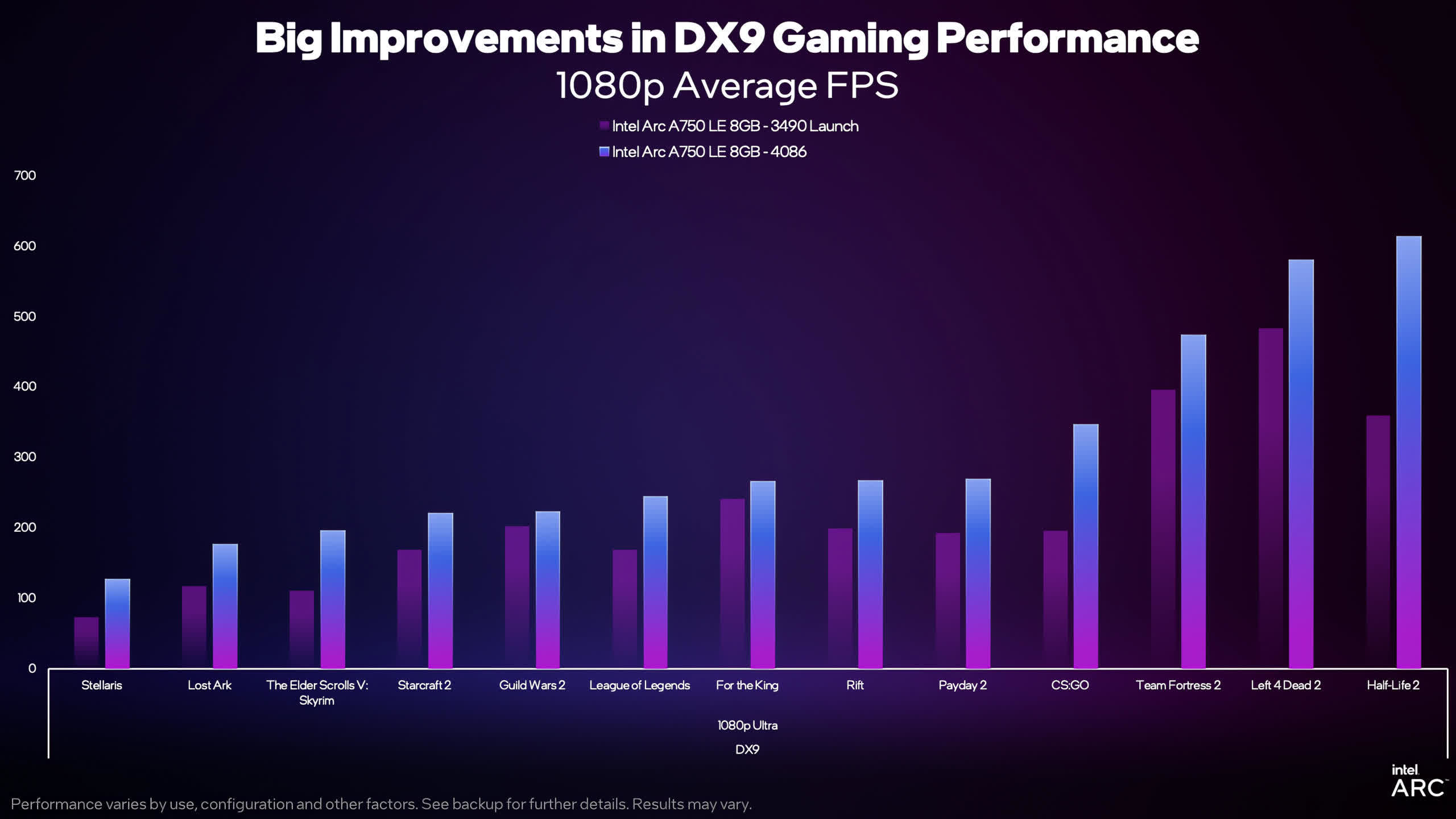

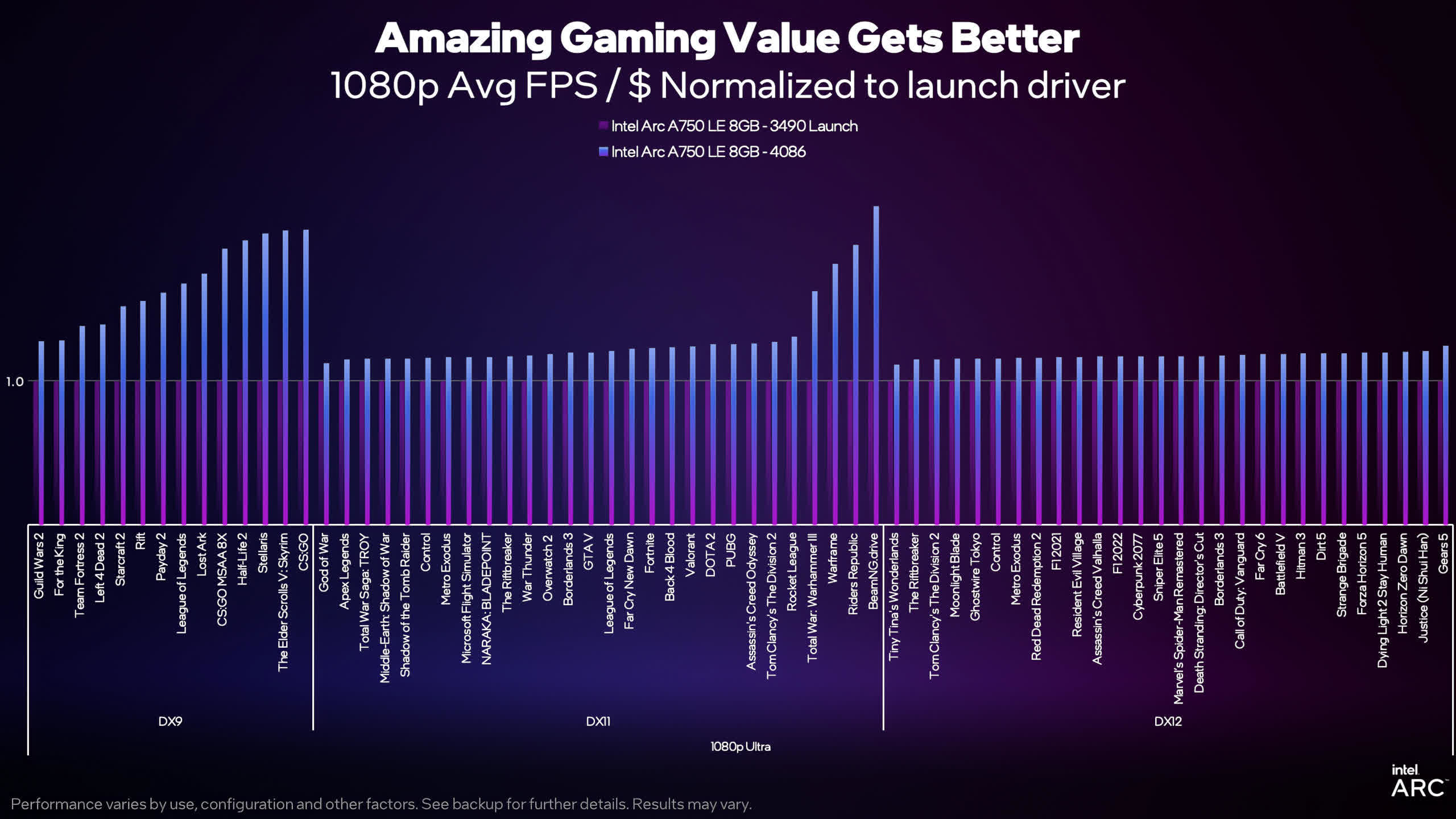

More recently, Intel claimed big performance gains with the 4086 drivers, citing an average DirectX 9 performance improvement of 43%, with individual gains as high as 87%. By any measure, those are huge increases (if true, of course).

There were some further claims of DX11 and DX12 performance improvements, but the actual gains were somewhat hidden behind a layer of marketing talk.

Rather than give actual performance figures, Intel used unlabeled graphs to indicate how much better the 4086 drivers were.

Naturally, we decided to look into all of this and in this article, we're revisiting the Arc A770 with an updated set of benchmarks, using the 4123 driver set. Due to time constraints, we didn't get a chance to fully examine the A750, but we will have something to say about that card by the end of this re-review.

We'll pay close attention to how well the Arc A770 fares against the AMD Radeon RX 6650 XT and Nvidia GeForce RTX 3060, as these GPUs are either closely matched in price or they've been used in Intel's marketing materials for performance-per-cost comparisons.

| GPU Parameters | Arc A750 | Arc A770 | Radeon RX 6650 XT | GeForce RTX 3060 |
|---|---|---|---|---|
| Shader Cores | 3584 | 4096 | 2048 | 3584 |
| TMUs | 224 | 256 | 128 | 112 |
| ROPs | 112 | 128 | 64 | 48 |
| Base Clock | 2050 MHz | 2100 MHz | 2055 MHz | 1320 MHz |
| Boost Clock | 2400 MHz | 2400 MHz | 2635 MHz | 1777 MHz |
| Memory Bus Width | 256 bits | 256 bits | 128 bits | 192 bits |
| Memory Speed | 16 Gbps | 17.5 Gbps | 17.5 Gbps | 15 Gbps |
| Memory Bandwidth | 512 GB/s | 560 GB/s | 280 GB/s | 360 GB/s |
| Memory Amount | 8 GB | 16 GB | 8 GB | 12 GB |
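The Memory Bandwidth row follows directly from the two rows above it: peak bandwidth in GB/s is the bus width in bits divided by 8 (bits per byte), multiplied by the per-pin data rate in Gbps. A minimal Python sketch of that arithmetic, using the table's own figures for three of the cards (the function name is ours, purely for illustration):

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak theoretical bandwidth in GB/s: bytes per transfer x per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps

# Bus widths (bits) and memory speeds (Gbps) from the spec table.
cards = {
    "Arc A750": (256, 16.0),
    "Radeon RX 6650 XT": (128, 17.5),
    "GeForce RTX 3060": (192, 15.0),
}

for name, (bus, speed) in cards.items():
    print(f"{name}: {memory_bandwidth_gbs(bus, speed):.0f} GB/s")
```

These are theoretical peaks; effective bandwidth also depends on on-die caches, such as the 32 MB Infinity Cache on the RX 6650 XT, so raw bandwidth alone doesn't rank these cards.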

For the gaming tests, all GPUs were run at the official clock specifications, so no factory overclocking. The rest of the system setup comprised the Ryzen 7 5800X3D with 32 GB of dual-rank, dual-channel DDR4-3200 CL14 memory on the Asus ROG Crosshair VIII Extreme motherboard.

Gaming Benchmarks

In total, we've tested 12 games at 1080p and 1440p. Some have been promoted by Intel as receiving a specific boost, whereas others not mentioned at all are simply part of our usual benchmark array.

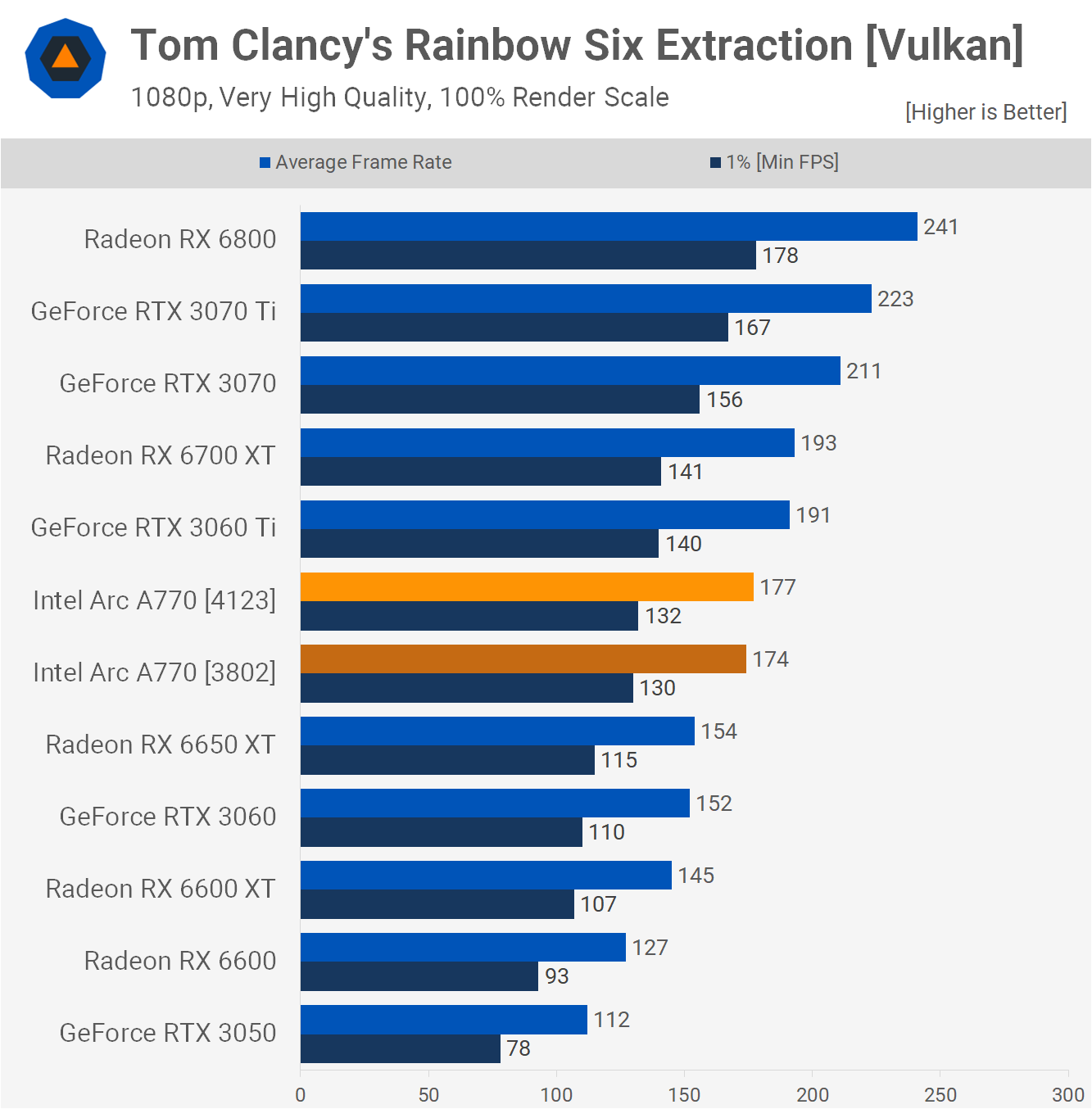

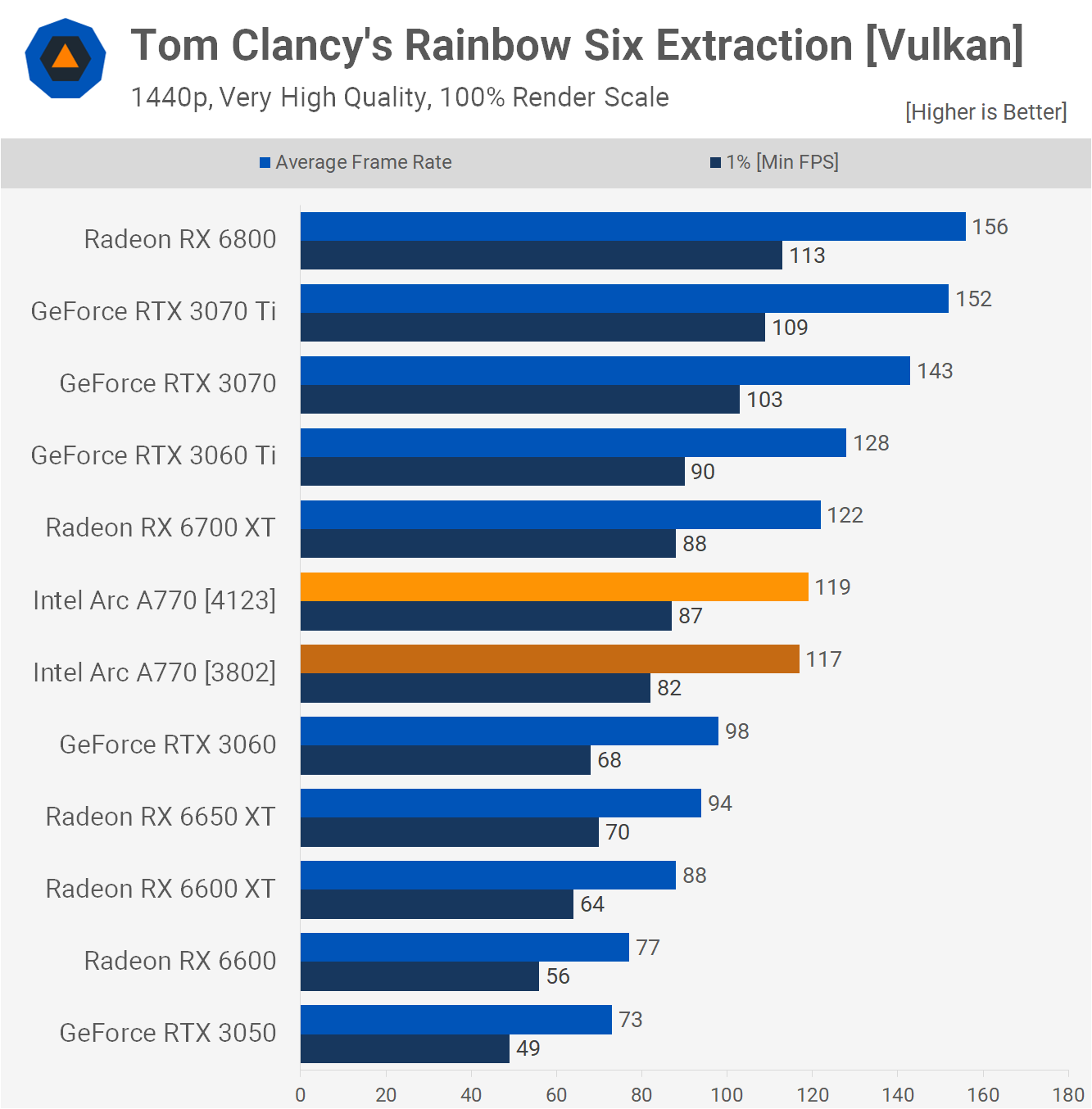

The first title examined was Rainbow Six Extraction, one that Intel made no specific performance claims for. At 1080p, we saw no significant change in performance, with the newer drivers improving results by just 2%.

This was already a really strong title for Intel's Arc GPUs, so it's probably unsurprising that there was no massive change here. We saw the same 2% improvement at 1440p, which suggests this is a genuine gain from the new drivers, albeit a very small one.

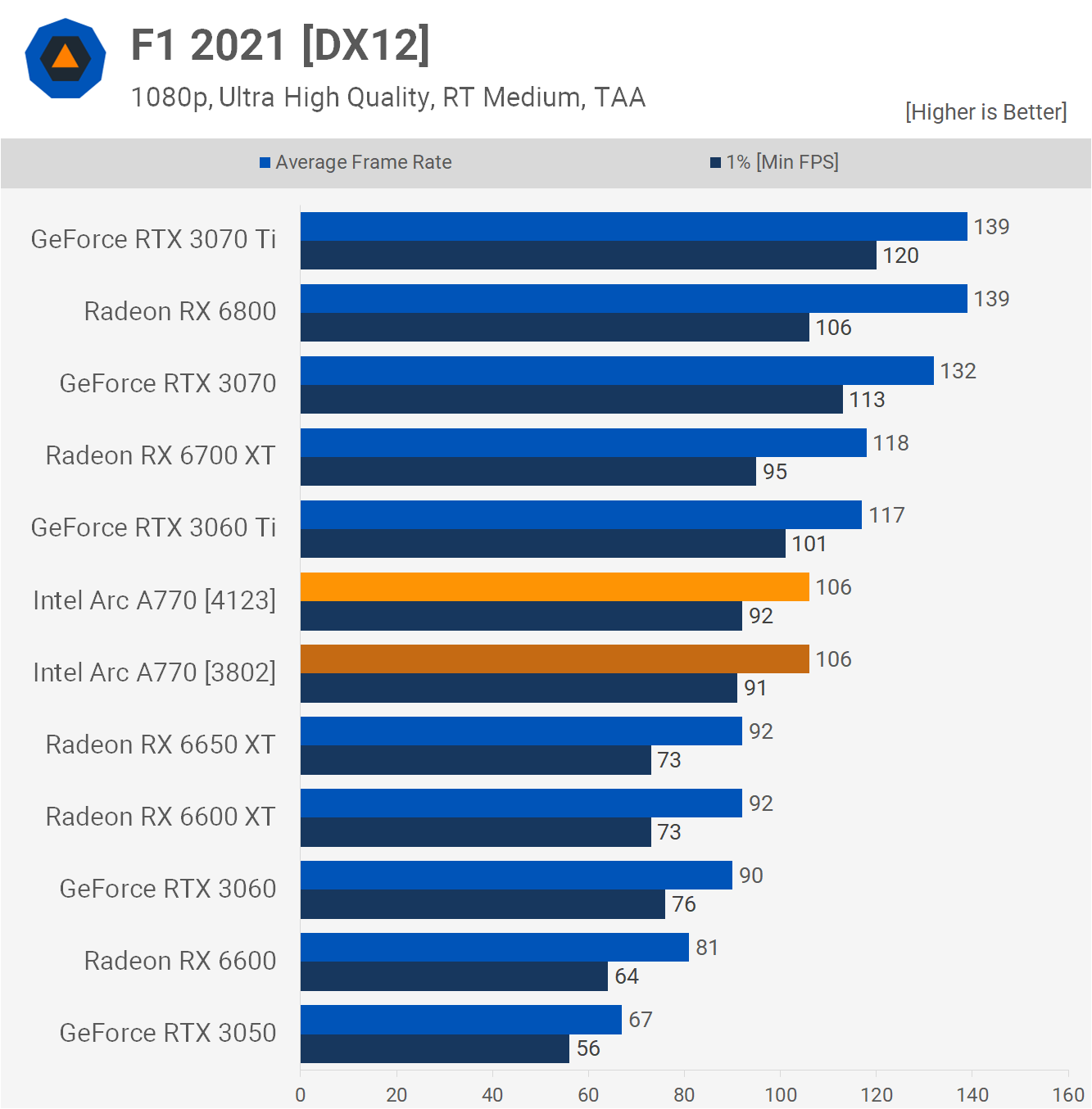

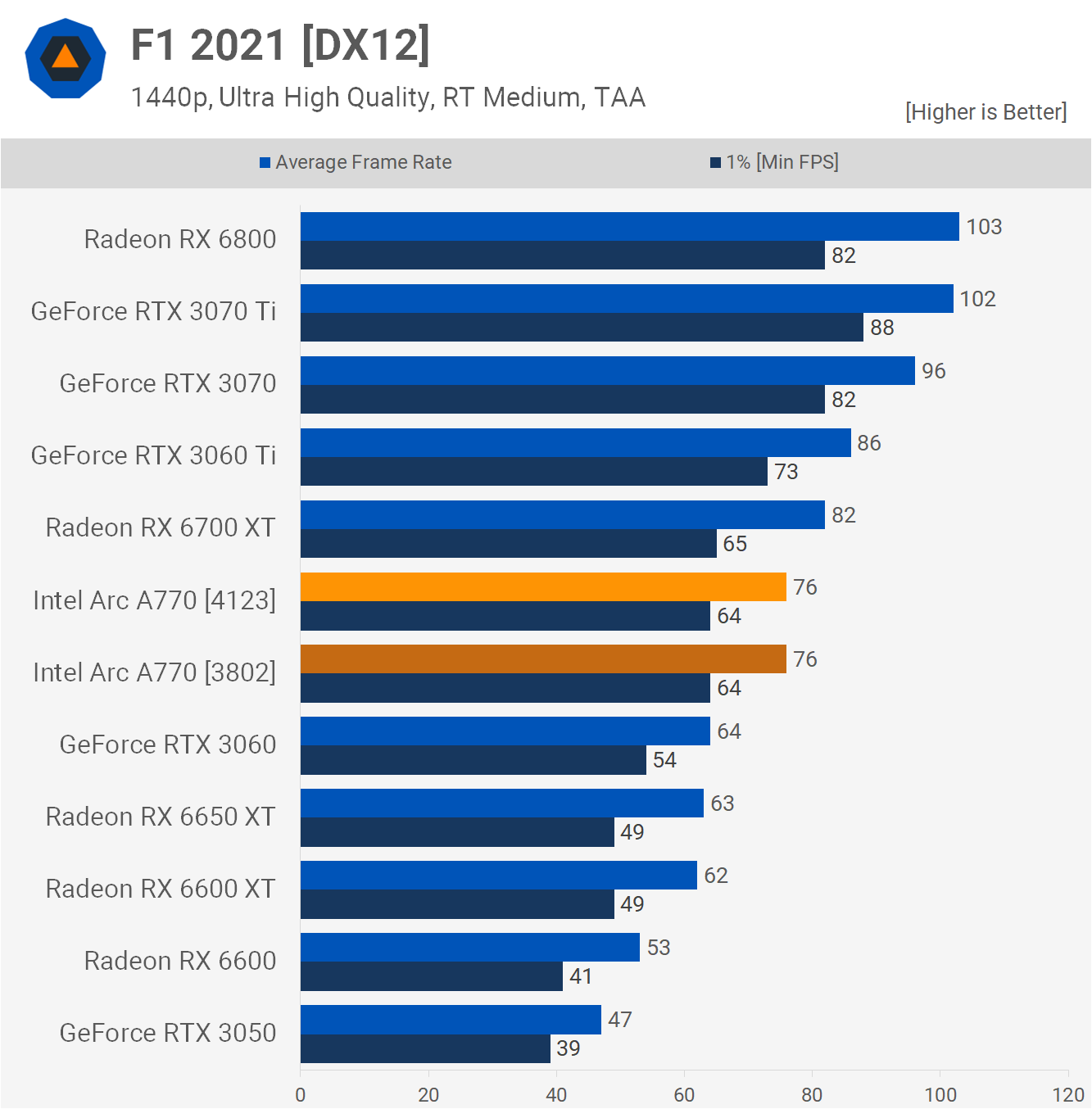

Intel did claim a modest improvement in F1 2021, as well as the newer F1 2022, though exactly how much isn't clear for the A770. Regardless, our tests showed no performance change at all in F1 2021, at either 1080p or 1440p.

Fortunately, this was already a very strong title for the Arc A770, which easily beat the Radeon RX 6650 XT and GeForce RTX 3060 at both resolutions.

It's interesting to note that the A770 takes a 28% hit in performance going from 1080p to 1440p, whereas the Radeon RX 6650 XT drops by 32% and the GeForce RTX 3060 by 29%. That's a smaller drop than either rival, but given its specifications, the A770 should really be doing a little better than that.
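The percentage hit quoted here is simply the relative drop in average frame rate between the two resolutions, (fps at 1080p minus fps at 1440p) divided by fps at 1080p; a lower figure means the card scales better with resolution. A small Python sketch follows. The per-card frame rates live in the charts rather than the text, so the inputs below are hypothetical placeholders, not our results:

```python
def resolution_scaling_loss(fps_low_res: float, fps_high_res: float) -> float:
    """Fraction of the lower-resolution frame rate lost at the higher resolution."""
    if fps_low_res <= 0:
        raise ValueError("fps_low_res must be positive")
    return (fps_low_res - fps_high_res) / fps_low_res

# Hypothetical frame rates, for illustration only.
loss = resolution_scaling_loss(100.0, 72.0)
print(f"{loss:.0%}")  # 28%
```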

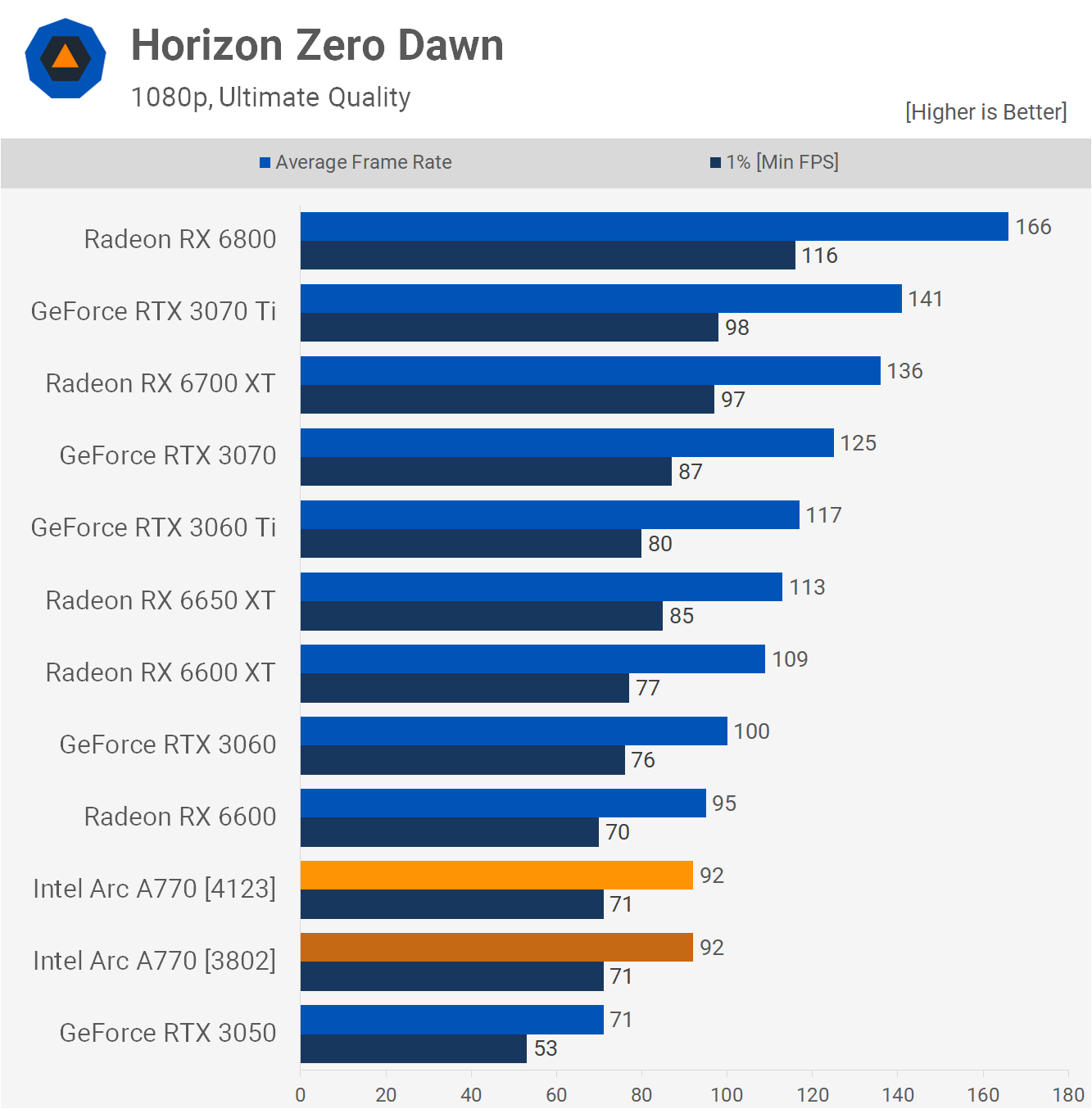

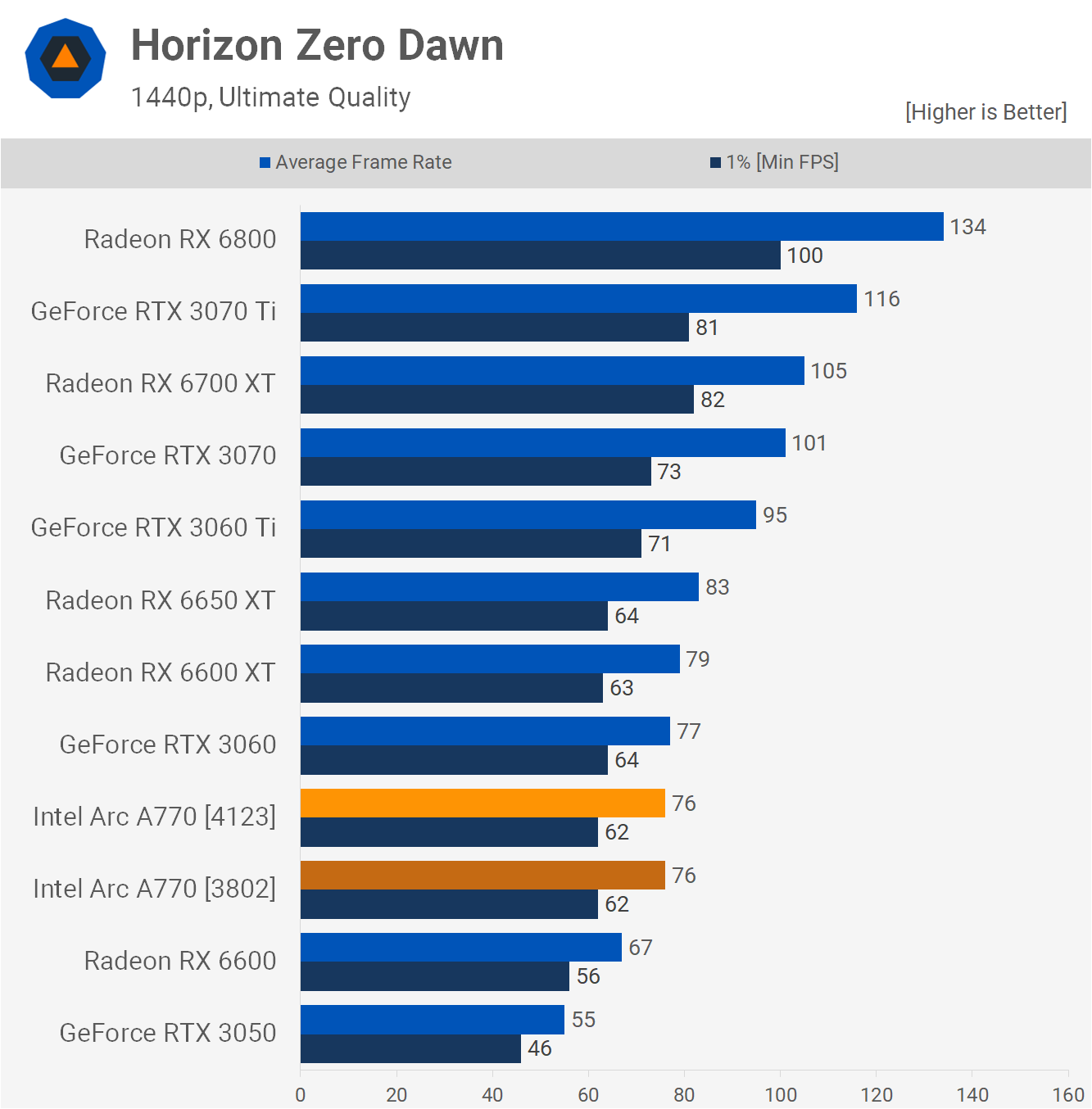

On to the next test, and one in which Intel claimed one of its biggest DX12 gains: Horizon Zero Dawn. But just as with all the other results Intel offered, none of the graphs were labeled, so the exact performance improvement isn't clear.

Just as we saw in F1 2021, though, there were no changes in the frame rates in our testing.

The average fps and 1% low figures achieved with the 4123 drivers were exactly the same as with the original 3802 drivers, at both 1080p and 1440p. Intel states that "results may vary" on its charts, but there's a world of difference between a small performance gain and none whatsoever.

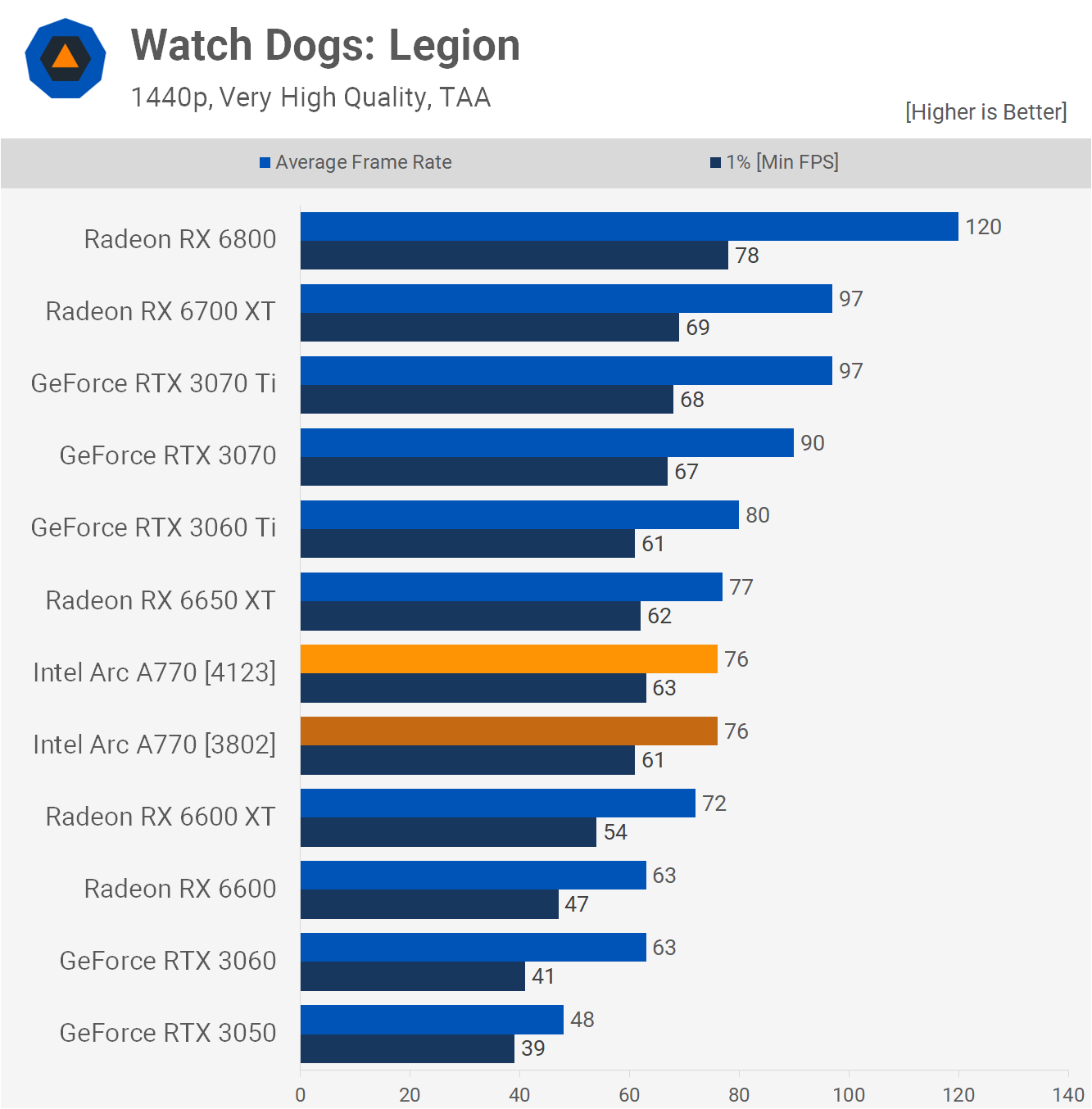

Watch Dogs: Legion isn't a game that Intel claimed any performance gains for, but as we tested it previously, it makes sense to include it here. You probably won't be surprised to learn, then, that the 1080p data with the latest driver pretty much matches what we found originally: a 1% increase in the average frame rate is meaningless.

At 1440p, though, we did see a 3% gain in the 1% lows, but the average fps was no better. It's possible that changes in the newer drivers helped here, but that seems unlikely, as plenty of other titles didn't show any changes at all.

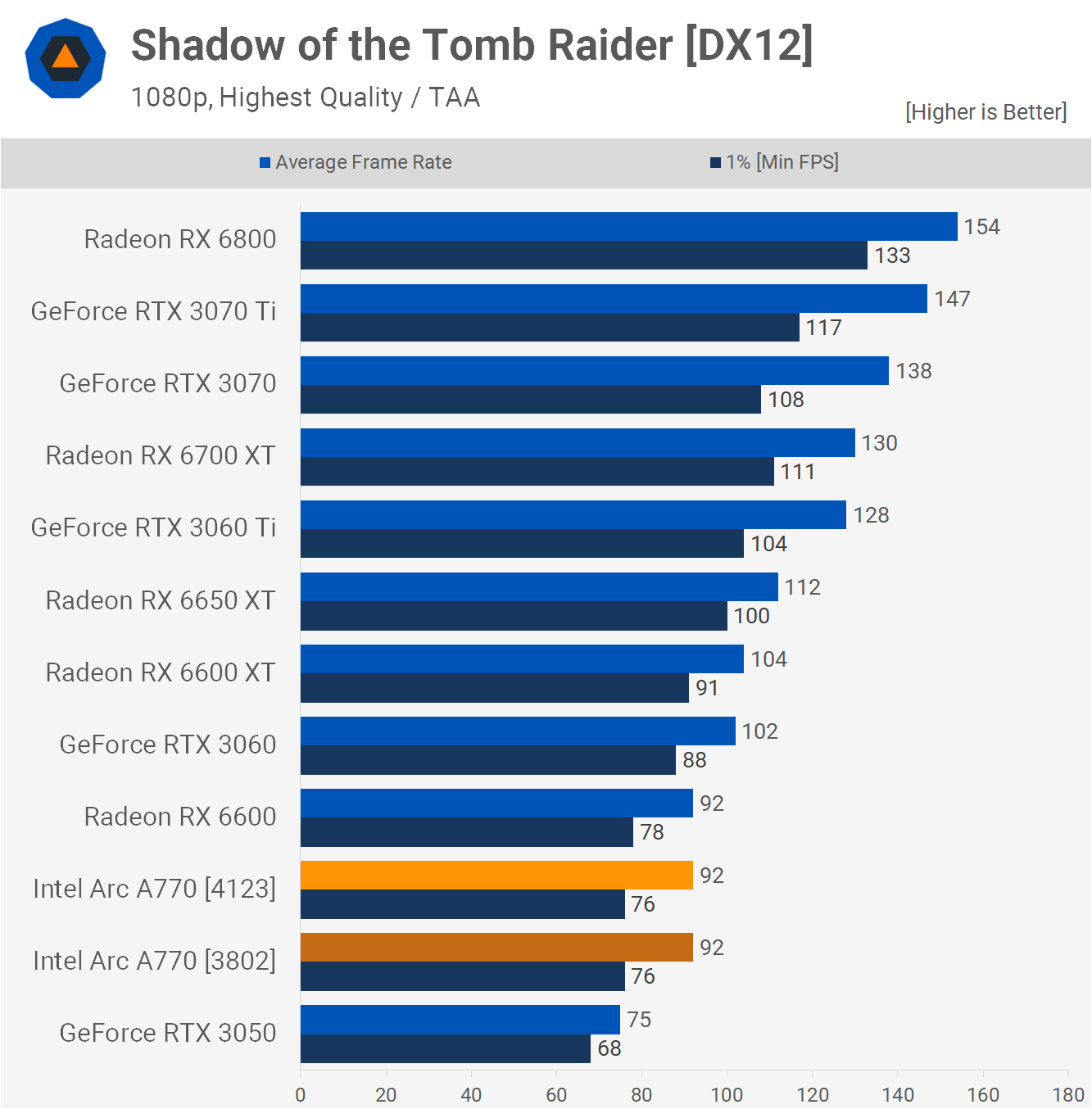

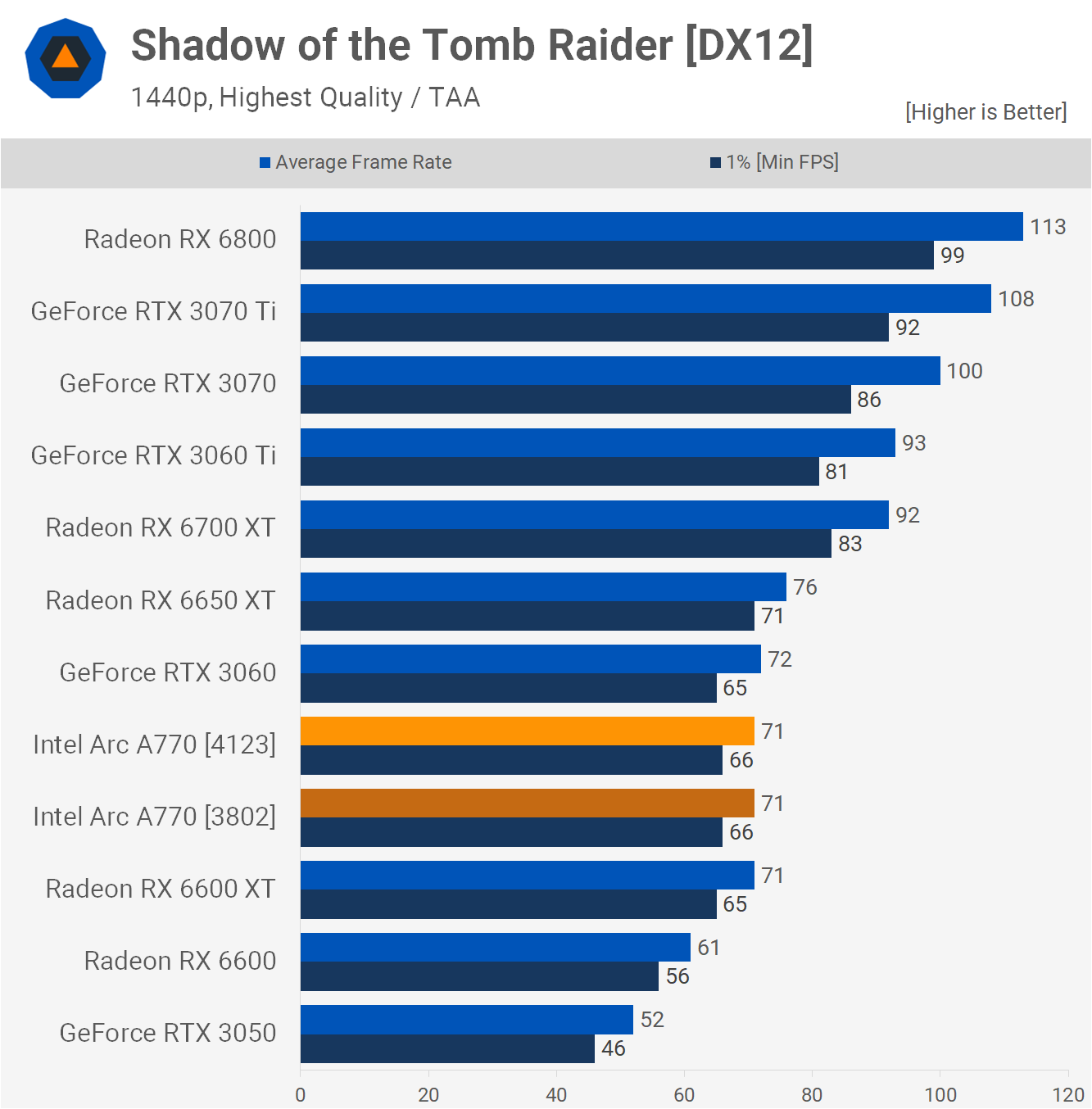

Another game that we first tested the Arc A770 with was Shadow of the Tomb Raider and again, it's not one that Intel claimed any performance improvements for.

The A770 was reasonably competitive with the 6650 XT and RTX 3060 here, especially at 1440p, and that remains true with the 4123 driver revision. But once again, there was no improvement in the performance figures.

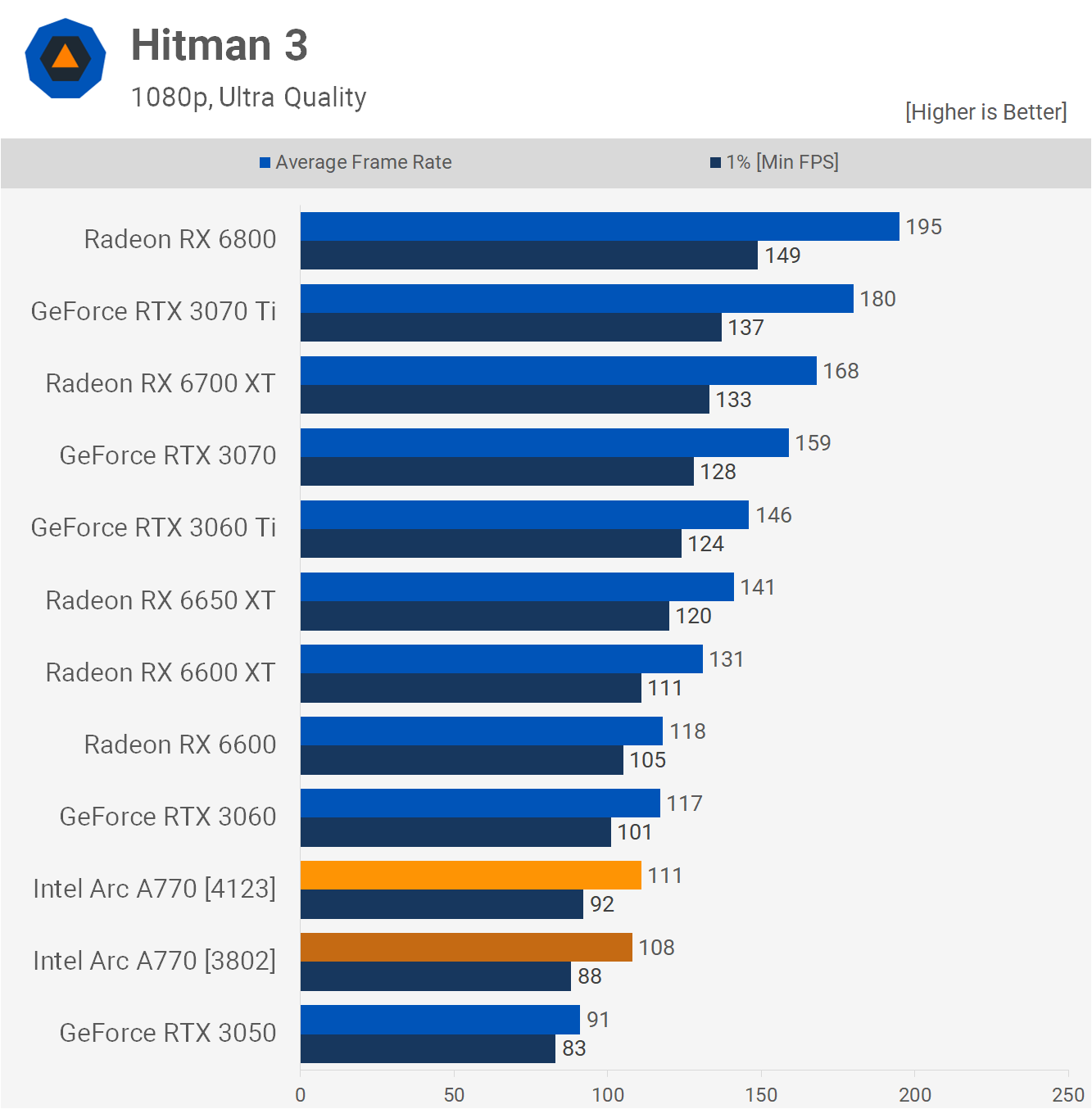

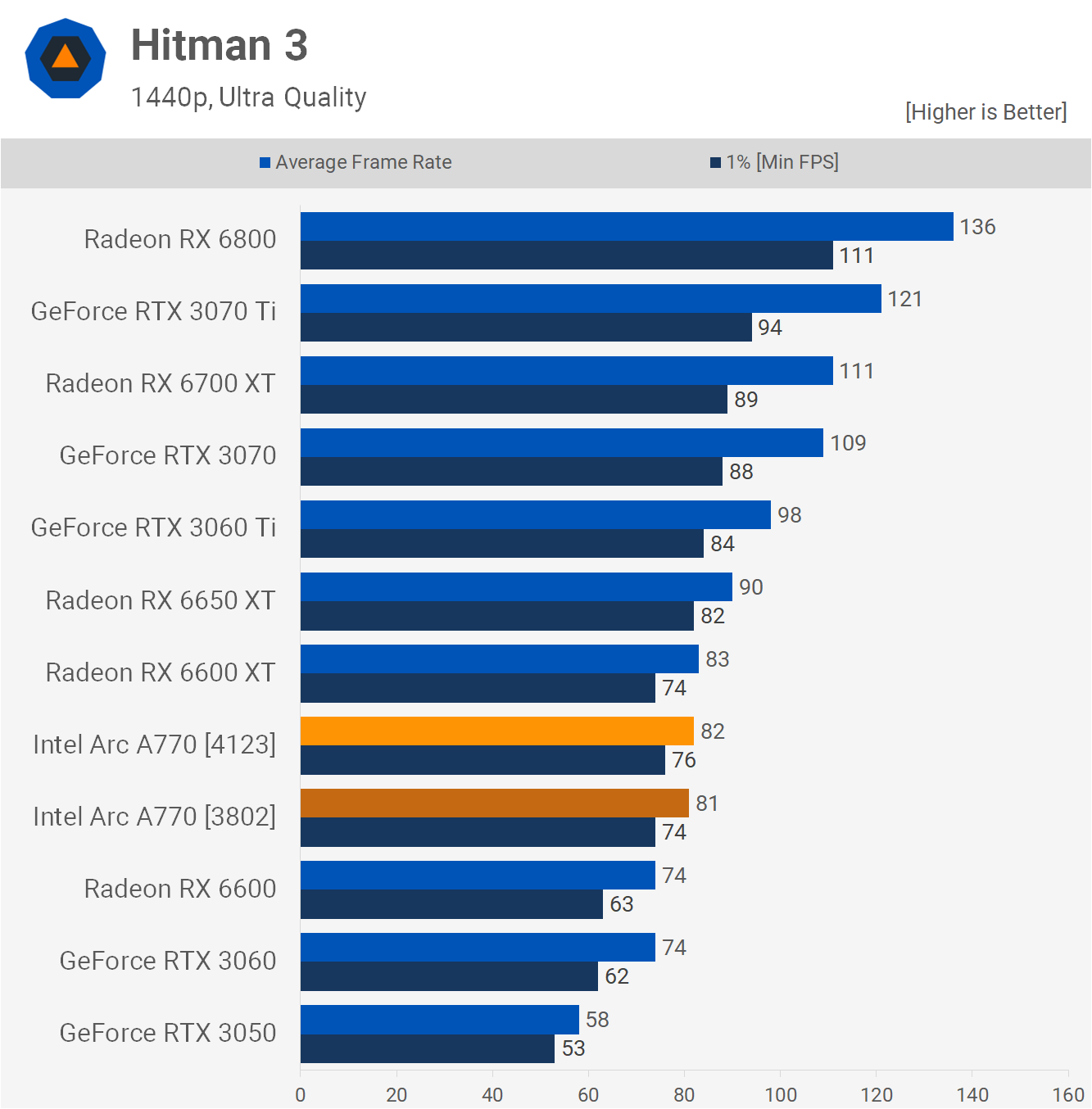

Intel did claim a small but unspecified performance improvement in Hitman 3, and we did see some very mild gains at 1080p: 3% in the average fps and 5% in the 1% lows.

However, the improvements were very minor at 1440p: 1% in the average fps, which is negligible, and 3% in the lows.

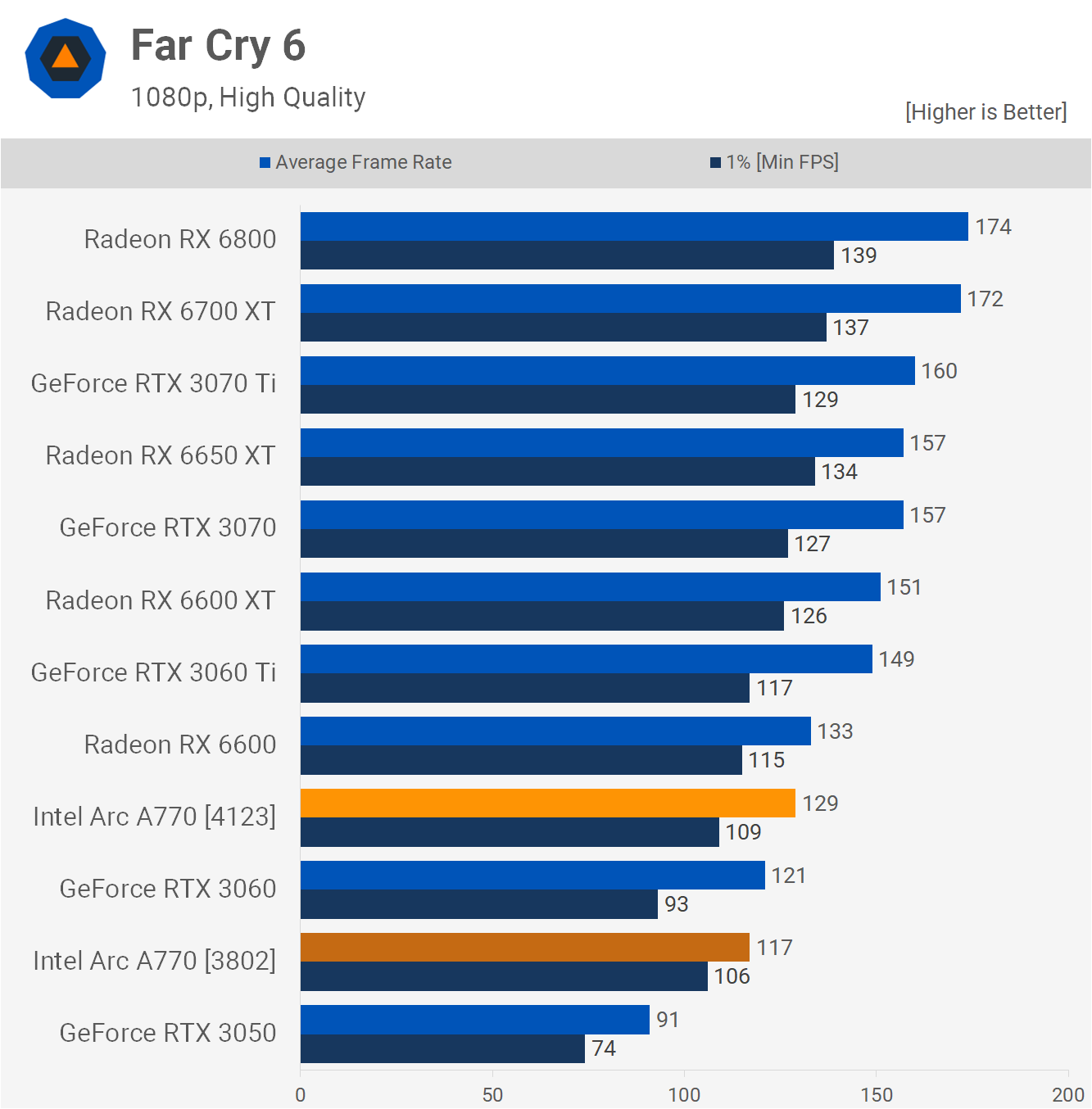

Interestingly, we did see strong performance gains in Far Cry 6 and this is a title that was shown to be improved in Intel's graphs. At 1080p the A770 was 10% faster with the newer driver, allowing it to overtake the RTX 3060 by a 7% margin, though it did still trail the 6650 XT by a rather substantial 17% margin.

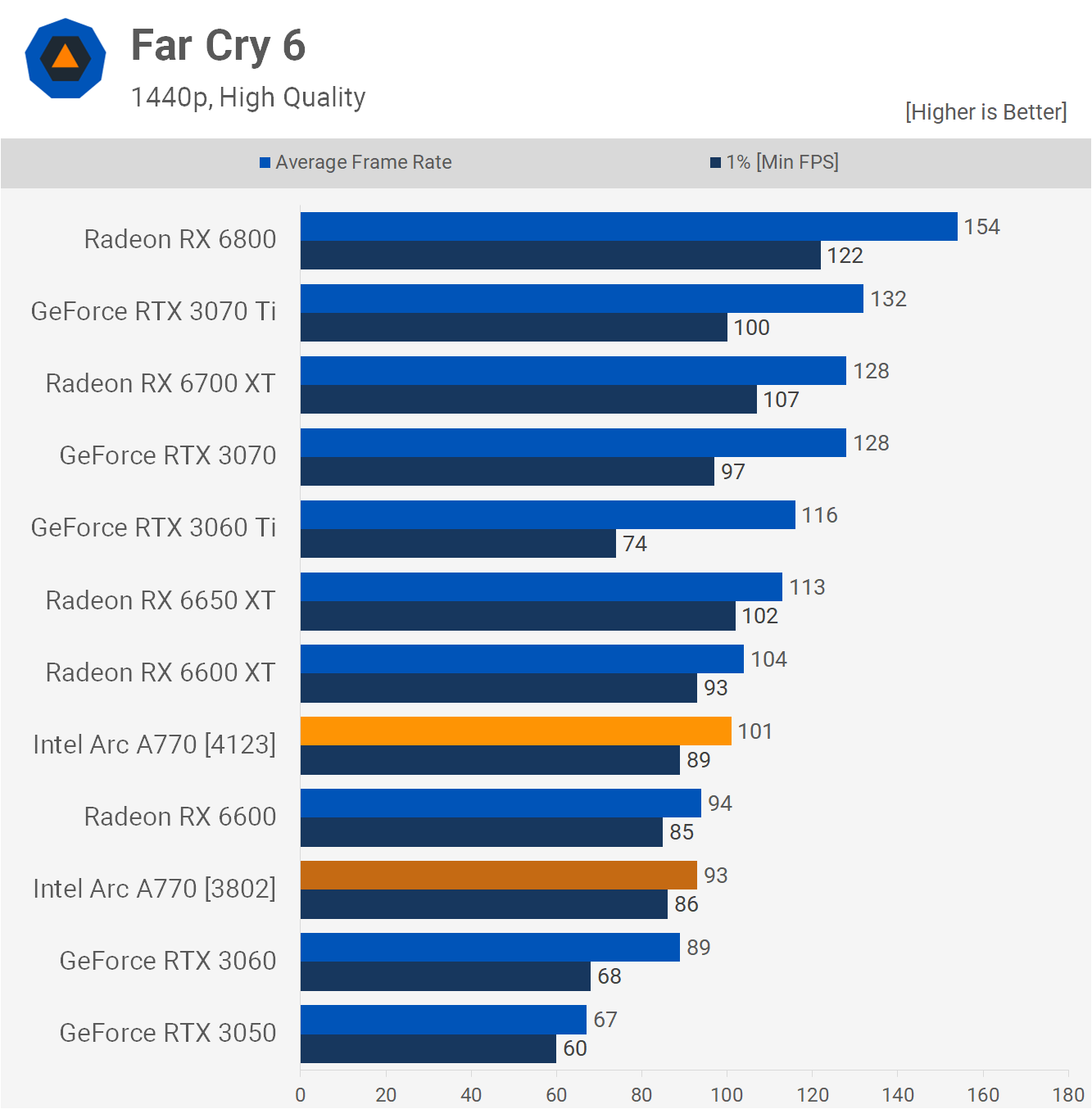

Then at 1440p we saw a 9% improvement, though the A770 was still 11% slower than the 6650 XT. Exactly why this particular game performed so much better, and not some of the other titles with claimed increases, is something of a mystery.

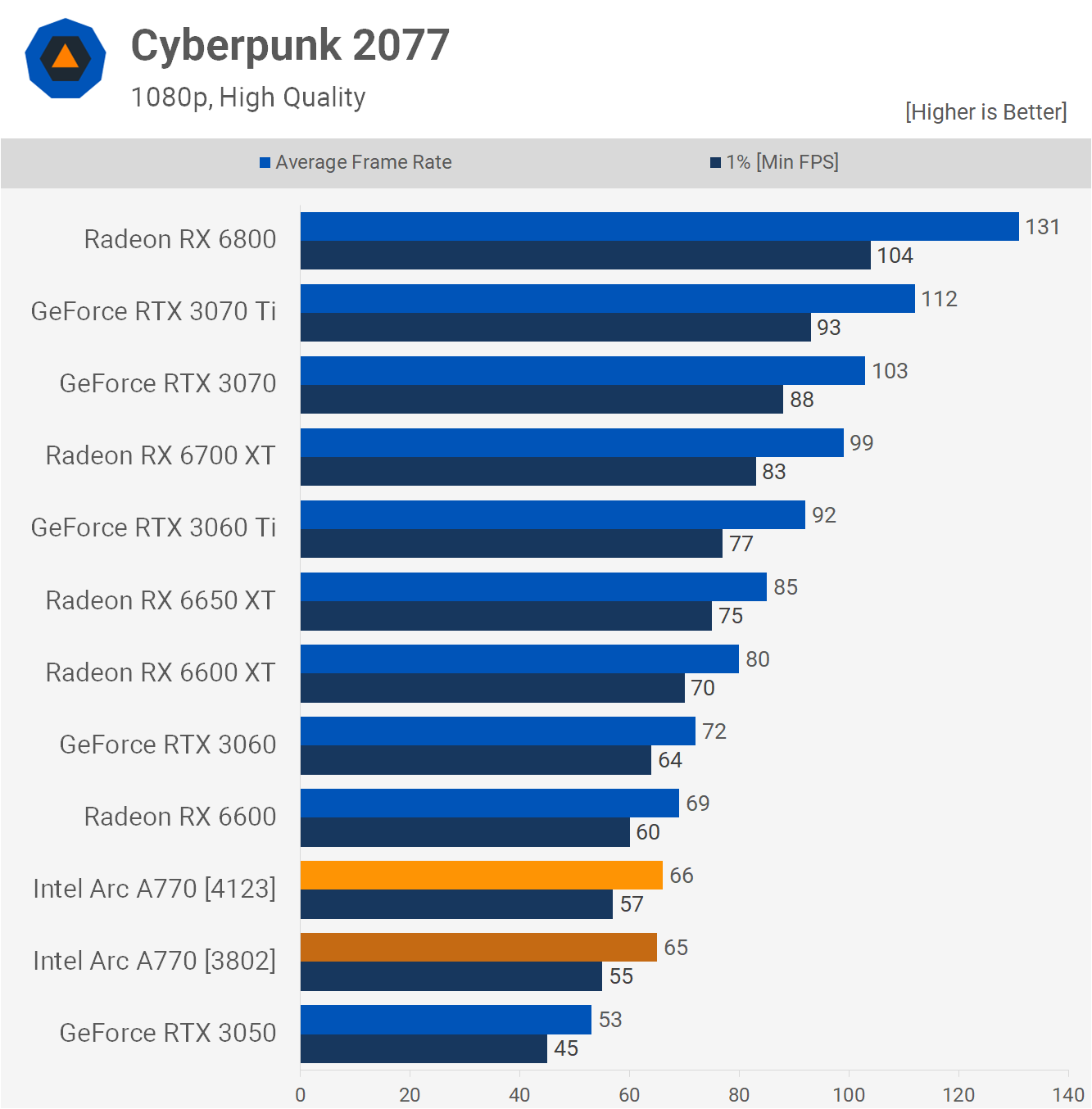

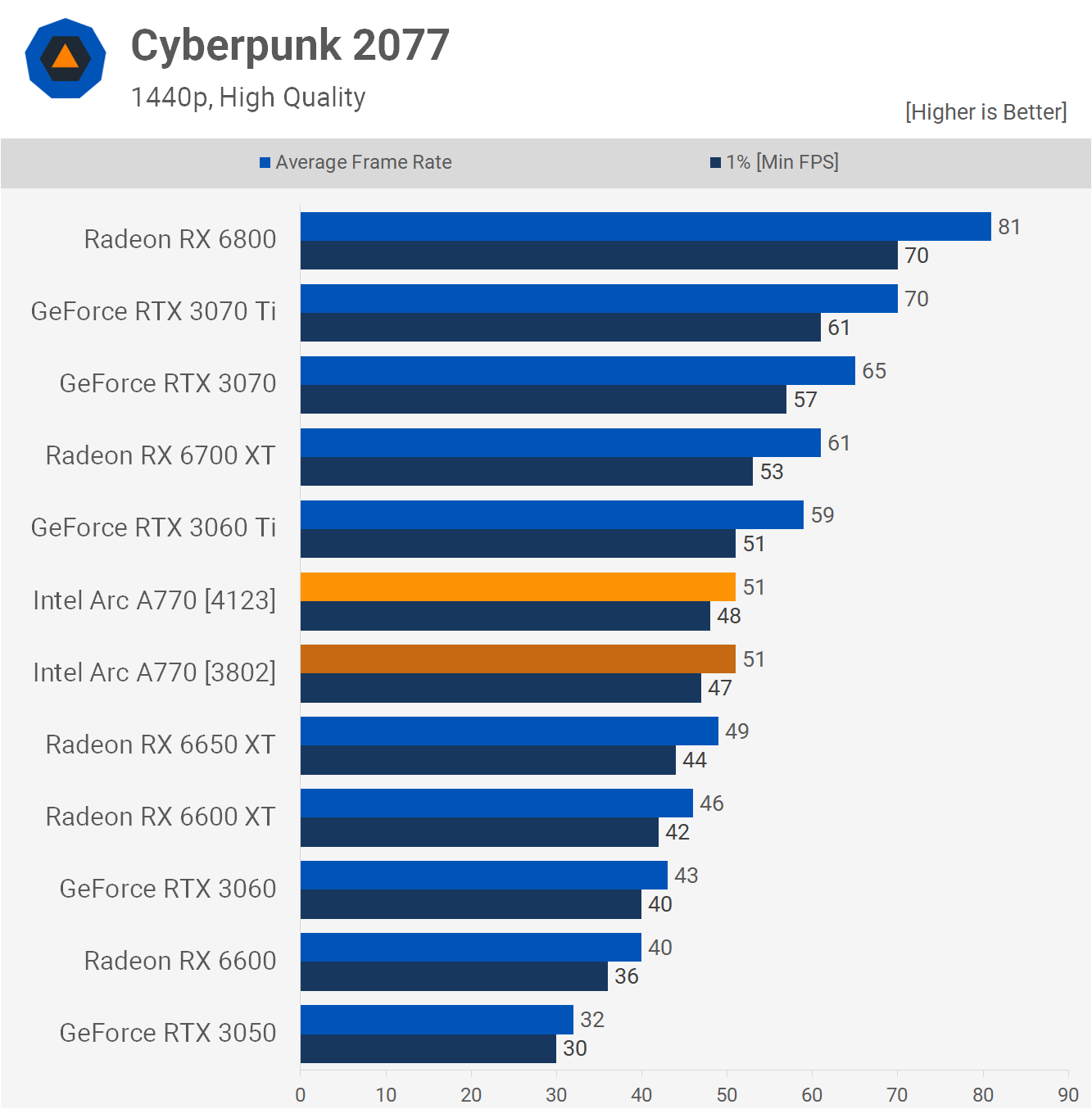

Cyberpunk 2077 is also on Intel's list, but our tests showed little change. At 1080p, the 1% lows improved by 4% but the average frame rate was just 2% better. The A770 still performs worse than the RX 6650 XT and RTX 3060.

At 1440p, there was no detectable change in any of the frame rates with the newer drivers, but the A770 did outperform the AMD and Nvidia GPUs: it lost just 22% of its 1080p performance, compared to a significant 40% loss for the RTX 3060 and 42% for the 6650 XT.

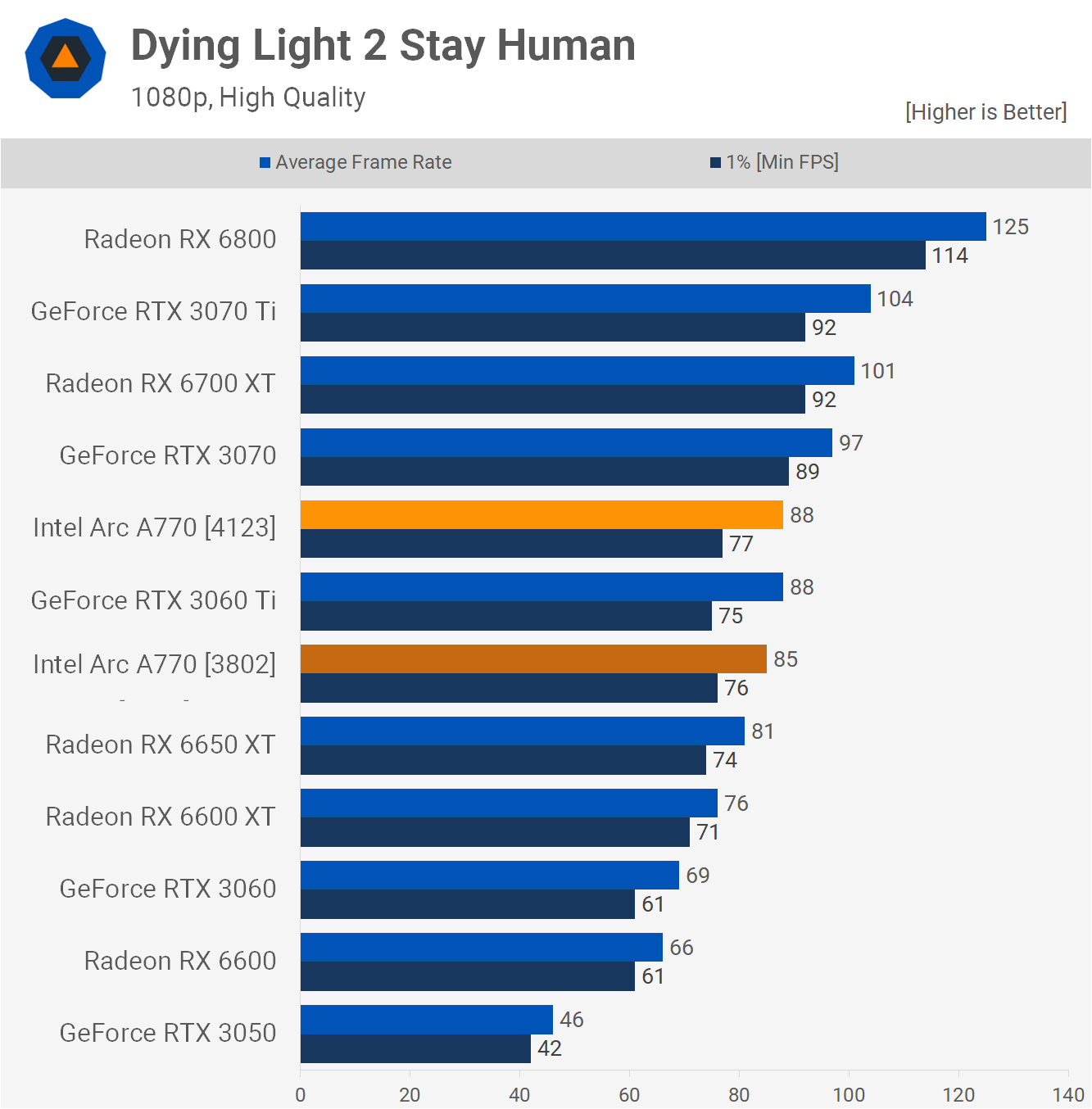

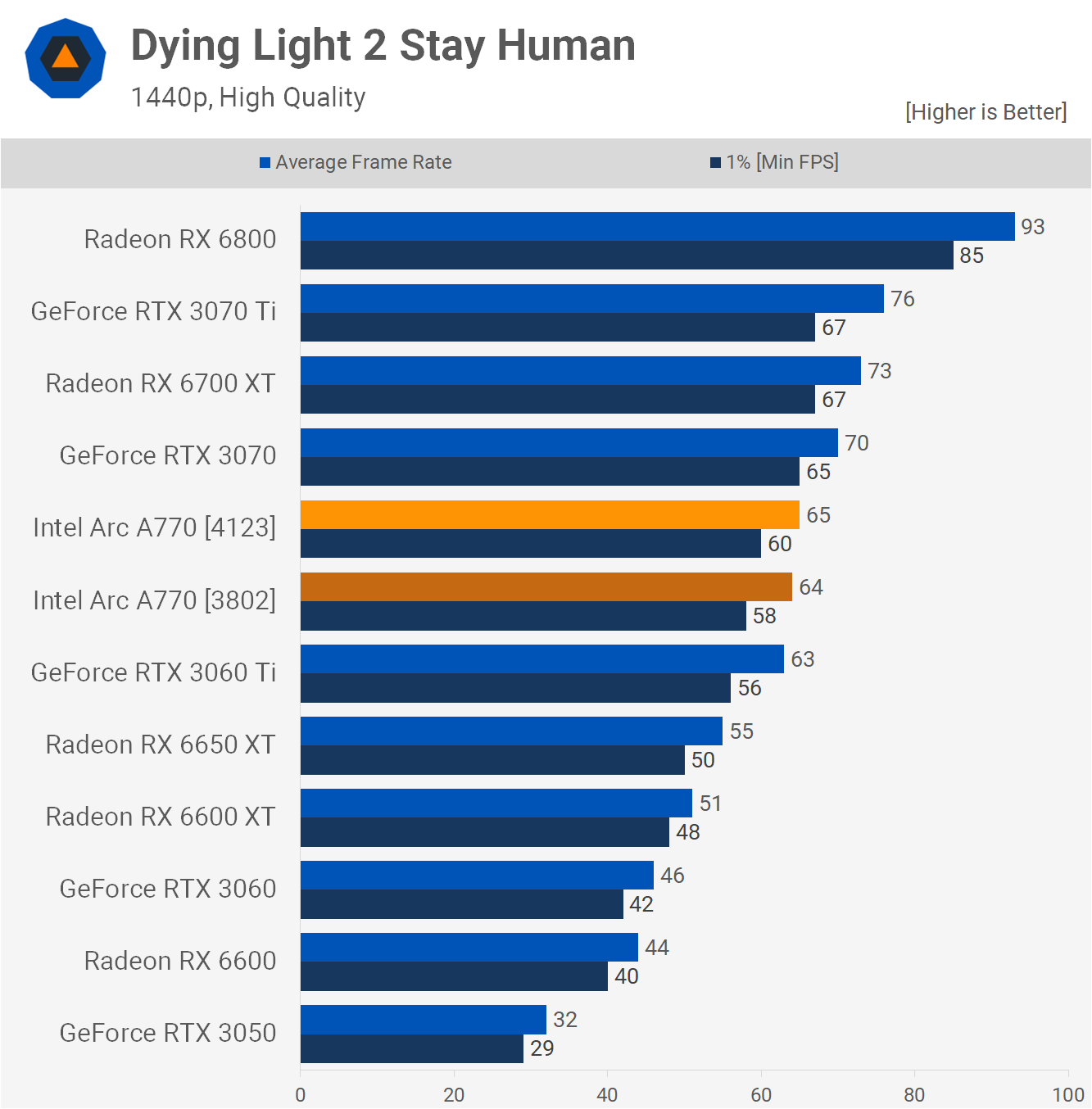

Dying Light 2 was among the biggest gains Intel claimed for DX12 titles, though here we're using DX11, as the newer API is only really needed for ray tracing. The game itself recommends DX11 when RT effects are disabled.

At 1080p we saw a small 4% boost, though only in the average frame rate – the 1% lows were effectively the same (a mere 1% better).

Moving up to 1440p, the gains swapped around, with the average fps improving by just 2% and the lows by a fraction over 3%. The increases were certainly consistent but not enough to make any kind of a fuss over.

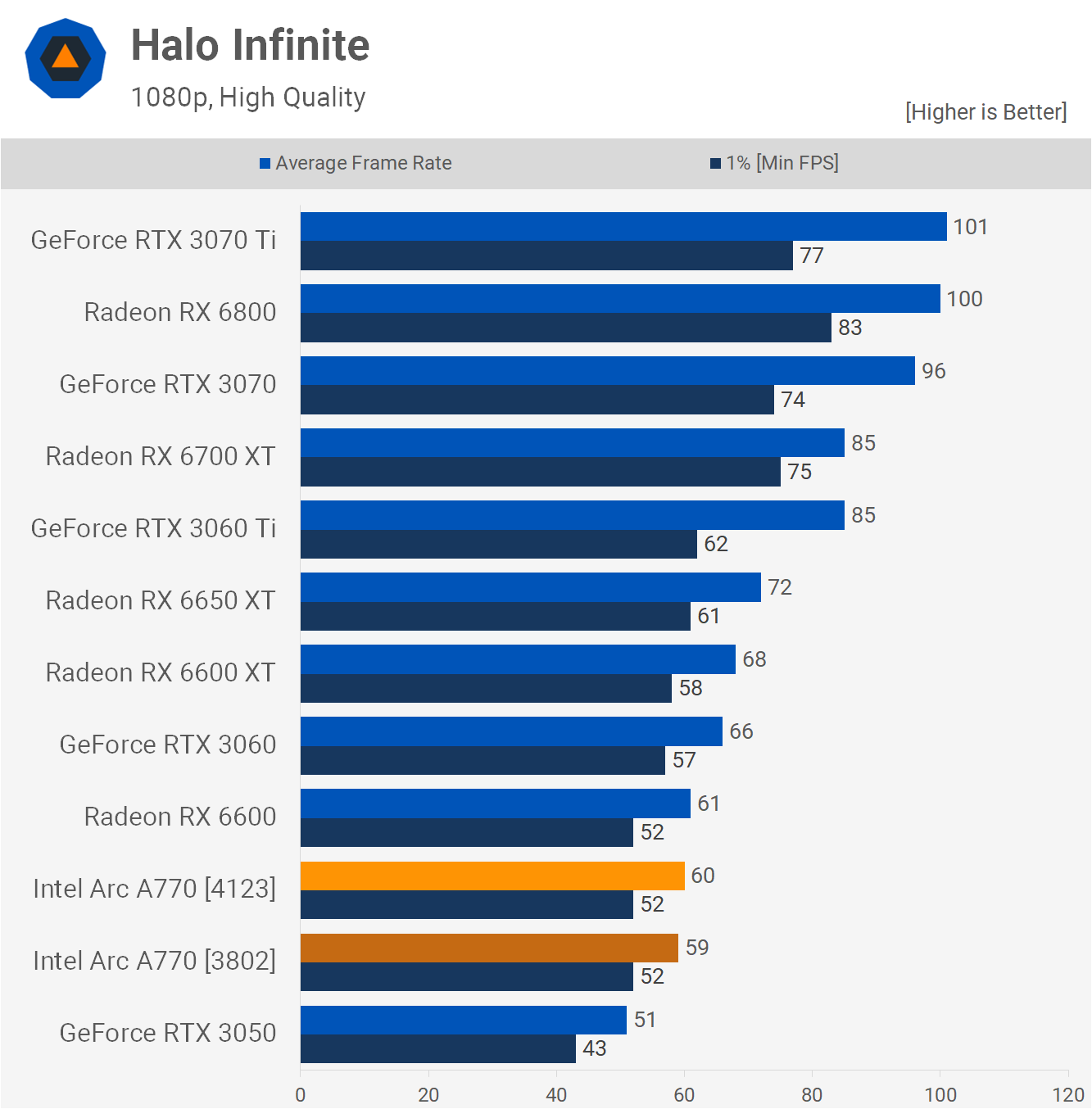

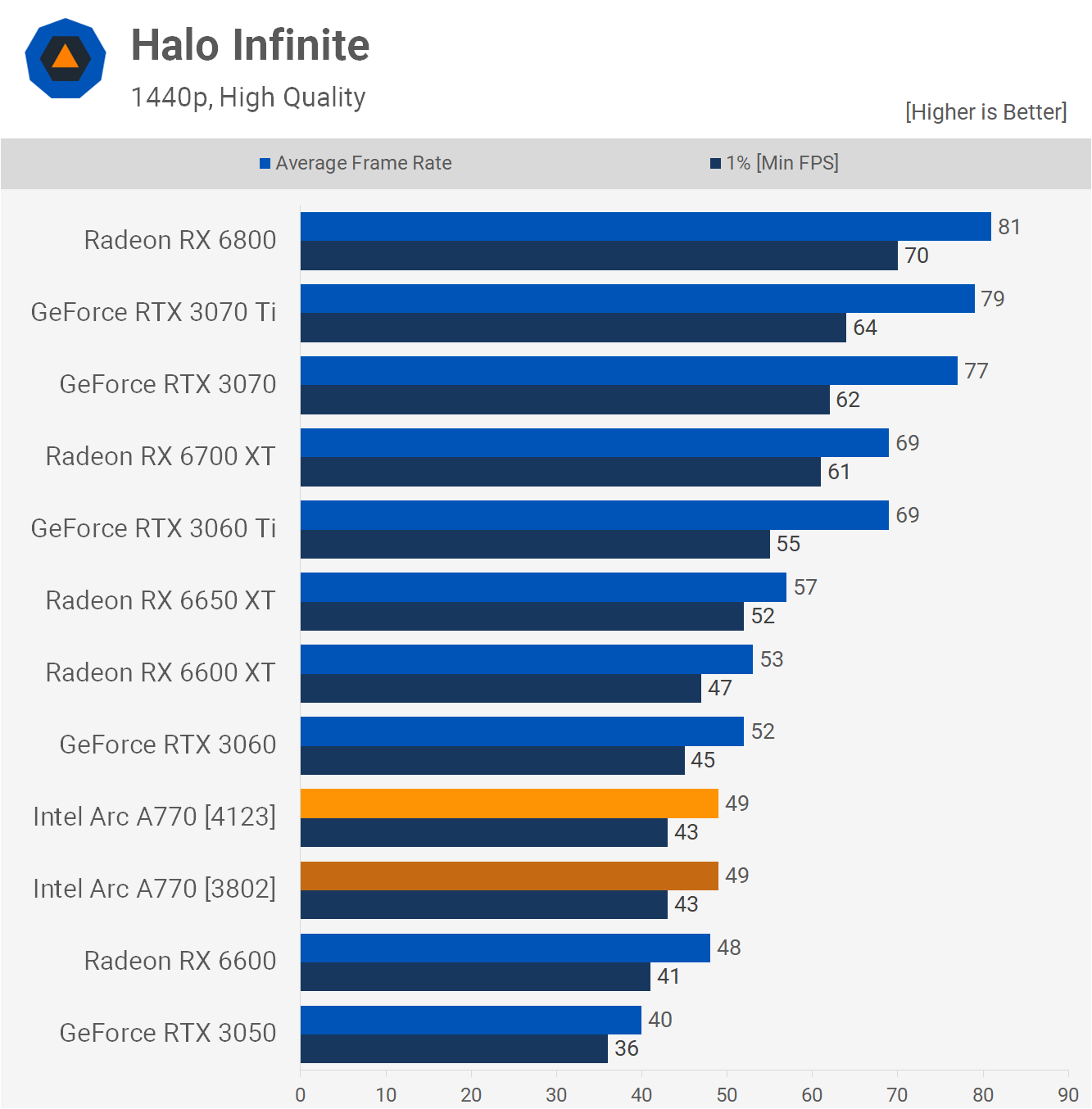

Halo Infinite wasn't mentioned by Intel, so no performance claims for this title, and as you can see, our results with the latest driver effectively matched what was seen using the release driver.

It's worth pointing out that this game also has a number of known graphical issues with Arc GPUs and Intel has yet to solve those.

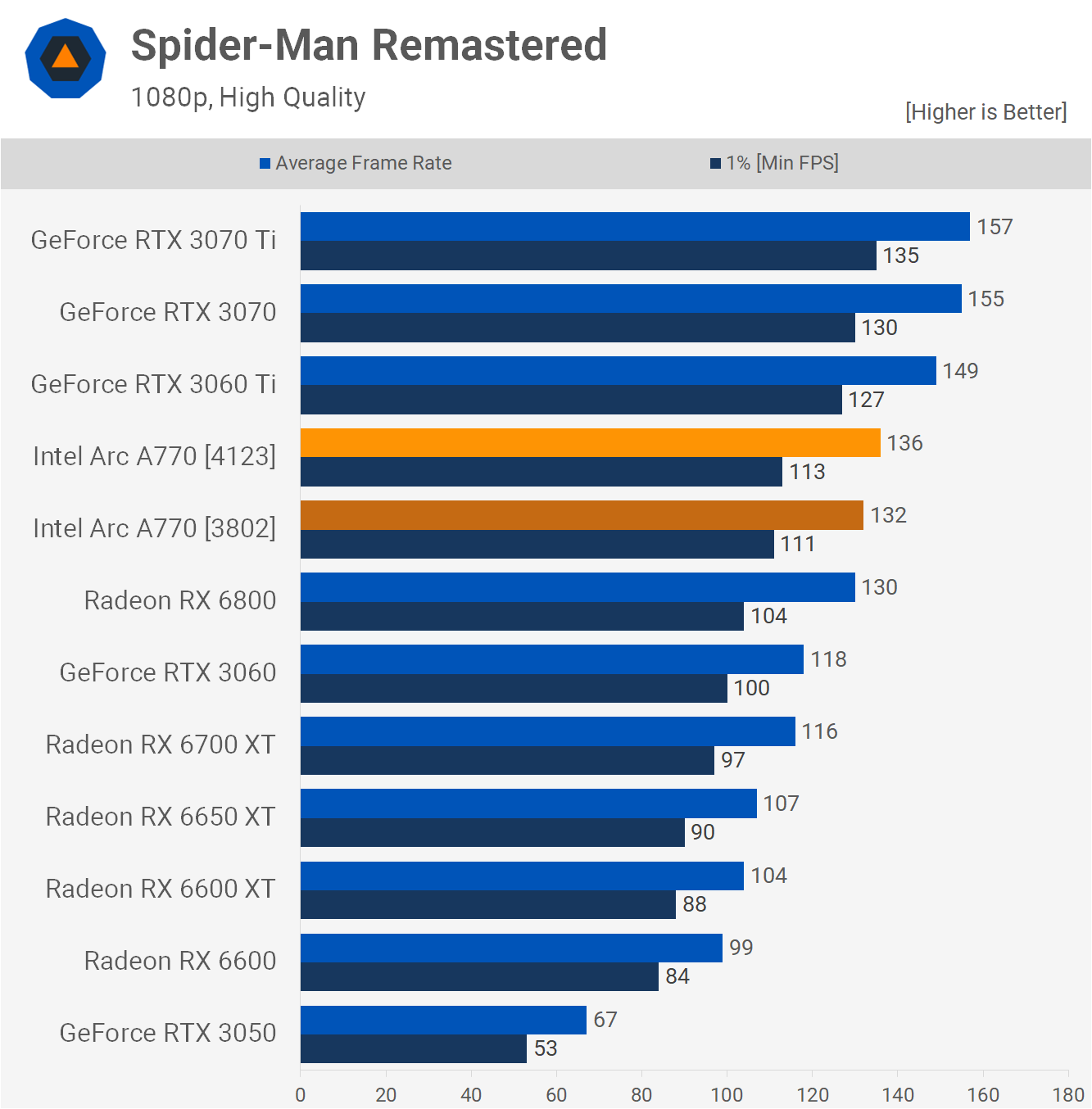

The second-to-last game tested was Spider-Man Remastered, a title that ran exceptionally well in our initial testing. Despite that, Intel still claimed improved performance with the newer driver.

At 1080p, a 3% increase in the average frame rate and a 2% rise in the lows certainly aren't significant, but they're an improvement on an already very impressive performance.

We also saw a 4% boost at 1440p, taking the A770 to 108 fps. That made it just 4% slower than the RTX 3060 Ti and 11% faster than the RX 6700 XT.

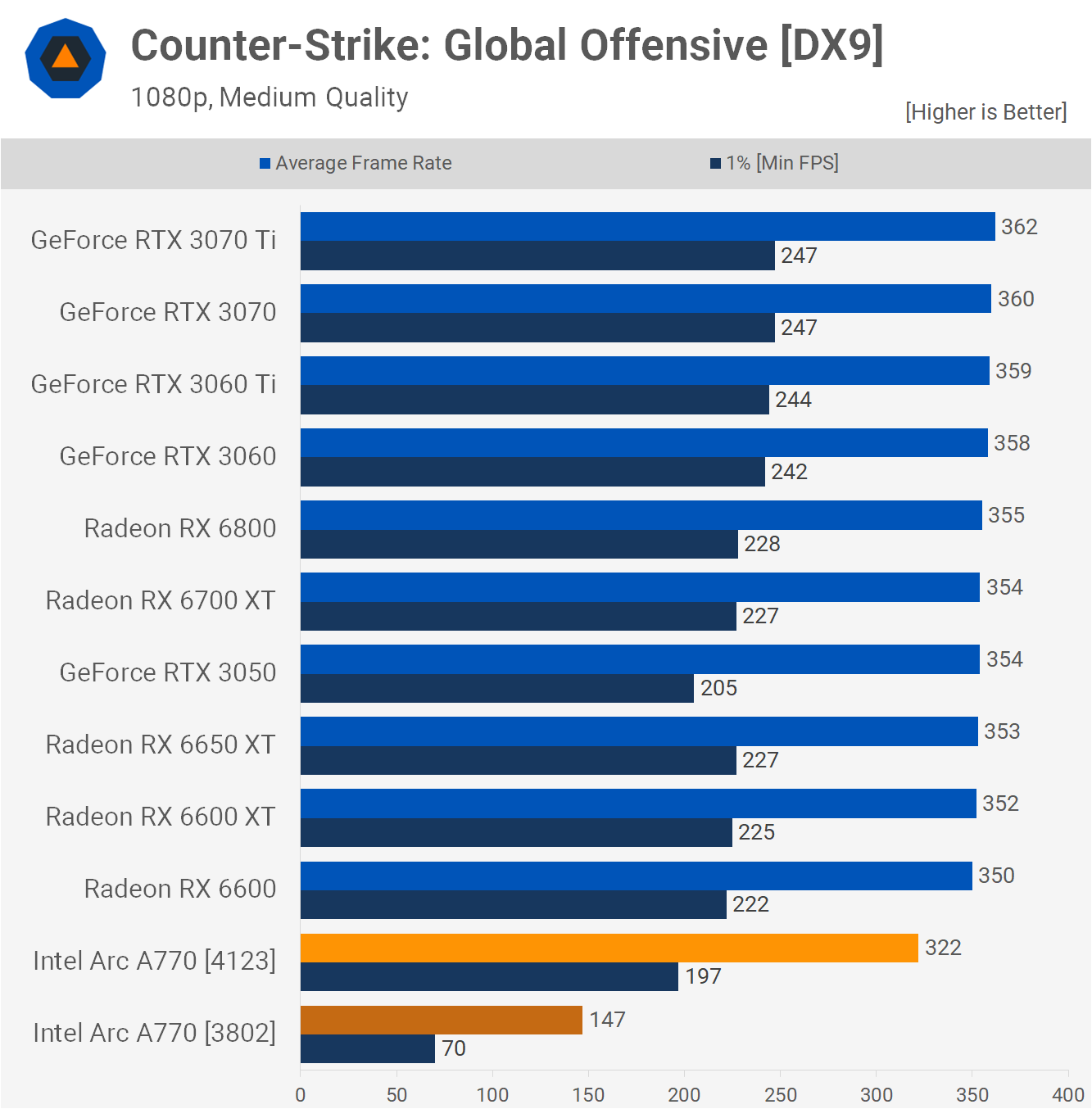

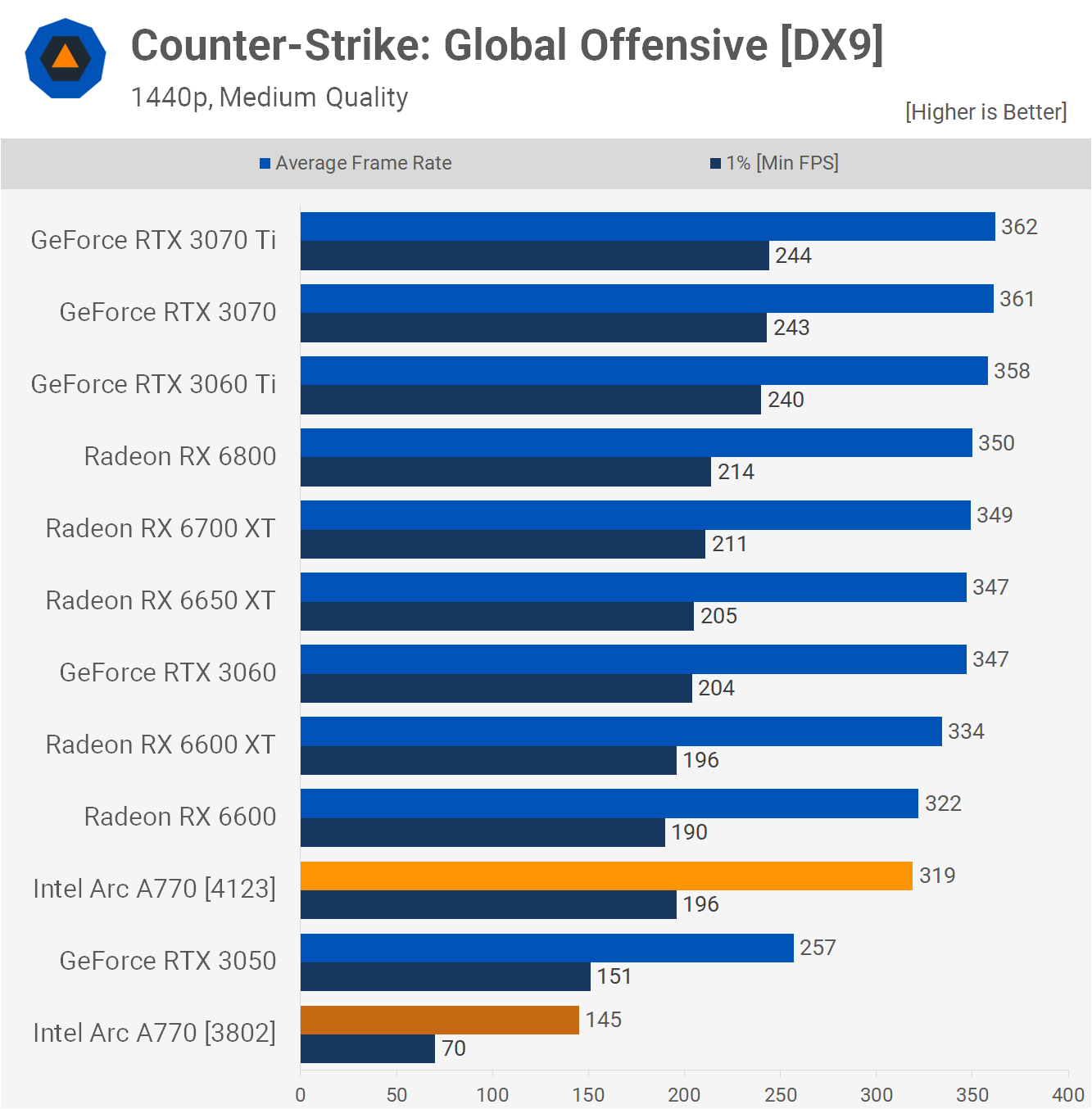

In the launch review, the only DirectX 9 game that was tested was Counter-Strike: Global Offensive, and the Arc results were truly horrible.

We're very happy to report that Intel has largely solved the performance-related issues: at 1080p, we saw a massive 119% improvement in the average frame rate and a frankly ridiculous 181% increase in the 1% lows.

Intel still isn't up to speed with AMD and Nvidia, though, whose cards are CPU limited in this testing.

We saw the same level of improvement at 1440p, which shows just how badly the Arc GPUs were being held back by the drivers. No matter how you look at the figures, these are great results.

However, the performance issues in CSGO aren't completely solved, as we did still see quite a few stutters when actually playing the game, though it's worlds better than it was.

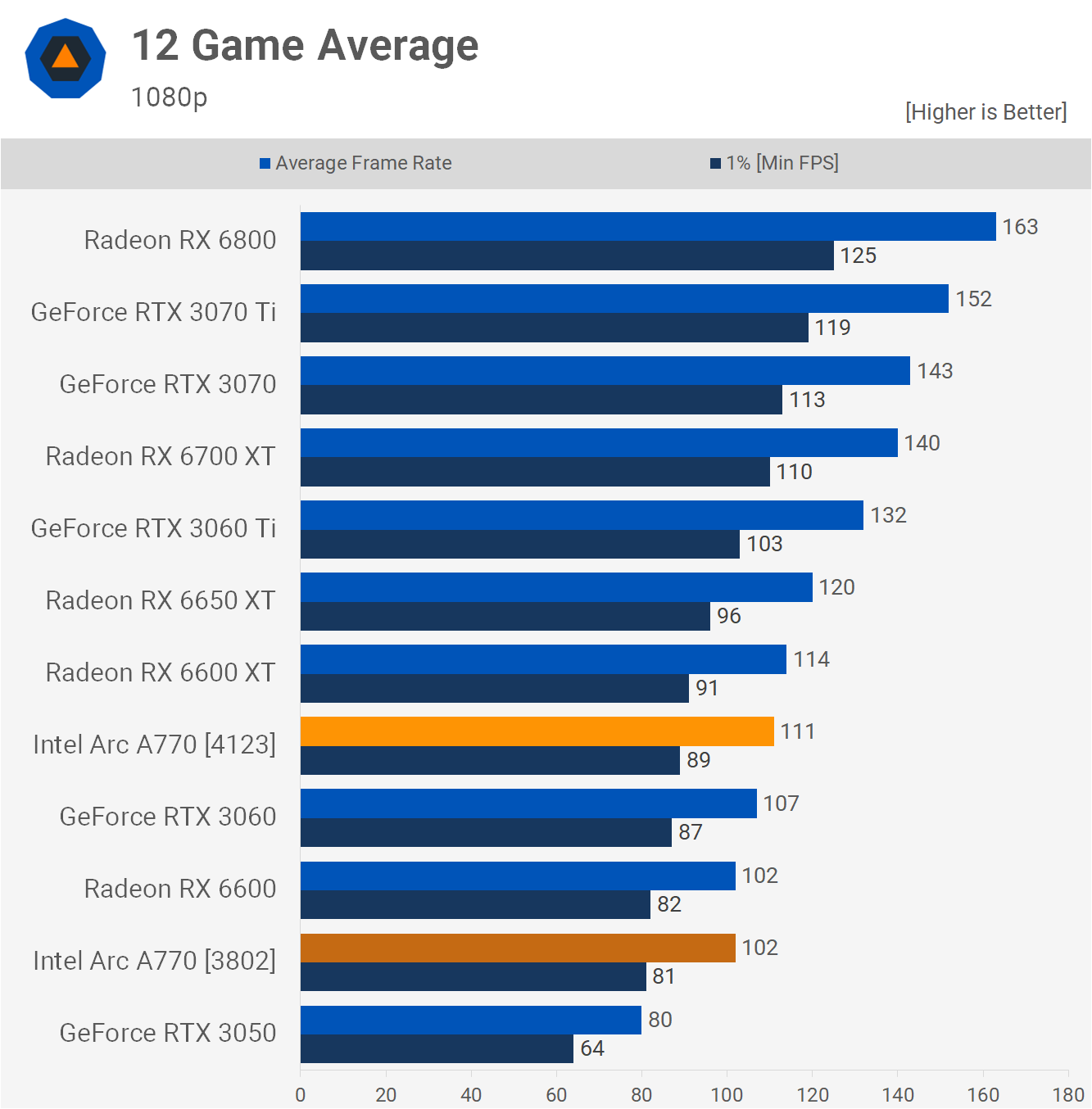

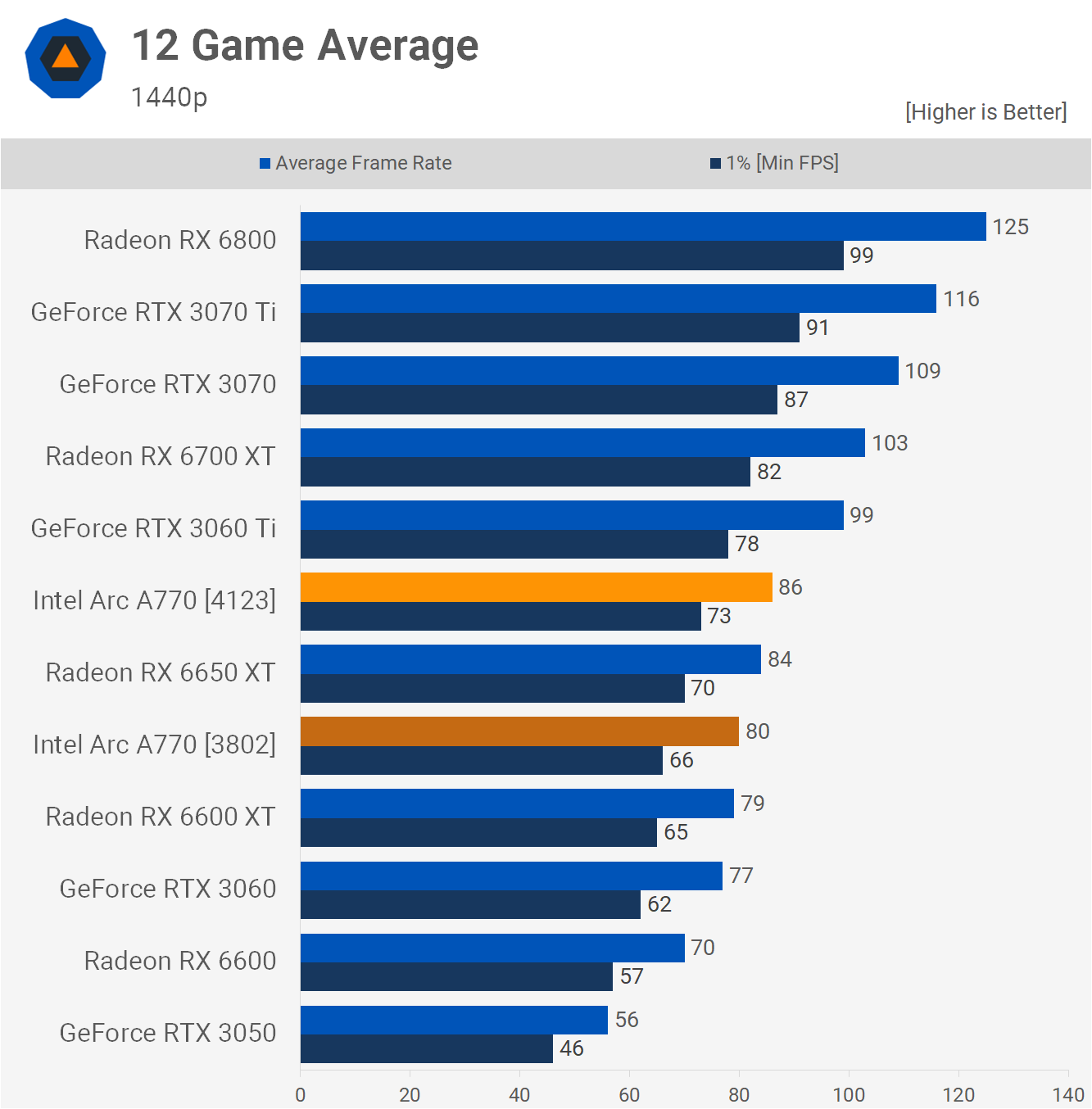

12-Game Average Results

Taking the geometric mean of all the results, the Arc A770 is now 9% faster at 1080p than it was at launch, which is an impressive uplift considering it has come purely from driver updates. But that places it just 4% ahead of the RTX 3060, and it remains 8% slower than the 6650 XT.
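A geometric mean weights each game equally regardless of its absolute frame rate, so a very high-fps title can't swamp a 60 fps one. A minimal Python sketch of how such an average uplift can be computed from per-game results; this is our illustration, not necessarily the exact tooling behind these charts, and the inputs are hypothetical:

```python
from statistics import fmean, geometric_mean

def average_uplift(old_fps: list[float], new_fps: list[float]) -> float:
    """Geometric-mean ratio of new to old frame rates, minus 1 (0.09 means +9%)."""
    if not old_fps or len(old_fps) != len(new_fps):
        raise ValueError("need two non-empty fps lists of equal length")
    ratios = [new / old for old, new in zip(old_fps, new_fps)]
    return geometric_mean(ratios) - 1

# Hypothetical two-game example: a high-fps title that didn't change and a
# 60 fps title that gained 10%.
old = [400.0, 60.0]
new = [400.0, 66.0]
print(f"geometric mean uplift: {average_uplift(old, new):.1%}")      # 4.9%
print(f"mean-of-fps uplift:    {fmean(new) / fmean(old) - 1:.1%}")  # 1.3%
```

Averaging raw frame rates lets the 400 fps title dilute the 10% gain, while the geometric mean of per-game ratios counts both games equally.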

While it's great to see Intel making good gains in a relatively short period of time, at 1080p the model really needs to be beating the 6650 XT if Intel wants to charge $350. We'll take a closer look at this pricing difference in a moment.

At 1440p, things look up for the A770: the newer drivers boosted performance in our testing by 8% on average, which was enough to edge out the 6650 XT, albeit by just a 2% margin. Regardless, it means the Arc A770 is now faster than the more affordable Radeon GPU in our 12-game benchmark.

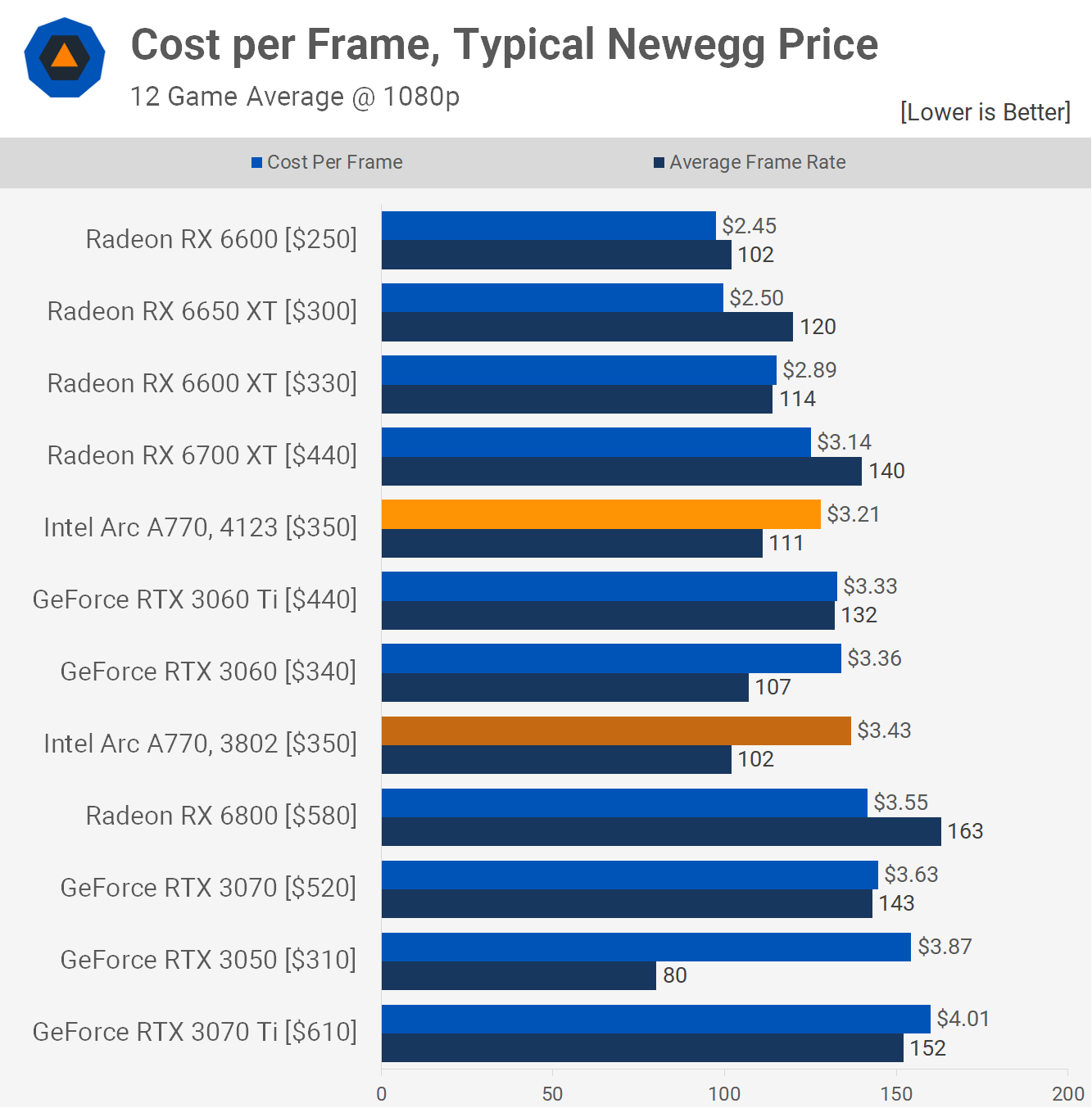

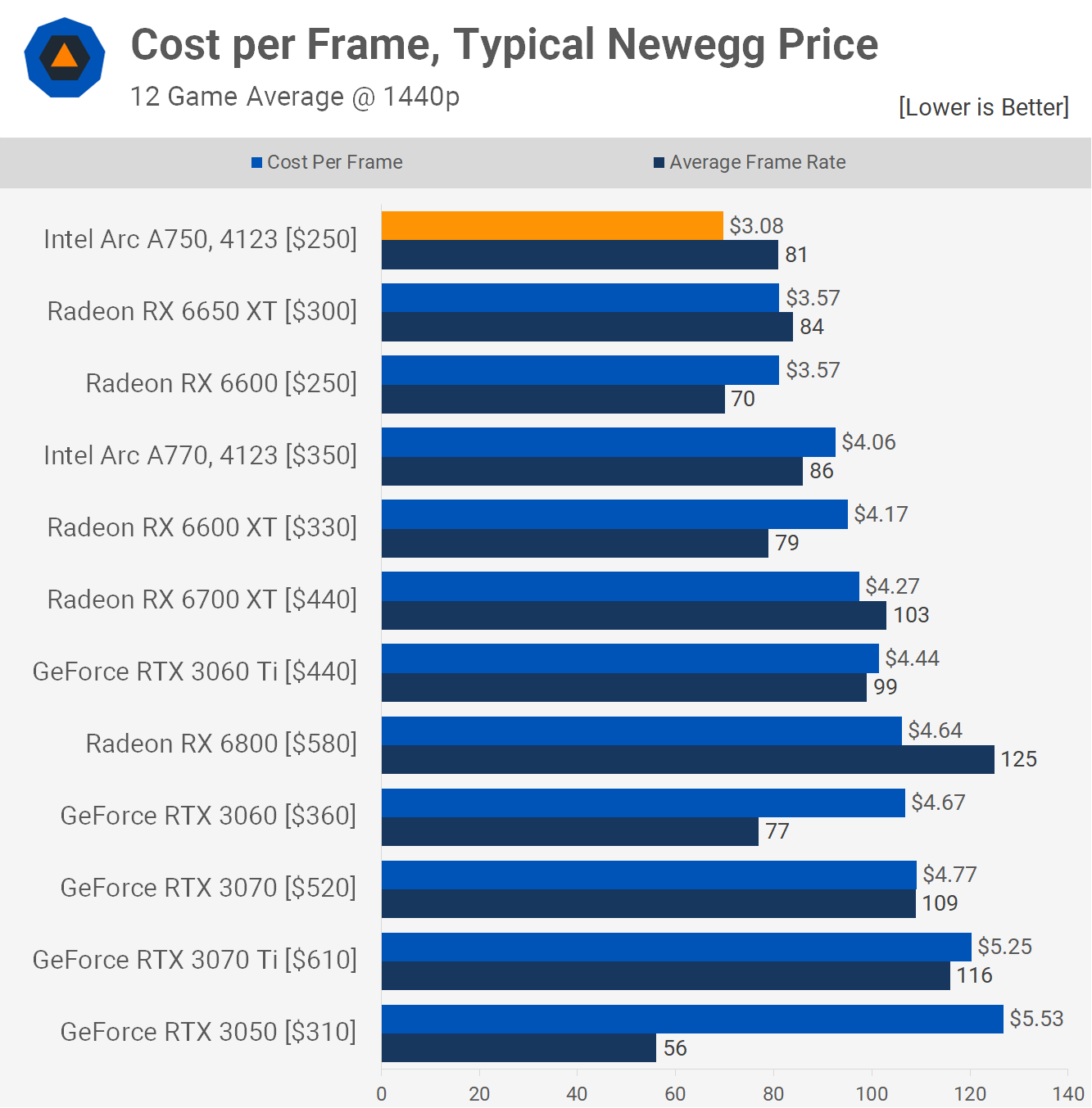

What This Means For Cost per Frame

As for what this new and improved performance does for the value of the A770, the increases have certainly helped, but ultimately they don't change the picture all that much, at least at 1080p. At $350, the A770 is still considerably more expensive than the Radeon RX 6650 XT, costing almost 30% more per frame.

The 1440p results are far more competitive, though: here the A770 was just 14% more costly per frame than the 6650 XT. While the Radeon GPU's $50 price advantage over the A770 is still substantial, Intel is now in the same ballpark and certainly offering far more value than Nvidia.
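Cost per frame is price divided by average frame rate, and when comparing two cards the absolute frame rates cancel out, so relative performance is enough to reproduce the premiums. A minimal Python sketch, assuming a $300 price for the RX 6650 XT (inferred from the $50 gap to the $350 A770) and the A770's relative performance from the 12-game averages: 0.92x at 1080p (8% slower) and 1.02x at 1440p (2% faster). The function name is hypothetical:

```python
def cost_per_frame_premium(price_a: float, price_b: float, perf_a_vs_b: float) -> float:
    """How much more card A costs per frame than card B (0.27 means 27% more).

    perf_a_vs_b is card A's average frame rate divided by card B's, so the
    absolute frame rates cancel out and only relative performance is needed.
    """
    if perf_a_vs_b <= 0 or price_b <= 0:
        raise ValueError("price_b and perf_a_vs_b must be positive")
    return (price_a / price_b) / perf_a_vs_b - 1

# Arc A770 at $350 vs Radeon RX 6650 XT at an assumed $300.
print(f"1080p: {cost_per_frame_premium(350, 300, 0.92):+.0%}")  # +27%
print(f"1440p: {cost_per_frame_premium(350, 300, 1.02):+.0%}")  # +14%
```

Those line up with the "almost 30%" and 14% premiums quoted above; small differences can come from rounding in the relative-performance inputs.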

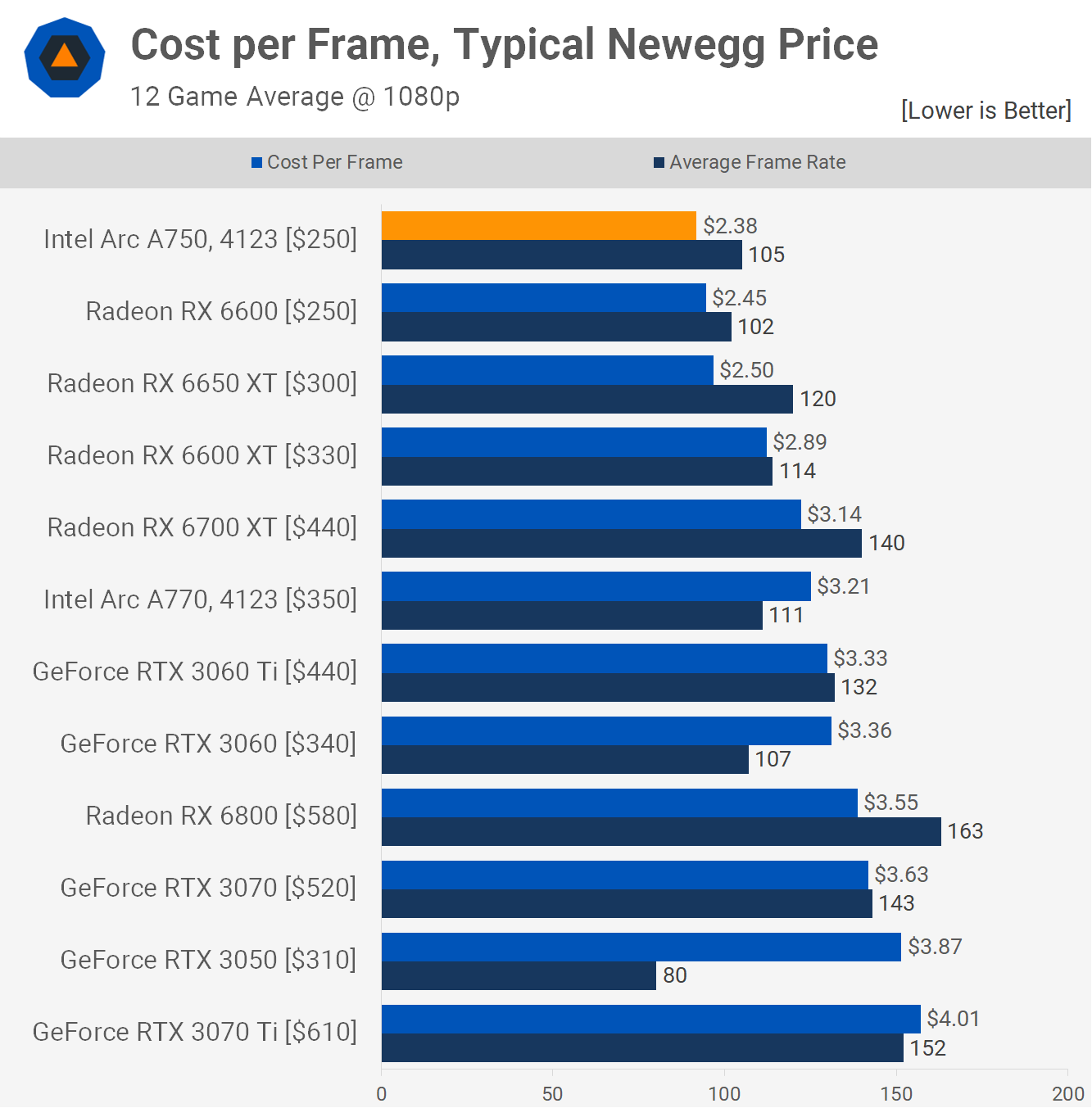

But while this is all good news, without a price cut from $350 the A770 is still a bit of a tough sell. However, it's worth noting that Intel has dropped the price of the lower-spec A750 to just $250.

When we first reviewed this card, it was priced at $290 US, and we said that to compete with the likes of AMD's Radeon RX 6650 XT, it needed to cost nearer to $220. It's not quite there, but adjusting for the improved performance brought by the drivers, our $220 wish price could be revised to $240, which means the new $250 price tag is now very close.
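Revising a wish price for a driver uplift is proportional arithmetic: cost per frame is price divided by fps, so if fps rises by some percentage, the price can rise by the same factor before value gets any worse. A minimal Python sketch, assuming the A750 picked up roughly the same ~9% average 1080p uplift we measured on the A770 (an approximation, not a separately measured A750 figure); the function name is hypothetical:

```python
def adjusted_wish_price(original_price: float, perf_uplift: float) -> float:
    """Price that keeps cost per frame unchanged after a performance uplift.

    Cost per frame is price / fps, so if fps rises by perf_uplift, the price
    can rise by the same factor before value gets any worse.
    """
    return original_price * (1 + perf_uplift)

# Assumption: the A750 gained roughly the A770's 9% average 1080p uplift.
print(round(adjusted_wish_price(220, 0.09)))  # 240
```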

That being the case, we went back and quickly ran the A750 through the 12-game gauntlet using the 412 drivers, and here are the updated cost-per-frame figures.

At 1080p, the A750 just edged out the RX 6600 as the best-value GPU, offering a cost per frame 5% lower than the 6650 XT's. That's great, but we're not convinced it's enough to steer you away from the more tried-and-true Radeon RX 6650 XT.

However, if you're playing at 1440p the A750 does become the more obvious choice, given it's now offering a 14% saving per frame when compared to the 6650 XT. Regardless of its absolute performance, $250 is a great price and if you're in the market for an entry-level GPU, we recommend that you seriously consider choosing Intel's Arc A750.

What We Learned

Overall, we didn't see much of an improvement across the majority of the games we feature in our GPU reviews. The massive gain of roughly 120% in Counter-Strike accounted for most of the 9% average boost: ignore that game and the average increase is about 2%. Essentially, DX9 performance has improved out of sight, but that was really it in our testing.
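This is easy to check because of how geometric means work: the 12-game figure is the 12th root of the product of the per-game ratios, so only the product of the other eleven games matters, and eleven copies of their roughly 2% average stand in for them exactly. A quick Python sketch using the approximate figures from the text (about +2% for the other games, +119% for CSGO at 1080p), so the result is approximate too:

```python
import math

other_games = [1.02] * 11  # ~+2% geometric-mean uplift across the other 11 games
csgo = 2.19                # +119% average fps in CSGO at 1080p

# Geometric mean of all 12 per-game ratios = 12th root of their product.
combined = math.prod(other_games + [csgo]) ** (1 / 12)
print(f"12-game uplift: {combined - 1:.1%}")  # 8.7%

# Same calculation with CSGO treated as unchanged (ratio 1.0).
without_csgo_gain = math.prod(other_games + [1.0]) ** (1 / 12)
print(f"if CSGO had not improved: {without_csgo_gain - 1:.1%}")
```

Roughly 8.7% rounds to the 9% average reported earlier, confirming that a single DX9 title does most of the lifting.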

At $350, the Arc A770 is still too expensive for what it offers, and Intel needs to address this if it wants to boost its market share. But paired with the latest drivers, the A750 is certainly worth considering at $250, thanks to the price drop.

The Arc A750 is only a little slower than the A770 (and the competition) and has some other potential advantages over the 6650 XT, namely ray tracing support and upscaling performance. Intel's graphics chip has dedicated hardware for both: ray tracing units and XMX matrix engines, the latter used by XeSS upscaling.

Granted, RT support is fairly pointless on these entry-level GPUs. The A750 averaged 81 fps at 1440p and 105 fps at 1080p in our testing, so halving those frame rates, or worse, isn't something anyone is going to be interested in.

To get the best upscaling performance with the A750 or A770, Intel's XeSS needs to be implemented in games, and this is where the Radeon RX 6650 XT has a firm lead, as far more games offer FSR than XeSS. For now, though, Intel appears committed to making Arc work, and driver support for new games has been good, so fingers crossed that this level of dedication continues.