A hot potato: As AMD unveiled two new graphics cards to round out its RDNA 3 lineup, it also tried to clear up recent controversy over its agreements with game developers. Even as the company launches a Starfield hardware bundle, it remains unclear whether the game will support Nvidia's DLSS.

On Friday, AMD Chief Architect of Gaming Solutions Frank Azor said that if Bethesda wanted to implement rival Nvidia's DLSS upscaling technology in its upcoming RPG Starfield, AMD would not stand in the way.

"If they want to do DLSS, they have AMD's full support," Azor told The Verge, adding that nothing is blocking Bethesda from using it.

Azor's comments address growing suspicions that Team Red keeps DLSS out of most games for which it signs marketing agreements. Despite his assurance, Starfield likely won't officially support the feature at launch.

Lists comparing titles sponsored by the two companies show that most major Nvidia-sponsored games support AMD's FSR. In contrast, far fewer Team Red games include Team Green's upscaling solution.

AMD's earlier reluctance to address the situation didn't help, and the company still couldn't disclose the full details of its contracts this week. Azor acknowledged that the agreements involve money in exchange for technical support and that AMD expects partners to prioritize its technologies over its competitors'. However, he stressed that AMD doesn't forbid developers from implementing DLSS.

> So after combing through Starfield preload files on PC, I don't see any sign of it supporting DLSS/XeSS. If true I think this would generate a lot of backlash for Bethesda & AMD (due to their refusal to confirm whether they block rival GPU vendor techs in their sponsored games). pic.twitter.com/U67On3x6Kt
>
> – Sebastian Castellanos (@Sebasti66855537) August 18, 2023

A few past cases support Azor's assertion. Sony's recent PC ports include both upscalers regardless of sponsor, and Microsoft's Deathloop added DLSS in a post-launch update despite an AMD partnership. Unfortunately, data mining of Starfield's preload files so far shows no sign of DLSS or Intel's XeSS, though the game will support FSR 2 at launch.

Azor suggested that Bethesda might have focused on FSR 2 for Starfield because it runs on Xbox and on all recent PC graphics cards, whereas DLSS requires an Nvidia GeForce RTX 2000-series GPU or newer. Despite FSR's broader compatibility, comparisons usually give DLSS the edge in image quality.

In any case, although only DLSS and XeSS rely on machine learning, all three are temporal upscalers that draw on the same basic engine data, such as motion vectors, depth buffers, and jittered frames. Implementing one therefore makes adding the other two relatively straightforward, especially for a developer with Bethesda's resources. The clearest evidence is that games supporting only DLSS or only FSR 2 routinely receive third-party mods that add the missing upscaler. Many RTX GPU owners are expecting one such mod from prolific modder PureDark, who has recently added DLSS to several older AAA titles, including Bethesda's Skyrim and Fallout 4.

However, users have criticized PureDark's policy of locking downloads behind $5 Patreon contributions. One Star Wars Jedi: Survivor player warned that while a single payment grants access to the latest version of that game's DLSS mod, downloading later patches requires an ongoing subscription. Still, PureDark isn't the only modder making DLSS patches, and others could just as easily bring DLSS 2 to Starfield for free.

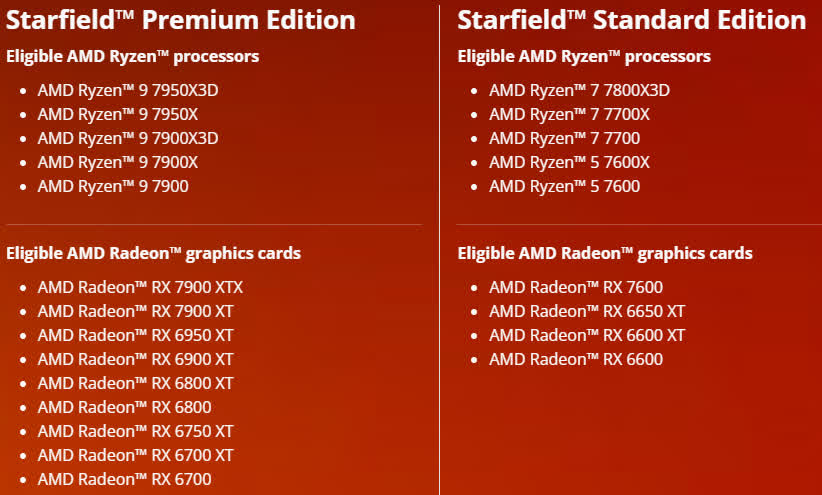

In related news, AMD revealed the details of its Starfield hardware bundle. Purchasing any currently available Ryzen 9, 7, or 5 CPU from the 7000 or 6000 series guarantees a Steam copy of the game. Radeon RX 7000- and 6000-series GPUs from the RX 6600 upward are also eligible, but the deal excludes the newly unveiled RX 7800 XT and RX 7700 XT, which launch on the same day as Bethesda's game. High-end processors and graphics cards come with the premium edition, while mid-range chips include the standard edition.

Starfield launches on September 6 for PC, Xbox Series X, and Xbox Series S, but customers who pre-order the premium edition gain access starting September 1.

https://www.techspot.com/news/99929-amd-denies-blocking-bethesda-adding-dlss-starfield.html