Highly anticipated: AMD will unveil its new Radeon RX 7000 series graphics cards based on the new RDNA 3 architecture next week. The company claims that the new GPUs will offer over 50 percent higher performance per watt compared to its current lineup thanks to a 5nm process node and chiplet design.

According to the latest rumors, Team Red will announce two high-end models next week, the Radeon RX 7900 XTX and RX 7900 XT. Both will utilize AMD's flagship Navi 31 GPU, which might feature a multi-chip module (MCM) design with one graphics compute die and six memory dies. The memory dies should allow for up to 96 MB of Infinity Cache in a standard configuration (or more if the company decides to use its 3D stacking technology).

The flagship Radeon RX 7900 XTX will reportedly use a full-fat version of the Navi 31 GPU with 12,288 stream processors and a 384-bit memory bus width. It will come with twelve 16 Gbit GDDR6 memory chips running at 20 Gbps for a total of 24 GB of VRAM with a bandwidth of 960 GB/s.

Meanwhile, the Radeon RX 7900 XT should make use of a cut-down Navi 31 GPU with 10,752 stream processors and a 320-bit wide memory bus. As a result, it will only have ten memory chips of the same capacity and speeds for a total of 20 GB of GDDR6 VRAM and 800 GB/s bandwidth.
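The rumored capacity and bandwidth figures for both cards follow directly from the bus width, chip density, and data rate. The short Python sketch below reproduces that arithmetic. It assumes one GDDR6 chip per 32 bits of bus width (a standard, non-clamshell layout), which the leaks do not state explicitly; the `MemoryConfig` class and its field names are made up for illustration.

```python
from dataclasses import dataclass

# Assumption: each GDDR6 chip occupies 32 bits of the memory bus
# (one chip per 32-bit slice, no clamshell mode).
GDDR6_CHIP_INTERFACE_BITS = 32


@dataclass(frozen=True)
class MemoryConfig:
    """Hypothetical helper describing a card's rumored memory setup."""
    bus_width_bits: int      # total memory bus width in bits
    chip_density_gbit: int   # capacity of one memory chip in gigabits
    data_rate_gbps: float    # per-pin data rate in gigabits per second

    @property
    def chip_count(self) -> int:
        # Number of chips needed to populate the full bus.
        return self.bus_width_bits // GDDR6_CHIP_INTERFACE_BITS

    @property
    def capacity_gb(self) -> float:
        # Gigabits across all chips, divided by 8 to get gigabytes.
        return self.chip_count * self.chip_density_gbit / 8

    @property
    def bandwidth_gb_s(self) -> float:
        # Bits moved per second across the whole bus, in gigabytes.
        return self.bus_width_bits * self.data_rate_gbps / 8


rx_7900_xtx = MemoryConfig(bus_width_bits=384, chip_density_gbit=16, data_rate_gbps=20)
rx_7900_xt = MemoryConfig(bus_width_bits=320, chip_density_gbit=16, data_rate_gbps=20)

for name, cfg in (("RX 7900 XTX", rx_7900_xtx), ("RX 7900 XT", rx_7900_xt)):
    print(f"{name}: {cfg.chip_count} chips, "
          f"{cfg.capacity_gb:.0f} GB, {cfg.bandwidth_gb_s:.0f} GB/s")
```

Under those assumptions, the script yields twelve chips, 24 GB, and 960 GB/s for the XTX, and ten chips, 20 GB, and 800 GB/s for the XT, matching the leaked numbers.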

The Radeon RX 6000 series and upcoming RDNA 3 GPUs will not use this power connector.

— Scott Herkelman (@sherkelman) October 25, 2022

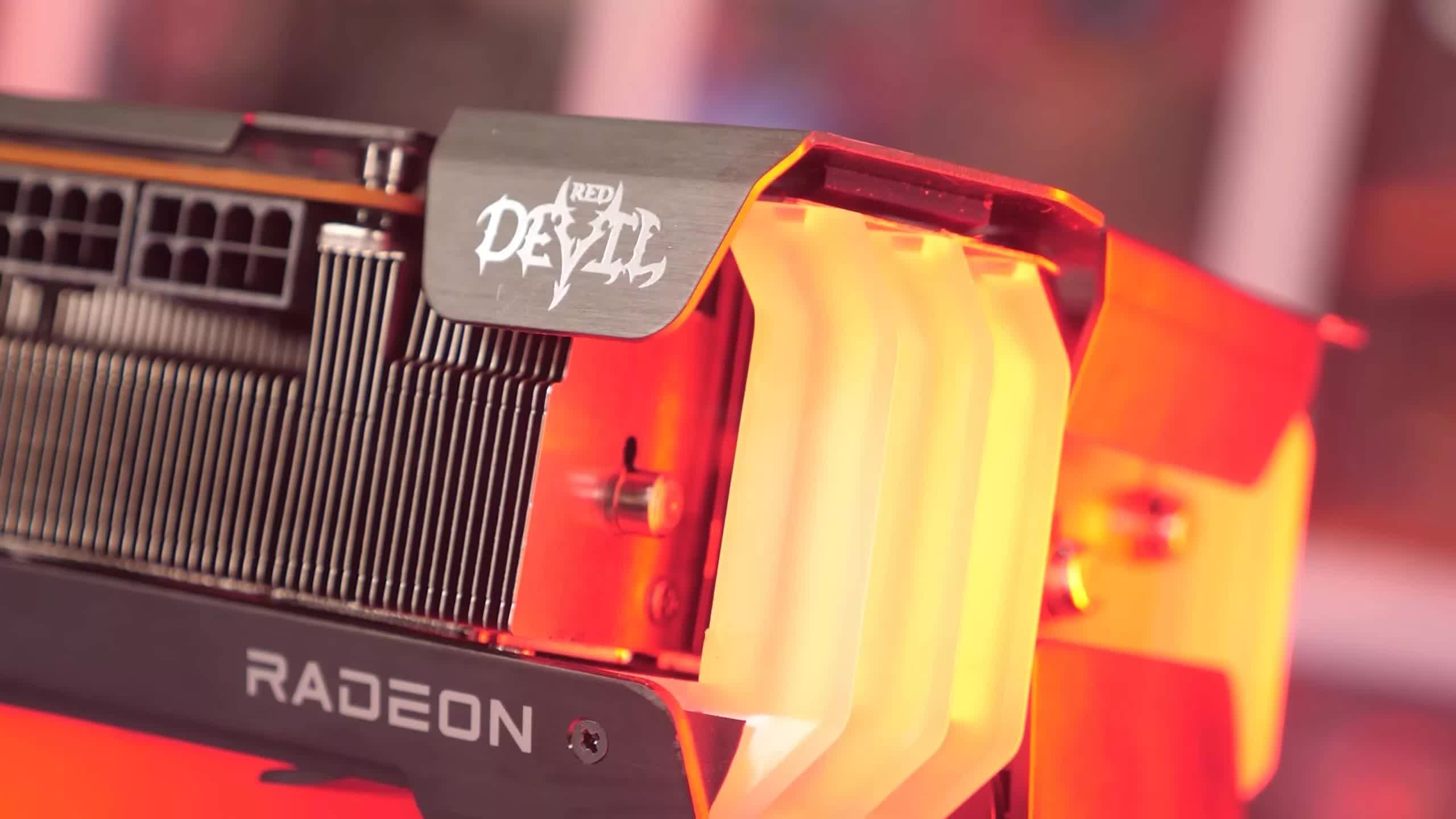

Unfortunately, TDP values are still unknown. However, AMD SVP and GM Scott Herkelman recently confirmed that the company's upcoming cards won't use the 12VHPWR connector, so they should work with current PSUs without requiring any adapters, possibly saving users some headaches. Custom designs from board partners will probably ship with up to three 8-pin power connectors for increased overclocking headroom.
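For a rough sense of what three 8-pin connectors would allow, the sketch below adds up the commonly cited PCI Express power ratings: 75 W from the x16 slot and 150 W per 8-pin connector. These are specification ceilings, not figures from AMD or the leaks, and they say nothing about the cards' actual TDP; the function name is hypothetical.

```python
# Commonly cited PCI Express power ratings (assumed here, not from the article).
PCIE_SLOT_W = 75    # maximum draw through the x16 slot
EIGHT_PIN_W = 150   # maximum draw per 8-pin PCIe power connector


def max_board_power_w(eight_pin_connectors: int) -> int:
    """Upper bound on in-spec board power for a given connector count."""
    return PCIE_SLOT_W + eight_pin_connectors * EIGHT_PIN_W


for count in (2, 3):
    print(f"{count} x 8-pin: up to {max_board_power_w(count)} W")
```

By that math, a dual 8-pin card tops out at 375 W within spec, while a triple 8-pin custom design would have up to 525 W available.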

AMD will officially announce the specs, availability, and pricing of its Radeon RX 7000 cards on November 3. They will go up against Nvidia's GeForce RTX 4090 and RTX 4080 (now a single 16 GB model after Nvidia canceled the 12 GB variant), with lower-end cards from both companies only arriving next year.

https://www.techspot.com/news/96478-amd-radeon-rx-7000-flagship-graphics-card-reportedly.html