You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

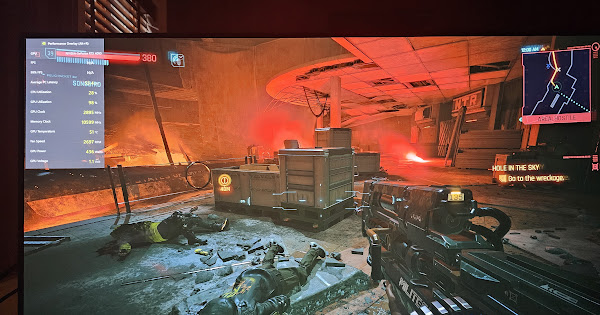

Cyberpunk 2077: Phantom Liberty GPU Benchmark

- Thread starter Steve

Well, for someone who switched to Team Red this year - I can definitely tell the difference with RT on vs. off, but not so much with all the RT options enabled.

Specs: 7700X, Red Devil 7900XTX, LG C1

With RT Lighting and Path Tracing enabled, the benchmark definitely crawled along in slo-mo. However, turning those off (with all the advanced options set to High/Ultra/Psycho) is where it got interesting...

Setting FSR 2.1 to Auto and 0.5 Sharpening yielded the following results in 4K (Avg/Min/Max):

All the RT options enabled (except for RTL and PT): 75/58/100

Turning off RT Shadow options: 84/62/119

All RT off: 114/83/152

My preference is quality and while I can't tell the difference between RT Shadows on/off, I do notice the missing reflections with RT completely off. Can't wait to get back into a whole new game on a new pretty screen.
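
For anyone who wants the relative cost of each RT step rather than raw fps, here is a rough sketch using only the three averages quoted above. The dictionary labels and the `percent_gain` helper are made up for illustration, and a single benchmark run on one system is not a controlled test.

```python
# FPS figures quoted in the post above (4K, FSR 2.1 Auto, 0.5 sharpening).
# Each tuple is (average, minimum, maximum). Labels are illustrative only.
results = {
    "RT on (no RT Lighting / Path Tracing)": (75, 58, 100),
    "RT Shadows off": (84, 62, 119),
    "All RT off": (114, 83, 152),
}


def percent_gain(baseline: float, value: float) -> float:
    """Return how much higher `value` is than `baseline`, in percent."""
    return (value - baseline) / baseline * 100.0


# Use the "RT on" average as the reference point.
baseline_avg = results["RT on (no RT Lighting / Path Tracing)"][0]

# Average-fps gain of each configuration relative to the baseline.
gains = {
    name: round(percent_gain(baseline_avg, avg), 1)
    for name, (avg, _min, _max) in results.items()
}

for name, gain in gains.items():
    print(f"{name}: {gain:+.1f}% average fps vs. RT on")
```

On these numbers, dropping RT Shadows is worth about 12% on the average, while turning RT off entirely is worth about 52%.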

> I'm kinda surprised at how good the 7900xtx is doing on a Nvidia tech demo

Nvidia sponsored games run well on all hardware, unlike "cough cough"

> Soon, game developers will drop off this RT nonsense. It's just not worth it. When 5090 or 6090 RTX card is released, they will still play catch up with the next gen games. All at the stake of losing gameplay values.

It's perfectly playable, 3600x1800 res here, DLSS + RT + PT + FG = around 110 fps average with a 4090.

Are you going to game or stop and look at light and water reflections? You are not watching a screensaver. At the frantic pace of avoiding enemy bullets you don't have time to admire all these gimmicks.

It's still pointless to me to be chasing raytracing. These cards have massive headroom, so why not use it on something other than shiny puddles?

There are so many games that look better than CP2077 and also run very well. I guess these tech sites need clicks though, so they have to follow whatever Nvidia tosses from the table.

and I say this as someone with a 4080...

It's cutting edge, and it's fascinating, but its presence in this game is just as much a marketing exercise to make us think we need a new GPU.

kira setsu

Posts: 741 +823

> It's cutting edge, and it's fascinating, but its presence in this game is just as much a marketing exercise to make us think we need a new GPU.

2077 feels more like a tech demo than a game these days. I played it and beat it and it was fine, a bit too on the nose and tryhard sometimes, and imo would've been better in 3rd person, but a fine game.

But as far as tech goes, I think something like Ratchet & Clank: Rift Apart crushes it. That game looks like a movie and does it during some of the craziest setpieces I've ever seen, without needing the best card available to do it.

Meanwhile people just slowly walk through Cyberpunk while staring at water puddles and think it's the bee's knees.

godrilla

Posts: 1,365 +894

I am playing the Phantom Liberty DLC. It's actually pretty fun at the hardest difficulty. Getting 45 fps at 4K max settings with DLSS set to Quality, frame generation off and Reflex set to plus mode. I remember playing Crysis 1 like this on my 8800 Ultra. There is definitely a learning curve with the 2.0 patch, which changed all the buffs and in-game upgrade paths. I will be replaying this game from the beginning once the hardware catches up, because of the different play styles you can branch off to.

nicoff

Posts: 8 +2

Hi.

I splurged on the new Samsung G9 57". I have a new rig (i9-13000K, 64GB DDR5, pure SSD) which I slotted my 4080 into. Driving the monitor via HDMI 2.1, maxing out at 120Hz.

I think you should start testing with this new high-end monitor purely to test the upscaling technologies with Ray Tracing. I say this because the old excuse of having visible pixels and a hideous desktop experience in order to have a good gaming experience is no longer valid. No need to look at a Mac screen in envy anymore, you can do both.

For reference, I run RT Ultra with DLSS, currently in Auto. Path Tracing without ray reconstruction is not playable, but with ray reconstruction I get 30-40 fps. Not enough to play the game. But I can't convey clearly enough how awesome that looks in dual 4K.

I did experiment with lower, custom resolutions. I've gone back to native res with DLSS.

Like most non-sim games, CP2077 2.0 supports 32:9, but not properly: there is a massive fish-eye effect at the edges of the screen. I run a minimum vertical FOV.

alexnode

Posts: 149 +56

> It's cutting edge, and it's fascinating, but its presence in this game is just as much a marketing exercise to make us think we need a new GPU.

Path tracing and ray tracing are here to stay... AMD will be part of the game soon. The visual potential is amazing.

m3tavision

Posts: 1,439 +1,223

wanna play rasterized games till death?

Tessellation is a default feature now, even for ps4 era games.

You are missing the point....

NVidia is known for lying and making boasts that are just marketing.

You do NOT need an NVidia GPU for tessellation, PhysX, ray tracing, or HairWorks, which are just more marketing gimmicks, like RTX ON.

You don't have to pay for any of it. DX12U has that stuff, NVidia just renames it and tells you ONLY they can do it...

> Path tracing and ray tracing is here to stay ...AMD will be part of the game soon. The visual potential is amazing.

RT is absolutely here to stay and is the future. However, it's also true that it was pushed too hard and way too early. You have to spend a grand on the best GPUs available and then upscale from half your monitor's resolution to maaaaaybe play at 60fps and ignore half your monitor's refresh rate. If that's not a niche of a niche of a niche then I don't know what is.

Someday it will presumably be more manageable though, and then everyone who acted like it's pointless will suddenly turn it on. Funny thing is some games today use it super sparingly, like how RE4 Remake uses it to avoid ugly SSR issues, but then get bashed for using "weak RT."

VcoreLeone

Posts: 289 +146

> DX12U has that stuff, NVidia just renames it and tells you ONLY they can do it...

Can you please link where they said that?

m3tavision

Posts: 1,439 +1,223

> Can you please link where they said that?

You must be real new.... Only RTX ON can do ray tracing... and only RTX ON can do upscaling.... It's what us RTX 20 owners were told. Remember...? Only to find out that nothing in the RTX 20 series pseudo-hardware actually mattered; you only had to have a compatible DX12U card, etc...

Again, just like the BS crap about how great RTX 40 is... that its "super-special hardware" supports this new thing called Frame Generation...!

Only to find out DLSS 3 & frame-gen was a major marketing blooper and a lie.

Thank you AMD.... for supporting Industry Standards.

VcoreLeone

Posts: 289 +146

> only RTX ON can do ray tracing... and only RTX ON can do upscaling.... it's what us RTX20 owners were told.

If that was told to RTX 20 owners, I'd say Nvidia were correct.

> Thank you AMD.... for supporting Industry Standards.

Developed by Microsoft and Nvidia.

m3tavision

Posts: 1,439 +1,223

So you are new to the dGPU scene. And you don't remember Jensen Huang walking on stage talking about RTX ON... or why, years later, most gamers have caught on to NVidia's marketing gimmicks... and that their hardware doesn't offer any more performance, just moAr gimmicks.

As for the industry standard and the gaming industry standard.... both are centered around AMD's RDNA architecture, which was engineered from the ground up for gaming.

RTX is just a blip compared to AMD's vast RDNA technologies that make up the entire gaming market. Again, because RDNA was 100% engineered for gaming and can scale to any size, its architecture is not riddled with gimmicks.

RDNA:

- 75 million PlayStation 5 & counting (who may have sold over $168B in games so far)

- 45 million Xbox Series X/S with all those Game Developers.....

- 3 million+ SteamDecks sold & a slew of new Handhelds .....

- RDNA is roughly 50% of the dGPUs sold (for gaming) in the last 2 years.

NVidia developed marketing and hoped people wouldn't notice Ada Lovelace isn't for gaming. That is why content creators, soft enterprise and professionals boosted 4090 sales above the 4080 & 4070 Ti combined. Why spend $3,200 for an RTX Pro card when you can snatch up a 4090 for less than half...?

CUDA isn't for gaming, RDNA is...

VcoreLeone

Posts: 289 +146

> AMD's vast RDNA technologies that make up the entire gaming market

Can I have some of what you're smoking?

> RDNA:
>
> - 75 million PlayStation 5 & counting (who may have sold over $168B in games so far)
>
> - 45 million Xbox Series X/S with all those Game Developers.....
>
> - 3 million+ SteamDecks sold & a slew of new Handhelds .....
>
> - RDNA is roughly 50% of the dGPUs sold (for gaming) in the last 2 years.

Here's the forum you should register on, this one is for PC enthusiasts.

> CUDA isn't for gaming, RDNA is...

Last time I checked HUB reviews, the CUDA cores on my 3080 run almost as fast as the 7900 GRE does in gaming. That's a 3-year-old, last-gen, 5th-from-the-top Ampere SKU vs. a new-gen, 3rd-from-the-top RDNA3 SKU.

m3tavision

Posts: 1,439 +1,223

> Last time I checked HUB reviews, the cuda cores on my 3080 run just as fast as 7900gre does in gaming.

Are those counterpoints..? Or just throwing salt because I make valid points and went from EVGA cards to a Radeon brand, because Radeon technology has far superior price/performance and my old RTX 2080 running in an old machine gets FSR3 too...

After being told by Jensen Huang that I needed to buy at least an RTX 4070 to get the benefits of FSR3....

I don't think anybody really cares about your $1,300 3080... when the 7800xt is $500 bucks.

VcoreLeone

Posts: 289 +146

> I don't think anybody really cares about your $1,300 3080...

Bought the 3080 used for 389 EUR actually (it was 700 EUR on the 2021 invoice); the 6800 was 400 EUR (1,250 EUR on the 2021 invoice). Both bought after the boom.

I kept the 6800 in case FSR3 is good, but so far what we have:

- It doesn't run with VRR on (automatic failure).

- IQ is still worse, as the FSR3 upscaler is still worse than DLSS Super Resolution.

So I'll sell it, just not now, when another shortage is here. Price can only go up; all the 7700 XT/7800 XT did here in the EU is raise 6800/XT prices by 50 EUR.

And yes, CUDA cores run games, ask 88% of dGPU owners.

m3tavision

Posts: 1,439 +1,223

Understood^

Now go talk to a real 3080 owner, who didn't get to stand in line and paid $1,300~$1,800 for their RTX3080...

And then you'll start to understand how you weren't bitten by Jensen Huang's words as badly as many of my friends, clan members and co-workers... who believed! You wouldn't be so dismissive of NVidia's situation, nor such a cheerleader, if you had bought new.

Lastly, upscaling is not meant for new cards; it is a software technology used on older cards when upgrading to a newer resolution (i.e. a GTX 1080 Ti owner moving from 1080p to a new 1440p monitor can turn on upscaling... and get relatively the same performance on their new monitor as before).

Logic dictates: if someone spends $500+ on a new dGPU (from 6 years ago), why in their mind would they think about upscaling... when they can do that on their old card...?

VcoreLeone

Posts: 289 +146

> Understood^
>
> Now go talk to a real 3080 owner, who didn't get to stand in line and paid $1,300~$1,800 for their RTX3080...

Like the one I picked up the card from, who literally paid MSRP?

> Lastly, upscaling is not meant for new cards

Of course it is. Playing at 1920p DLDSR + DLSS Balanced in every non-RT game here blows my native 1440p to pieces in terms of quality and runs the same.

Meanwhile, VSR + FSR is not only worse in still shots, but in real time has tons of shimmer. Still has more detail than native 1440p though.

If someone is getting a new card and playing 1080p native instead of dlss balanced at 1440p, they are stupid. Losing performance and quality at the same time.
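
To put rough numbers on the DLDSR + DLSS combination described above, here is a back-of-the-envelope sketch. It assumes a 2560x1440 native panel, a 4/3 per-axis DLDSR factor (the nominal "1.78x" pixel-count mode) and a 0.58 per-axis render scale for DLSS Balanced. All names in the code are hypothetical, and it only computes resolutions; it says nothing about actual frame rates or image quality.

```python
# Assumptions (not taken from the thread): 2560x1440 native panel,
# DLDSR "1.78x" modelled as 4/3 per axis, DLSS Balanced as 0.58 per axis.
NATIVE = (2560, 1440)
DLDSR_AXIS_FACTOR = 4 / 3
DLSS_BALANCED_AXIS_SCALE = 0.58


def scale(resolution: tuple[int, int], factor: float) -> tuple[int, int]:
    """Scale both axes of a resolution by `factor`, rounding to whole pixels."""
    width, height = resolution
    return (round(width * factor), round(height * factor))


def pixels(resolution: tuple[int, int]) -> int:
    """Total pixel count of a resolution."""
    return resolution[0] * resolution[1]


# Resolution the game outputs to before DLDSR downsamples it to the panel.
dldsr_target = scale(NATIVE, DLDSR_AXIS_FACTOR)

# Internal resolution DLSS Balanced renders at to reach that target.
internal = scale(dldsr_target, DLSS_BALANCED_AXIS_SCALE)

# Internal pixel count relative to rendering natively at 1440p.
ratio = pixels(internal) / pixels(NATIVE)

print(f"DLDSR target:    {dldsr_target[0]}x{dldsr_target[1]}")
print(f"DLSS internal:   {internal[0]}x{internal[1]}")
print(f"Internal pixels: {ratio:.0%} of native 1440p")
```

Under these assumptions the GPU shades fewer pixels than native 1440p before the upscale and downsample passes are added on top, which is at least consistent with the "runs the same" observation.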

m3tavision

Posts: 1,439 +1,223

We understand that YOU bought used & didn't pay full price, I was asking why are you so dismissive of all those who did...? Someone in my clan bought a Watercooled Asus 3080 for $1,799 just 15 months ago....

He can laterally upgrade to a 7800xt for $500 and use less energy and get the same frames in COD.

Lastly, your scenario of "someone getting a new card and playing at 1080p, instead of dlss balanced" doesn't make any sense.... at all. (It's an illogical notion and perhaps not what you meant..?)

Upscaling technology in games is for when your old GPU card, can't match your new TV/Monitor display rates. SO you use upscaling.

But when you buy a new GPU why would you have to use upscaling..? Isn't that the reason for buying a new GPU, to get away from the upscaling you are currently using in your older GTX/RTX cards?

VcoreLeone

Posts: 289 +146

> We understand that YOU bought used & didn't pay full price, I was asking why are you so dismissive of all those who did...? Someone in my clan bought a Watercooled Asus 3080 for $1,799 just 15 months ago....
>
> He can laterally upgrade to a 7800xt for $500 and use less energy and get the same frames in COD.

Upgrade what? A 3080 to a 7800 XT? For 1%?

And why are you dismissive of those who bought 6800s for 1,300 EUR? Both parties were screwing us the same way.

> Lastly, your scenario of "someone getting a new card and playing at 1080p, instead of dlss balanced" doesn't make any sense.... at all.

Check for yourself, report back.

> Upscaling technology in games is for when your old GPU card, can't match your new TV/Monitor display rates. SO you use upscaling.

Not just that, it mixes perfectly with DLDSR downsampling.

I can tell you never even thought of using DLDSR + DLSS that way, and are just sour that AMD can only offer outdated VSR and inferior FSR in response.

Ultraman1966

Posts: 194 +116

RT is awesome and should be on any game that wants to have realistic lighting. If you don't like it, keep it off and enjoy. Play the game your way, wireframe if that's your jam!

Joey Rakas

Posts: 40 +34

Definitely a title where Nvidia cards enjoy an impressive lead, especially with RT enabled. No doubt Nvidia reigns supreme here. I'm still sticking to my guns here, that Ray Tracing in itself is not the answer. I've personally only used Ray Tracing in a few titles on my 6900 XT, and visually speaking, I just don't see the hype, especially given the performance impact (especially on AMD hardware). Comparing my own results to those of others, all show similarities. Visually speaking it's not like you're getting some hidden revolutionary graphics setting that makes things look 10x better, such as what you get from going from low texture settings to high, or the difference between no anisotropic filtering and 16x anisotropy. Both of those can make immense visual differences that even a novice can notice. And they also don't halve your framerate.

Thank you for the article, TS. Definitely a lot of hard work went into this. With how much division there is on the topic of ray tracing, I can imagine it kind of "muddies the water" when doing a big write-up like this, but it is solid content: regardless of which side of the fence someone is on, the consumer can see what to expect out of said hardware for this particular title, and what's worth it to them.

Frame Generation. Turn that **** on if you have the card. All this talk about 'fake frames' is just laughable, and there is no latency either. You say my 4070 only gets 32fps @ 1440 Ultra w/ RT, but I get about 130fps with FG on and there is literally no noticeable difference. If you have to pause the game and go frame-by-frame to point it out, then it doesn't matter, because your eyes don't see frame-by-frame, so please people, stop being stupid. Also, my MSI 4070 Ventus 3X has no overheating issues, even though some derp donkeys out there thought that having the heatsink off one of the chips would cause an issue. It hasn't in like half a year so far, and my gaming is sublime.
