Holy cow, for a website that targets PC enthusiasts and publishes such detailed, in-depth write-ups on GPUs, it appears most of you just skip over how GPU architectures work and only look at the FPS graphs.

"hahahanoobs said:

What the...? Doesn't Tegra 4 have more shader cores than this?"

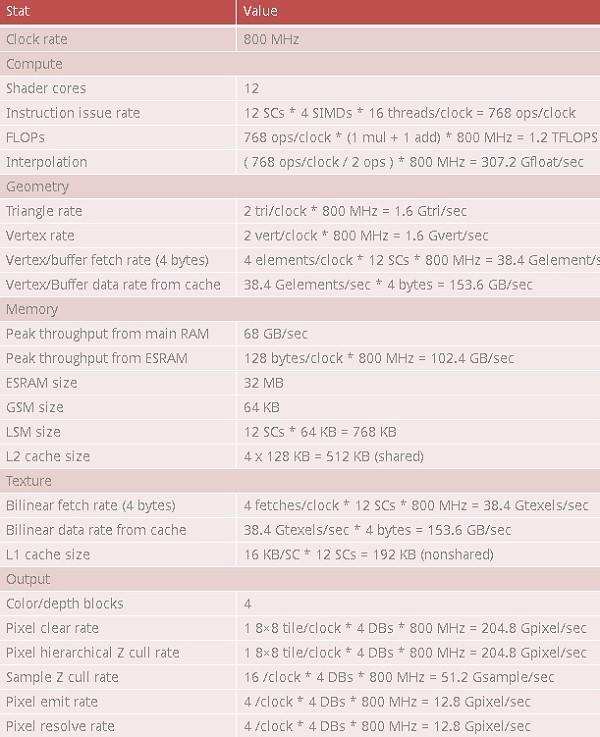

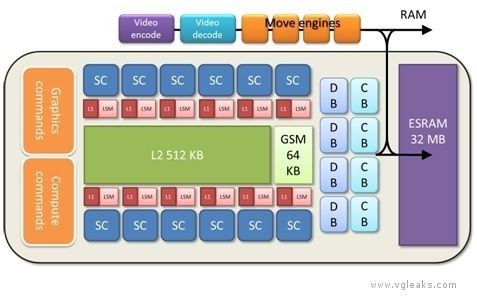

No, the "SC" in this case is used interchangeably with the term Compute Unit. HD7000 is made up of CUs (or in this case they labelled it a Shader Core unit), each comprising 64 Stream Processors. 12 CUs (or "SC") equates to 12 x 64 = 768 Stream Processors. Clocked at 800MHz, that gives a theoretical floating point throughput of 1.23 Tflops. In comparison, HD7770 is made up of 10 CUs, or 640 SPs, clocked at 1GHz, for 1.28 Tflops of floating point performance.
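For anyone who wants to check the arithmetic, here is a quick Python sketch. It assumes the usual convention of 2 floating point operations per Stream Processor per clock (one multiply-add); the function name `theoretical_tflops` is made up for illustration and the result is a paper number, not game performance.

```python
def theoretical_tflops(compute_units, clock_mhz, sps_per_cu=64, flops_per_sp_per_clock=2):
    """Peak single-precision throughput in Tflops (theoretical only).

    Assumption: each Stream Processor retires one multiply-add
    (counted as 2 flops) per clock.
    """
    stream_processors = compute_units * sps_per_cu
    flops = stream_processors * flops_per_sp_per_clock * clock_mhz * 1e6
    return flops / 1e12


# Figures from the post: 12 CUs at 800MHz vs. HD7770's 10 CUs at 1GHz.
xbox = theoretical_tflops(12, 800)
hd7770 = theoretical_tflops(10, 1000)

print(f"Rumored Xbox GPU: {xbox:.2f} Tflops")
print(f"HD7770:           {hd7770:.2f} Tflops")
```

The two results land within a few percent of each other, which is why the HD7770 is the natural reference point for this GPU.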

"St1ckM4n said: I dunno, I wasn't really expecting much. To be quite honest, I don't see how they can make anything more powerful than a mid-range laptop GPU. There's just too much heat to be dispersed."

They could. Wimbledon XT (HD7970M) is an HD7870 downclocked to 850MHz, with a 100W TDP.

http://www.notebookcheck.net/AMD-Radeon-HD-7970M.72675.0.html

"Fat" launch PS3 used roughly 200-240W of power in games (http://www.gamespot.com/features/green-gaming-playstation-3-6303944/)

That leaves plenty of room for a 65-95W TDP CPU, whether a quad-core A10-5700/6700 APU, or an FX8300 95W part, with reduced clocks (or a Jaguar 8-core if MS is cheap).

" Littleczr said: 32 MB of video Memory? Even with gimmicks, it's still bad. My gtx 560 has 2gb of video memory and you can get it for $200 dollars."

32MB of eSRAM has nothing to do with the overall video memory of a GPU. The comparison to your GTX560 is meaningless. This eSRAM is used for color, alpha compositing, Z/stencil buffering and can also be used to reduce the performance hit of anti-aliasing. Xbox 360's Xenos GPU used 10MB of eDRAM, which is completely separate from the 512MB of GDDR3 the GPU shared with the system. The Xbox 720 GPU is rumored to be using 8GB of shared DDR3 system memory for actual VRAM.

http://en.wikipedia.org/wiki/EDRAM
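To give a feel for why a few tens of MB is the right scale for this kind of buffer, here is a rough Python sketch of render target sizes at 1080p. The formats are my assumptions, not anything from the leak: uncompressed 32-bit color and 32-bit depth/stencil (4 bytes per pixel each), with MSAA simply multiplying the storage. Real engines use other formats, tiling and compression, so treat it as a ballpark only.

```python
MIB = 1024 * 1024


def render_target_mib(width, height, bytes_per_pixel=4, msaa_samples=1):
    """Size of one uncompressed render target in MiB (assumed format)."""
    return width * height * bytes_per_pixel * msaa_samples / MIB


def color_plus_depth_mib(width, height, msaa_samples=1):
    """One 32-bit color buffer plus one 32-bit Z/stencil buffer (assumption)."""
    color = render_target_mib(width, height, 4, msaa_samples)
    depth = render_target_mib(width, height, 4, msaa_samples)
    return color + depth


for samples in (1, 2, 4):
    size = color_plus_depth_mib(1920, 1080, samples)
    print(f"1080p color + Z/stencil, {samples}x MSAA: {size:.1f} MiB")
```

Under those assumptions a 1080p color + Z/stencil pair fits in 32MB with room to spare, which is the job this memory is there for. It is a scratchpad for the frame being rendered, not a replacement for VRAM.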

"TomSEA: You know, I wouldn't expect I-7 3930k / GTX 690 type numbers, but these figures don't even add up to a low/mid-range system from 2 years ago. "

Sure they do. A 12 Compute Unit (768 SPs) HD7000 GPU clocked at 800MHz with 68 GB/sec of memory bandwidth is a close sibling of the 10 CU (640 SPs) HD7770 clocked at 1GHz with 72 GB/sec of memory bandwidth. That's a modern low-end GPU, not one from 2 years ago.

http://www.gpureview.com/show_cards.php?card1=675&card2=
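If you want to see where bandwidth figures like these come from, here is a small Python sketch of the standard formula (bus width in bytes times effective data rate). The HD7770 inputs are its 128-bit bus at 4.5 Gbps effective GDDR5. The console inputs are purely an assumption on my part: a 256-bit DDR3-2133 interface is one configuration that works out to roughly 68 GB/sec, but the bus width and memory speed are not stated in this post.

```python
def bandwidth_gb_s(bus_width_bits, data_rate_mtps):
    """Peak memory bandwidth in GB/sec.

    bus_width_bits: width of the memory interface in bits
    data_rate_mtps: effective transfer rate in mega-transfers per second
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_mtps * 1e6 / 1e9


hd7770 = bandwidth_gb_s(128, 4500)         # 128-bit GDDR5 at 4.5 Gbps effective
console_guess = bandwidth_gb_s(256, 2133)  # hypothetical 256-bit DDR3-2133

print(f"HD7770:                 {hd7770:.1f} GB/sec")
print(f"Assumed console config: {console_guess:.1f} GB/sec")
```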

"TheinsanegamerN said: Honestly....this is kinda disappointing. texture fill rate of this is 38.4 Gtexles. for reference, the geforce gtx 650 ti has a 59.3 Gtexel fill rate. also, the gtx 650 ti has an extra 200 GFlops of processing power. essentially, the xbox 360 sucessor is already outdone by nvidia's lowest end GTX gpu"

You cannot directly compare texture fillrate (or anything theoretical really) between AMD's and NV's GPUs. In fact you can't even compare these metrics between AMD vs. AMD or NV vs. NV unless you are discussing the same architecture. For example, you cannot compare the theoretical pixel fill-rate of HD6970 to HD7970. Both have 32 ROPs and HD7970 only has a 5% GPU clock advantage, yet it has nearly a 50% pixel fillrate advantage in the real world.

http://www.anandtech.com/show/5261/amd-radeon-hd-7970-review/26

Similarly, HD7970GE is only 10-11% faster than a GTX680 despite a 50% memory bandwidth advantage. I am not going to go into the details of why, but you cannot compare theoretical numbers on paper between different GPU architectures, especially not across NV and AMD. At best you can do so with some accuracy across all HD7000 cards or across all GTX600 cards, but not interchangeably.

For example, GTX650Ti has a near 50% texture fill-rate advantage over HD7770 (http://www.gpureview.com/show_cards.php?card1=675&card2=682), but in real world games it is just 13% faster at 1080P:

http://www.computerbase.de/artikel/grafikkarten/2012/test-vtx3d-hd-7870-black-tahiti-le/4/

Based on the specs, a GTX650Ti would be slightly faster than the GPU in the Xbox 720. It's possible MS decided to keep costs down. Supposedly the GPU in PS4 is a Liverpool-based HD7970M with 18 Compute Units and 1152 SPs, for 1.84 Tflops of floating point performance, which on paper is roughly 50% faster than the rumored Xbox 720 GPU. If you want a more powerful console, pay more attention to PS4's specs. Consoles won't have high-end GPUs due to high costs and the fact that something like a GTX690 uses nearly 300W of power.

"St1ckM4n said: And exactly which DX11 features will be able to be run after a resolution of 1080p? There's no power left to run tessellation etc.."

You are not the target market for next generation consoles. If you want the best graphics with tessellation, stick to PCs.

Considering the PS3 and 360 don't render most games above 1280x720 at 30 fps, moving to 1080P with some DX11 effects will be a huge step up for console gamers. Even games like Black Ops 2 or Uncharted 3 only run at 880x720 / 896x504 resolutions:

http://forum.beyond3d.com/showthread.php?t=46241
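To put the resolution jump in numbers, here is a trivial Python sketch comparing pixel counts per frame for the resolutions mentioned above. It only counts pixels; it says nothing about shader cost per pixel, so it is a lower bound on how much extra work 1080P is, not a performance estimate.

```python
def pixel_count(width, height):
    """Number of pixels rendered per frame at a given resolution."""
    return width * height


TARGET = pixel_count(1920, 1080)

# Resolutions mentioned in the post.
current_gen = {
    "1280x720": (1280, 720),
    "880x720": (880, 720),
    "896x504": (896, 504),
}

for name, (w, h) in current_gen.items():
    ratio = TARGET / pixel_count(w, h)
    print(f"1080P is {ratio:.2f}x the pixels of {name}")
```

1080P is 2.25x the pixels of 720P, and more than 3x the pixels of the sub-720P resolutions, so just hitting native 1080P already eats a big chunk of the extra GPU power.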

"Amstech, TechSpot Enthusiast, said: I see the new PS4 getting a Nvidia GPU like the PS3, only stronger obviously with 2GB VRAM and similar results for the new xbox..."

Little to no chance. PS4 is likely going to be running on Linux. As a result developers will want the flexibility of using the fastest GPU architecture for DirectCompute, OpenCL and OpenGL. That leaves only 1 choice - AMD's HD7000 series (or HD8000 series refresh). With games like Bioshock Infinite and Tomb Raider joining Sleeping Dogs, Dirt Showdown, Sniper Elite V2 and Hitman Absolution, and with 20nm Maxwell and the HD9000 series focusing even more on compute, the industry is going to use more and more GPGPU functions to accelerate graphical effects such as HDAO, contact hardening shadows, etc.

http://videocardz.com/39236/amd-nev...ith-tomb-rider-crysis-3-and-bioshock-infinite

More importantly, MS/Sony are trying to keep costs down, since the market is unlikely to bear $500-600 consoles as was the case with PS3, so price/performance becomes a critical factor. Right now GTX680M is just 5% faster than HD7970M when tested with recent drivers but costs $330-400 more. Since Enduro vs. Optimus functionality is completely irrelevant for consoles, mobile AMD GPUs offer by far the better price/performance for consoles:

"Thanks to the enormous lead in the last three titles, the Nvidia GPU is more or less 5 % ahead of the AMD card - a negligible difference. With regards to costs, however, the performance of the Radeon HD 7970M is truly impressive as the 680M can run users $400 USD more than the Radeon. Nvidia's high-end graphics card continues to have very poor value per dollar."

http://www.notebookcheck.net/Review-Update-Radeon-HD-7970M-vs-GeForce-GTX-680M.87744.0.html

That makes an NV GPU inside PS4 almost a non-starter assuming Sony wants to keep the MSRP close to $400-450.

"MrBungle said: My memory is a bit fuzzy since it was so long ago but isn't 1.2TFLOPS roughly equivalent to a 9800GTX?"

No, a 9800GTX is only 0.432 Tflops:

http://www.gpureview.com/show_cards.php?card1=559&card2=

Regardless, you cannot compare theoretical Tflops between different GPU architectures and apply it to games. Case in point:

HD7970GE is about 10-11% faster than a GTX680 despite having a 33% floating point performance advantage:

http://www.gpureview.com/show_cards.php?card1=667&card2=679

GTX680 has a 95% Tflops advantage over GTX580 but is only 35-40% faster on average:

http://www.gpureview.com/show_cards.php?card1=667&card2=637
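Here is a small Python sketch that lines up the paper advantages quoted in this post against the measured ones, to show how little of the theoretical gap shows up in games. All percentages are the ones cited above; where I gave a range (10-11%, 35-40%) the sketch uses the midpoint, which is my simplification. The name `realized_fraction` is made up for illustration.

```python
# (label, paper advantage in %, measured advantage in %)
comparisons = [
    ("HD7970GE vs GTX680 (Tflops)", 33, 10.5),             # midpoint of 10-11%
    ("GTX680 vs GTX580 (Tflops)", 95, 37.5),               # midpoint of 35-40%
    ("GTX650Ti vs HD7770 (texture fill rate)", 50, 13),
]


def realized_fraction(paper_pct, measured_pct):
    """Share of the on-paper advantage that shows up in real games."""
    return measured_pct / paper_pct


for label, paper, measured in comparisons:
    share = realized_fraction(paper, measured)
    print(f"{label}: {paper}% on paper, {measured}% measured "
          f"({share:.0%} of the paper gap)")
```

In all three cases less than half of the paper advantage turns into real performance, and the share is different every time, so there is no fixed conversion factor you can apply across architectures.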

The only useful thing you can do with the above specs is find a similar HD7000 card and then look up its performance in this chart:

http://alienbabeltech.com/abt/viewtopic.php?p=41174

The GPUs in PS3/360 are only at the 12-14 VP level on that chart at best. If Xbox 720 has a GPU similar to HD7770, it will be at least 5x faster. If PS4 has a slightly downclocked/neutered HD7970M with 18 Compute Units, with performance around HD7850 2GB, then it will be 9-10x faster than PS3's RSX.