We compare the GeForce RTX 4070 head-to-head with the more affordable RTX 4060 Ti 16GB. Recently both GPUs have seen price cuts, so which is the better buy and at what price target?

https://www.techspot.com/review/2739-geforce-4070-vs-4060-ti/

> As Steve mentioned in one of the HUB videos, they will focus a bit less on productivity benchmarking... Puget found the 4070 generally 30-50% faster than the 4060 Ti 8GB with NLE video editors, and 33% faster in Blender. The really odd thing they found was that the 3060 Ti actually outperformed the 4060 Ti in several productivity benchmarks, which one could assume is due to the 4060 Ti's narrower memory bus, and possibly wouldn't be addressed by the higher-VRAM version.
>
> The Radeon 7000 series also appears to have made dramatic inroads toward parity with Nvidia in productivity (video encode/decode, Topaz AI, etc.), so IMHO it's turning into a two-horse race between the RX 7800 XT and RTX 4070 for jack-of-all-trades system builds. Just need someone to do formal 7800 XT PugetBench runs.

It's not odd; the 4060 is a rebranded mobile chip. Nvidia are scumbags. The 3060 is a better productivity chip because it has a wider memory bus. The idea that the 4060 has a 128-bit bus is a joke, and I'm not laughing.
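For a rough sense of what the memory-bus argument means in numbers: peak memory bandwidth is the bus width in bits divided by 8 (bytes moved per transfer) times the per-pin data rate. The sketch below plugs in assumed reference-card memory specs (for example 128-bit at 18 Gbps for the 4060 Ti and 256-bit at 14 Gbps for the GDDR6 3060 Ti); partner cards and other memory variants can differ, and it only covers raw DRAM bandwidth, not cache.

```python
# Peak DRAM bandwidth from bus width and per-pin data rate.
# The spec values in CARDS are assumed reference-card figures, not measurements.


def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Return peak memory bandwidth in GB/s.

    bus_width_bits / 8 gives the bytes moved per transfer across the whole bus;
    multiplying by the per-pin data rate (Gbit/s per pin) yields GB/s.
    """
    return bus_width_bits / 8 * data_rate_gbps


# (bus width in bits, effective data rate in Gbps): assumed reference specs
CARDS = {
    "RTX 3060 12GB": (192, 15.0),
    "RTX 3060 Ti (GDDR6)": (256, 14.0),
    "RTX 4060 Ti 8GB/16GB": (128, 18.0),
    "RTX 4070": (192, 21.0),
}

if __name__ == "__main__":
    for name, (bus, rate) in CARDS.items():
        print(f"{name:22s} {bus:3d}-bit  {peak_bandwidth_gbs(bus, rate):6.1f} GB/s")
```

On these assumed figures the 4060 Ti comes out with the least raw bandwidth of the four (288 GB/s versus 360 GB/s for the 3060 and 448 GB/s for the 3060 Ti), which is consistent with the bus-width explanation, though it ignores the much larger L2 cache on the 40-series cards.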

This is even worse than I thought... My idea was that the current 4060 is a 4050 going by specs and performance. If this is a mobile part, then it's lower than a desktop 4050.

Yes, one has to tread carefully when considering a 4060 Ti purchase, because the performance gains and losses are very application-specific. In this other Puget test, they found that even the non-Ti 3060 consistently outperformed the 4060 Ti in DaVinci Resolve and in some After Effects tasks. In short, I can't think of any use case that justifies buying a 4060 Ti.

> In case you missed it
>
> https://videocardz.com/newz/neweggs...-rtx-4070-at-499-and-radeon-rx-7800-xt-at-449
>
> Newegg sale on 4070s and 7800 XT.

I love the dual-fan ones, and at $499 it's close, but I believe the 4070 Ti should be the $499 card. And nobody can say it's because the cost of making the cards has gone up. Read up on Nvidia's profit margin.

Hence why I try to shed light on price drops for potential customers. I would argue that's more effective than childish complaints about prices, as if we had any control over the pricing disruption caused by the likes of the crypto-mining craze and now AI compute. I'm not a shareholder, so I don't really bother with profit-margin spreads and target revenue figures, although I hear Team Green is currently getting a 10x ROI on dedicated AI hardware. Now that AMD has learned the smoke-and-mirrors trick that is frame gen, Nvidia's dazzling effects are wearing off, hence the price corrections now coming to fruition in some markets.

Not many people care about Puget when it comes to graphics cards; it's all about gaming. Be happy you have that.

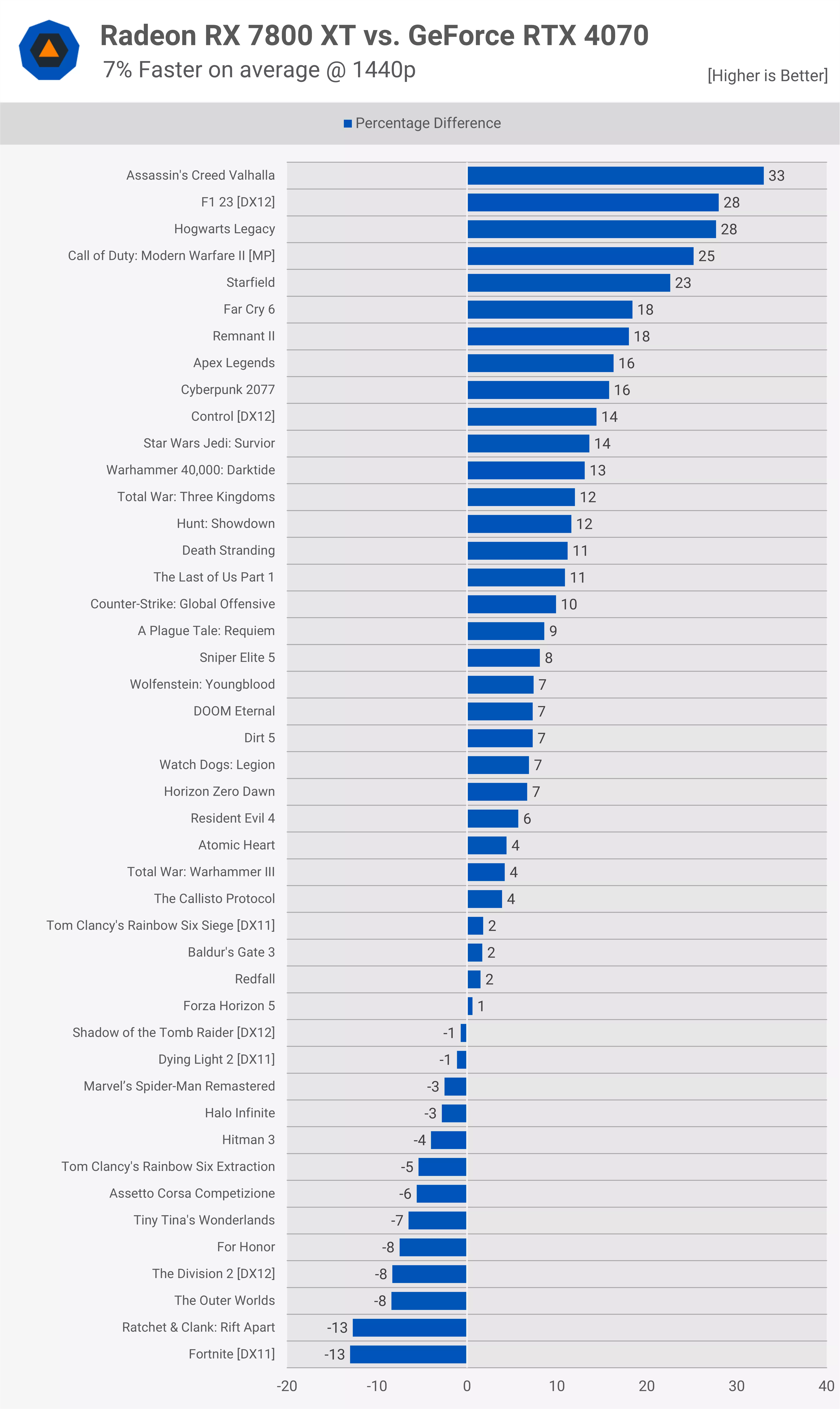

And be stuck with AMD's crappy and always-late upscaling, frame interpolation and ray tracing? Nah, thanks.

If you're in that $500 ballpark range, spend $500 and get a 7800 XT and play games at the same level as (or slightly better than) a 4070 that costs $550.

At least that's what I would do. At that price point I want a GPU that plays games at 1440p, but it also means I don't have $1,000+ to spend on an Nvidia GPU that handles ray tracing better than AMD can while still delivering proper frame rates. Unless you're dropping money on a 7900 XTX or a 4080/4090, using RT is kind of pointless. In the $500 range, you can get a good GPU that plays games well at 1440p.

I also don't give a rip about DLSS or FSR; those features can eat a fat one. So for me, I'd much rather save a few bucks and get a 7800 XT over the 4070: a card with more VRAM that plays games just as well (or better) at 1440p.

To each their own, but.....

I was a big fan of ray tracing, but it just isn't worth the frame-rate hit in every game I play. That said, I'm not going to buy a GPU now that they've gotten this expensive. I'm going to let both AMD and NVIDIA know they are too expensive by not buying.