Update (8/5): Nvidia has clarified a previous statement regarding the availability of its next-gen GPU, codenamed Kepler. In a nutshell, Nvidia won't be shipping any final products using the new silicon this year. "Although we will have early silicon this year, Kepler-based products are actually scheduled to go into production in 2012. We wanted to clarify this so people wouldn’t expect product to be available this year," said Ken Brown, a spokesman for Nvidia. Both AMD's and Nvidia's upcoming GPUs are slated to use a new 28nm process technology, and new Radeon graphics cards will likely reach the market before the end of the year.

Original: We've seen various conflicting reports over the last six months about Nvidia's next-gen GPU launch schedule. It was originally reported that the graphics firm planned to unleash its codenamed "Kepler" (28nm) parts toward the end of this year. That story changed in early July when inside sources cited by DigiTimes claimed that Nvidia tweaked its roadmap due to low yields of TSMC's 28nm wafers, effectively shifting Kepler from 2011 to 2012, while "Maxwell" (22/20nm) cores would be bumped from 2012 or 2013 to 2014.

Apparently, that wasn't entirely accurate. Speaking at the company's GTC Workshop Japan event, Nvidia co-founder Chris Malachowsky reportedly said that Kepler is still (or back) on track for launch later this year. However, Malachowsky was careful with his wording, saying only that the parts would begin "shipping" by the end of 2011. That doesn't necessarily mean you'll be able to buy one in that window: it's fairly common for tech companies to paper launch products months before they're actually available.

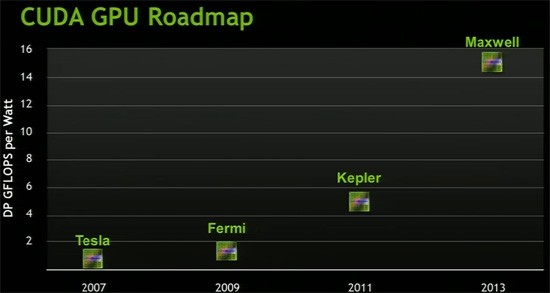

When Kepler does arrive (almost certainly under the GeForce 600 series moniker), it will supposedly deliver a threefold increase in double-precision performance per watt over Fermi, along with being easier for developers to use for GPGPU applications. Since Maxwell is still a few years out, even less is known about it. Based on Nvidia's year-old roadmap, its 22/20nm GPUs are expected to yet again triple Kepler's performance per watt and bring a sixteenfold increase in parallel graphics-based computing.

https://www.techspot.com/news/44951-nvidia-promises-to-ship-next-gen-kepler-gpu-this-year-not.html