Today we're going to take a quick look at how current generation GPUs, along with a few older ones, perform when put to the task of Ethereum mining. Cryptocurrency mining is big news at the moment and it seems just about everyone wants in on the action. We are often asked what the best GPUs to mine with are, and since we didn't have an exact answer we decided to find out.

First, I should note that performance can vary significantly depending on the display driver used, the software client used, and the configuration of the graphics card, so please take this as a rough performance guide.

Initially I started testing with the go-ethereum client but found mixed results with certain GPUs. I wasn't sure why that was and couldn't solve a few issues, so I scrapped the results and moved on. Parity is another popular client, but I decided to use Claymore's Dual Ethereum miner version 9.6 instead, for a few reasons. First, it worked really well with the current-generation AMD and Nvidia GPUs.

Second, I worked out a way to benchmark using different DAG (directed acyclic graph) file sizes. Chances are the other clients can do this as well; I just didn't work out how. I felt it was important to test various DAG file sizes to show future performance as well. As the DAG file size increases, so too does the difficulty. A new DAG is generated for each epoch, which lasts 30,000 blocks, and right now it is estimated that this will happen every 4 days or so. For example, at the start of the month Ethereum was at DAG epoch #126, now we're already over #130, and next month we should hit #140, but don't hold me to that.
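The epoch arithmetic above can be sketched in a few lines. This is only an illustration: the function names are made up for this example, the block height of 3,900,000 is an illustrative value rather than a measured one, and the average block times are assumptions that shift the days-per-epoch estimate.

```python
# Illustrative sketch; function names are hypothetical, not from any mining client.
EPOCH_LENGTH = 30_000      # blocks per DAG epoch, as described in the article
SECONDS_PER_DAY = 86_400


def epoch_for_block(block_number: int) -> int:
    """Return the DAG epoch that a given block height falls in."""
    return block_number // EPOCH_LENGTH


def days_per_epoch(block_time_seconds: float) -> float:
    """Estimate how long one epoch lasts for an assumed average block time."""
    return EPOCH_LENGTH * block_time_seconds / SECONDS_PER_DAY


if __name__ == "__main__":
    # Illustrative block height only.
    print("epoch:", epoch_for_block(3_900_000))
    # Assumed average block times in seconds; the real value drifts over time.
    for block_time in (12.0, 15.0):
        print(block_time, "s/block ->", round(days_per_epoch(block_time), 2), "days per epoch")
```

With these assumptions an epoch lasts somewhere between roughly four and five days, so the "every 4 days or so" estimate sits at the fast end of that range.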

Miners started to notice that as the DAG increased in size, AMD Radeon 400/500 series cards took a performance hit, whereas the GeForce 10 series and older AMD Radeon 200/300 series cards didn't. So this may be bad news for those who snapped up Radeon RX 470/480 and Radeon RX 570/580 graphics cards. As of writing, it isn't clear if this is simply a driver issue or a weakness of the Polaris 10 architecture. Anyway, let's take a look at the results...

Benchmarks

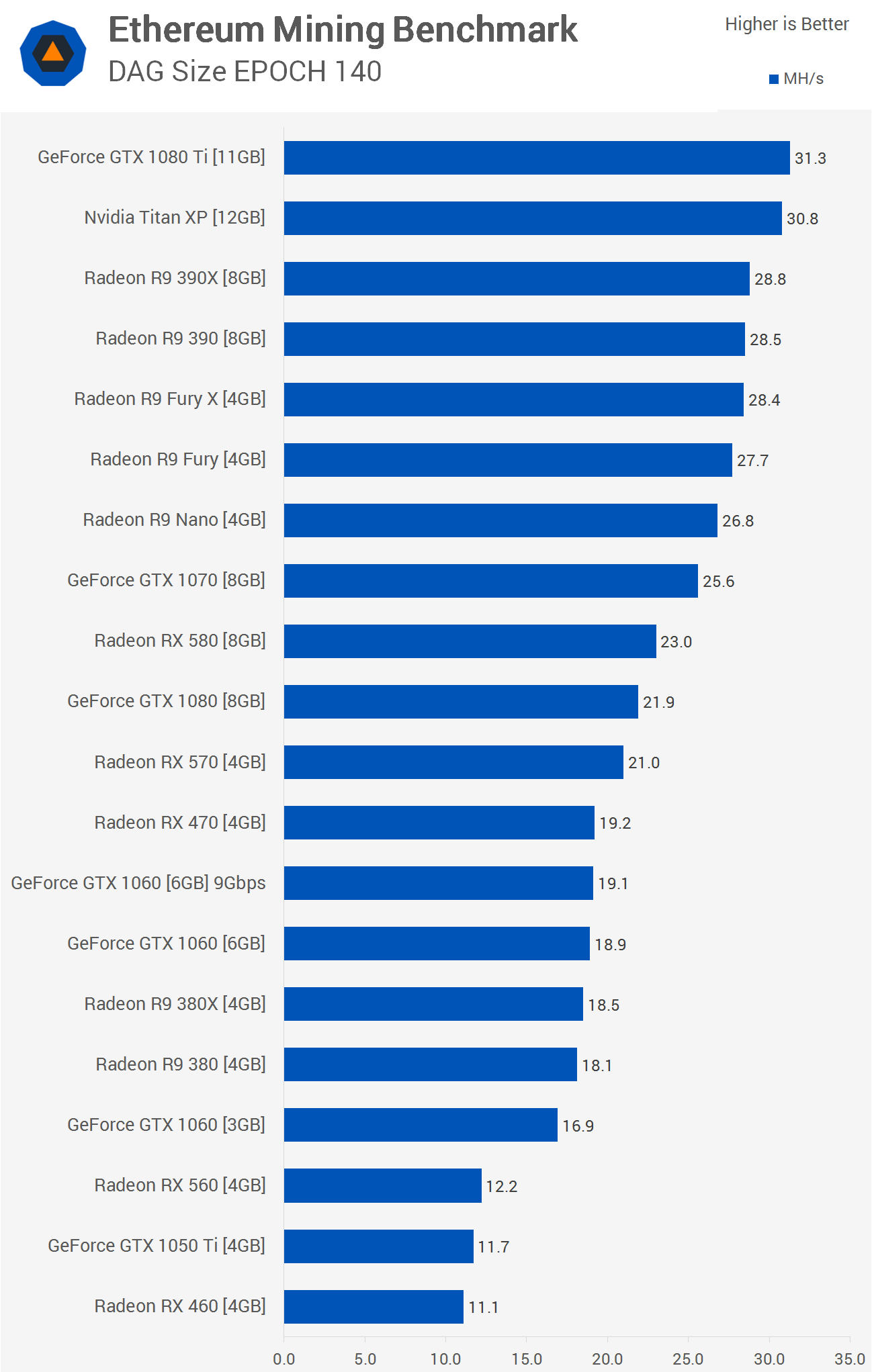

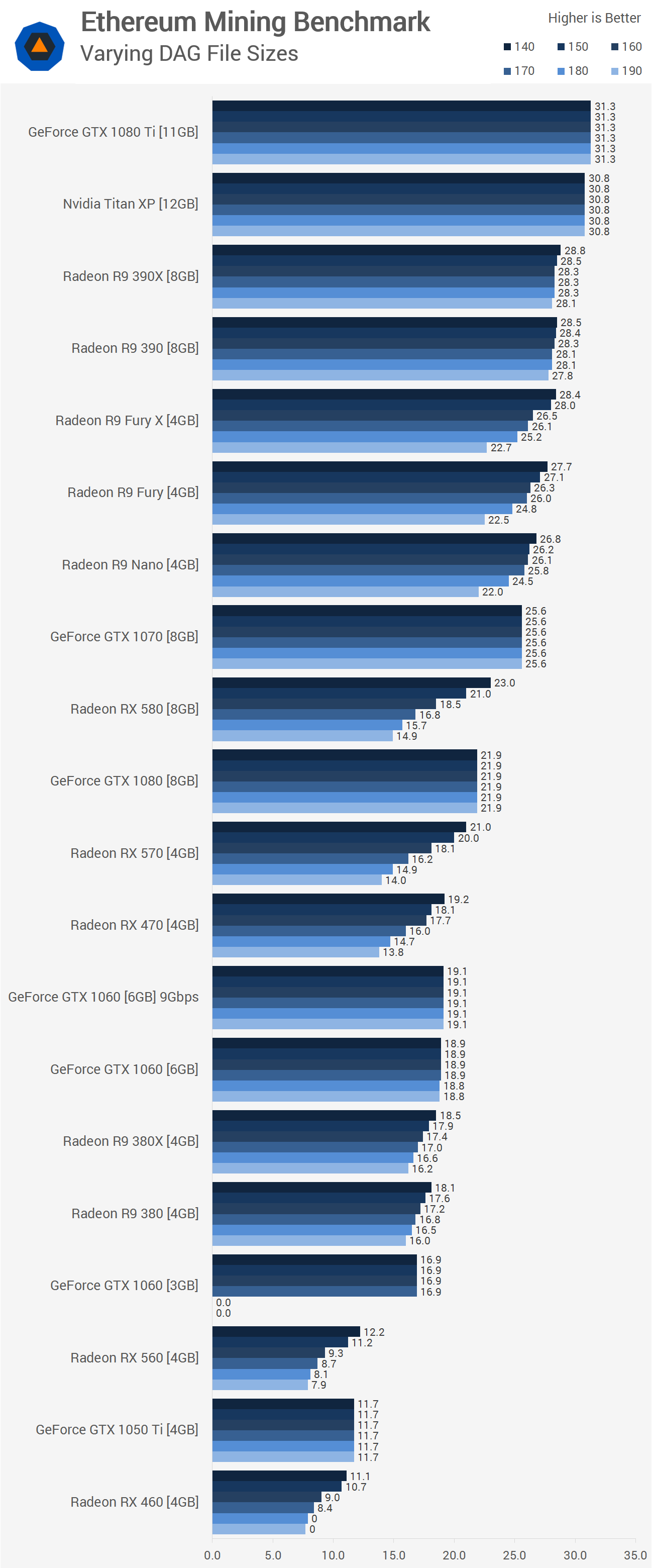

If you're looking at snapping up a few GPUs to mine Ethereum, you're probably not that interested in performance right at this very moment, so I've skipped epoch 130 and started testing at 140. Here the RX 580 still looks good in relation to the GTX 1060 series. In fact, the RX 470 and 570 are offering a similar level of performance, as are the older R9 380 series.

The R9 390 and 390X look great. They aren't much slower than the Titan XP and 1080 Ti. The GTX 1080 tanks though, and surprisingly the GTX 1070 is faster here. Apparently this is down to an issue GDDR5X has when it comes to mining: despite offering more memory bandwidth, it's actually slower for mining. Go figure.

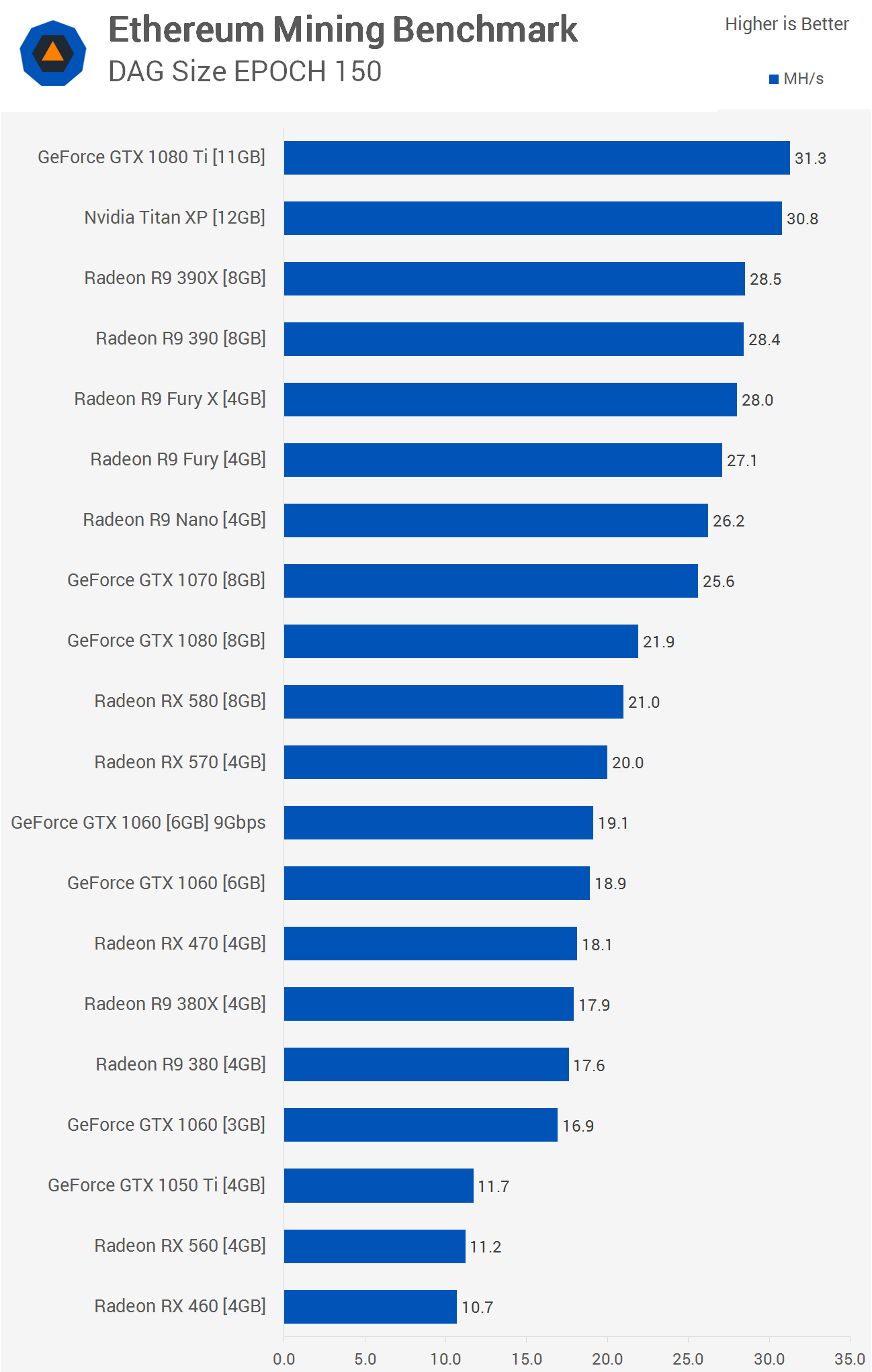

As we move to epoch 150 the RX 580 can be seen falling behind the GTX 1080 as the hash rate decreases by 9%. The same kind of decline can be seen for all RX 400 and 500 series GPUs. Meanwhile the R9 390 series barely sees a change while the GeForce 10 series sees no change at all.

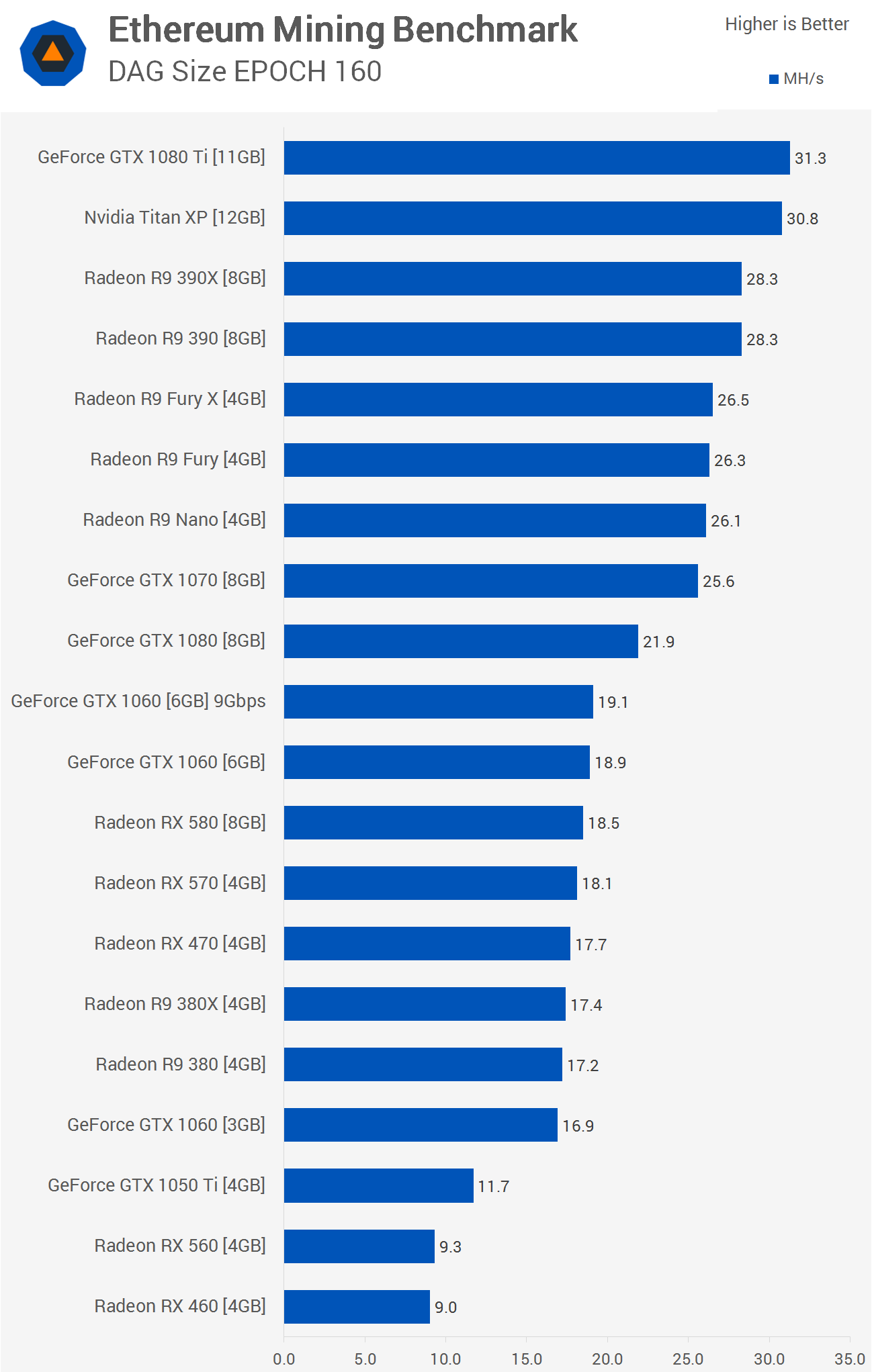

At epoch 160 the RX 500 and 400 series continue to fall away, and now we are seeing a pretty big decline for the Fury series as well. The GTX 1060 is now slightly faster than the RX 580.

Now at epoch 170 the GTX 1060 is a good bit faster than the RX 580, while the R9 Nano, Fury, and Fury X all provide much the same performance.

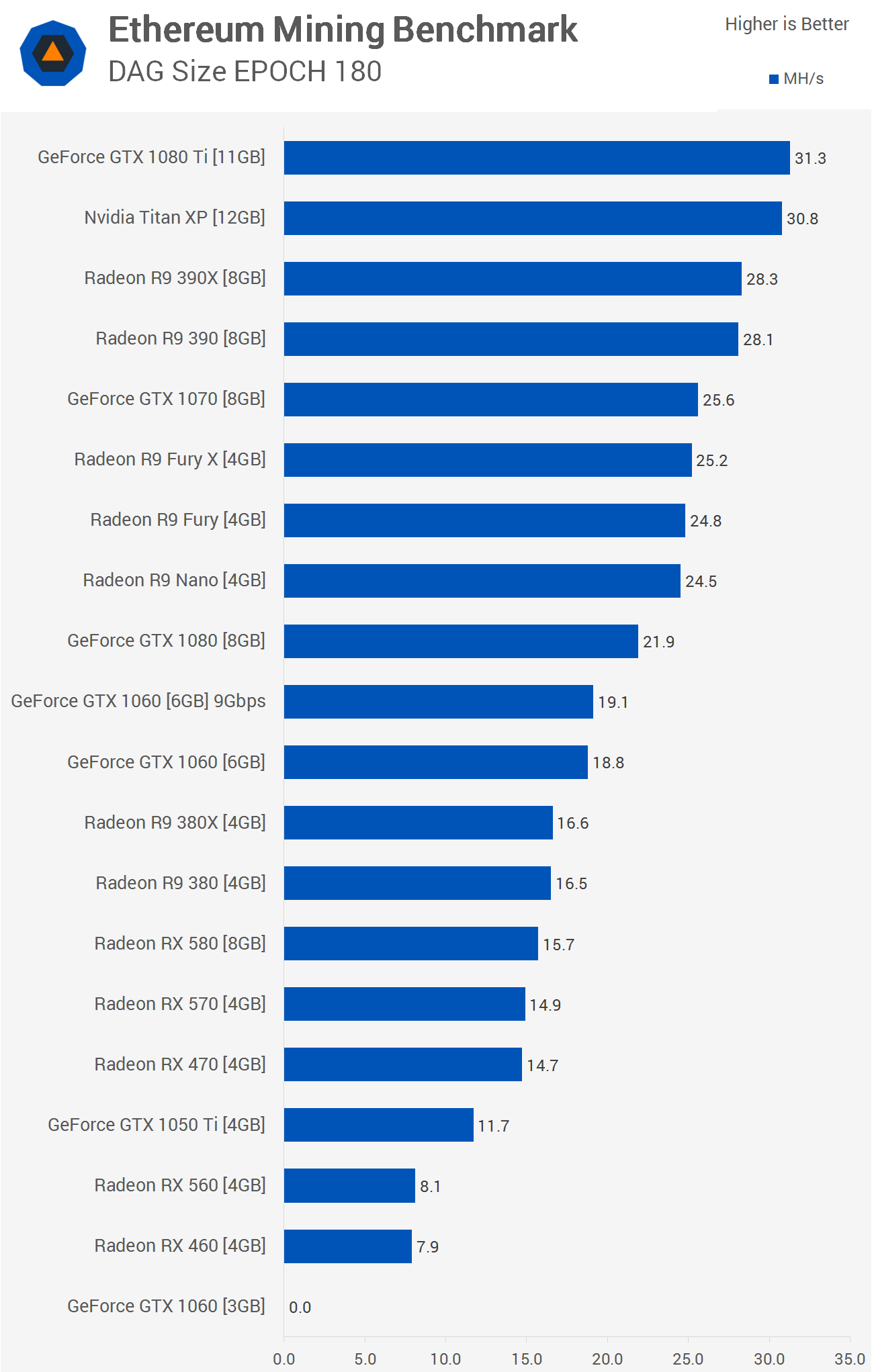

Moving to epoch 180 will see the death of 3GB graphics cards.

The GTX 1060 3GB, for example, no longer has sufficient memory to mine Ethereum.
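To get a feel for how the DAG grows toward the 3GB limit, here is a rough sketch based on the constants in the Ethash specification (a 2^30-byte starting size growing by 2^23 bytes per epoch). It ignores the spec's small downward adjustment of the size, so treat the output as an upper-bound estimate. The function names are made up for this example, and the sketch does not model how much of a card's memory the OS and driver actually leave available to the miner.

```python
# Rough estimate only; function names are hypothetical.
DATASET_BYTES_INIT = 2**30     # starting DAG size from the Ethash spec (1 GiB)
DATASET_BYTES_GROWTH = 2**23   # growth per epoch from the Ethash spec (8 MiB)
GIB = 2**30


def approx_dag_bytes(epoch: int) -> int:
    """Approximate DAG size in bytes, ignoring the spec's prime-size adjustment."""
    return DATASET_BYTES_INIT + DATASET_BYTES_GROWTH * epoch


def approx_dag_gib(epoch: int) -> float:
    """Approximate DAG size in GiB for the given epoch."""
    return approx_dag_bytes(epoch) / GIB


if __name__ == "__main__":
    # The epochs tested in this article.
    for epoch in range(140, 200, 10):
        print(f"epoch {epoch}: ~{approx_dag_gib(epoch):.2f} GiB")
```

The estimate is still under 3 GiB at epoch 180, so the cut-off seen in testing presumably also reflects memory the miner cannot use, though that is a guess rather than something measured here.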

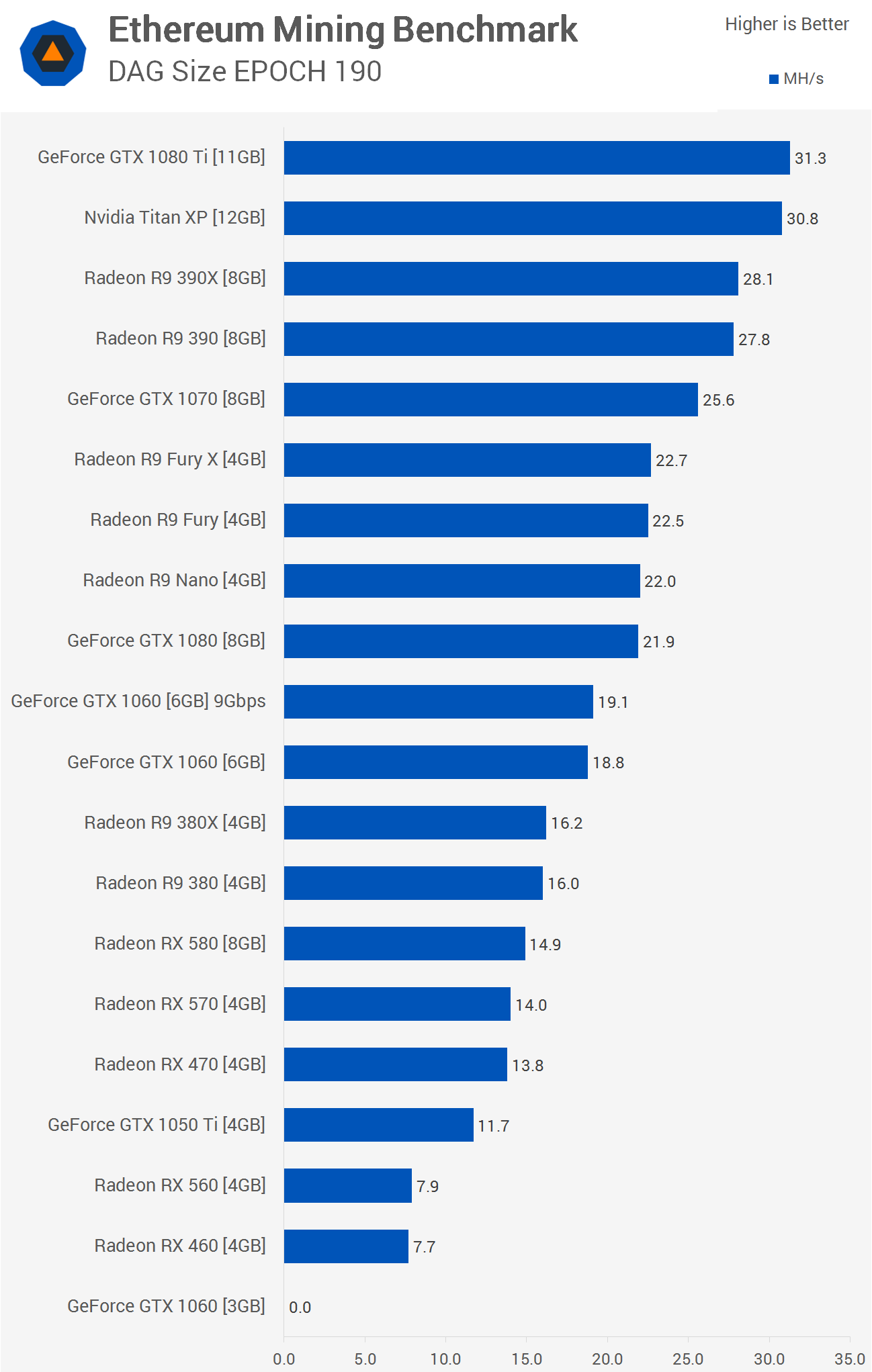

And finally we have epoch 190, way off in the future. Here we see that the GeForce 10 series still retains its original performance while the RX 500 and RX 400 series fall away massively. The older R9 390 series remains strong, however, and still isn't much slower than the Titan X and 1080 Ti.

Here is a look at how the hash rates compare between epoch 140 and 190. As you can see the R9 390 series performance remains much the same while all GeForce 10 cards remain exactly the same, with the exception of the 3GB 1060 which runs out of memory. The once mighty RX 580 sees a massive 35% reduction in performance which is awful news for those that invested in the RX 400 or 500 series for the long haul.

Wrapping Things Up

My brief look into Ethereum mining performance was interesting, but I have to say this whole mining craze just doesn't get me excited. That doesn't matter, though. The goal was to give you an idea of how GPUs compare now and how they should compare well into the future.

The GTX 1080's performance is a tad confusing. Everyone points to the GDDR5X memory as the issue, but no one seems to know why. As a guess, I would say there is some kind of latency issue that impacts mining performance.

As for the RX 500 and 400 series GPUs, memory capacity clearly isn't the issue as the 8GB cards still suffer. I believe the issue is memory bandwidth, at least when comparing the RX 580 to the R9 390. The R9 390 series features a massive 512-bit wide memory bus for a bandwidth of 384 GB/s, while the RX 580 uses a 256-bit wide bus for 256 GB/s. So that could certainly explain the gap between those two GPUs. That said, it doesn't explain why Nvidia's GTX 1050 Ti sees no performance degradation, so perhaps it is a driver issue.
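The bandwidth figures quoted for these two cards follow from bus width multiplied by the per-pin memory data rate. The quick sketch below reproduces them. The data rates of 6 Gbps for the R9 390 and 8 Gbps for the RX 580 are not stated in this article and are assumed from the cards' reference specifications; the function name is made up for this example.

```python
# Illustrative sketch; the function name is hypothetical.
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbps) / 8."""
    return bus_width_bits * data_rate_gbps / 8


if __name__ == "__main__":
    # (bus width in bits, assumed reference per-pin data rate in Gbps)
    cards = {
        "R9 390": (512, 6.0),
        "RX 580": (256, 8.0),
    }
    for name, (bus_bits, rate) in cards.items():
        print(f"{name}: {memory_bandwidth_gb_s(bus_bits, rate):.0f} GB/s")
```

Even with faster memory chips, the RX 580's narrower bus leaves it with two thirds of the R9 390's peak bandwidth.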

The Fiji-based GPUs such as the Nano and Fury aren't that cost effective and, due to their limited VRAM capacity, they fall well behind the R9 390 series as the DAG file size increases. When it all boils down to it, the GTX 1070 looks to be king as it offers solid performance and a very high level of efficiency. The R9 390 and 390X are faster, but will consume much more power.

Remember, these are just out-of-the-box numbers. Using a custom BIOS designed to maximize mining performance will lead to better results, so keep that in mind. What you've seen here should serve as an accurate baseline, however.