Although we've thoroughly benchmarked PlayerUnknown's Battlegrounds at this point and it's based on the same UE4 engine as Fortnite, we're nonetheless interested to see just how much better optimized Fortnite is. Having read all of your requests for this one, we know many of you are as well.

For those of you unaware, Fortnite is a co-op sandbox survival game released as an early access title for Windows, macOS, PlayStation 4, and Xbox One last July and it received a standalone 'Battle Royale' mode late last year. The title is due for a full free-to-play release this year after Epic Games finishes ironing out its kinks, though in my experience there appear to be few if any issues, especially compared to PUBG.


Fortnite's Battle Royale mode has proven to be quite popular, attracting more than 10 million players in less than two weeks. Obviously the battle royale genre is trending at the moment, but helping Fortnite's cause is the fact that the game seems to play well on just about anything. Even so, there will be those of you with older hardware looking to upgrade to enable high quality visuals and our benchmarks should be handy here.

For testing, our focus is on High quality settings rather than Epic as we feel this is where most people playing on older or lower-end hardware will be aiming. The difference in visual quality between High and Epic isn't that noticeable, while the drop in frame rate is, so High will make more sense for most.

That said, be aware that the '3D Resolution' setting was set to Epic as this ensures 100% scaling with the selected display resolution. It's a bit confusing, but just setting the display resolution doesn't mean the game will be rendered at that resolution. On its own, the display resolution only determines the resolution at which the HUD is rendered. The actual game resolution can be set to 480p, 720p, 1080p or Epic, which should really just be called 100% scaling to minimize confusion.
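To put that in numbers, here is a minimal Python sketch of how the 3D Resolution setting relates to the display resolution. The preset-to-pixel mapping (for example 854x480 for 480p) and the function names are our own assumptions for illustration, not values taken from the game.

```python
# Assumed 16:9 pixel dimensions for the fixed 3D Resolution presets.
# These are illustrative guesses, not values read from Fortnite itself.
ASSUMED_PRESETS = {
    "480p": (854, 480),
    "720p": (1280, 720),
    "1080p": (1920, 1080),
}


def render_resolution(display, preset):
    """Return the (width, height) the 3D scene would be rendered at.

    'Epic' means 100% scaling, so the scene matches the display resolution.
    Any other preset is looked up in the assumed table above.
    """
    if preset == "Epic":
        return display
    return ASSUMED_PRESETS[preset]


def pixel_share(display, preset):
    """Fraction of the display's pixel count that the 3D scene is rendered with."""
    render_w, render_h = render_resolution(display, preset)
    display_w, display_h = display
    return (render_w * render_h) / (display_w * display_h)


# A 1440p display with 3D Resolution at 720p renders a quarter of the pixels.
print(pixel_share((2560, 1440), "720p"))
# With Epic, the scene is rendered at the full display resolution.
print(pixel_share((2560, 1440), "Epic"))
```

Under these assumptions, a 1440p display with 3D Resolution left at 720p only renders a quarter of the pixels, which is why we forced Epic for every test.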

For the benchmark test we dropped into the game at the exact same location every time. The test started the moment we hit the ground and we then followed the exact same 60 second path each time, reporting the average of three runs.
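For anyone wanting to repeat this at home, the averaging step can be sketched in a few lines of Python. This is only an illustration: the `average_runs` helper and the sample figures are hypothetical, and we assume each pass yields one average and one minimum frame rate.

```python
from statistics import mean


def average_runs(runs):
    """Average the results of repeated benchmark passes.

    `runs` is a list of (average_fps, minimum_fps) tuples, one per pass.
    Returns the mean average fps and the mean minimum fps, rounded to 0.1.
    """
    if not runs:
        raise ValueError("at least one run is required")
    avg_fps = mean(run[0] for run in runs)
    min_fps = mean(run[1] for run in runs)
    return round(avg_fps, 1), round(min_fps, 1)


# Hypothetical numbers for illustration only; these are not measured results.
example_runs = [(61.0, 52.0), (60.0, 50.0), (62.0, 51.0)]
print(average_runs(example_runs))
```

Averaging several passes over the same path smooths out run-to-run variance, which matters in a multiplayer game where no two drops are identical.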

Also please note that we're skipping the Radeon RX 400 series as for the most part the new 500 series is exactly the same, with just a little factory overclocking applied. The RX 550 is the only truly new product while the RX 560 has two extra CUs enabled, but overall isn't much faster than the RX 460. So if you have a 400 series Radeon GPU, drop a few percentage points off the 500 series figures and you'll be in the ballpark for your GPU.

As usual we used the latest AMD and Nvidia display drivers available at the time of testing and all graphics cards were tested on our standard GPU test rig. Inside we have a Core i7-8700K clocked at 5GHz with 32GB of DDR4-3200 memory.

Benchmark Time!

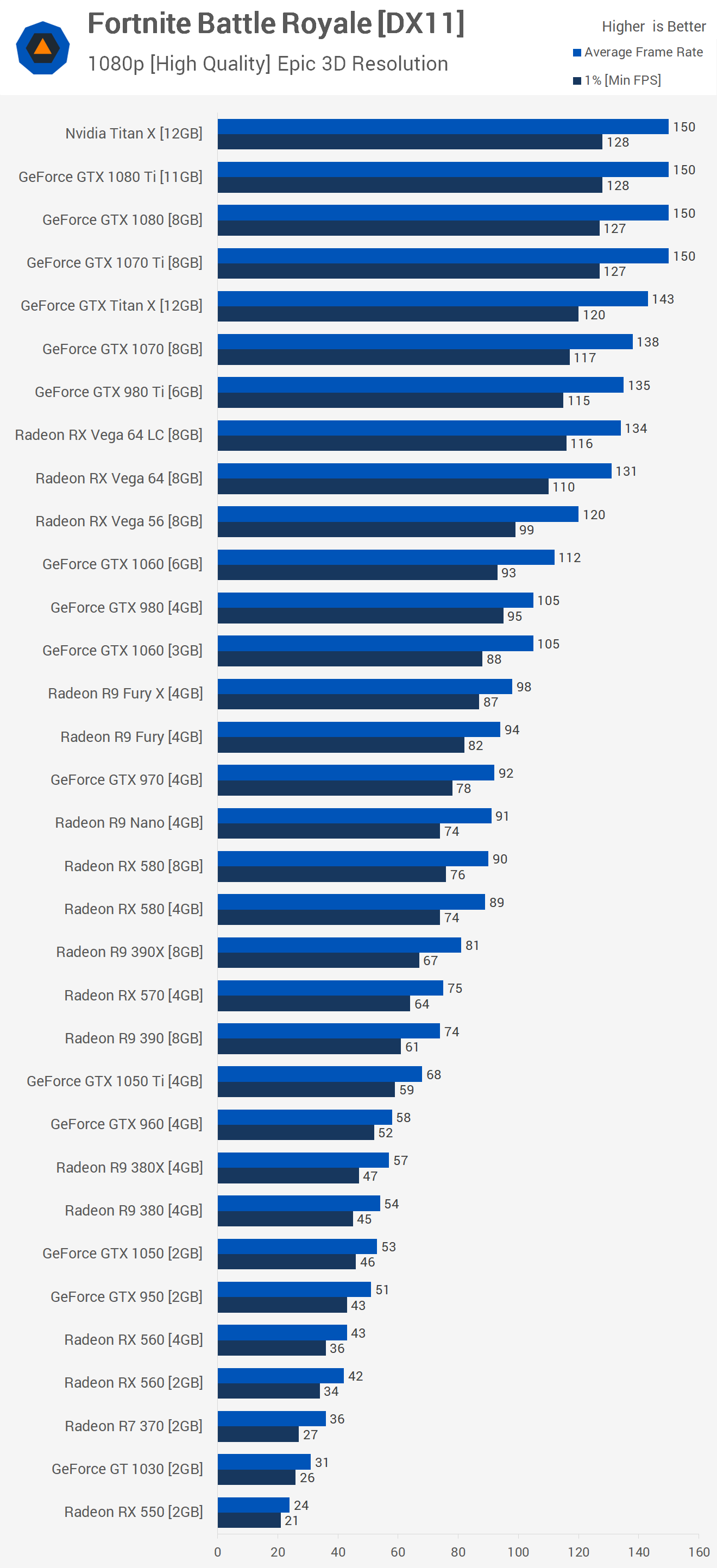

When running Fortnite at 1080p using high quality settings we find playable performance on almost all the GPUs tested. In fact, the Radeon R7 370, RX 550 and GeForce GT 1030 are the only exceptions and will require players to use medium or possibly even low quality visuals. Looking at the previous-generation GPUs, you'll still require a Radeon R9 390 or GTX 970 if you refuse to drop below 60fps (based on my experience, keeping this game above 60fps at all times makes it significantly easier to aim).

If we look at those two GPUs, we find yet another game where the GTX 970 puts a real beat down on the R9 390. Of course the game is built on UE4 and we've typically found this game engine to favor the green team, but the point is that the GTX 970 is still going strong, offering a whopping 24% more frames than the R9 390.

Even the GTX 960, which has become a bit of a failure in a number of recently released games, manages to beat the R9 380 and even the 380X. Then we find the GTX 980 leaving the Fury X behind while the GTX 980 Ti needs draw distance set to epic before it can even see how far behind the Fury X is.

If we turn our attention to the current generation GPUs we find that the GTX 1080 Ti, Titan X, GTX 1080 and 1070 Ti are all CPU limited to 150fps on average. This isn't a frame cap either, as frame rates would shoot up well above 200fps when looking at the sky. For those wondering, our standard GPU test rig was used and that packs a Core i7-8700K clocked at 5GHz with DDR4-3200 memory.

The good news here is that for an average of 60fps you'll only need a GTX 1050 Ti or RX 570. The RX 570 also kept the minimum above 60fps and the 1050 Ti was pretty close at 59fps. Dropping down to the GTX 1050 wasn't too bad but the RX 560 really struggled to deliver playable performance.

As we saw when comparing the GTX 970 and R9 390, Nvidia has a commanding lead in this title and we see numerous examples of this with the current generation models as well. Take the GTX 1060 6GB and RX 580 for example: here the GeForce GPU was 26% faster, hitting 112fps on average.
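For readers who want to run the same comparison on their own results, here is a rough Python sketch of how a "percent faster" figure relates two average frame rates. The function names are ours, and the implied baseline is simply back-calculated from the rounded 26% and 112fps figures quoted above, so treat it as approximate.

```python
def percent_faster(fps_a, fps_b):
    """How much faster card A is than card B, as a percentage of B's frame rate."""
    if fps_b <= 0:
        raise ValueError("fps_b must be positive")
    return 100 * (fps_a - fps_b) / fps_b


def implied_baseline(fps_a, lead_percent):
    """Frame rate of the slower card implied by A's frame rate and its lead."""
    return fps_a / (1 + lead_percent / 100)


# Hypothetical example: 120fps versus 100fps is a 20% lead.
print(percent_faster(120, 100))

# Back-calculating from the quoted figures: 112fps with a 26% lead
# implies the slower card averaged roughly 89fps.
print(round(implied_baseline(112, 26), 1))
```

Note that the percentage is always taken relative to the slower card, so a 26% lead for one GPU is not the same thing as the other GPU being 26% slower.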

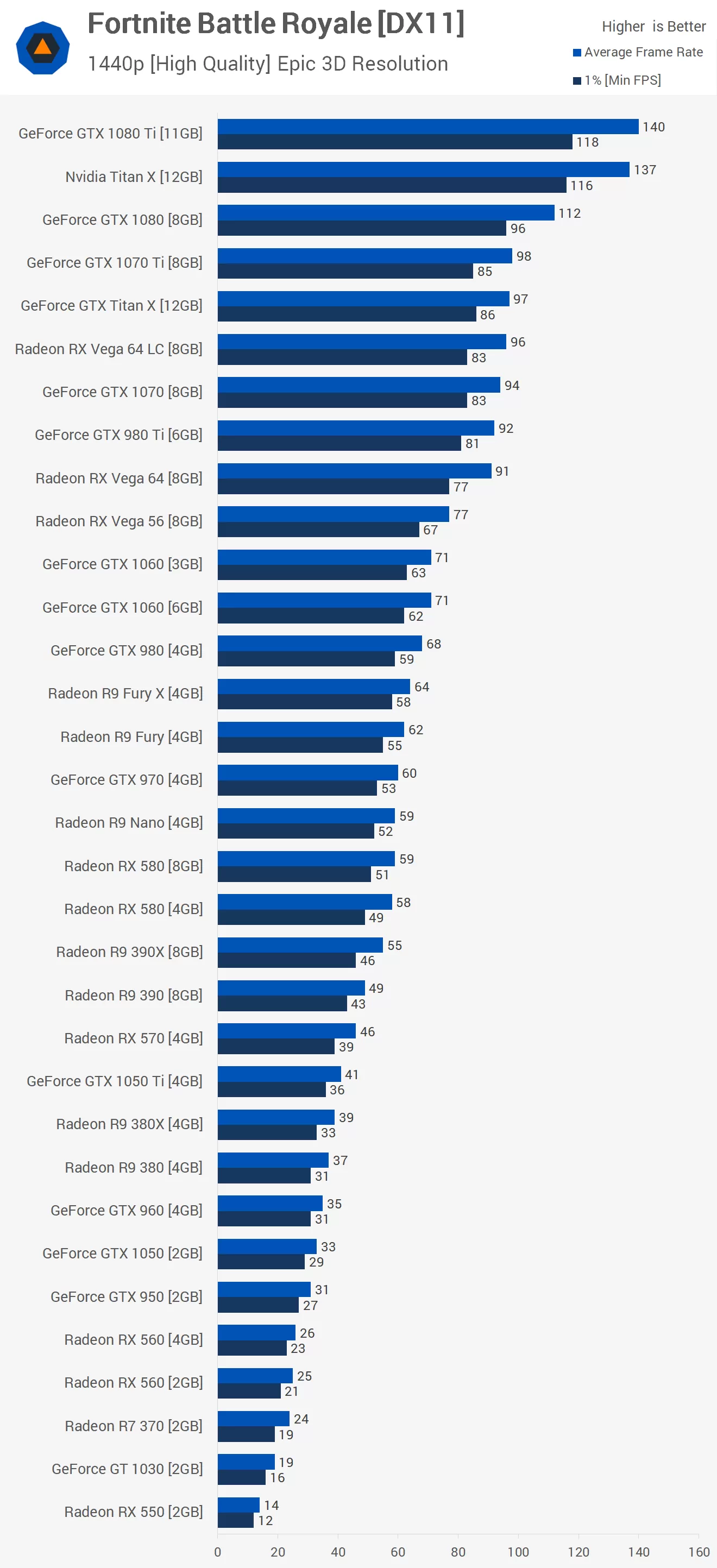

At 1440p, those wanting to keep the minimum frame rate around 60fps will require a GTX 980 or Fury X graphics card, while the GTX 980 Ti and Maxwell Titan X streak ahead, delivering over 80fps at all times. For an average of around 60fps, the GTX 970 or R9 Nano will suffice. The R9 390X and 390 weren't too bad, but beyond that you'll need to turn things down.

The GTX 1060 remained 20% faster than the RX 580 here at 67fps versus 58fps. The GTX 1070 roughly matched Vega 64 Liquid while the GTX 1080 was 17% faster and the GTX 1080 Ti was 46% faster and in danger of being limited by the CPU.

Value-wise, the GTX 1060 3GB is the standout here, though if you can afford it I suggest getting the 6GB model.

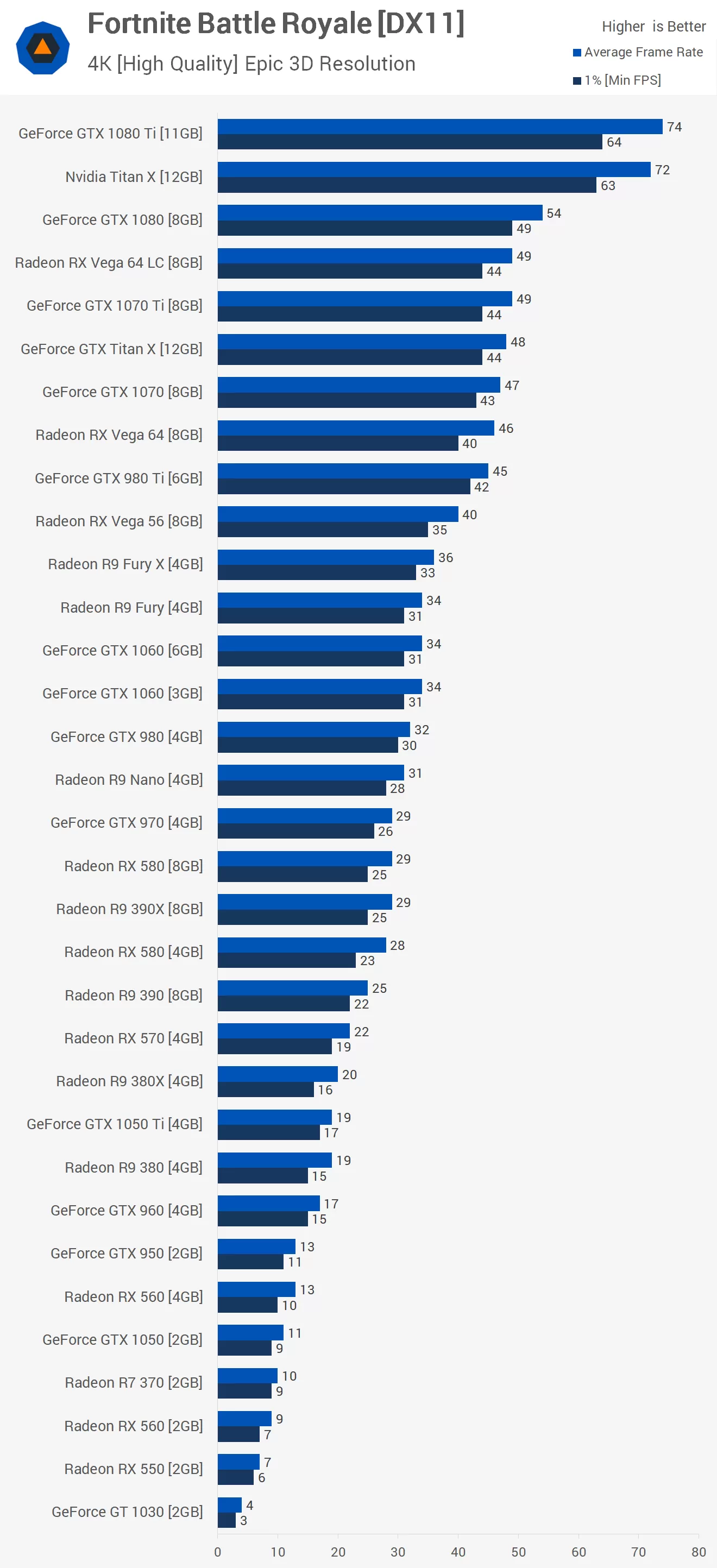

For those wanting to play Fortnite at 4K you will require a GTX 1080 Ti for perfectly smooth gameplay with over 60fps at all times. The GTX 1080 wasn't bad, but again I had to lower the quality settings as I found dips into the 40s just made aiming too difficult.

Older-Gen GPUs

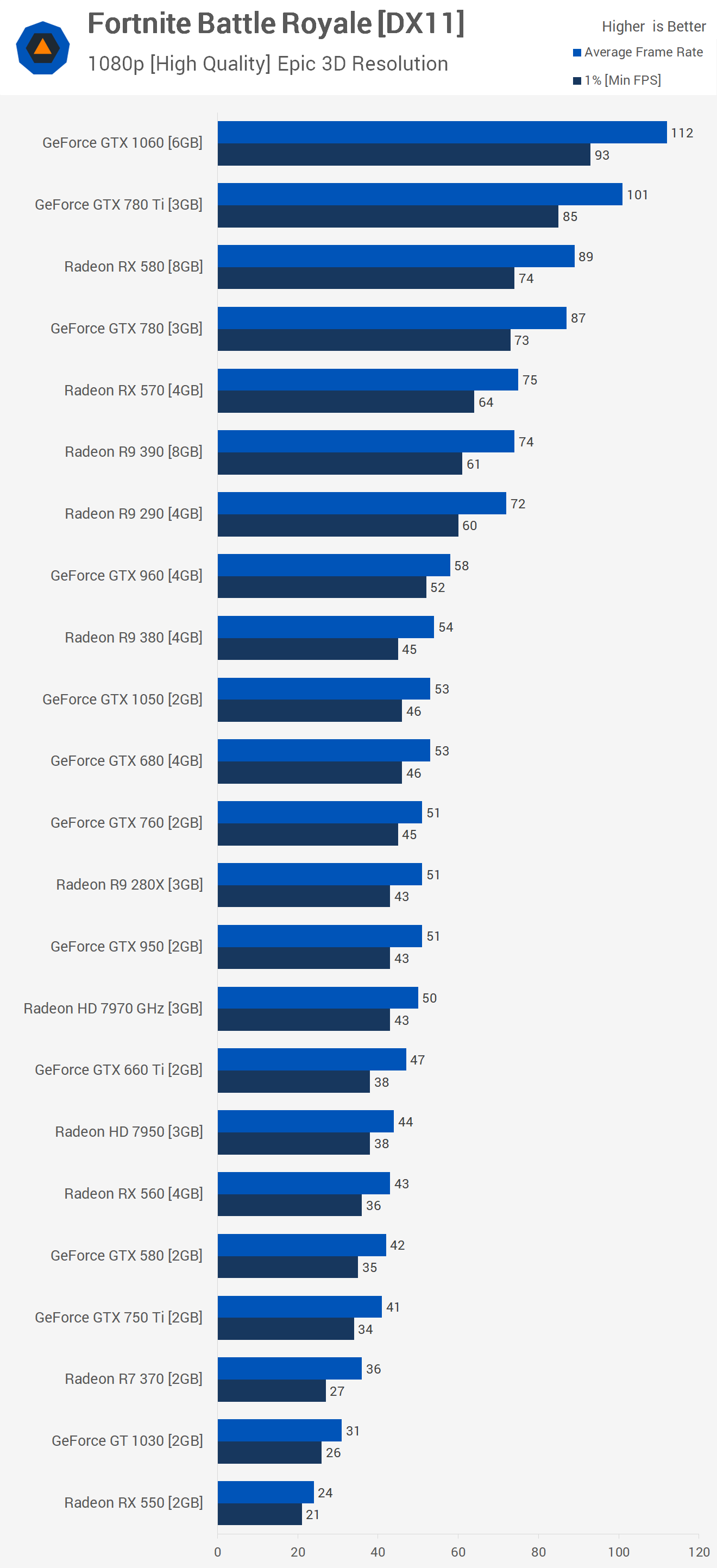

Before wrapping up we decided to look at a few older GPUs again and compare them to the more modern mid-range and entry level offerings at 1080p. Starting from the top we have the GTX 780 Ti. As we mentioned, this card is beginning to look dead in most of the more recently released games, but that wasn't the case in PUBG and it's certainly not the case with Fortnite, in which the card offered an average of 101fps at 1080p using the same high quality settings, a mere 10% slower than the GTX 1060 and on par with the RX 580.

The R9 290 was quite a bit slower, matching the R9 390 and RX 570. Then we have the old GTX 680 which only matched the GTX 1050 though this did make it slightly faster than the R9 280X and HD 7970 GHz Edition. The GTX 760 also squeezed in there with those Radeon GPUs. The HD 7950 roughly matched the RX 560 while the GTX 660 Ti was faster than both of them.

Finally, the GTX 750 Ti did surprisingly well for what it is. Again, as was the case with all the entry-level GPUs, you'll want to reduce the quality settings for improved frame rates but 41fps on average was still a decent step up from the R7 360.

Wrap Up

Well, there you have it... a battle royale of 44 GPUs in Fortnite Battle Royale, all tested under the same conditions, so you should be able to determine what card you need for your desired frame rate.

Going into this we knew Fortnite was a lot like other games that use cartoon style graphics such as Overwatch and Team Fortress, which makes it somewhat easy for the game to look great artistically while still being gentle on the GPU. Even so, if you want to play at 1440p with high quality settings you'll want a GTX 1060 or better, especially if you're like me and can't aim worth a damn at less than 60fps.
