Come 2020, Intel will be back in the discrete graphics business and is expected to launch a new GPU for gamers. We can see this going one of two ways: Intel graphics become the butt of the next generation of PC jokes, or Intel achieves a miracle and breaks into a market that's been dominated by just two players since the start of the millennium.

Here's a brief timeline of how this imminent launch took shape in the public eye:

June 12, 2018: Then-CEO Brian Krzanich tells investors at a private meeting that Intel has been quietly developing a discrete GPU architecture, "Arctic Sound," for years, and that it will be released in 2020.

January 8, 2019: During CES, Gregory Bryant, senior VP of client computing, discloses that the upcoming GPU will be manufactured on Intel's 10nm process.

March 21, 2019: Intel shows two design concepts for its GPUs on stage. Stylistically, they resemble Intel's Optane SSDs, and their size suggests mid-range cooling capacity.

May 1, 2019: Jim Jeffers, senior principal engineer and director of the rendering and visualization team, announces Xe's ray tracing capabilities at FMX19. In addition, Intel has continued to hire talent away from the competition.

Numerous leaks and rumors have circulated in the tech sphere between those dates, and we can glean a great deal of information by delving into the fundamentals of Intel's architecture. That's what this article is about.

Development

Not wanting to take any chances, Intel has filled the top ranks of its graphics team with experts poached from AMD and Nvidia. This was how we first learned Intel would be making discrete GPUs: in 2017 it hired Raja Koduri, AMD's director of graphics architecture and manager of graphics businesses, as senior vice president of core and visual computing.

Then came Jim Keller, the lead architect behind AMD's Zen architecture, as senior vice president of silicon engineering. Intel then snagged Chris Hook, once senior director of global product marketing at AMD, to lead discrete GPU marketing. Others followed: Darren McPhee, former AMD product marketing director; Damien Triolet, a former tech journalist turned AMD marketer; Tom Petersen, Nvidia's director of technical marketing and a chip architect; and finally Heather Lennon, AMD's manager for graphics marketing and communications.

Those, of course, are just the bigger, more public hires. Intel's graphics team is 4,500 strong, and with that many people, and such talented ones, Intel is bound to produce something at least vaguely interesting.

Architecture

Core Count

While Xe belongs to the future, no piece of technology is separate from the generations of products and development that birthed it, and those generations happen to be well documented. Going by Intel's statements at CES, the first round of Xe products will use an architecture evolved from, and still closely related to, the currently shipping "Gen11." Thus, we can gather some intriguing info by investigating Intel's whitepaper on Gen11.

As you might recall from our Navi vs Turing comparison, all graphics architectures are built out of progressively more complex parts. For Nvidia, 16 CUDA cores are grouped into blocks, four of which comprise a streaming multiprocessor (SM); a pair of SMs makes a texture processing cluster (TPC), and there are either four or six TPCs in one graphics processing cluster (GPC). Each GPC thus contains either 512 or 768 CUDA cores.

As illustrated in the table below, the number of GPCs and the number of TPCs per GPC determine the core count of each die. Note that the GPUs on sale may have some of those cores disabled. For example, the RTX 2070 Super uses a TU104 die with 83% of its cores enabled, limiting it to 2560 CUDA cores.

| | 6 Graphics Processing Clusters | 3 Graphics Processing Clusters |
|---|---|---|
| 768 CUDA per GPC (6 TPCs per GPC) | 768 × 6 = 4608 CUDA (TU102) | 768 × 3 = 2304 CUDA (TU106) |
| 512 CUDA per GPC (4 TPCs per GPC) | 512 × 6 = 3072 CUDA (TU104) | 512 × 3 = 1536 CUDA (TU116) |
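The table's arithmetic can be reproduced with a short Python sketch. It assumes only the grouping described above (16 cores per block, 4 blocks per SM, 2 SMs per TPC); the (GPC, TPC-per-GPC) pairs come from the table, and the function name is our own.

```python
# Nvidia Turing shader hierarchy as described above
CORES_PER_BLOCK = 16
BLOCKS_PER_SM = 4
SMS_PER_TPC = 2
CORES_PER_TPC = CORES_PER_BLOCK * BLOCKS_PER_SM * SMS_PER_TPC  # 128


def turing_cuda_cores(gpcs: int, tpcs_per_gpc: int) -> int:
    """Full-die CUDA core count for a given GPC/TPC configuration."""
    return gpcs * tpcs_per_gpc * CORES_PER_TPC


# (GPCs, TPCs per GPC) for each die in the table
DIES = {
    "TU102": (6, 6),
    "TU104": (6, 4),
    "TU106": (3, 6),
    "TU116": (3, 4),
}

for name, (gpcs, tpcs) in DIES.items():
    print(f"{name}: {turing_cuda_cores(gpcs, tpcs)} CUDA cores")

# RTX 2070 Super: a cut-down TU104 with 2560 cores enabled
full_tu104 = turing_cuda_cores(*DIES["TU104"])
print(f"RTX 2070 Super enables {2560 / full_tu104:.0%} of TU104")
```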

Let's apply the same concept to Intel's architecture.

Intel's execution hardware is nothing like Nvidia's CUDA cores, but it bears some similarity to AMD's stream processors, so let's discuss it in those terms. Each stream processor is built from one arithmetic logic unit capable of performing one floating-point or integer operation per clock. Intel's arithmetic logic units can perform four operations per clock, so let's count each one as four cores.

Intel starts by pairing two arithmetic logic units into one execution unit (eight cores), then groups eight execution units into a sub-slice (64 cores), and eight sub-slices into one full slice (512 cores).

Intel's next-generation CPUs will contain one integrated slice, but as you may have guessed, the Xe discrete line-up will be built from multiple slices. Fundamentally, this implies that Intel, much like Nvidia, can only produce dies with core counts that are multiples of a fixed building block, here 512: a die's core count is the number of slices multiplied by 512.

| No. of Slices | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|
| Core Count | 1024 | 1536 | 2048 | 2560 | 3072 | 3584 | 4096 |
| Name* | iDG2HP128 | | iDG2HP256 | | | | iDG2HP512 |
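The Gen11-style hierarchy and the slice table can be generated from a handful of constants. This is a sketch under the article's own assumptions: "cores" means ALU lanes as defined above, and Xe keeps Gen11's 8-EU sub-slice and 8-sub-slice slice. The constant and function names are hypothetical.

```python
# Gen11-derived hierarchy, counted in "cores" as defined in this article
LANES_PER_ALU = 4          # four operations per clock per ALU
ALUS_PER_EU = 2
EUS_PER_SUBSLICE = 8
SUBSLICES_PER_SLICE = 8

CORES_PER_EU = LANES_PER_ALU * ALUS_PER_EU                   # 8
CORES_PER_SUBSLICE = CORES_PER_EU * EUS_PER_SUBSLICE         # 64
CORES_PER_SLICE = CORES_PER_SUBSLICE * SUBSLICES_PER_SLICE   # 512


def xe_cores(slices: int) -> int:
    """Core count of a die built from whole Gen11-style slices (assumed)."""
    return slices * CORES_PER_SLICE


for slices in range(2, 9):
    print(f"{slices} slices -> {xe_cores(slices)} cores")
```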

Note that we're assuming Intel will keep Gen11's basic slice configuration because, quite frankly, it may not have time to completely redesign its graphics architecture by next year. Gen11 is designed to suit both integrated and discrete parts, but certain elements must change, such as the render backend, which would bottleneck a multi-slice design if left unaltered.

Possible Architecture of a 2048 Core (Four Slice) Xe

Evidence

Conveniently, Intel has provided a strong body of evidence to support this hypothesis. A driver accidentally published in late July contained the names of several unreleased products, "iDG2HP512," "iDG2HP256," and "iDG2HP128," which we've interpreted as "Intel discrete graphics [model] two high-power" followed by the number of execution units.

Each execution unit is eight cores, so "512" implies 4096 cores (eight slices), "256" implies 2048 cores (four slices), and "128" implies 1024 cores (two slices). Every configuration divides evenly into 512-core slices, which fits our analysis neatly.
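Our reading of the driver codes can be written as a small decoder. The pattern below encodes our interpretation, not any format Intel has documented, and the function name is hypothetical; it converts the execution-unit count to cores and checks whether the result splits into whole slices.

```python
import re

CORES_PER_EU = 8        # two 4-wide ALUs per execution unit (see above)
CORES_PER_SLICE = 512   # 64 execution units per Gen11-style slice

# Assumed pattern: "iDG" + model digit + "HP"/"LP" + execution-unit count
CODE_PATTERN = re.compile(r"iDG(\d)(HP|LP)(\d+)")


def decode(code: str) -> dict:
    """Translate a code such as 'iDG2HP512' into cores and slices."""
    match = CODE_PATTERN.fullmatch(code)
    if match is None:
        raise ValueError(f"code does not fit the assumed pattern: {code}")
    model, power, eus = match.groups()
    cores = int(eus) * CORES_PER_EU
    slices, leftover = divmod(cores, CORES_PER_SLICE)
    return {
        "model": int(model),
        "power": "high" if power == "HP" else "low",
        "execution_units": int(eus),
        "cores": cores,
        "slices": slices,
        "whole_slices": leftover == 0,  # True if it splits evenly into slices
    }


for code in ("iDG2HP128", "iDG2HP256", "iDG2HP512"):
    info = decode(code)
    print(f"{code}: {info['cores']} cores = {info['slices']} slices")
```

Codes without a trailing execution-unit count, such as the developer listings, are rejected rather than guessed at.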

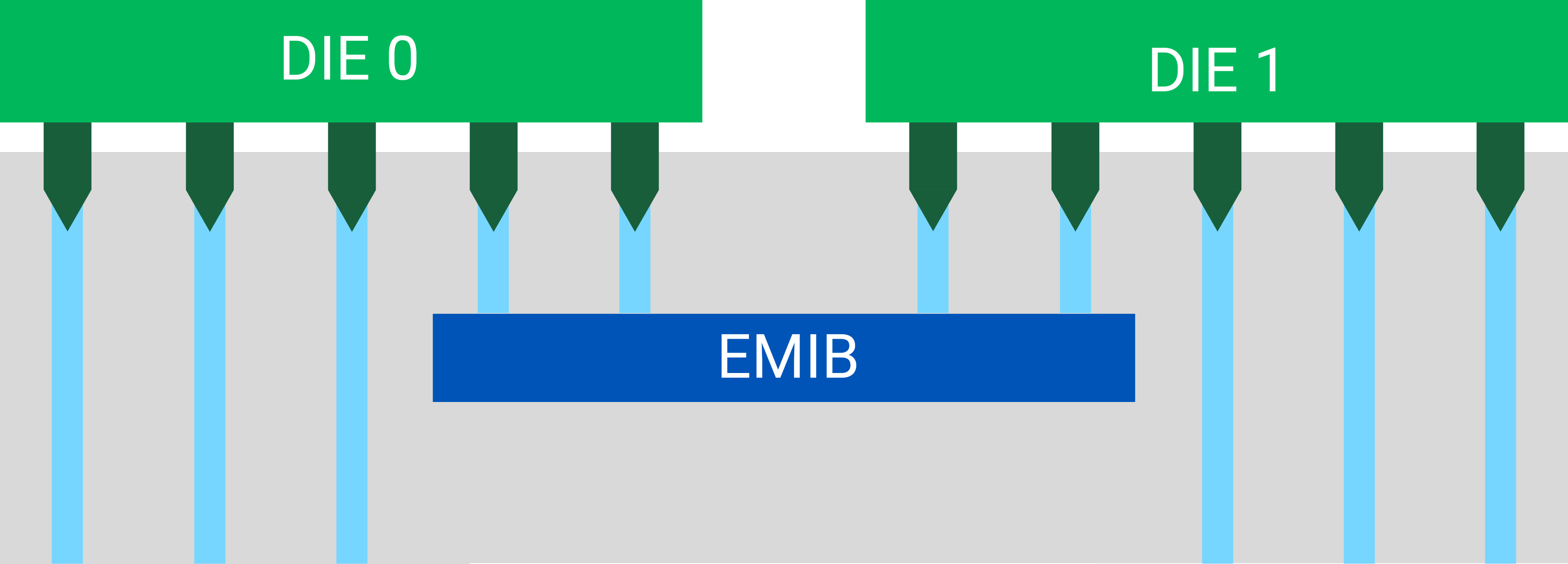

Intel has also made it clear it has investigated ways to combine slices. At the beginning of 2018, Intel demonstrated a prototype discrete GPU composed of two Gen9 slices on a single die, and it put this into practice with the recent integrated Iris Plus Graphics 650, which combines two Gen9.5 slices on one die. Intel has also been experimenting with a chiplet approach using EMIB (embedded multi-die interconnect bridge), which was first used in Kaby Lake G.

EMIB Architecture Design

EMIB "connects multiple heterogeneous dies in a single package cost effectively," essentially combining two or more physical dies into one virtual die for massive cost savings and a slight performance penalty. The savings come from manufacturing yield: as a 2017 Nvidia research paper asserts, "very large dies have extremely low yield due to the large numbers of irreparable manufacturing faults."

By creating lots of small dies and combining them, Intel makes manufacturing faults less costly: each small die is less likely to contain a fault, and a fault that does occur scraps only one small die rather than an entire large one. While EMIB isn't quite ready for mass deployment, in April Intel confirmed to AnandTech that it intends to use EMIB with its GPUs soon, so that is something to look forward to.
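The yield argument can be illustrated with the classic Poisson yield model, in which the fraction of defect-free dies is e^(-area × defect density). This is a textbook simplification, not Intel's or Nvidia's model, and the defect density and 600 mm² design below are hypothetical numbers chosen only to show the trend. The sketch also assumes dies are tested before packaging, so only known-good dies are combined, and it ignores packaging and bridge costs.

```python
import math

# Hypothetical defect density: 0.1 defects/cm^2, i.e. 0.001 per mm^2.
# Real figures vary by process and are not public for Intel's 10nm.
DEFECTS_PER_MM2 = 0.001


def poisson_yield(die_area_mm2: float, d0: float = DEFECTS_PER_MM2) -> float:
    """Fraction of dies with zero defects under the Poisson yield model."""
    return math.exp(-die_area_mm2 * d0)


def silicon_per_good_gpu(total_area_mm2: float, n_dies: int) -> float:
    """Expected wafer area (mm^2) spent per working GPU when the design is
    split into n_dies equal dies, assuming only known-good dies are packaged."""
    die_area = total_area_mm2 / n_dies
    return n_dies * die_area / poisson_yield(die_area)


# A hypothetical 600 mm^2 GPU built as one, two or four dies
for n in (1, 2, 4):
    area = silicon_per_good_gpu(600, n)
    print(f"{n} die(s): yield per die {poisson_yield(600 / n):.0%}, "
          f"~{area:.0f} mm^2 of wafer per working GPU")
```

Under these assumptions, splitting the design into smaller dies steadily reduces the wafer area consumed per working GPU, which is the effect the quote describes.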

Clock Speeds

Graph time. With a reasonable estimate of core counts in hand, let's start with a worst-case scenario as a baseline: pairing those core counts with the clock speed of Intel's current best integrated graphics, 1150 MHz. To combine speed and core count, we'll graph in TFLOPS, a theoretical measure of performance derived from those two variables. It's worth noting that although TFLOPS is an excellent indicator of performance within one manufacturer's generation of GPUs, it is less reliable for comparing across manufacturers.

TFLOPS at 1.2 GHz
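The usual convention for peak FP32 TFLOPS counts one fused multiply-add (two floating-point operations) per core per clock. A minimal sketch, assuming each of the "cores" defined above follows that convention, for the leaked configurations at 1150 MHz:

```python
def peak_fp32_tflops(cores: int, clock_mhz: float) -> float:
    """Theoretical FP32 throughput in TFLOPS, assuming each core completes
    one fused multiply-add (two operations) per clock."""
    flops_per_clock = cores * 2
    return flops_per_clock * clock_mhz * 1e6 / 1e12


# Worst case: the leaked configurations at integrated-graphics clocks
for cores in (1024, 2048, 4096):
    print(f"{cores} cores @ 1150 MHz: {peak_fp32_tflops(cores, 1150):.2f} TFLOPS")
```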

Though Intel is competitive on the core count front, at 1150 MHz it simply can't compete. Luckily, that's not the situation we find ourselves in. First, we know discrete parts can exceed integrated clock speeds, because integrated graphics are held back by the thermal output of the CPU they share, a constraint that disappears when there's no CPU. Secondly, Intel's new Xe chips will use its faster, more advanced 10nm process.

Intel's 10nm process is considered, at worst, equivalent to TSMC's 7nm, and TSMC's 7nm can sustain 1800 MHz while gaming in a well-cooled Radeon RX 5700 XT. Intel also has a long history of squeezing higher clock speeds out of its processes than its competitors can. However, this is Intel's first generation of discrete GPUs in a long, long time, so a cautiously pessimistic estimate is more likely to be accurate: let's assume Intel will manage at least 1700 MHz.

TFLOPS at 1.7 GHz

At these potentially more realistic speeds, Intel appears somewhat competitive on the TFLOPS front. Bear in mind, though, that these clock speed estimates are far less reliable than the core count calculations.
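To put the 1700 MHz assumption in context, the sketch below compares the leaked configurations with the Radeon RX 5700 XT mentioned above (2560 stream processors at roughly 1800 MHz while gaming), and solves for the clock each Xe configuration would need to match it on paper. It uses the same one-FMA-per-core-per-clock convention, and, as noted earlier, TFLOPS comparisons across manufacturers are only a rough guide. The function names are our own.

```python
def peak_fp32_tflops(cores: int, clock_mhz: float) -> float:
    """Theoretical FP32 TFLOPS, counting one fused multiply-add
    (two operations) per core per clock."""
    return cores * 2 * clock_mhz * 1e6 / 1e12


def clock_to_match(cores: int, target_tflops: float) -> float:
    """Clock speed in MHz at which `cores` would reach target_tflops."""
    return target_tflops * 1e12 / (cores * 2) / 1e6


# Reference point: RX 5700 XT, 2560 stream processors at about 1800 MHz
rx_5700_xt = peak_fp32_tflops(2560, 1800)
print(f"RX 5700 XT @ 1800 MHz: {rx_5700_xt:.2f} TFLOPS")

for cores in (1024, 2048, 4096):
    xe = peak_fp32_tflops(cores, 1700)  # assumed 1700 MHz Xe clock
    needed = clock_to_match(cores, rx_5700_xt)
    print(f"{cores} cores @ 1700 MHz: {xe:.2f} TFLOPS "
          f"(needs {needed:.0f} MHz to match the RX 5700 XT)")
```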

In summary: it is reasonably likely that Xe discrete GPUs will arrive in multiples of 512 cores, at roughly the same price per core as Nvidia, and at competitive clock speeds of around 1.7 GHz.

Software

There's much more to GPUs than just the hardware. Nvidia famously employs more software engineers than hardware engineers. Supporting every game is a costly and time-consuming investment, which is part of the reason new entrants to the gaming GPU market are virtually non-existent. Intel is the exception: though it's new to discrete hardware, it's certainly not new to software. Think of drivers as translators between games and hardware; since only the hardware is changing, converting Intel's existing integrated drivers for discrete use should be relatively straightforward.

Those drivers are not all that well maintained, a shortcoming Intel has long been criticized for. Across each company's last ten driver releases, the mean time between releases was twenty-five days for Intel, eighteen for Nvidia, and just ten for AMD. Fortunately, there's a good chance Intel will pull its socks up for Xe, given how much more important drivers are for discrete GPUs than for integrated ones.
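The release-cadence figure is a simple mean of the gaps between consecutive release dates. The sketch below shows the calculation; the dates are placeholders for illustration only, not any vendor's actual release history, and the function name is hypothetical.

```python
from datetime import date


def mean_days_between(releases: list[date]) -> float:
    """Mean gap in days between consecutive releases.

    Because the gaps telescope, this equals
    (newest - oldest) / (number of releases - 1)."""
    if len(releases) < 2:
        raise ValueError("need at least two release dates")
    ordered = sorted(releases)
    gaps = [(later - earlier).days for earlier, later in zip(ordered, ordered[1:])]
    return sum(gaps) / len(gaps)


# Placeholder dates, not real driver release dates
example = [date(2019, 1, 1), date(2019, 1, 21), date(2019, 2, 25)]
print(f"{mean_days_between(example):.1f} days between releases")
```

Because only the first and last dates matter to the mean, a single long gap weighs as heavily as several short ones.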

It's not all bad, however. Over the past year, Intel has gained ground on Nvidia and AMD with its new Command Center, a hub enabling far finer and more painless control over GPUs and games than Nvidia's GeForce Experience. It offers game optimization with thorough explanations of what every setting does and its performance impact, quick multi-display setup with refresh rate and rotation syncing, display color accuracy and style adjustments, and driver control. And, a small but pleasing detail, Intel also supports VESA Adaptive-Sync, so Xe products should support FreeSync monitors and their ecosystem out of the gate.

Market

Little about an architecture or ecosystem matters when the market position of the products is undefined. On that front, we know very little. Will they cost $100 or $1,000? One model, two, or ten? When recently asked if Xe might target the high-end market, Raja Koduri replied:

"Not everybody will buy a $500-$600 card, but there are enough people buying those too - so that's a great market. So the strategy we're taking is we're not really worried about the performance range, the cost range and all because eventually our architecture as I've publicly said, has to hit from mainstream, which starts even around $100, all the way to data center-class graphics with HBM memories and all, which will be expensive.

We have to hit everything; it's just a matter of where do you start? The first one? The second one? The third one? And the strategy that we have within a period of roughly - let's call it 2-3 years - to have the full stack."

And there you have it: Intel will release a few GPUs. Not exactly valuable insight, but it's to be expected that Intel would hold its cards close to its chest. Since that's the only official word on the matter, we must turn to an alternative source: the leaked driver.

Image: Intel concept render by Cristiano Siqueira

The three GPUs named in the leaked driver, which may be the ones slated for initial release, have 1024, 2048 and 4096 cores. This would make them competitive performance-wise with the GTX 1650, RTX 2060 and RTX 2080 Ti, respectively, at the $150, $350 and $1000+ price points. Intel may choose to outperform Nvidia with faster speeds, out-value it with lower prices, or simply match it and rely on other features to differentiate.

The driver also offers a longer-term perspective with two "developer" cards. Grouped with the other three is an "iDG1LPDEV," which translates to an "Intel discrete graphics [model] 1 low power developer" card and could signify that Intel is experimenting with low-power laptop GPUs. There's also a separate listing for an "iATSHPDEV," referencing the "Arctic Sound" codename for Xe's architecture, though it can't be confirmed that this is a discrete GPU.

If there's one thing to remember, it's this: what Intel will release is ultimately unknown, and speculation does not constitute buying advice. On the other hand, things look promising. Very promising.
