In today's article we'll once again be looking at plenty of benchmark graphs filled with RTX 3080 data, though this one is going to be a little different. While we're benchmarking the new GeForce RTX 3080, it won't be the main focus of our attention; instead, we'll be looking deeper into CPU performance.

By now we've spent quite a bit of time and energy investigating which flagship CPU we should use to test the new GeForce 30 series, with the two top choices being Intel's Core i9-10900K and AMD's Ryzen 9 3950X.

Initially, we were considering the 3950X for two key reasons: first, it supports PCIe 4.0, and second, in late 2020 Ryzen seems like the more relevant choice for our readers. Going by what you buy after reading our reviews, the vast majority of our audience either already owns a Ryzen processor or is looking to buy one over anything from Intel. Our CPU buying recommendations follow that pattern pretty consistently as well.

And yet we know beyond a shadow of a doubt that when it comes to ultimate gaming performance, Intel remains king. That's why every time we've updated our Best CPU guide over the past couple of years, we've recommended an Intel processor for those seeking maximum frame rates.

We didn't expect this to change with a high-end Ampere GPU supporting PCIe 4.0, and said as much at the time. We also put out a poll asking you about this CPU choice, and an overwhelming majority favored the 3950X: 83% of the 62K people who voted. Still not entirely convinced, as we typically opt for the scientific method rather than the most practical or most popular option, we carried out extensive testing using most of the games we'd use to benchmark the RTX 3080.

What we found was that at 4K with an RTX 2080 Ti, the 3950X and 10900K delivered the same performance overall, while the AMD processor was just 2% slower at 1440p and 5% slower at 1080p. Given that the RTX 3080 is a $700 GPU and the RTX 3090 will cost close to $1500, we weren't going to be testing them at 1080p. So with such a small margin at 1440p and no difference at 4K, the choice of processor mostly didn't matter from a performance standpoint.

The RTX 3080 was supposed to be faster than the 2080 Ti, but unless it was over 100% faster, the margin at 4K wasn't going to change. We were confident the 3950X wouldn't skew the results to the point where we'd see stuff like our cost per frame and average performance data at odds with other media outlets, and as we now know, we didn't.
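To illustrate why the 4K margin was never at risk, here's a minimal Python sketch that treats frame rate as the lower of two ceilings: what the CPU can feed and what the GPU can render. That `min()` model is a simplifying assumption (real games don't behave this cleanly), the helper names are our own, and every fps figure is a hypothetical placeholder rather than measured data.

```python
def delivered_fps(cpu_limit: float, gpu_limit: float) -> float:
    """Simplified bottleneck model: the slower component sets the frame rate."""
    return min(cpu_limit, gpu_limit)


def cpu_deficit(slow_cpu: float, fast_cpu: float, gpu_limit: float) -> float:
    """Percent by which the slower CPU trails the faster one with a given GPU."""
    fast = delivered_fps(fast_cpu, gpu_limit)
    slow = delivered_fps(slow_cpu, gpu_limit)
    return (1 - slow / fast) * 100


# Hypothetical CPU ceilings (fps) in one game; placeholders, not measured values.
RYZEN_LIMIT, INTEL_LIMIT = 150.0, 165.0

# Hypothetical GPU ceilings: 70 fps, the same GPU doubled (140), and a much faster case (200).
for gpu_limit in (70.0, 140.0, 200.0):
    deficit = cpu_deficit(RYZEN_LIMIT, INTEL_LIMIT, gpu_limit)
    print(f"GPU limit {gpu_limit:.0f} fps -> CPU deficit {deficit:.1f}%")
```

With these placeholder numbers, the first two cases print a 0.0% deficit and only the 200 fps case shows a gap (about 9%): as long as the GPU ceiling stays below both CPU ceilings, the choice of CPU doesn't show up in the results.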

Had we tested the RTX 3080 at 1080p, the R9 3950X might have been a problem depending on the games used, as we've found in the past that processors like the 3900X can be up to 10-15% slower than competing Intel parts. On that note, we're amazed by how many people demanded 1080p benchmarks for the RTX 3080 on the basis that it's the most popular resolution in the Steam Hardware Survey.

1080p is so popular because it's an entry-level resolution, and the most affordable monitors are 1080p. It's the same reason the GTX 1060, 1050 Ti and 1050 are by far the most popular graphics cards: not because they're the best, but because they're cheap. Nvidia also has incredible brand power, but that's a different conversation.

So for the same reason we don't test CPU performance with a GTX 1060, we're not going to test an extreme high-end GPU at 1080p, a resolution almost entirely reserved at this point for budget gaming. As we noted in previous articles covering this subject, it shouldn't matter which CPU reviewers use for testing the RTX 3080, as they're almost certainly going to be doing so at 1440p and 4K.

Having said all of that, a few titles do appear to cause problems for Ryzen even at 1440p, and we're going to look at those today. We're also including 1080p results in this article, as they weren't part of our day-one RTX 3080 review. Both the AMD and Intel CPUs were tested with four 8GB DDR4-3200 CL14 memory modules running in dual-channel, dual-rank mode. We'll discuss the memory choice towards the end of the article; for now, let's check out the blue bar graphs...

Benchmarks

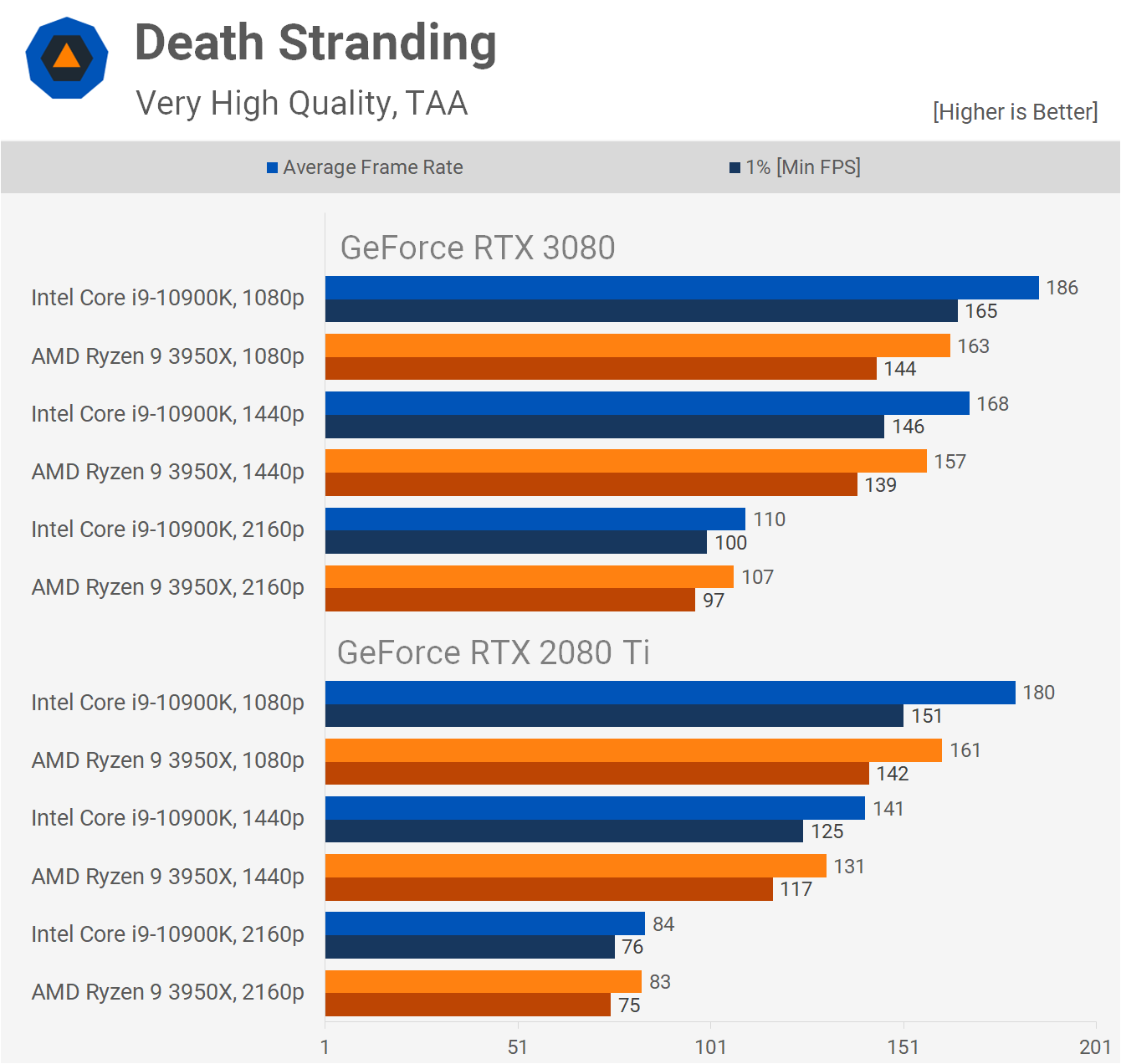

Starting with Death Stranding, we see that the 3950X is 7% slower than the 10900K at 1440p when paired with the RTX 3080, though it's very important to note that it was also 7% slower with the RTX 2080 Ti, so the margin isn't changing.

We see a similar pattern at 1080p: the 3950X was 12% slower than the 10900K with the 3080 and 11% slower with the 2080 Ti.
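Every margin in this article is a simple ratio of average frame rates. As a minimal sketch (the fps values are hypothetical placeholders chosen to produce a 7% gap, not our Death Stranding results, and the helper name is ours), this is how a CPU margin is computed, and why an identical margin with both GPUs leaves the GPU-to-GPU comparison unaffected:

```python
def percent_slower(fps: float, reference_fps: float) -> float:
    """How much slower `fps` is than `reference_fps`, in percent."""
    return (1 - fps / reference_fps) * 100


# Hypothetical 1440p averages (fps); placeholders, not measured data.
results = {
    "RTX 3080": {"10900K": 150.0, "3950X": 139.5},
    "RTX 2080 Ti": {"10900K": 120.0, "3950X": 111.6},
}

# CPU margin with each GPU.
for gpu, fps in results.items():
    margin = percent_slower(fps["3950X"], fps["10900K"])
    print(f"{gpu}: 3950X is {margin:.0f}% slower")

# GPU-to-GPU ratio measured on each CPU.
for cpu in ("10900K", "3950X"):
    ratio = results["RTX 3080"][cpu] / results["RTX 2080 Ti"][cpu]
    print(f"{cpu}: RTX 3080 / RTX 2080 Ti = {ratio:.2f}")
```

Because the slower CPU costs both cards the same fraction of their performance, the RTX 3080's lead over the 2080 Ti comes out the same on either platform.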

At 4K there's virtually no difference between the two CPUs: the 3950X is 3% slower with the RTX 3080, a gap of just 3 fps that won't influence the end result or our conclusion in a meaningful way. We'll admit, though, that for a more scientific approach the 10900K makes more sense to use here.

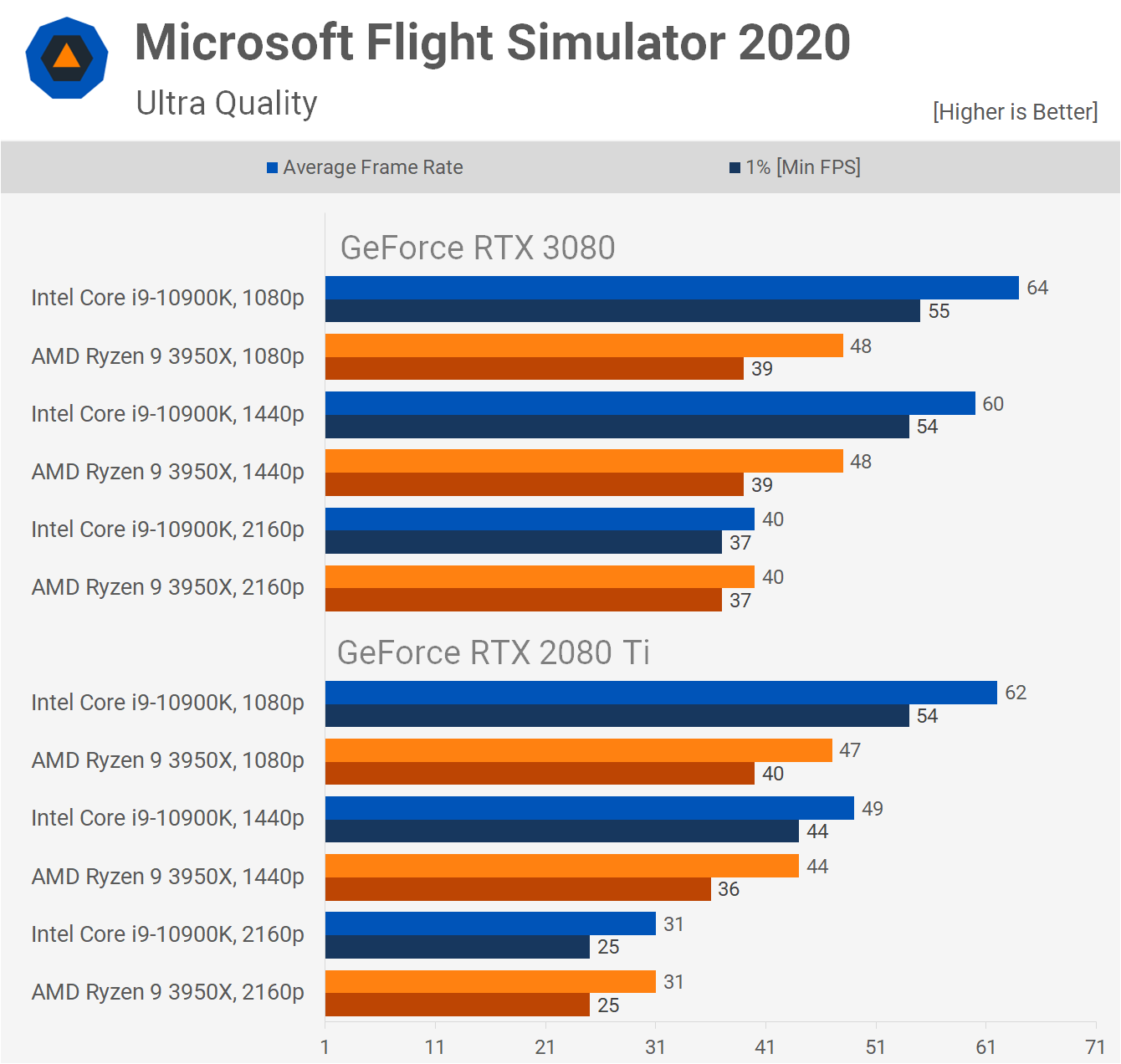

A really bad case for Ryzen can be seen with Microsoft's Flight Simulator 2020. Bizarrely, this brand-new next-gen simulator uses DirectX 11 and relies heavily on single-threaded performance. The game is expected to receive DX12 support in the near future, and if that happens it could turn things around for CPU performance, but for now the CPU is the primary bottleneck at 1440p and below.

Here the 3950X was 20% slower than the 10900K at 1440p with the RTX 3080 and 10% slower with the 2080 Ti. Margins of this size can be a real problem for our data, and the only reason we got away with it is that we tested 14 games. Had we used a smaller sample of 6-8 games, a big margin like this in a single title could have skewed the overall average.
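To show why sample size matters, here's a minimal sketch assuming the overall figure is a plain arithmetic mean of per-game margins (our actual averaging method may differ in detail). The 20% outlier mirrors the Flight Simulator result at 1440p; the 2% deficit assigned to every other game is a hypothetical placeholder.

```python
from statistics import mean


def average_margin(margins: list[float]) -> float:
    """Arithmetic mean of per-game CPU margins, in percent (assumed method)."""
    return mean(margins)


OUTLIER = 20.0  # a Flight Simulator-sized deficit, in percent

# Hypothetical: every other game shows a 2% deficit.
small_sample = [2.0] * 6 + [OUTLIER]   # 7 games
large_sample = [2.0] * 13 + [OUTLIER]  # 14 games

print(f"7 games:  {average_margin(small_sample):.1f}%")
print(f"14 games: {average_margin(large_sample):.1f}%")
```

Under these assumptions the single outlier lifts the 7-game average by roughly twice as much as the 14-game average (about 2.6 versus 1.3 percentage points above the 2% baseline).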

On the positive side, once you hit 4K resolution the CPU bottleneck is entirely removed, and we're looking at identical performance with either processor using the 2080 Ti or 3080.

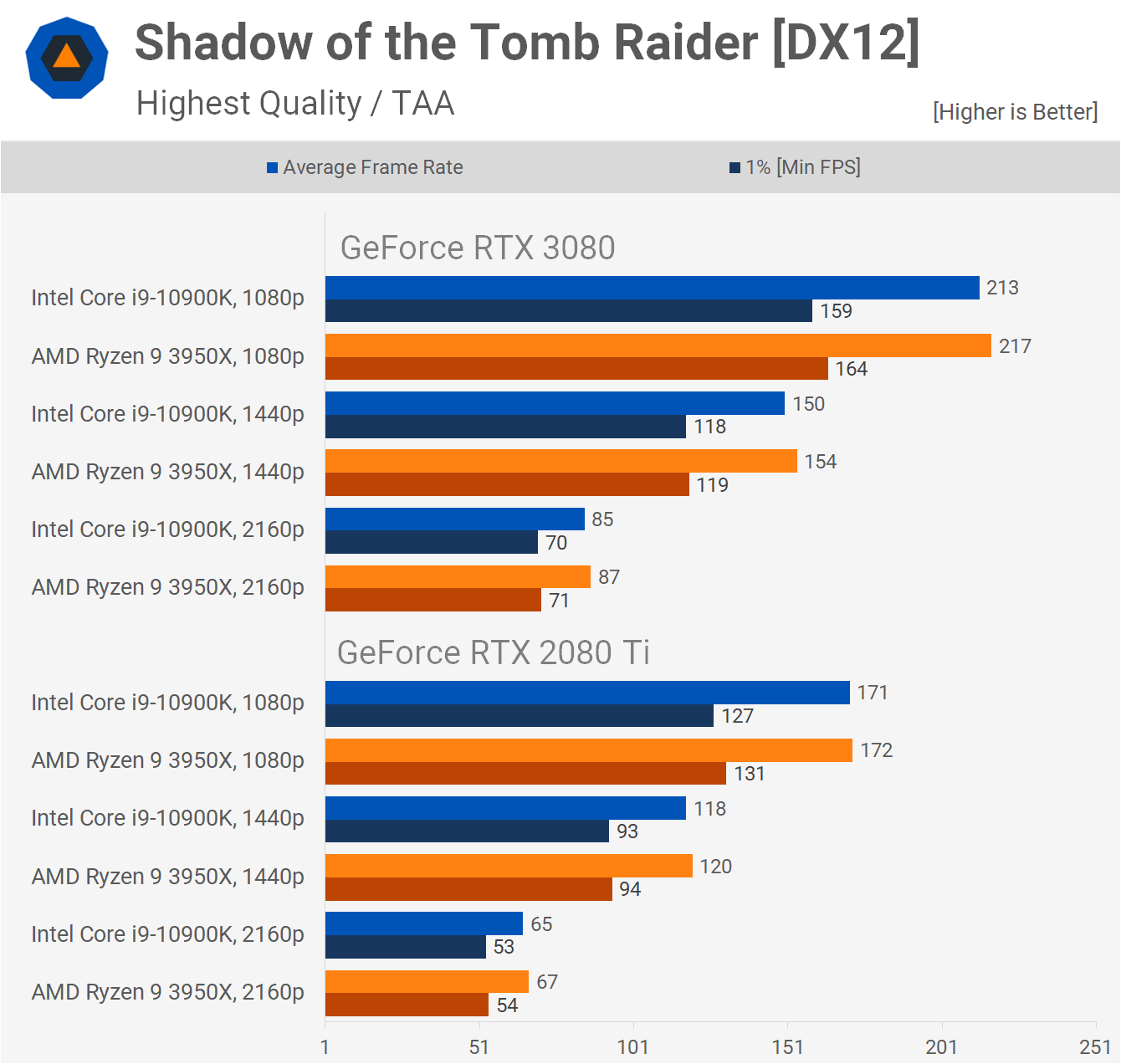

Moving on to Shadow of the Tomb Raider, we find a game that plays as well or even slightly better on the Ryzen 9 under all conditions. Whether we're talking about 1080p, 1440p or 4K, the results are virtually identical across the board.

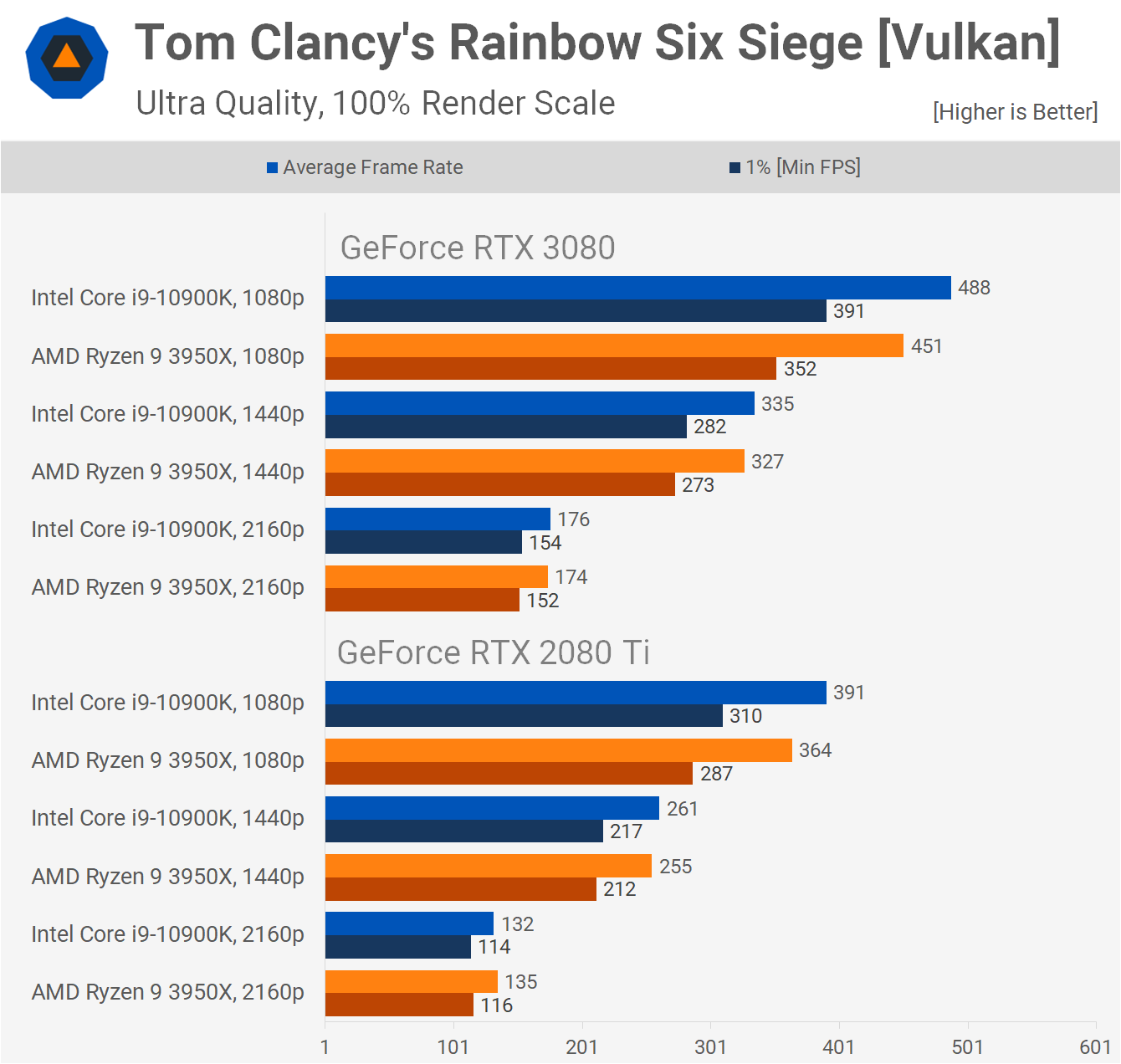

Rainbow Six Siege results are interesting. At 1440p with the RTX 3080, the 3950X was just 2% slower than the 10900K, and we see the exact same with the RTX 2080 Ti.

At 1080p the 3950X was 8% slower, and once we push past 400 fps the limitations of the Ryzen processor become visible. Although we didn't test at 1080p before, it's interesting to see a similar 7% margin with the 2080 Ti. Of course, as in the previous titles, there's no difference between these two processors at 4K, even with the RTX 3080.

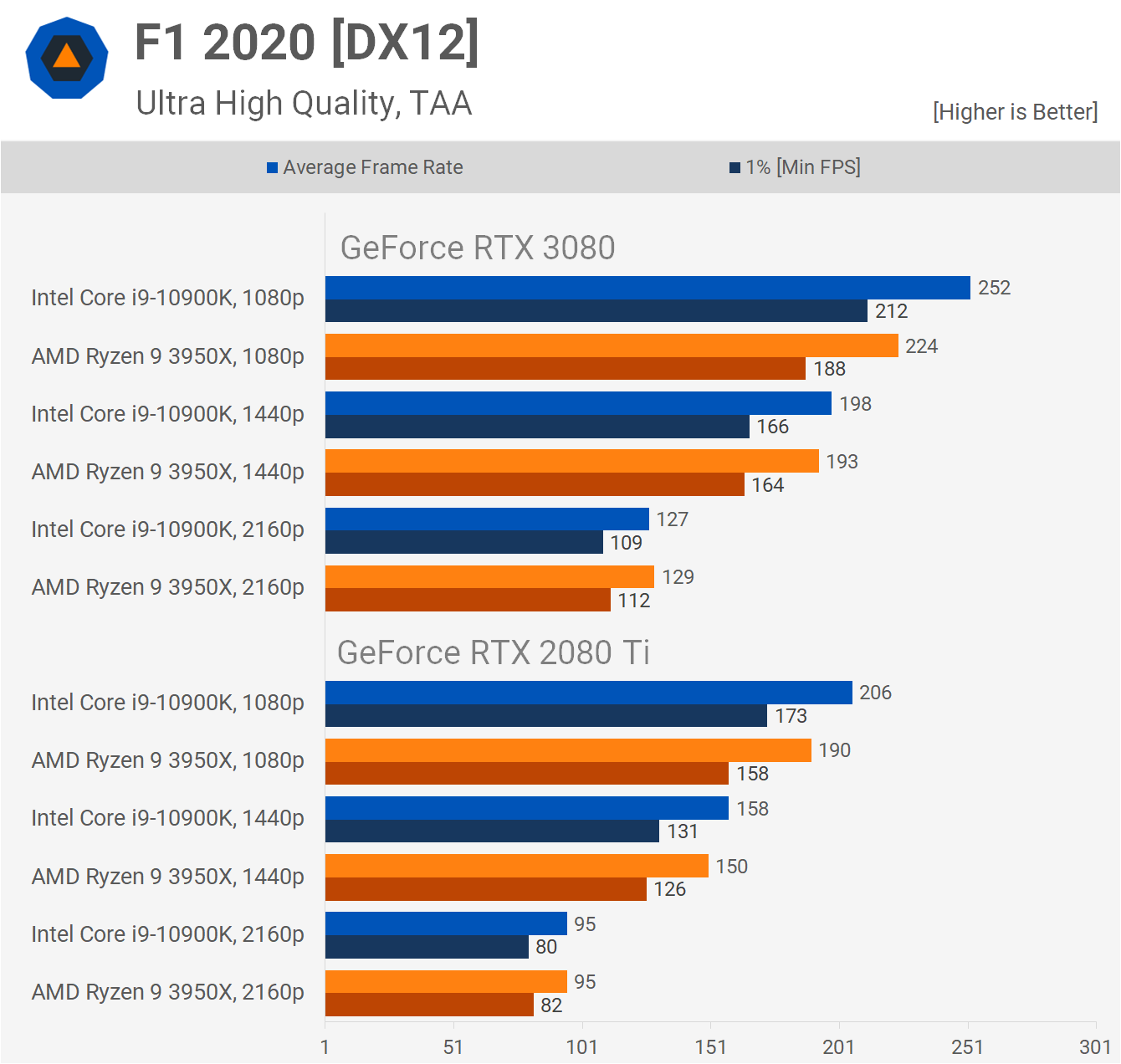

The F1 2020 results are similar to what we saw in Rainbow Six Siege: the 3950X is just a few percent slower than the 10900K at 1440p, not enough to influence the results overall.

We do see a more significant difference at 1080p where the 3950X was 11% slower. Then at 4K, both CPUs are able to maximize the performance of the RTX 3080.

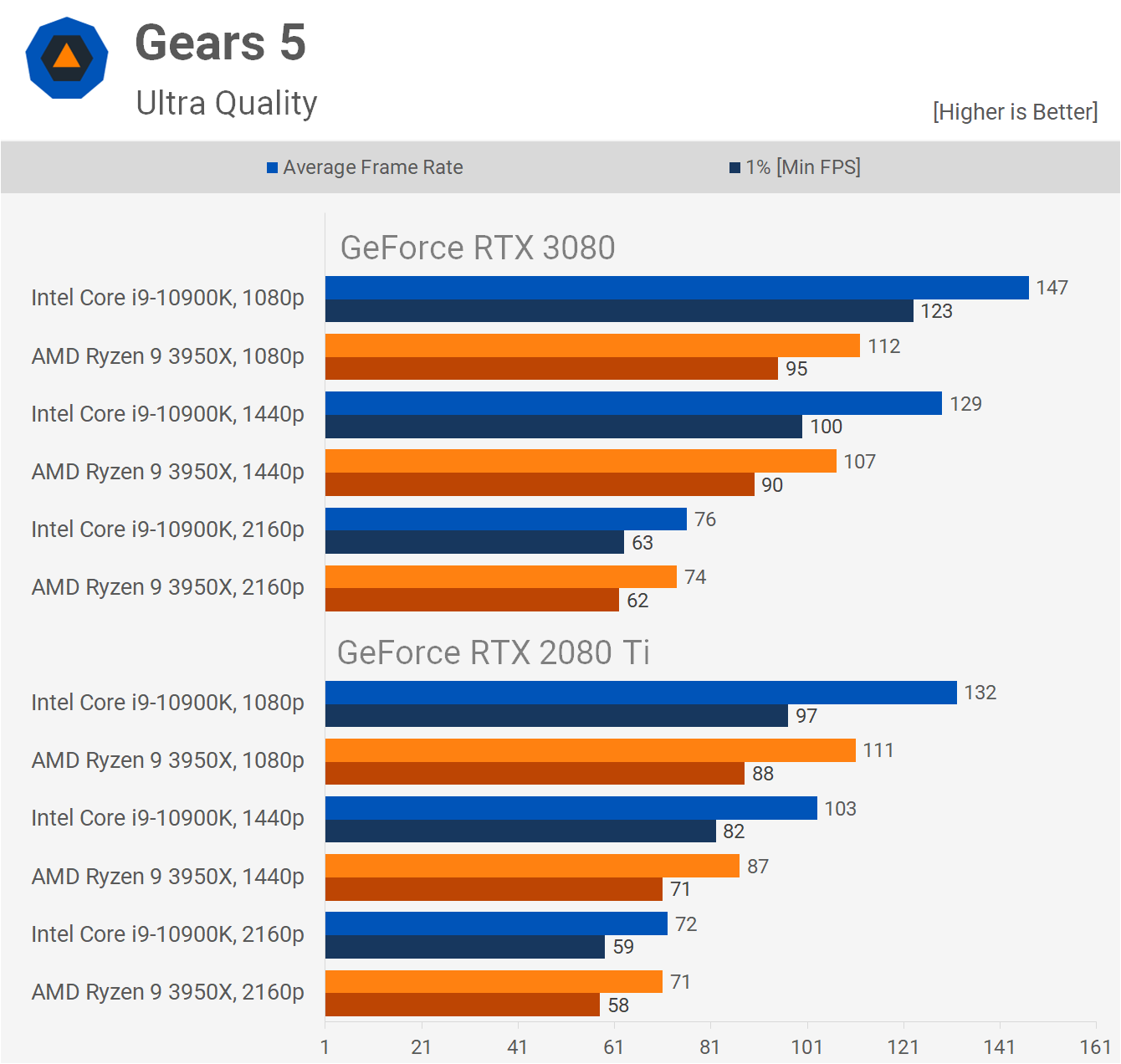

We ran into an issue testing with Gears 5 for our RTX 3080 review, but this is now solved. While 4K performance between the 3950X and 10900K is very similar as expected, the Ryzen processor does struggle at 1440p and 1080p in Gears 5.

Here we're looking at a 17% performance deficit with the RTX 3080 at 1440p which is less than ideal. That margin grows to 24% at 1080p.

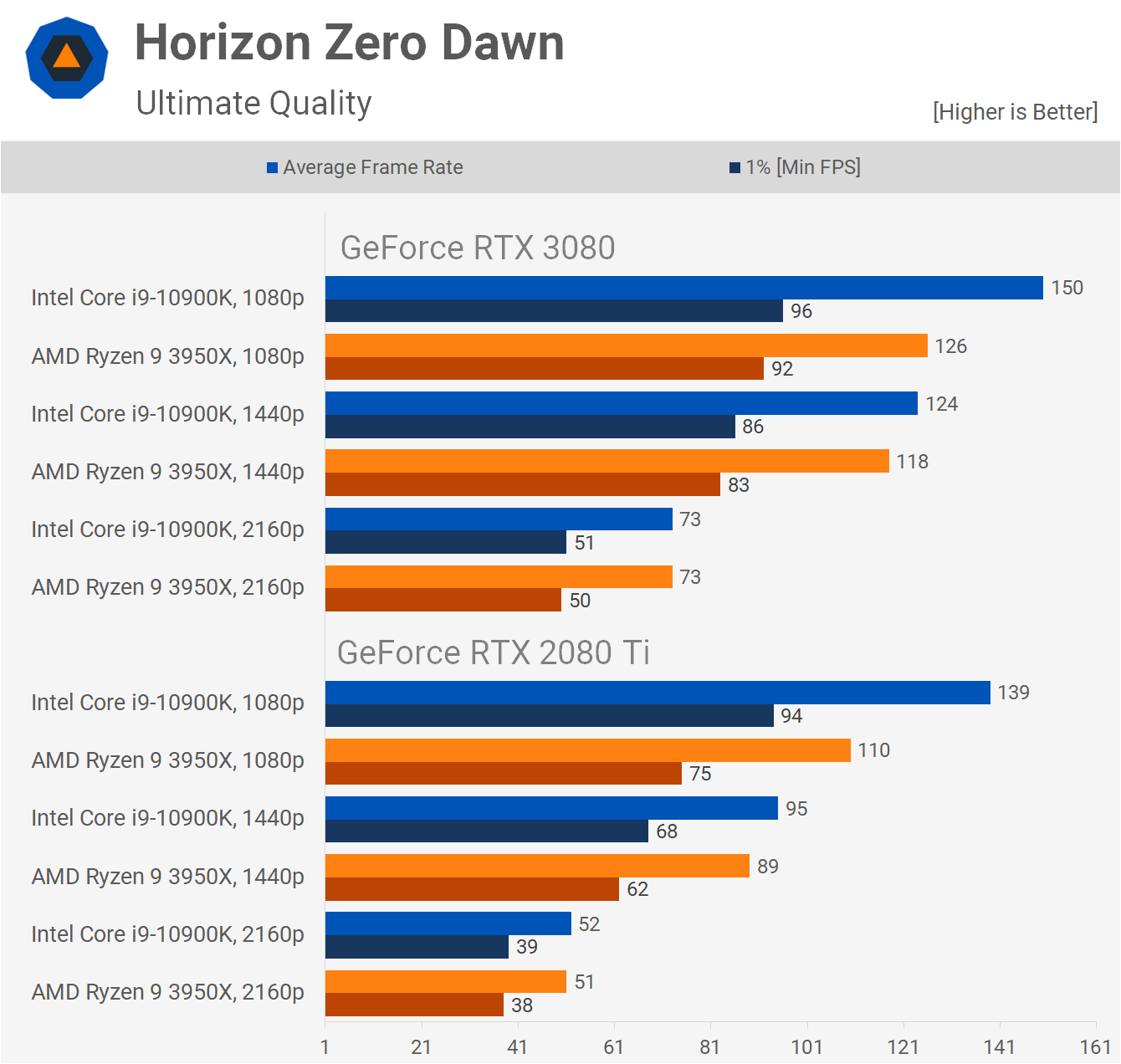

The Horizon Zero Dawn results are also interesting, as we see a serious performance penalty with the 3950X at 1080p, where the AMD processor was 16% slower with the RTX 3080.

By the time we get to 1440p that margin shrinks to just 5%, and at 4K we see no difference between the AMD and Intel processors. It's worth noting that the same 5% penalty at 1440p with the RTX 3080 was also seen with the 2080 Ti, so the margin doesn't change between those two GPUs when using an AMD processor as opposed to an Intel one.

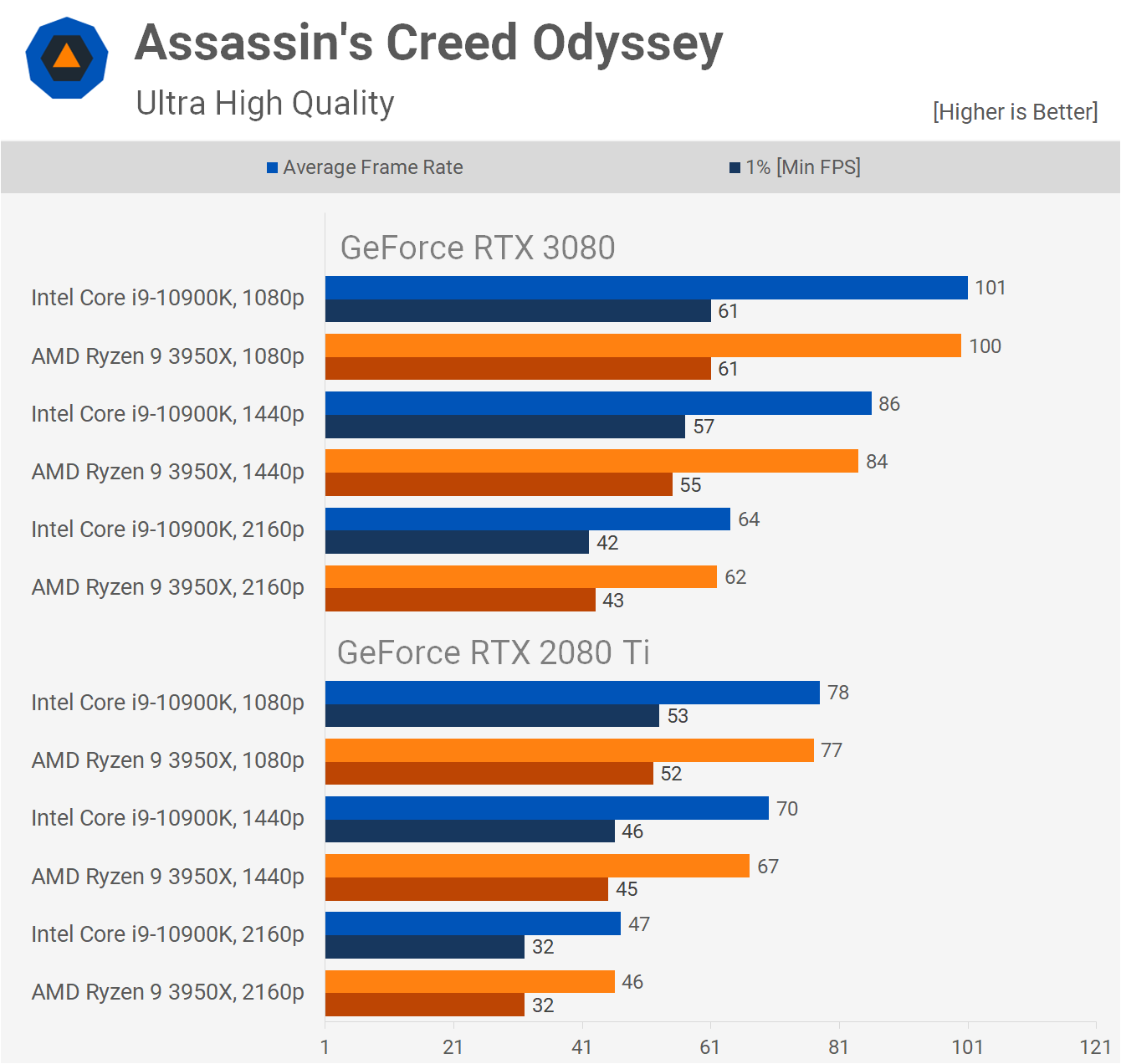

In Assassin's Creed Odyssey we're looking at comparable performance across the board, as the 10900K led by only 1-2 fps, a difference of 3% at most.

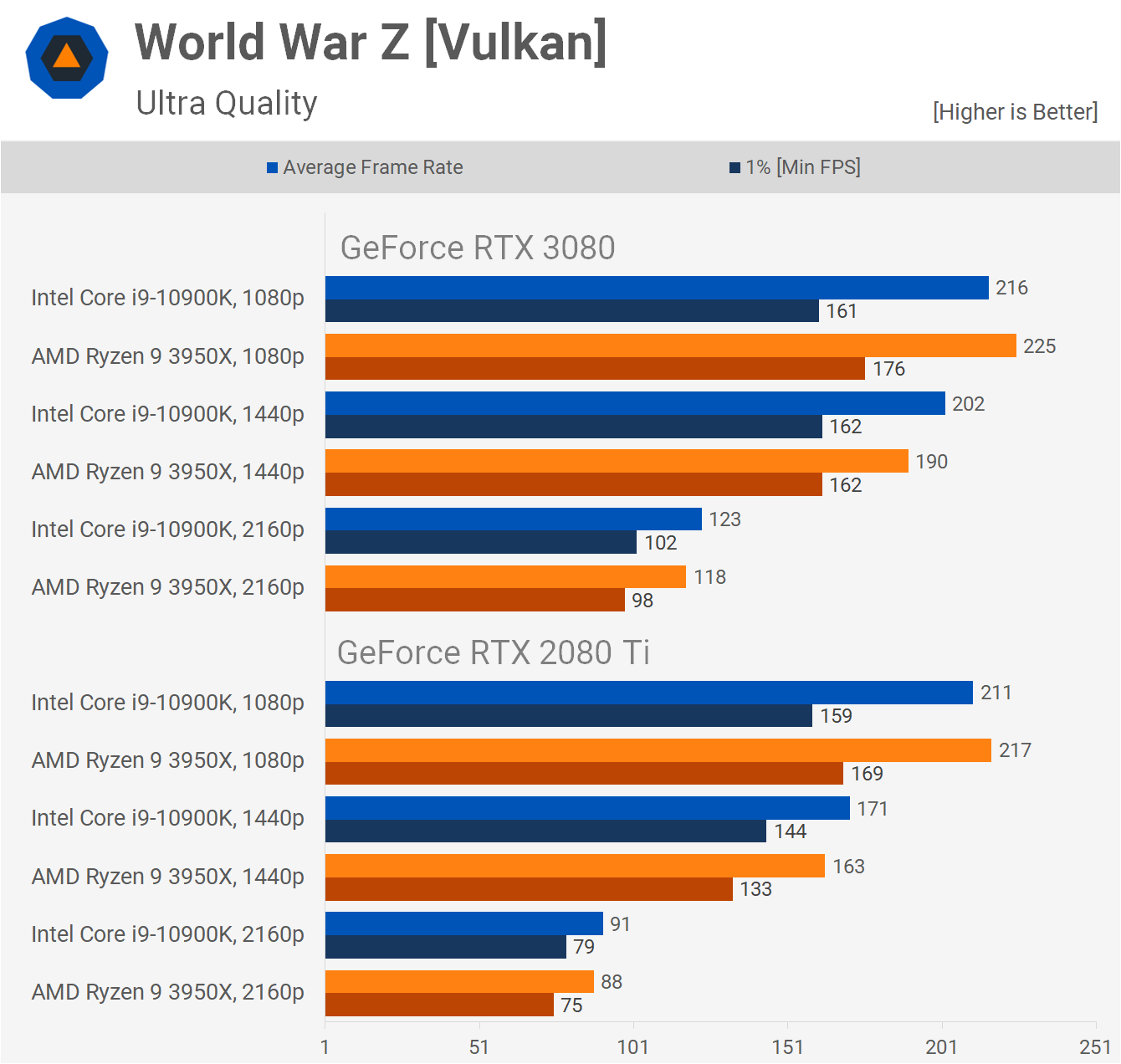

The World War Z results slightly favor Intel. Here the 3950X was 6% slower at 1440p with the RTX 3080 and 5% slower with the 2080 Ti, so again we're seeing a similar performance penalty with both GPUs. Then at 4K we're talking about a narrower 4% gap.

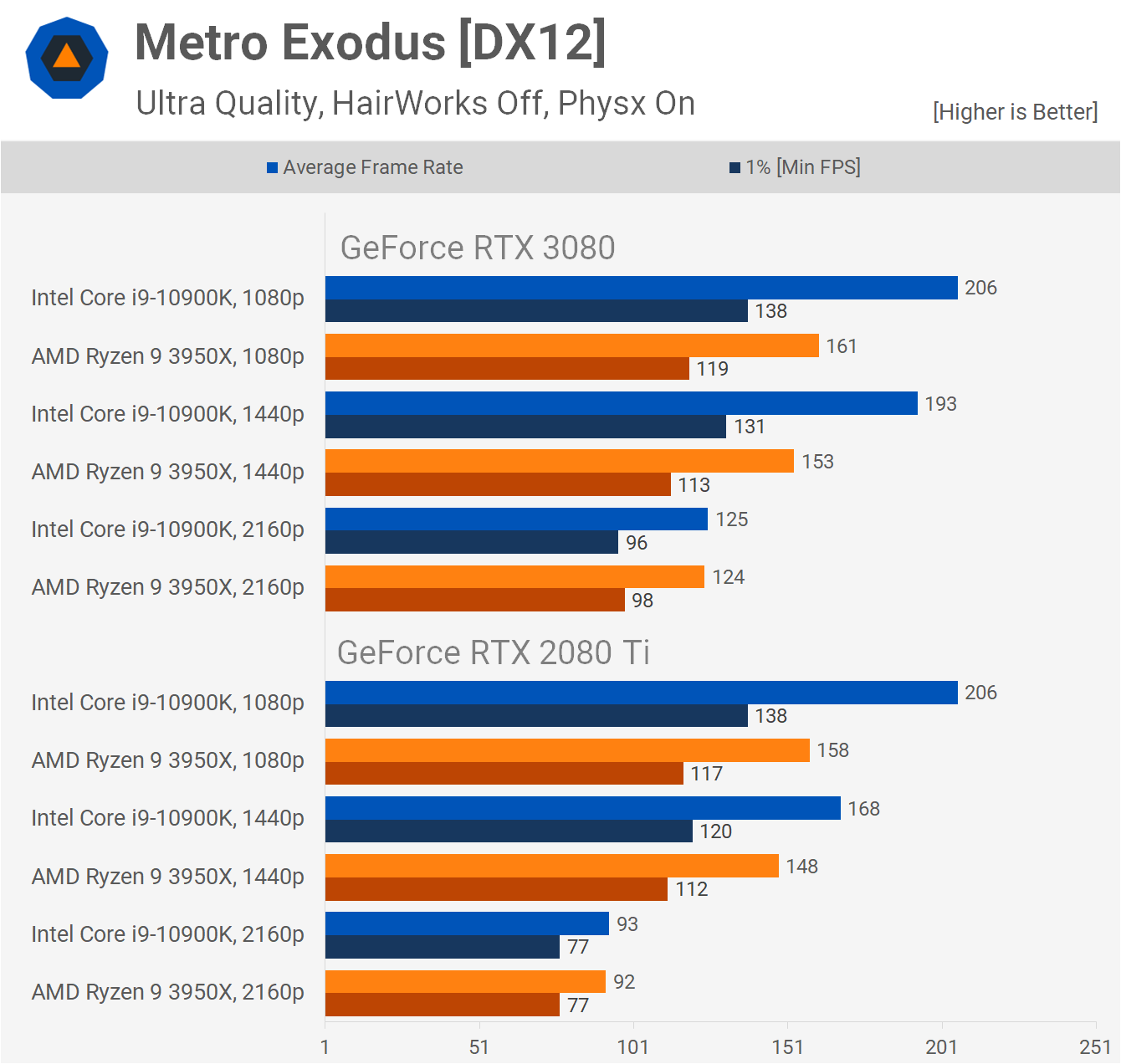

We have to admit we were really surprised by how weak the 3950X was in Metro Exodus, even at 1440p. For more recent benchmarks we've changed the section of the game we test in, choosing one with more typical draw distances that's less CPU-limited, and we'd argue it's an overall better benchmark for GPUs.

Previously the RTX 2080 Ti maxed out at 130 fps with the 10900K, but now it's able to render almost 170 fps, and this is a problem for the 3950X, which limits performance to around 150 fps.

We're now looking at a scenario where the 3950X limits performance of the RTX 3080 by as much as 21% at 1440p. Again, that's far from ideal, though we see no such performance limitation at 4K.

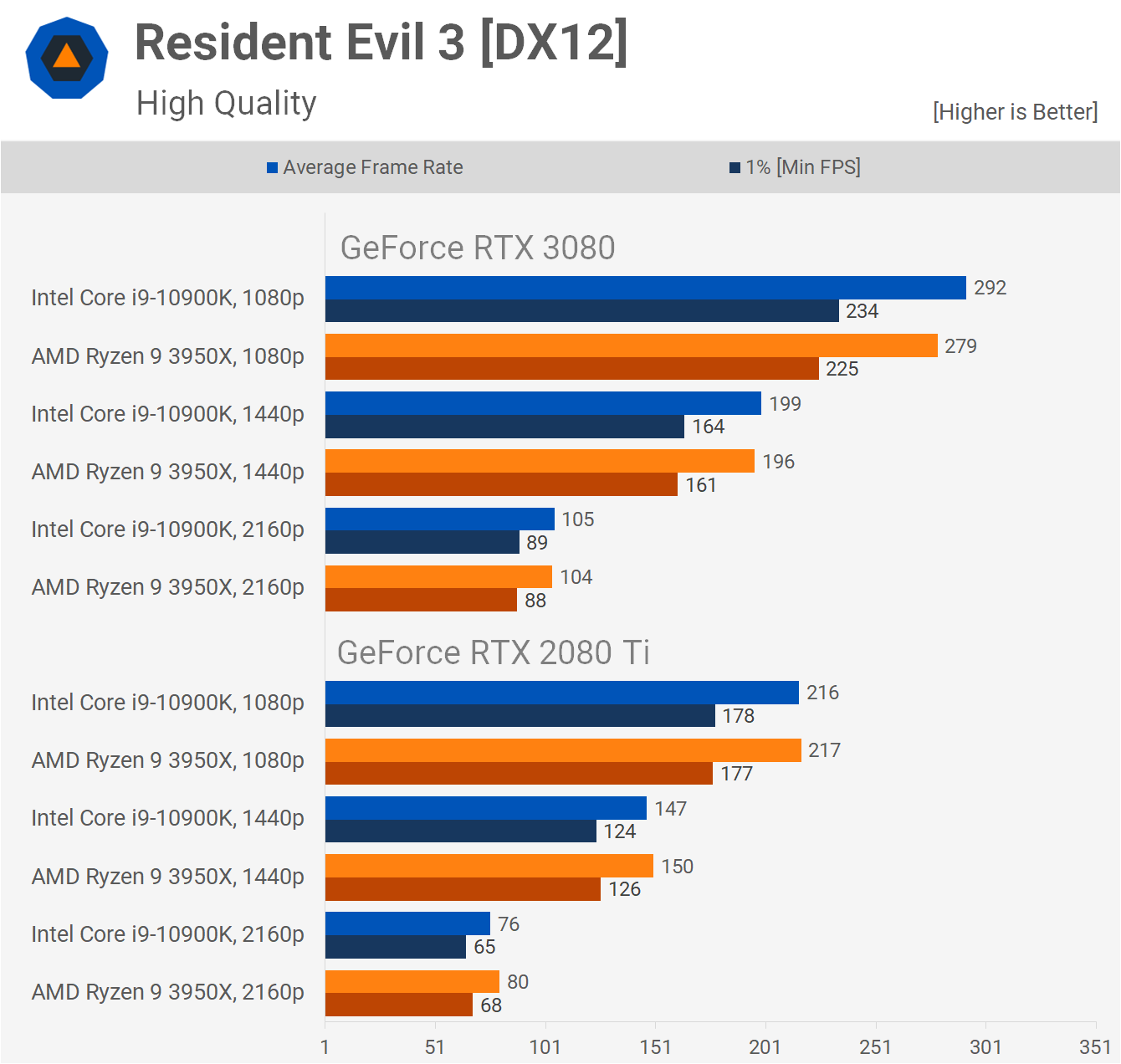

Resident Evil 3 sees no difference in performance between the 3950X and 10900K at 1440p and 4K with the RTX 3080. The same is also true even at 1080p with the 2080 Ti, though we are seeing a 4% difference with the RTX 3080.

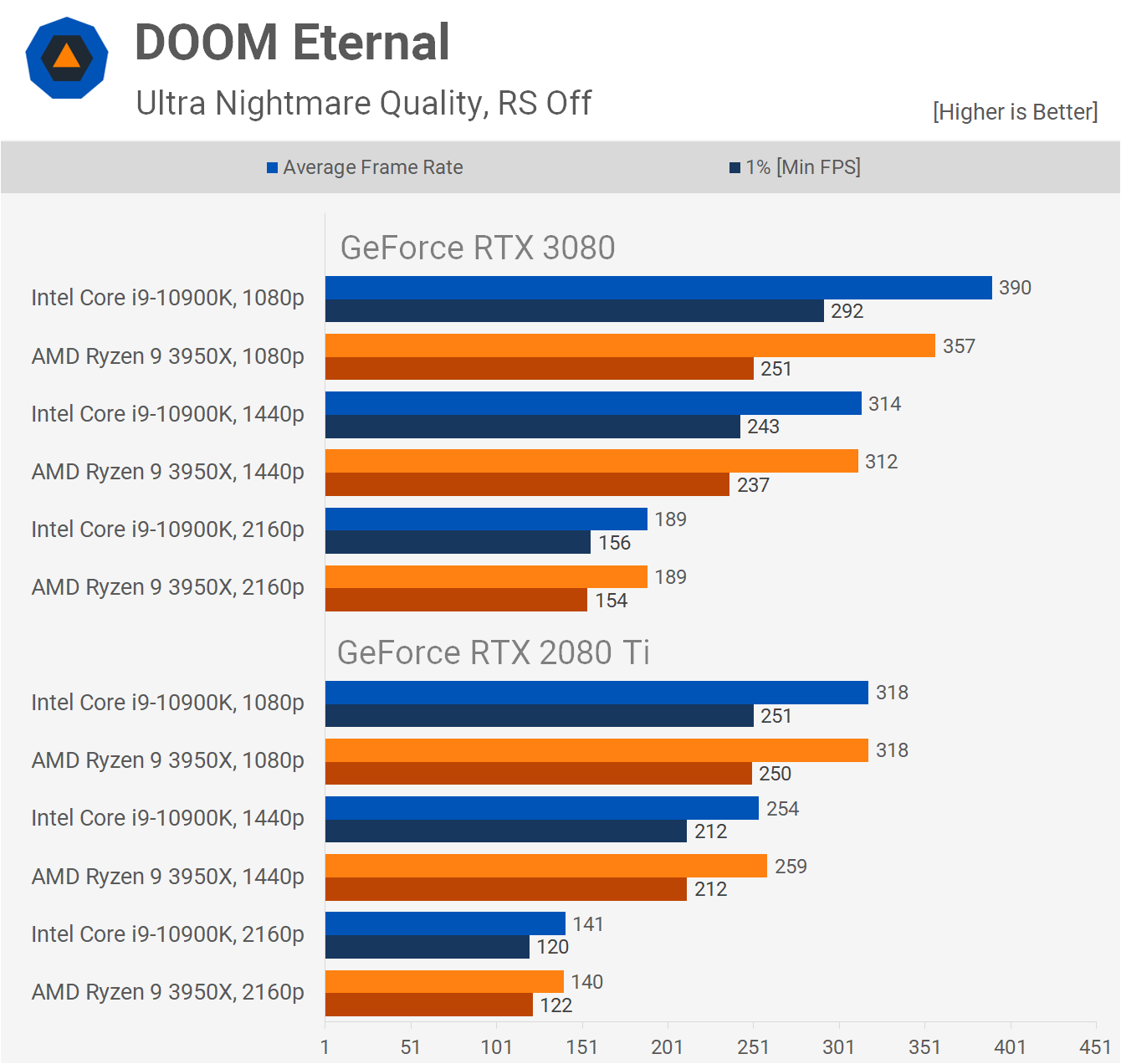

Doom Eternal is another game where we see no difference in performance with the RTX 3080 at 1440p and 4K using either the 3950X or 10900K. The 3950X was 8% slower at 1080p however.

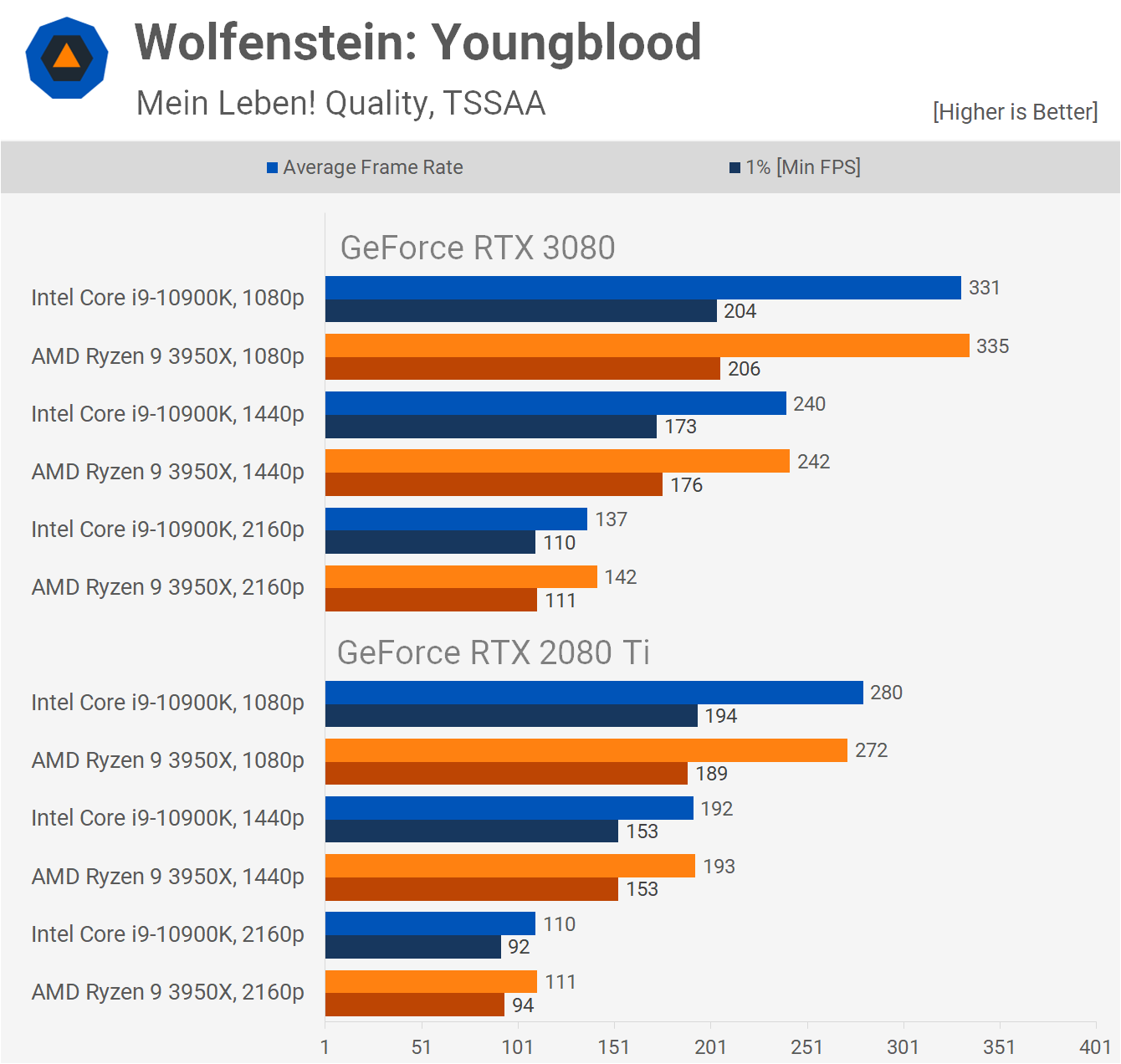

Wolfenstein: Youngblood shows no difference worth talking about between the 10900K and 3950X at 1440p and 4K. The 10900K was 8 fps faster than the 3950X with the RTX 3080 at 1440p, but that's still only a small 2% advantage.

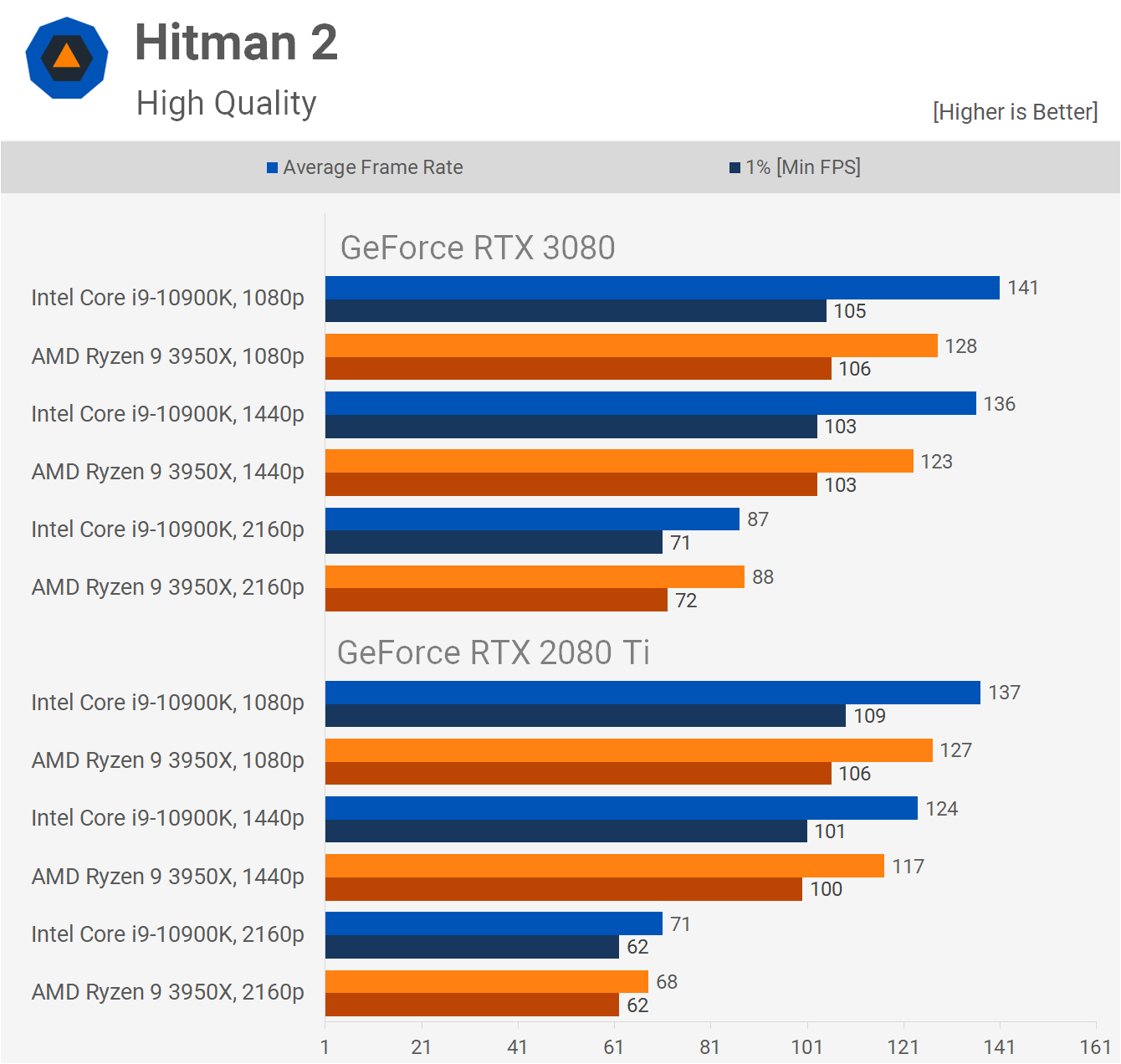

Hitman 2 is another title that separates the two processors, and it doesn't run particularly well on the 3950X. Here the AMD CPU was 10% slower at 1440p with the RTX 3080, though we see basically no difference at 4K.

Performance Summary

Having seen the results for 14 games, we've got to say things were a little messy with the Ryzen 9 3950X at times. Of course, the GeForce RTX 3080 is an extremely fast GPU and marginal changes in performance at this level won't make a difference in gameplay.

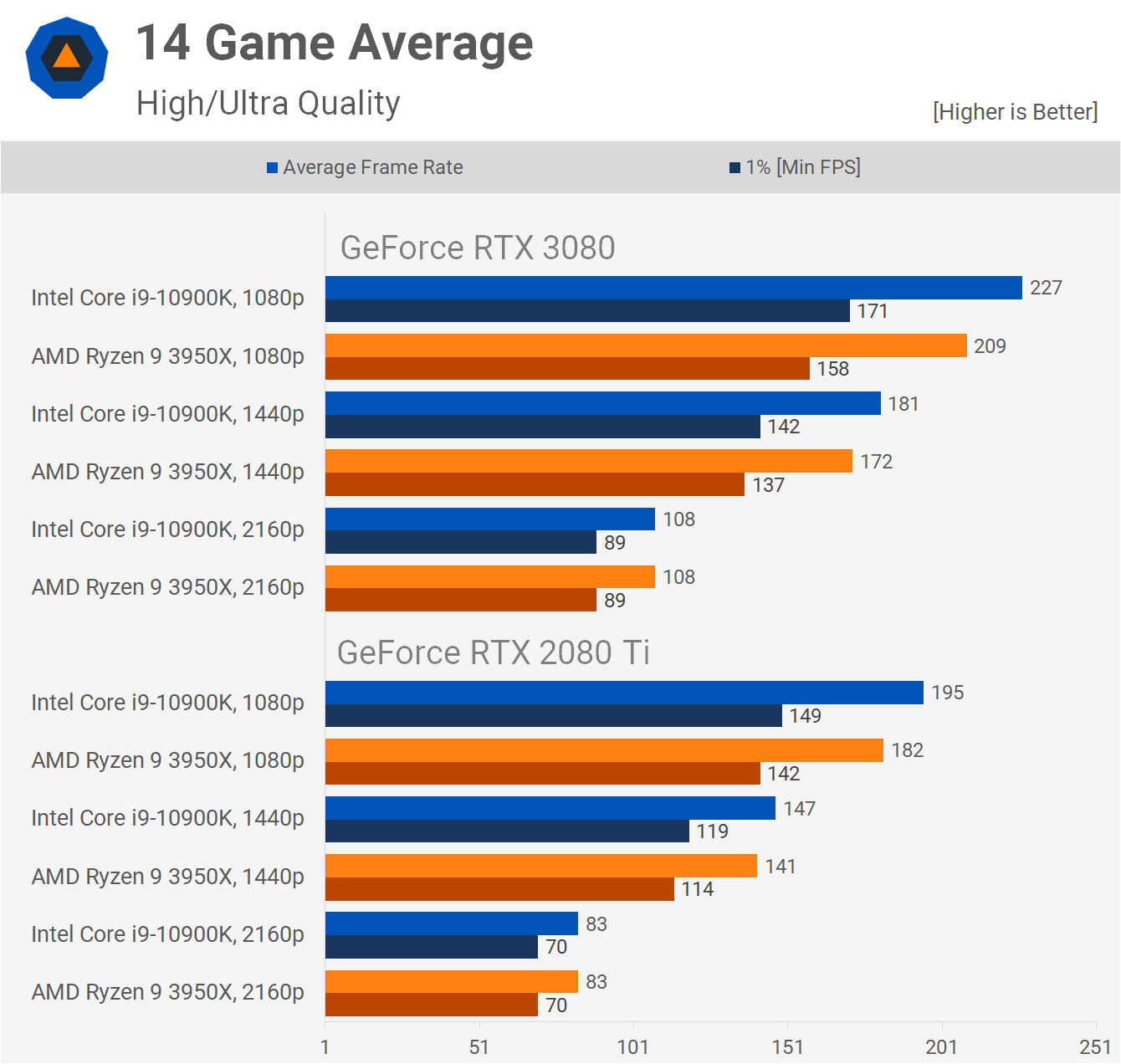

Some games were a match while in others Intel had a clear raw performance advantage, and for the most part we saw no real difference anywhere at 4K. Here's the 14-game average.

The data you see here is what we use to evaluate GPU performance and calculate stuff such as cost per frame, so these numbers are very important.
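Cost per frame is the card's price divided by its average frame rate. Here's a minimal sketch assuming that definition: the $700 is the RTX 3080 price cited earlier, the 140 fps average is a hypothetical placeholder, and the AMD figure simply applies the 5% deficit we measured at 1440p. The helper name is ours.

```python
def cost_per_frame(price_usd: float, avg_fps: float) -> float:
    """Dollars paid per frame of average performance (lower is better)."""
    return price_usd / avg_fps


PRICE = 700.0                        # RTX 3080 price cited in this article
AVG_FPS_INTEL = 140.0                # hypothetical multi-game average, 10900K
AVG_FPS_AMD = AVG_FPS_INTEL * 0.95   # 5% lower, like the 1440p average

print(f"10900K: ${cost_per_frame(PRICE, AVG_FPS_INTEL):.2f}/frame")
print(f"3950X:  ${cost_per_frame(PRICE, AVG_FPS_AMD):.2f}/frame")
```

A 5% lower average raises cost per frame by about 5.3% (1 / 0.95), which is why even modest CPU-induced margins show up in this metric.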

We see that at 1440p the 3950X was 5% slower than the 10900K, which can influence the findings. That said, it was also 4% slower with the 2080 Ti, so when comparing these two GPUs you're going to come to the same conclusion regardless of which CPU is used. It's a similar story at 1080p, where the 3950X was 8% slower with the RTX 3080 but also 7% slower with the 2080 Ti.

What We Learned

Out of the 14 games tested, half showed virtually no difference in performance at 1440p and 4K between the Ryzen 9 3950X and Core i9-10900K. These include Doom Eternal, F1 2020, Resident Evil 3, Rainbow Six Siege, Shadow of the Tomb Raider, Wolfenstein: Youngblood and Assassin's Creed Odyssey.

We saw single-digit margins at 1440p in Death Stranding, Horizon Zero Dawn and World War Z.

The bad titles for AMD include Gears 5, Hitman 2, Metro Exodus and Microsoft Flight Simulator 2020, though none of these showed a difference at 4K.

Lumping all that data together, the 3950X was 5% slower on average at 1440p, but it isn't skewing the comparison against other GPUs like the 2080 Ti by nearly that much. We estimate the skew is closer to 1-2%, as those slower GPUs still suffer a small performance penalty too.
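The arithmetic behind that estimate can be sketched in a few lines, assuming the average deficits apply uniformly across games: the RTX 3080's lead over the 2080 Ti shrinks by the ratio of the two CPU penalties, not by the full 5%. The function name is our own.

```python
def comparison_skew(deficit_new_gpu: float, deficit_old_gpu: float) -> float:
    """
    Percent by which a slower CPU understates a new GPU's lead over an older one.
    Each deficit is the CPU's percent shortfall with that GPU.
    """
    ratio = (1 - deficit_new_gpu / 100) / (1 - deficit_old_gpu / 100)
    return (1 - ratio) * 100


# 1440p averages from this article: 3950X was 5% slower with the RTX 3080, 4% with the 2080 Ti.
print(f"Skew vs 2080 Ti: {comparison_skew(5, 4):.1f}%")
```

Plugging in the rounded 5% and 4% averages gives roughly a 1% skew, which sits at the low end of our 1-2% estimate. If both penalties were equal, the skew would be zero.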

There are a few things we could have done to reduce that margin, such as using faster memory. DDR4-3800 CL16 boosts performance by about 4%, and manually tuning the timings can boost performance by a further 14%, at least according to what we saw at 1080p with the RTX 2080 Ti. In hindsight, maybe that's something we should have done, but we opted not to, as tuning memory timings on a test system can get complicated and risks causing stability issues.
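If those two gains stack multiplicatively, with the timing tweak applied on top of the DDR4-3800 configuration, the combined uplift is slightly more than their simple sum. Here's a minimal sketch under that assumption; the helper name is ours, and the inputs are the figures from our 1080p testing with the RTX 2080 Ti.

```python
from math import prod


def stacked_gain(gains_percent: list[float]) -> float:
    """Combine successive percentage gains multiplicatively (each applied on top of the last)."""
    return (prod(1 + g / 100 for g in gains_percent) - 1) * 100


# DDR4-3800 CL16 (~4%), then manual timing tuning (a further ~14%).
print(f"Combined uplift: {stacked_gain([4, 14]):.1f}%")
```

Under this assumption the two steps combine to roughly 18.6% rather than 18%; if the 14% was instead measured against the original baseline, the total would simply be 14%.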

We could have also stuck with our Core i9-10900K test system, but after seeing the response from readers, it seems like giving the 3950X a shot was worth it. Most importantly, we were going to come to the same conclusion when testing the RTX 3080 with either CPU.

For example, TechPowerUp found the RTX 3080 to be 52% faster than the 2080 at 1440p, based on a 23-game sample, using a 9900K clocked at 5 GHz with DDR4-4000 memory. That is very much in line with our own data: we found the RTX 3080 to be 49% faster than the 2080 on average. Looking around at all the usual suspects, the consensus seems to be that overall the RTX 3080 is about 50% faster than the RTX 2080 at 1440p and about 70% faster on average at 4K.
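Note that "X% faster" and "Y% slower" aren't symmetric, which matters when reading the figures above. Here's a minimal sketch of the conversion, using the approximate leads quoted here; the helper names are ours.

```python
def percent_faster(perf: float, reference_perf: float) -> float:
    """How much faster `perf` is than `reference_perf`, in percent."""
    return (perf / reference_perf - 1) * 100


def faster_to_slower(percent: float) -> float:
    """Convert 'A is X% faster than B' into 'B is Y% slower than A'."""
    return (1 - 1 / (1 + percent / 100)) * 100


# Approximate RTX 3080 leads over the RTX 2080 quoted above: ~50% (1440p), ~70% (4K).
for lead in (50, 70):
    print(f"3080 {lead}% faster -> 2080 {faster_to_slower(lead):.1f}% slower")

# TechPowerUp's 52% vs our 49%, as normalized performance (RTX 2080 = 1.0).
print(f"Relative gap: {percent_faster(1.52, 1.49):.1f}%")
```

So a 50% lead for the RTX 3080 means the RTX 2080 is about 33% slower, and the 3-point gap between TechPowerUp's 52% and our 49% amounts to only about a 2% difference in measured performance.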

Do we think it was a mistake to test with the Ryzen 9 3950X? In some ways, yes, the margins seen in Gears 5, Hitman 2, Metro and Flight Simulator 2020 are less than ideal, and while it didn't really change the overall picture, we'd rather not be CPU bound at 1440p in those titles.

Going into this we were torn as to which way we should go, and frankly, having now reviewed the RTX 3080, we still are. There are definitely pros and cons to either option, so we'll take some comfort in the fact that we tested on the platform most of you wanted to see. Looking ahead, we predict we'll be moving to a Zen 3-based test system in a few months' time, so regardless of which way we went, doing it all over again relatively soon is inevitable.