EA's latest Battlefield title recently debuted and has arrived with some gameplay and connectivity issues. We're not going to waste time talking about gameplay or reviewing this title, though; instead, we'll take the opportunity to measure graphics card performance, so you can get an idea of what you'll need to get into the action.

Battlefield 2042 is powered by the Frostbite game engine. Developer DICE is relying on the third iteration of the engine, which was also used by Battlefield 5, Battlefield 1, Battlefield Hardline and Battlefield 4. As you'll know if you've seen any gameplay of this latest title, it runs on a modified or upgraded version of Frostbite that supports new weather effects, amongst other things.

Visually, Battlefield 2042 is breathtaking and certainly one of the best-looking games we've experienced. We first jumped in with an RTX 3090 at 4K and frame rates were decent: not as high as we'd like for competitive gameplay, but surprisingly good given the visuals. Still, we couldn't help but think the game was going to murder mid-range hardware and that even 1080p would be a struggle with a modest graphics card, but we have to say, it's far better optimized than we expected.

That isn't to say the game is without issues. Of course, we had to deal with the crappy Origin launcher and the trashy DRM that locks you out after five hardware changes, but after buying a little over half a dozen EA Play accounts for a month, plus my personal Steam version, I have been able to test a good number of GPUs over the past 3 days.

So, let's talk about testing, as Battlefield games are always fun in that regard. Given this is a multiplayer-only game, we're forced to test multiplayer, and while I'm sure many of you would love us to jump into a 64-player Conquest match to do all of our testing, it's simply neither feasible nor accurate.

Even if we were only comparing two different hardware configurations, you'd still need dozens of benchmark runs just to get a ballpark comparison. That's due to the dynamic nature of multiplayer games: depending on where other players are on the map and what they're doing, system performance can vary quite a lot, making results inconsistent from one run to the next.

Our workaround here was to use the new 'Portal' mode to create our own benchmark server with AI players, as this would be both CPU and GPU heavy and would likely do a good job of representing real-world performance. Sadly, this being an EA game, that feature didn't work for the first 2 days of testing, so we were unable to try it.

Instead, we've used the Arica Harbor 'free-for-all' custom experience without any other players. Now, before you stamp your feet in protest, claiming this isn't an accurate way to test because it doesn't replicate the kind of load you'll see in a big multiplayer game, that's not actually true for a GPU test. I know this because I spent a lot of time comparing both modes on the same map.

The reason is that additional players don't massively increase the GPU load, but rather the CPU load, and because we're testing with a Ryzen 9 5950X using low-latency memory, at no point did either test mode come anywhere near being CPU limited. In fact, frame rates were almost always close between the two modes, but it was easier to control the test scene without players shooting at me.

To be clear, for GPU testing this mode is perfectly fine, and since we're only interested in GPU performance for this video, the method works. What it's not suitable for is CPU testing, and for that we'll use a completely different method, hopefully using Portal to create a custom match.

For testing we have data from 33 different GPUs at 3 resolutions and 2 visual quality presets. Then for a bonus, we've included ray tracing results as well. Our testbed is powered by the Ryzen 9 5950X and 32GB of dual-rank, dual-channel DDR4-3200 CL14 memory. Now let's jump into the data.
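
Each result is reported as an average frame rate plus a '1% low', and the figures come from a short multi-run average (3 runs, as noted in the 1440p results). The article doesn't say exactly how the 1% low is calculated, so the sketch below assumes one common definition: the frame rate equivalent of the mean frame time across the slowest 1% of frames. Some outlets use the 99th-percentile frame time instead, which yields a somewhat higher number. The function names and the sample frame times are hypothetical, not captured data.

```python
import math
from statistics import mean


def avg_fps(frame_times_ms):
    """Average frame rate for one run: frames rendered divided by seconds elapsed."""
    total_seconds = sum(frame_times_ms) / 1000.0
    return len(frame_times_ms) / total_seconds


def one_percent_low(frame_times_ms):
    """Assumed definition: fps equivalent of the mean frame time of the
    slowest 1% of frames (always including at least one frame)."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, math.ceil(len(slowest_first) / 100))
    return 1000.0 / mean(slowest_first[:count])


def summarize(runs):
    """Average each metric across several runs of the same test pass."""
    return (
        mean(avg_fps(run) for run in runs),
        mean(one_percent_low(run) for run in runs),
    )


# Hypothetical captures: 99 frames at 10 ms plus one 20 ms hitch, then a clean run.
run_a = [10.0] * 99 + [20.0]
run_b = [10.0] * 100
print(summarize([run_a, run_b]))
```

Under this definition, the single 20 ms hitch pulls the first run's 1% low down to 50 fps while barely moving its average, which is why the 1% low exposes stutter that an average hides.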

Benchmarks

Ultra Quality Performance

Starting with the 1080p ultra quality data we find that the GeForce RTX 3090, 3080 Ti, Radeon RX 6900 XT and RTX 3080 all pushed up over 150 fps while keeping the 1% low above 100 fps, so great performance there given the visuals.

The 6800 XT was also right there, basically matching the RTX 3080.

Then we see a bit of a drop down to the RX 6800 and RTX 3070 Ti, but again both did maintain over 100 fps at all times. The RTX 2080 Ti, RTX 3070, 6700 XT and 3060 Ti were all fairly comparable and did average over 100 fps.

Then we drop down another performance tier with the RTX 2080, 2070 Super, 5700 XT, 6600 XT, 1080 Ti and RTX 3060. Here we have yet another new game where the 5700 XT is found punching well above its weight, beating not just the 1080 Ti, but basically matching the 2070 Super, a product that, if you remember, cost $100 more. It's a very impressive result from the 5700 XT; the 6600 XT was a lot less impressive, but we've come to expect that.

Now if we head down towards a 60 fps average, we find the GTX 1080, 1660 Ti and Vega 56. Vega does well here too: traditionally you wouldn't have expected Vega 56 to almost match the GTX 1080, but that's what we're seeing, and it meant Vega 56 beat the GTX 1070, which only just managed to average 60 fps.

Then we have the 5500 XT 8GB hanging in there with 54 fps on average, making it only slightly faster than the never-say-die RX 580, which edged out its competitor, the GTX 1060. Meanwhile, the 4GB cards all died a slow and painful death: the 4GB version of the 5500 XT was unable to deliver playable performance, while the GTX 1650 series cards were almost impossible to test.

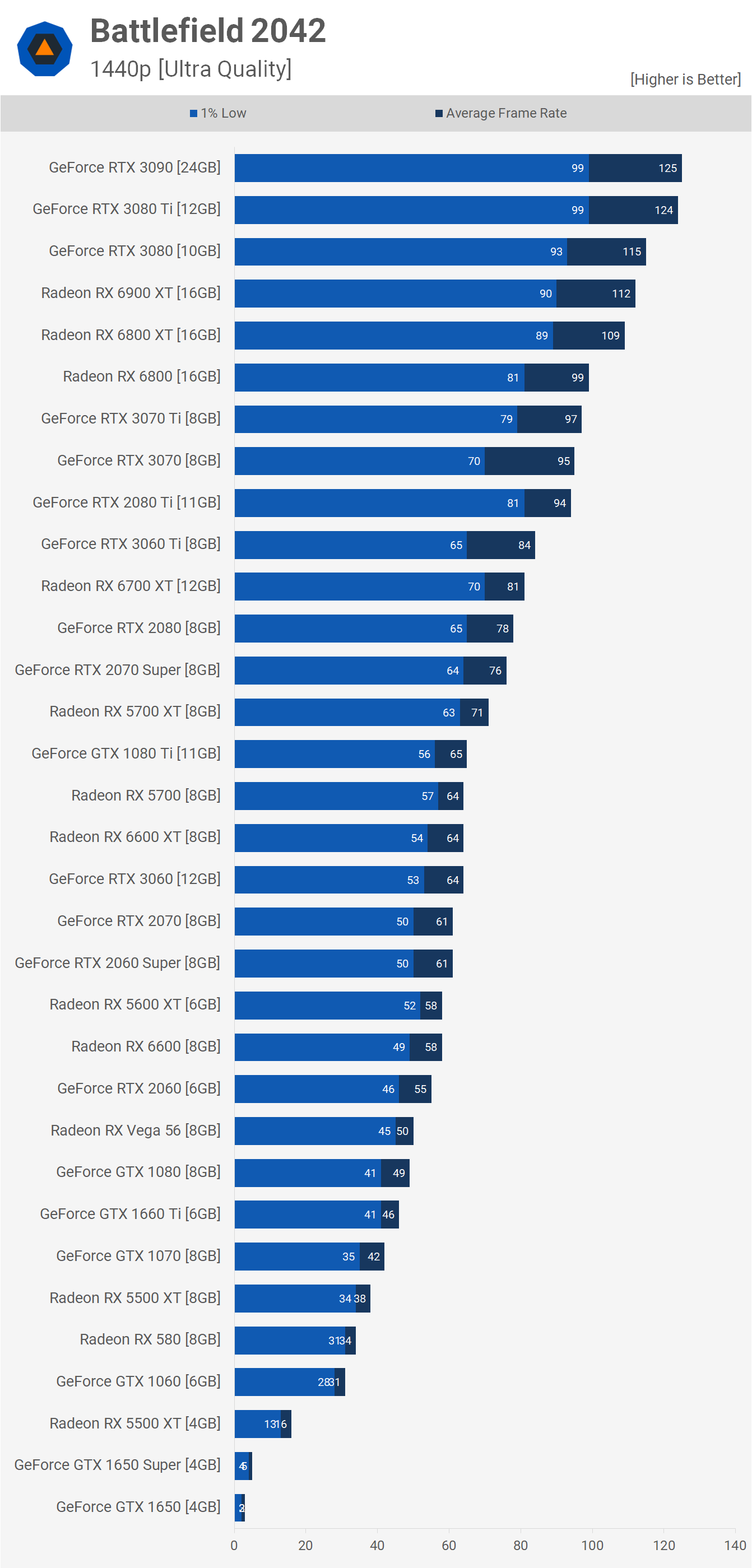

Jumping up to 1440p we see that the Ampere GPUs start to take over with the RTX 3090, 3080 Ti and 3080 all pulling ahead of the 6900 XT in our test. The 6900 XT averaged 112 fps, making it a few frames faster than the 6800 XT which was 10% faster than the vanilla 6800.

The Radeon RX 6800 did compete well with the RTX 3070 Ti and 3070, and I got the feeling that VRAM was starting to become an issue here for some of the faster 8GB cards. The 2080 Ti, for example, felt smoother than the newer RTX 3070, despite the frame rates being almost identical. It's possible after a longer test period that the 3070 would start to struggle with memory usage.

Again, frame time consistency was better with the 12GB 6700 XT, though as we drop down the list, the slower 8GB cards seem less fazed by VRAM consumption, at least in our fairly short 3-run average testing. The 5700 XT can be found punching above its weight once again, almost matching the 2070 Super, making it a good bit faster than the RTX 2060 Super and 2070.

The RTX 3060 also performed well with just over 60 fps on average and then we see the 2060 Super and 2070 basically hitting 60 fps. The 5600 XT and 6600 were able to deliver a good playable experience just shy of 60 fps. The RTX 2060 did well at 1440p despite the more limited 6GB VRAM buffer.

Interestingly, the move up to 1440p saw Vega 56 match the GTX 1080, an impressive result for the old GCN architecture. Most of the Pascal GPUs struggled at this resolution, and below the GTX 1070 we see that the 5500 XT and RX 580 weren't really playable, at least by competitive online shooter standards. Anything with just 4GB of VRAM was unplayable using the ultra quality settings.

Moving to 4K, we have a lot less usable data. The RTX 3090 pumped out an impressive 80 fps, which made for a breathtaking experience. This may be less than what I'd want for a multiplayer shooter, but as far as the visual experience goes, it was incredible. The same can be said about the 3080 Ti and even the standard RTX 3080. The 6900 XT and 6800 XT were less impressive, as the 1% low wasn't kept above 60 fps, but overall it was still a nice experience.

For those wanting to keep the average above 60 fps, you'll find yourself struggling with an RTX 3070 Ti or RX 6800, and by the time we get down to the 5700 XT and 2070 Super, we were struggling to keep the frame rate above 40 fps.

Medium Quality Performance

Let's dial back the quality preset a few notches from 'Ultra' to 'Medium'. Doing so greatly reduces VRAM requirements and, of course, the GPU horsepower required. As a result, the 6900 XT is now pushing near 200 fps at 1080p, with most current-generation high-end GPUs good for over 170 fps. In fact, if we scroll down to previous-generation mid-range parts like the 5600 XT and RTX 2060, we find they're good for just over 100 fps under these conditions.

Incredibly, most graphics cards are able to deliver highly playable performance at 1080p using the medium quality preset. For 60 fps you need only an RX 580, 1650 Super or GTX 1060.

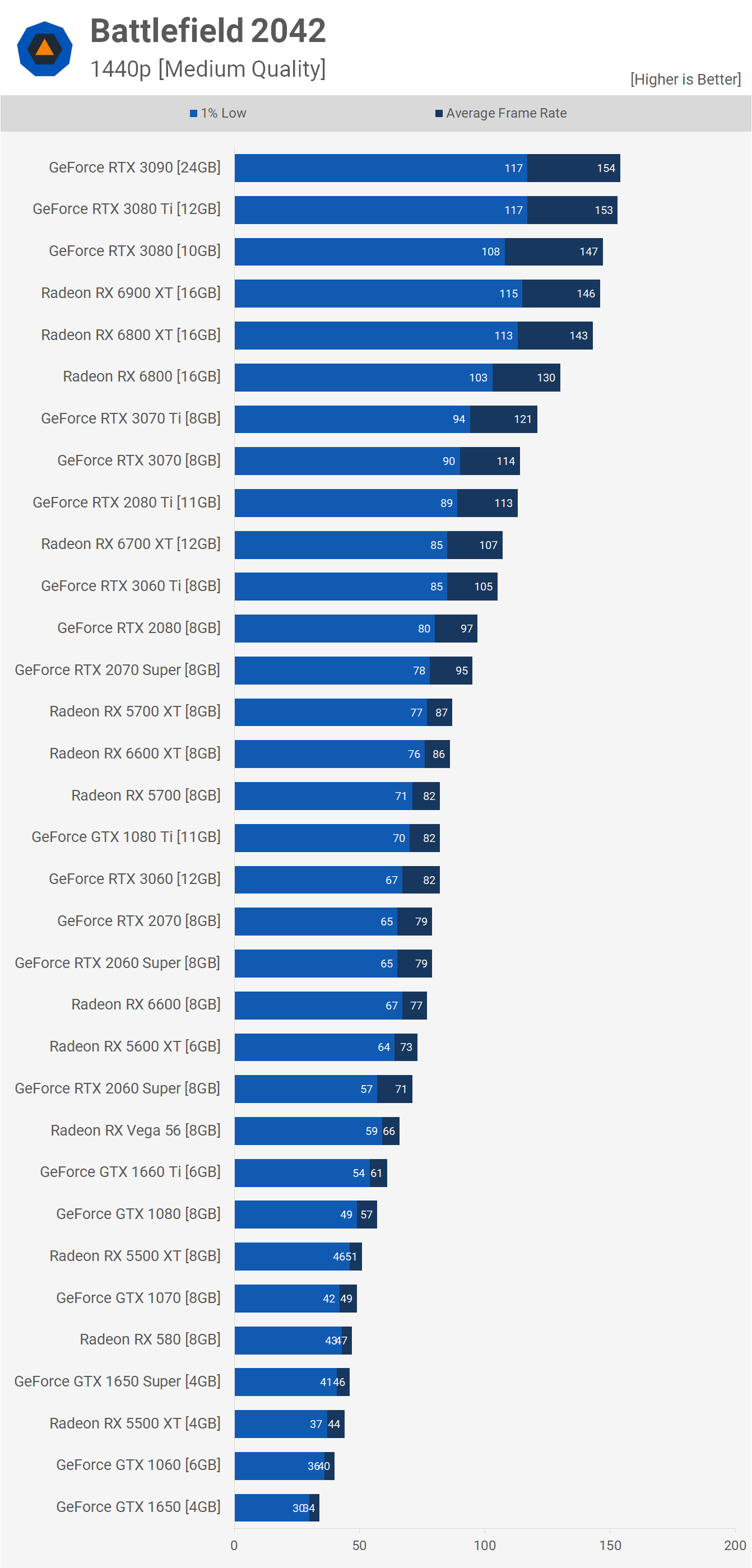

Jumping up to 1440p still sees most GPUs able to deliver highly playable performance with the medium quality preset. Again the high-end current generation GPUs are pushing over 140 fps with previous gen models still easily breaking the 100 fps barrier.

We see that the 2070 Super is a bit faster than the 5700 XT with 95 fps on average versus 87, while the 1080 Ti was good for 82 fps. Even Vega 56 performed well with 66 fps on average and incredibly that got it very close to the 2060 Super. Meanwhile the RX 580 did dip down to 47 fps on average, but that still meant it was 18% faster than the GTX 1060 6GB.
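
Relative comparisons like these depend on which card is the baseline: "A is X% faster than B" divides by B's result, while "B is Y% slower than A" divides by A's, so the two percentages for the same gap differ. A minimal sketch using the 2070 Super (95 fps) and 5700 XT (87 fps) 1440p medium averages quoted above; the helper names are hypothetical.

```python
def percent_faster(a_fps, b_fps):
    """Percent by which card A outperforms card B, using B as the baseline."""
    return (a_fps / b_fps - 1.0) * 100.0


def percent_slower(a_fps, b_fps):
    """Percent by which card A trails card B, using B as the baseline."""
    return (1.0 - a_fps / b_fps) * 100.0


rtx_2070_super, rx_5700_xt = 95.0, 87.0  # 1440p medium averages from the text
print(f"2070 Super vs 5700 XT: {percent_faster(rtx_2070_super, rx_5700_xt):.1f}% faster")
print(f"5700 XT vs 2070 Super: {percent_slower(rx_5700_xt, rtx_2070_super):.1f}% slower")
```

The same 8 fps gap therefore reads as roughly 9.2% faster from the 5700 XT's perspective, or 8.4% slower from the 2070 Super's.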

At 4K resolution the high-end Ampere GPUs come up just short of 100 fps, which is a great result. The 6900 XT averaged 86 fps, making it slower than the RTX 3080. Further down the stack, the RX 6800 did well, edging out the 3070 Ti and comfortably beating the standard 3070.

For around 60 fps, you'll need the RTX 3060 Ti, 2080 Ti or 3070, with the Radeon RX 6700 XT falling just short at 58 fps on average. Below that, you're best off lowering the resolution.

Ultra Quality Performance with Ray Tracing

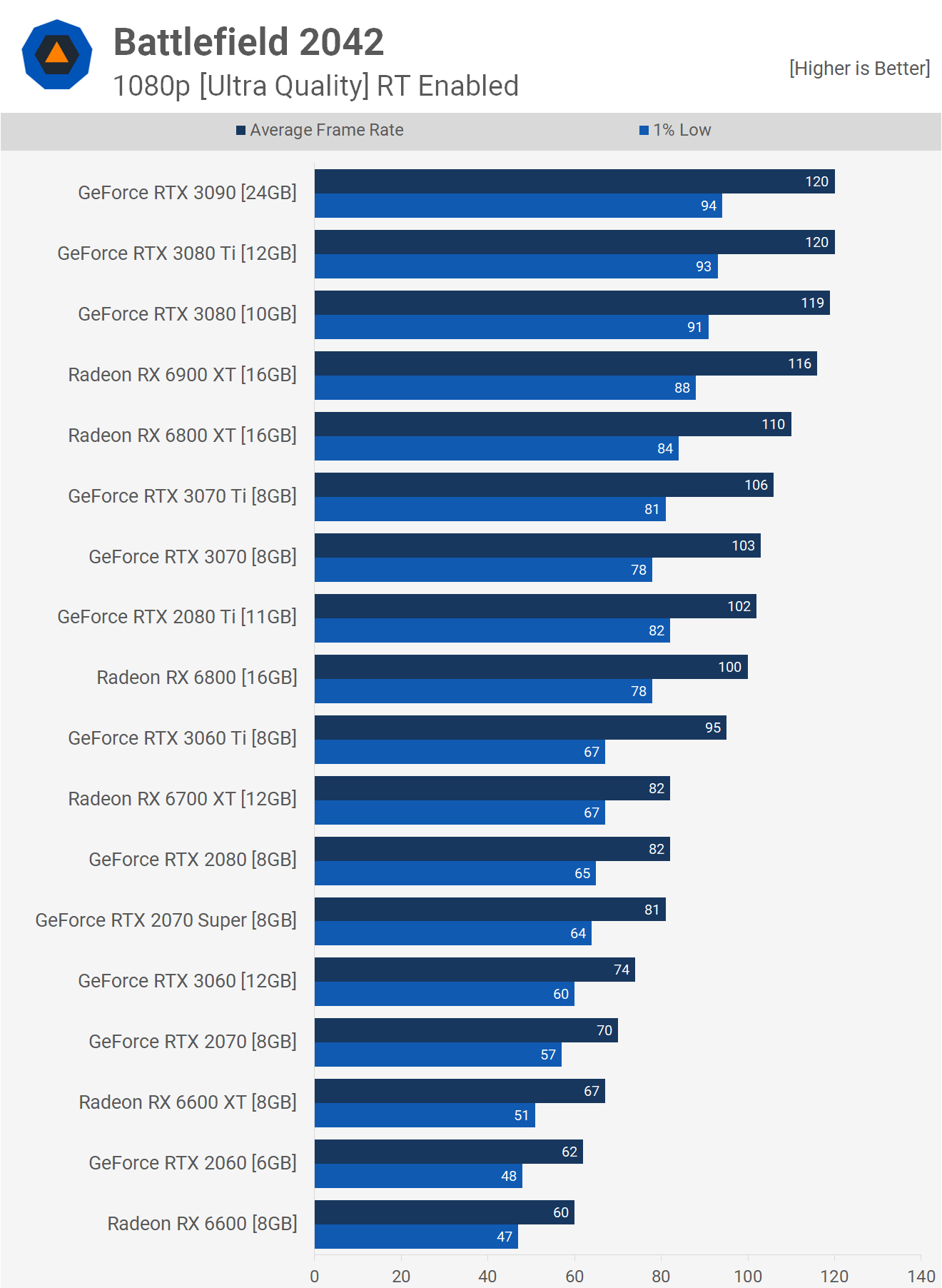

It's time to take a look at performance using the ultra quality preset with ray traced ambient occlusion enabled. In the case of the RTX 3080, we're looking at a 22% decline in performance at 1080p and a 19% decline for the RTX 3060. Then from AMD we're looking at a 26% performance hit for the 6800 XT and a 27% performance drop for the RX 6600.
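
Each ray tracing figure is a performance hit measured against the same card with RT off, at the same resolution and preset. A minimal sketch of that arithmetic follows; the function names are hypothetical, and the 100 fps baseline is purely illustrative rather than a measured result.

```python
def rt_performance_hit(raster_fps, rt_fps):
    """Percent of frame rate lost when enabling ray tracing, relative to RT off."""
    return (1.0 - rt_fps / raster_fps) * 100.0


def fps_with_rt(raster_fps, hit_percent):
    """Expected RT-on frame rate, given an RT-off result and a quoted hit."""
    return raster_fps * (1.0 - hit_percent / 100.0)


# Quoted 1080p ultra hits from the text; the 100 fps baseline is hypothetical.
quoted_hits = {"RTX 3080": 22, "RTX 3060": 19, "RX 6800 XT": 26, "RX 6600": 27}
for card, hit in quoted_hits.items():
    rt = fps_with_rt(100.0, hit)
    print(f"{card}: 100 fps with RT off -> {rt:.0f} fps with RT on "
          f"({rt_performance_hit(100.0, rt):.0f}% hit)")
```

A hit is not the same as the uplift from turning RT back off: a 22% loss means the RT-off result is about 28% higher than the RT-on result (1 / 0.78 ≈ 1.28).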

That's a slightly larger performance hit for AMD, as you'd expect; in fact, you might have expected a bigger drop-off for the Red Team. Anyway, at 1080p the game was still very playable with ray tracing enabled on any of the graphics cards tested. That said, I'd really only recommend enabling RT on higher-end models, though you might be fine with a 60 fps average; that's ultimately up to you to decide.

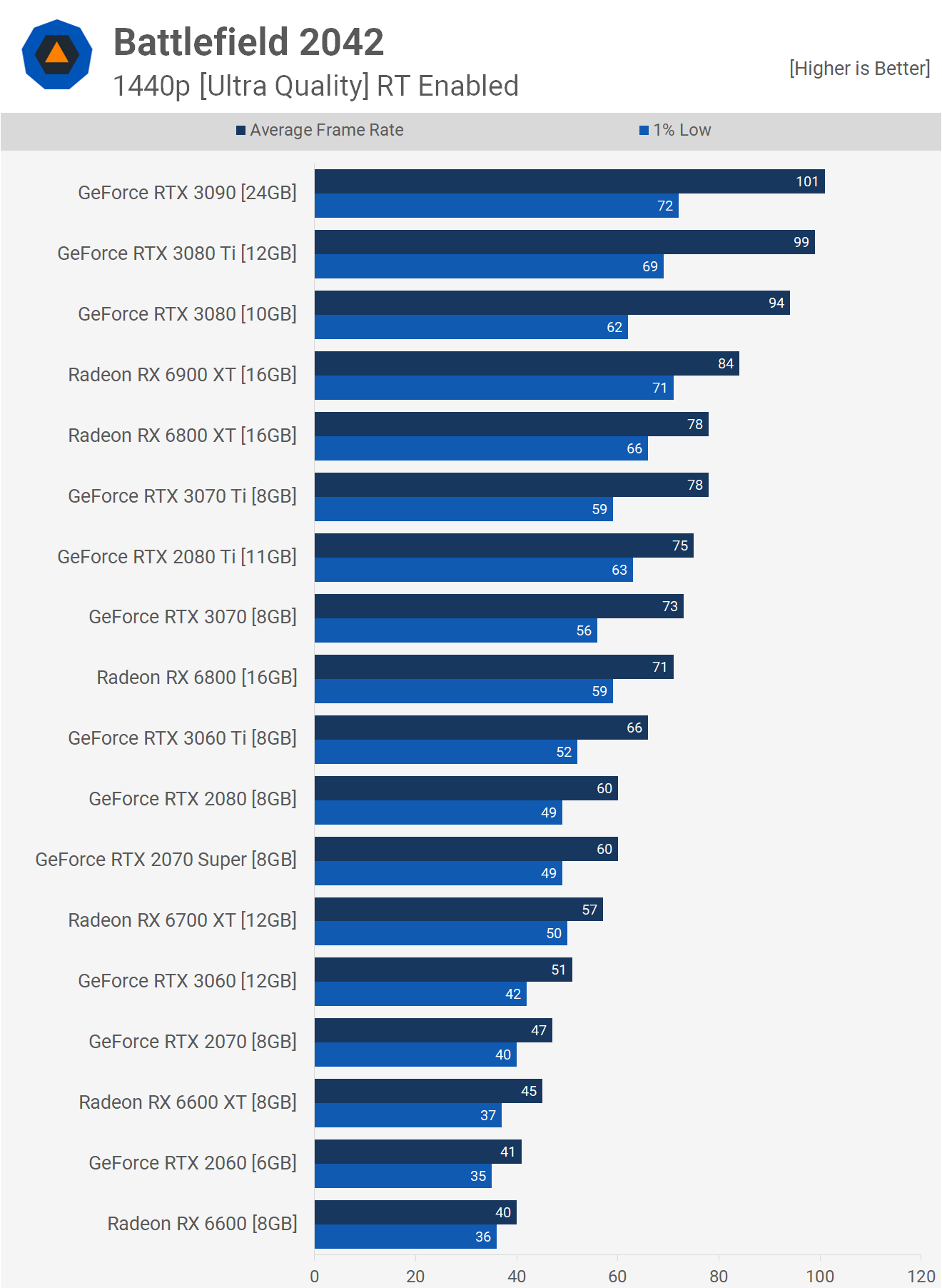

Now at 1440p, we're looking at a ~30% performance hit for Radeon GPUs with RT enabled and a 20% hit for GeForce GPUs. This meant the 6900 XT was now 17% slower than the RTX 3090, while the 6800 XT was also 17% slower than the RTX 3080. Those seeking 60 fps will get away with the RTX 2080 or 2070 Super, and from AMD you'll have to make do with the 6700 XT.

No surprises at 4K: you'll want the RTX 3090, 3080 Ti or 3080 for the best performance. You can sort of enjoy the game with the 6900 XT or 6800 XT, but the experience is much better with RT disabled.

Image Quality Comparison

Now that we know how a few dozen AMD and Nvidia GPUs perform in Battlefield 2042, the question is how much difference those tested quality settings make to the visuals. So let's take a look at that...

The difference between medium and ultra is substantial, though the changes won't always jump out at you. Essentially everything is improved: textures, lighting, post processing, vegetation, and so on. Depending on the scene, the differences may be evident enough to justify a 20-30% decrease in performance.

Then we have ray traced ambient occlusion, which does have quite a significant impact on visuals, though not always for the best, and the example in the video illustrates that well. The debris around the burnt out car looks better with ray tracing enabled as the greater emphasis on shading really jumps out at you, as objects gain depth.

That's the good stuff. The bad can be seen when looking at the floating debris, which has an unpleasant ghosting effect. It looks bad and completely breaks immersion. Surely they need to fix this.

Overall, ray tracing helps in making the game look more realistic, so if they can fix the dynamic particle issue, it would certainly be worth using. I do feel most Battlefield gamers will be favoring frame rates over visual quality though. Not only that, but the medium preset generally makes it easier to spot enemies, so while ultra with ray tracing looks amazing, it's not the "best" way to play the game, at least competitively.

How Does It Run?

That's our look at GPU performance in Battlefield 2042, and what a nightmare this game has been to test, but we think the data's been worth it (and hopefully the game, but that's up to you to decide).

For those of you targeting 1080p gaming, the good news is that just about anything works with the medium quality preset, assuming you have the CPU power to fully unleash the GPU. We'll soon look at CPU performance using a different test method.

Something along the lines of a GTX 1650 Super or Radeon RX 580 at 1080p using medium settings should do it. Then for those wanting to experience ultra, the GTX 1070 or Vega 56 will be required. Needless to say, all current-generation GPUs released so far work really well.

For 1440p medium settings, a GTX 1660 Ti or GTX 1080 will enable a 60 fps experience, as will Vega 56. But if you want to crank the visuals up here with the ultra preset, you'll want an RTX 2060 Super, RTX 3060 or 6600 XT / RX 5700.

On that note, AMD's previous-gen RDNA GPUs performed exceptionally well and it was good to see the 5700 XT hanging in there with the 2070 Super.

Overall, Battlefield 2042 looks very promising and is no doubt set to become a standard title in our benchmarks. For now, we're keen to start comparing AMD and Intel CPUs and to mess around with the Portal mode to see what testing options it offers. Fingers crossed it's working now.