In context: Given what a hot topic generative AI has been, particularly for cloud computing providers like Microsoft and Google, it has been a bit surprising not to hear more about the matter from Amazon. After all, their AWS cloud computing platform owns the number one market share position according to multiple firms that track those numbers.

It turns out Amazon and AWS did make several generative AI announcements back in mid-April, but they didn't generate as much buzz or initial interest as one might expect. Having had the chance to digest the news and observe where much of the attention on generative AI-related news and stories has been focused lately, I can now understand why that may have been the case.

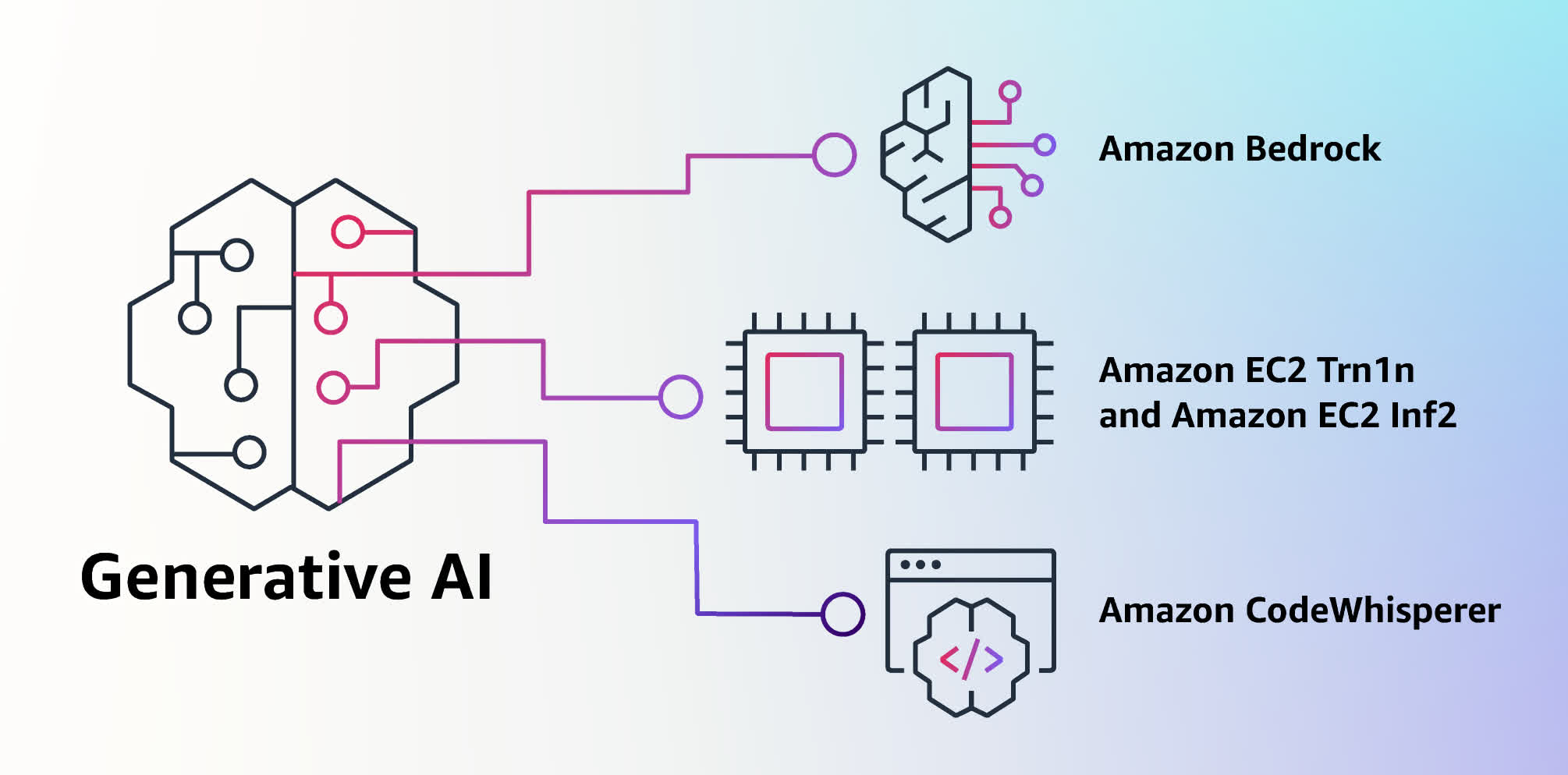

In short, the Amazon story around AI is simply more complex than most. Instead of having a single application or small set of applications built on generative AI models, like ChatGPT (which runs on a large language model, or LLM) or Stable Diffusion (an image generator), Amazon has launched an AWS service called Bedrock that's designed to let companies build applications using one of several different AI foundation models.

Some of those are actually LLMs that Amazon has created – called Titan – but they aren't available for general use just yet. Amazon also announced the general availability of a developer tool called CodeWhisperer, first previewed last year, which they said is based on a generative AI foundation model.

CodeWhisperer can automatically generate code from natural language input in one of 15 different programming languages, offering the closest conceptual comparison to similar tools from Microsoft and Google (among others). However, it is a tool strictly for developers (though it is free for individual use).

Despite these less news-friendly announcements from Amazon, the ideas behind Bedrock are intriguing. They suggest an evolving approach to how companies intend to offer and consume generative AI-focused products and services. First, Amazon made a point to focus on choice when it comes to the models that are being made available as part of the service. They initially plan to offer access to AI21 Labs' Jurassic-2 family of multi-lingual LLMs (for text generation, summarization, etc.), Anthropic's Claude conversational and text processing-focused LLM, and Stability AI's Stable Diffusion image generating AI tool, as well as their own Titan LLM and Titan embeddings models.

The embeddings model is noteworthy because instead of generating text, it's designed to generate numerical representations (called embeddings) that Amazon says "contain the semantic meaning of the text." Amazon further explained that it's using a version of its embeddings model to help power the product search engine on Amazon.com so that people can find what they're looking for without necessarily having to type in the correct word or phrase. Bedrock makes all of these models accessible to developers through open APIs.
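To make the idea of embeddings more concrete, here is a minimal sketch of how a search engine could rank products by meaning rather than by exact wording. Everything in it is an assumption made for illustration: the three-number vectors are hand-written stand-ins (real embedding models produce vectors with hundreds or thousands of dimensions), the catalog is invented, and no Bedrock or Titan API is called. It should not be read as how Amazon.com's search actually works.

```python
import math

# Hypothetical, hand-written embeddings for illustration only.
# A real embeddings model would generate these vectors from the product text.
CATALOG = {
    "running shoes": [0.9, 0.1, 0.0],
    "coffee maker": [0.0, 0.2, 0.9],
    "yoga mat": [0.6, 0.7, 0.1],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_vector, catalog, top_k=2):
    """Rank catalog entries by similarity to the query vector, best match first."""
    scored = [
        (cosine_similarity(query_vector, vector), name)
        for name, vector in catalog.items()
    ]
    scored.sort(reverse=True)
    return [name for _, name in scored[:top_k]]


# Assumed vector for a query like "sneakers for jogging", which shares
# no words with "running shoes" but sits close to it in the vector space.
QUERY = [0.85, 0.2, 0.05]
results = search(QUERY, CATALOG)
print(results)
```

The point of the sketch is that the query and the best match share no keywords; the ranking comes entirely from how close the vectors are, which is what lets a shopper find an item without typing the exact word or phrase.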

Bedrock demonstrates a growing interest in having more options for the core foundational models powering generative AI services. While most of these enormous models are known for being general-purpose tools – indeed, that's one of the key differences that transformer-based neural network architectures have enabled versus previous more highly specialized machine learning models – there's still a sense that different models could be better suited for different tasks.

Whether the market really demands this degree of choice or if organizations are satisfied with the simplicity of using a single general-purpose model for multiple applications remains to be seen. Common sense (and market dynamics) suggest increased choices are inevitable, but it's also very easy to imagine the options exploding out of control. Of course, a range of choices may be less important than offering access to the right choice, and Bedrock's initial lack of support for the OpenAI models behind the immensely popular ChatGPT could be an issue.

An even more intriguing capability in Bedrock is the ability to customize whichever model you choose to use for a given application with an organization's own data. Many organizations are bound to find this appealing for multiple reasons. First, as impressive as general-purpose generative AI tools may be, organizations regularly spend millions of dollars to create custom applications that fit their specific needs, and they're going to want that same level of customizability for their generative AI tools. Being able to train a model using a business's own documents and other information should also enable the generation of output that more closely matches the specific requirements of the organization.

Second, AWS does this customization by creating a copy of the main model and then training that private copy in a virtual private cloud within AWS, which keeps the training data isolated from the shared model. Third, because of how this works, queries or other input into the model are kept private (and encrypted) as well and are not used for further training of the main general-purpose model. As companies start to learn about the data privacy risks of general-purpose models, I'm convinced these capabilities will move from advanced features to base-level requirements, but for now, it's a nice advantage for AWS to tout.

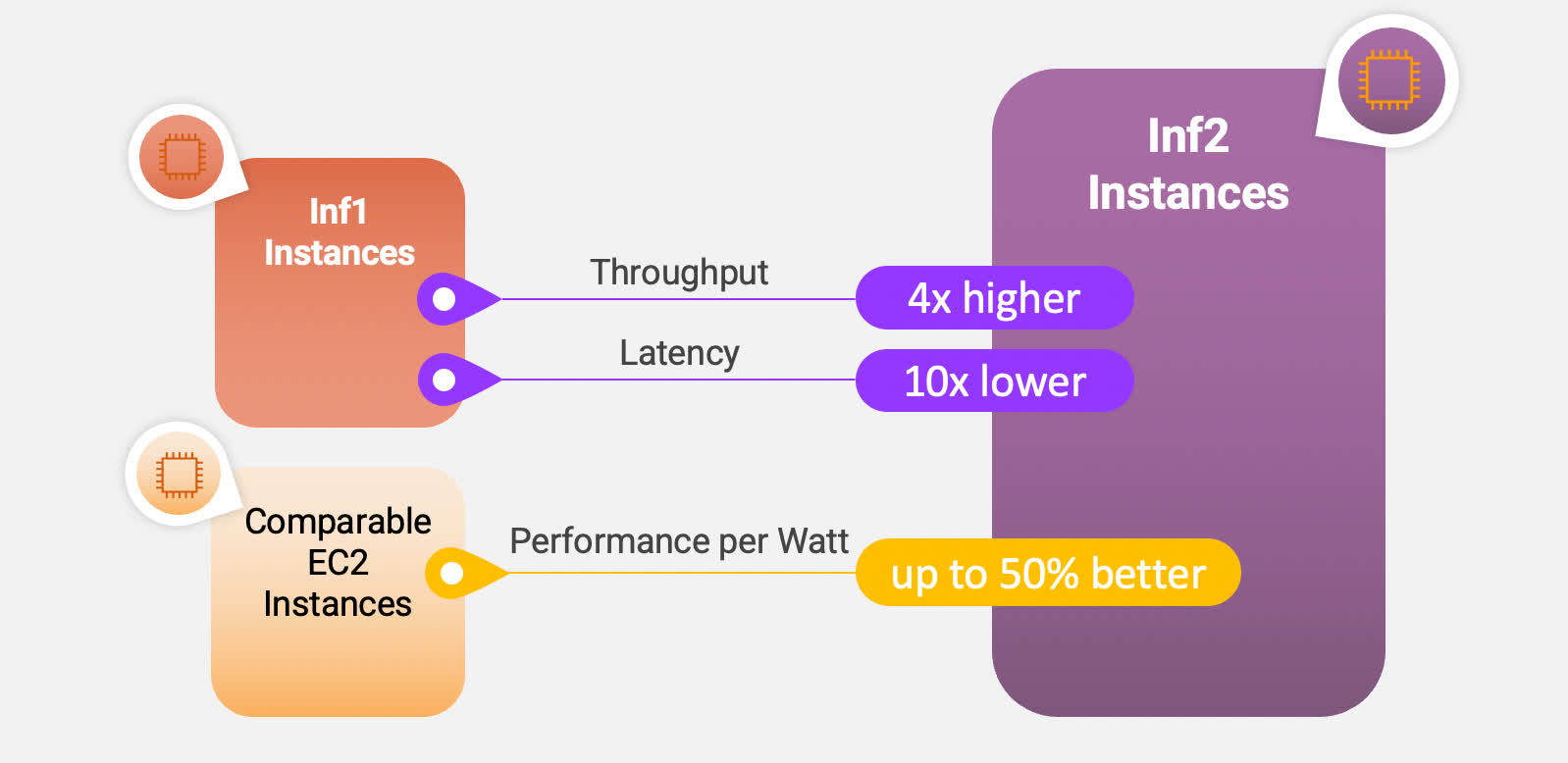

Key performance improvements with the new Inf2 instances

From a hardware perspective, AWS also announced some new compute instances (Inf2 and Trn1n) specifically designed for AI inference and model training workloads, respectively. Both are powered by the company's own silicon: the newly upgraded Inferentia2 chips for inference and the Trainium chips for training.

While it's obviously great for Amazon to showcase their own custom silicon, what's important about these chips is that they're focused on performing these challenging tasks at lower power levels. As many companies (and cloud providers) are quickly discovering, training and running these models require an enormous amount of computing and, therefore, electrical power – both of which directly translate to higher costs. I expect to see significant advancements in this area from all the major semiconductor players over the next few years, but it's good to see AWS taking important steps in this direction.

The final point to emphasize is that Amazon's generative AI strategy is clearly focused on developers, not business users or consumers, making the announcement more challenging to explain and harder for people to really appreciate. From a business perspective, however, it makes sense and positions the company well to continue serving as a platform where companies go to build applications, store their data, and leverage that data for new insights.

Ultimately, the story isn't as flashy as some of the developments we've started to see from other big tech players that directly concern consumers, but it's clear that Amazon intends to be a serious player in the burgeoning landscape of generative AI platforms, tools, and services for enterprises.

Bob O'Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on Twitter @bobodtech.

https://www.techspot.com/news/98565-amazon-debuts-bedrock-new-cloud-service-ai-generated.html