A hot potato: Nvidia has caught the most flak for continuing to sell mid-range $400+ graphics cards with just 8GB of VRAM, but AMD has persisted with the same approach in the budget-performance segment. Although independent benchmark data reveals the ongoing quality and performance sacrifices associated with smaller VRAM pools, Team Red continues to defend its lower-tier products with statements that, while technically accurate, obscure the true value propositions of modern GPUs.

AMD's Frank Azor recently defended the company's decision to sell an 8GB variant of the Radeon RX 9060 XT amid growing criticism of mid-range and mainstream graphics cards featuring limited VRAM. While Nvidia is the more frequent offender and AMD cards often offer more memory, the most affordable and popular products from both companies suffer from the same issue.

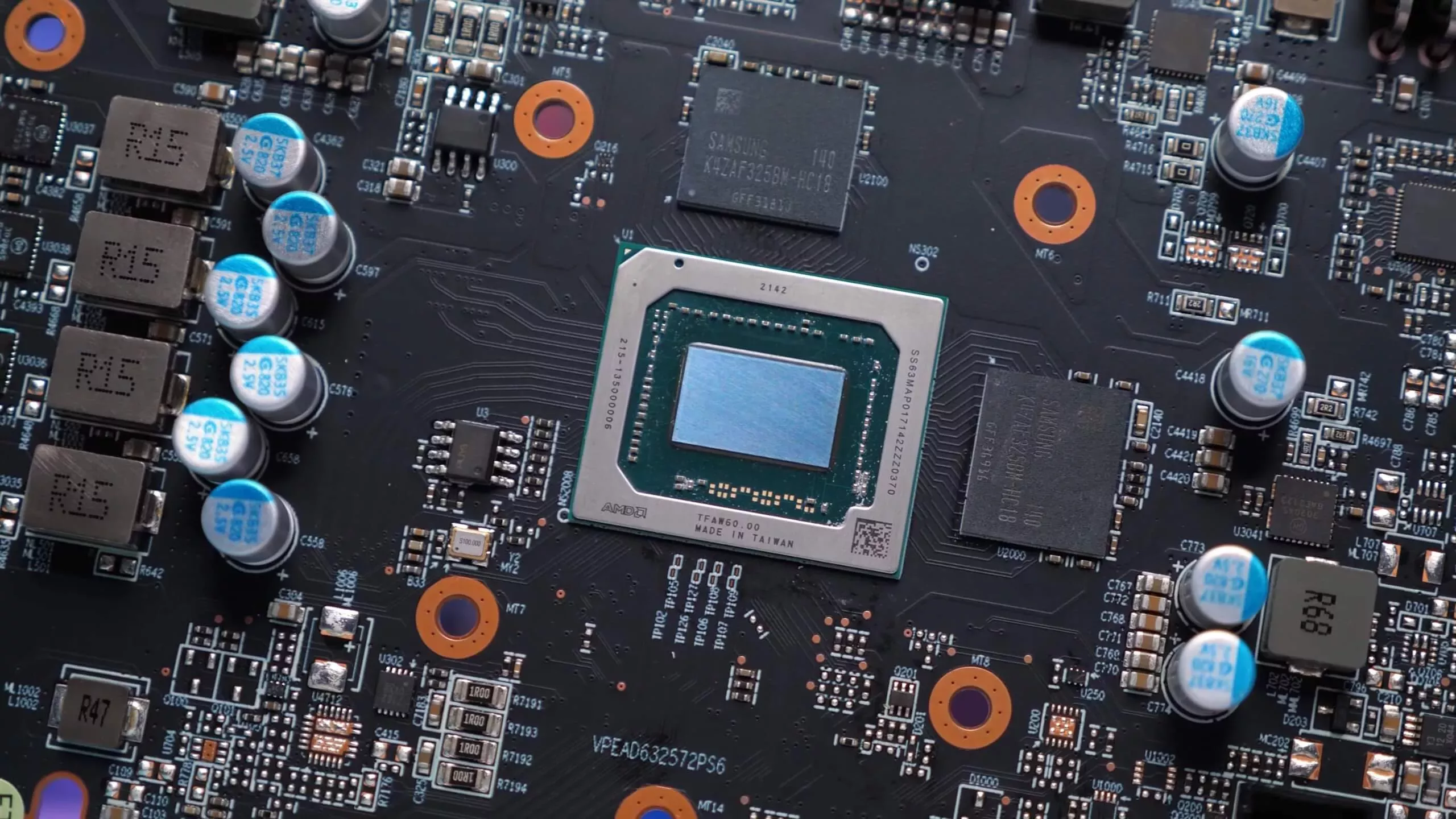

Following a Computex unveiling of the RX 9060 XT, which offers 8GB and 16GB configurations, Azor responded to a question regarding the cheaper version by claiming 8GB of VRAM is sufficient for 1080p, the most popular PC gaming resolution.

> Majority of gamers are still playing at 1080p and have no use for more than 8GB of memory. Most played games WW are mostly esports games. We wouldn't build it if there wasn't a market for it. If 8GB isn't right for you then there's 16GB. Same GPU, no compromise, just memory…
>
> – Frank Azor (@AzorFrank) May 22, 2025

Our reviews of similar GPUs like Nvidia's RTX 5060 Ti 8GB and 5060 reveal that, while Azor's claim is technically true, low VRAM significantly handicaps these cards compared to similar hardware with more memory.

While virtually any title in 2025 is playable with 8GB of VRAM at the right graphics settings, the size of the VRAM pool can still significantly impact the user experience. Comparing the 8GB and 16GB versions of the RTX 5060 Ti reveals that while average frame rates are often similar, running out of memory can dramatically worsen one-percent lows, leading to noticeable stuttering. Some games perform worse overall on the 8GB model, and others – like Indiana Jones and the Great Circle – crash at settings where the 16GB GPU runs smoothly.
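To see why similar averages can hide very different experiences, here is a minimal Python sketch of how the two metrics are commonly derived from frame times. It assumes one popular definition of one-percent lows (the average frame rate across the slowest 1% of frames); benchmarking tools differ on the exact method. The `fps_summary` helper and both data sets are hypothetical illustrations, not measurements from any card mentioned here.

```python
def fps_summary(frame_times_ms):
    """Return (average_fps, one_percent_low_fps) for frame times given in ms."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    # Average FPS: total frames divided by total elapsed seconds.
    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = len(frame_times_ms) / total_seconds

    # 1% low (assumed definition): average FPS over the slowest 1% of frames,
    # always using at least one frame.
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    worst_seconds = sum(slowest_first[:count]) / 1000.0
    one_percent_low_fps = count / worst_seconds

    return average_fps, one_percent_low_fps


# Hypothetical runs of 1,000 frames each (made-up numbers for illustration).
smooth = [10.0] * 1000                 # steady 10 ms frames
stutter = [9.6] * 990 + [50.0] * 10    # slightly faster frames plus ten 50 ms hitches

smooth_avg, smooth_low = fps_summary(smooth)
stutter_avg, stutter_low = fps_summary(stutter)

print(f"smooth:  avg {smooth_avg:.1f} fps, 1% low {smooth_low:.1f} fps")
print(f"stutter: avg {stutter_avg:.1f} fps, 1% low {stutter_low:.1f} fps")
```

In this made-up example, both runs average roughly 100 fps, but the one-percent low falls from 100 fps to 20 fps once ten long hitches are mixed in, which is the pattern that shows up as visible stutter.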

Warhammer 40,000: Space Marine 2 highlights a different issue. Both cards deliver nearly identical frame rates at ultra settings with the 4K texture pack enabled, but the 8GB version struggles to render high-resolution textures.

In another benchmark, the 8GB RTX 5060 Ti even falls behind Intel's Arc B580 – a 12GB card that costs over $100 less and targets a lower performance tier. These issues can arise even at 1080p, a resolution still used by 55% of surveyed Steam users.

Azor also noted that the mainstream GPU market is aimed largely at esports players, who likely represent the largest segment of users. Steam's most popular games – esports titles like Counter-Strike 2, Marvel Rivals, Dota 2, and Apex Legends – can still achieve high frame rates on 8GB cards.

However, as GPUs become more expensive, many users are turning to budget hardware to play demanding AAA titles, which benchmarks show these cards can handle surprisingly well. With sufficient VRAM and upscaling technology, mainstream GPUs are perfectly capable of 4K gaming.

Nvidia's approach to reviews of 8GB GPUs also suggests manufacturers are aware of the shortcomings. The company withheld 8GB RTX 5060 Ti review units from independent outlets and restricted access to RTX 5060 drivers for reviewers unwilling to benchmark the card under favorable conditions.

One such condition was enabling quadruple frame generation, a feature that distorts raw performance metrics and consumes additional VRAM. Meanwhile, ray tracing – a feature Nvidia frequently markets as a core benefit – is notably memory-intensive.

AMD's first 8GB card, the Radeon RX 480, launched nearly nine years ago at $229. It's remarkable that manufacturers still sell GPUs with the same VRAM capacity for over $300. Outside of esports, these products are likely to age poorly, especially if next-generation consoles, which are expected to feature more than 20GB of memory, launch within these cards' life spans.

AMD defends 8GB VRAM on GPUs... by admitting they are primarily for esports