The big picture: Recent rumors about upcoming graphics cards have stoked fears of rising energy consumption. Nvidia and AMD haven't fully taken the shroud off the GPUs they expect to launch later this year, but AMD did reveal some worrying numbers in its roadmap this week.

VentureBeat's interview with AMD senior vice president Sam Naffziger included visuals showing the company's predictions for near-future hardware. While Naffziger expressed confidence that AMD can meet its energy-efficiency goals over the next few years, AMD's numbers paint a concerning picture of rising power demands.

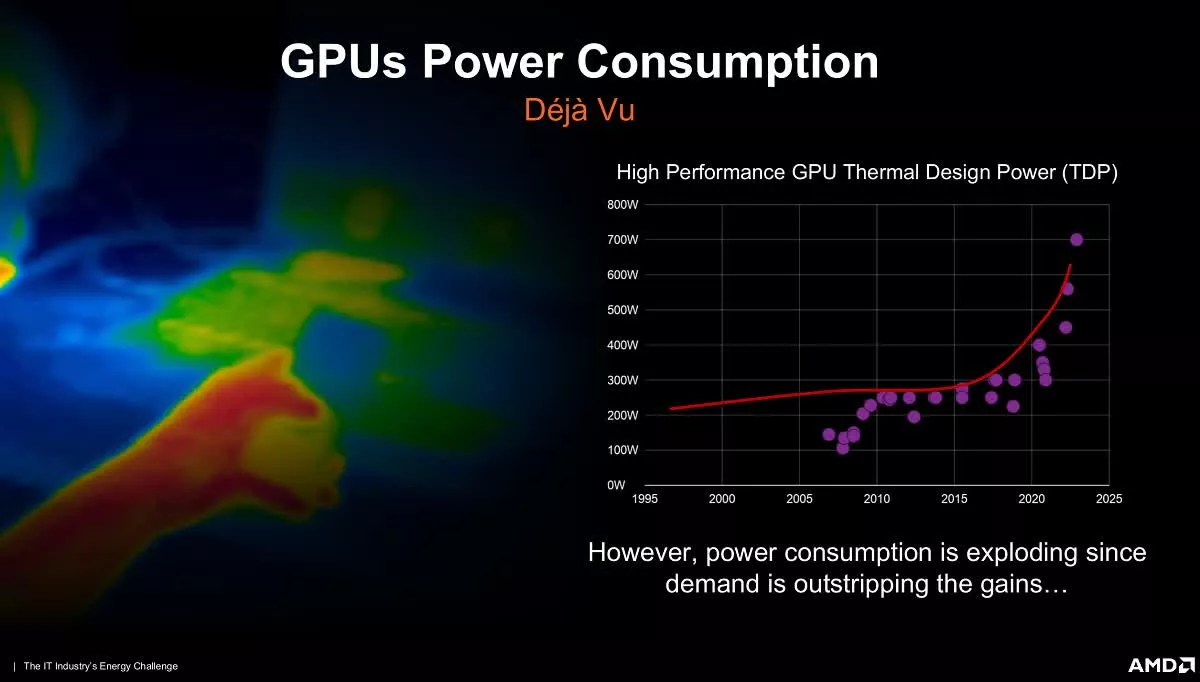

As engineers everywhere bump into the limits of Moore's Law, consumers worry that GPU TDPs could grow to alarming levels. Rumors suggest that Nvidia's next-generation flagship, the RTX 4090, which might launch late this year, could need as much as 600W. The company's current top card, the RTX 3090 Ti, draws 450W.

If we don't see 600W cards in the upcoming generation, AMD's chart suggests we'll see them in the following generation or soon after. It shows a dramatic increase in power consumption beginning around 2018, with GPUs hitting 600W and then 700W before 2025. However, the chart doesn't indicate whether it accounts only for top-end hardware. The most popular cards on the market today consume a fraction of that power, but even future mainstream GPUs will need more of it.

Naffziger said AMD can deliver the performance gains consumers expect from new graphics cards while tackling the power consumption problem. The company has been promoting Infinity Cache as a unique advantage, and Naffziger also highlighted chiplet design as an area where AMD leads Nvidia.

https://www.techspot.com/news/95274-amd-predicts-600-700w-gpus-2025.html