Mr Majestyk

So with so many governments around the world passing climate change policies and going after energy, and with climate change in the news every damn day, a company thinks it is okay to just increase power draw. And in Europe they are talking about rolling blackouts in the fall because they have an energy crisis.

How wonderful is capitalism when you only have one or two companies with no incentive to do better, yes no incentive, since they face no risk of bankruptcy or a major drop in market share.

Why should I get the 4090? I should keep the 3090, or buy a cheaper used 3090, and just increase the clock and call it a 4090.

The way GPUs and CPUs are going nowadays, with only a 10% to 15% gain every year, more power draw, and cards becoming space heaters, I’m done, yes done, buying or building computers.

A computer in year 2030 will not be two or three times faster than a computer in year 2020.

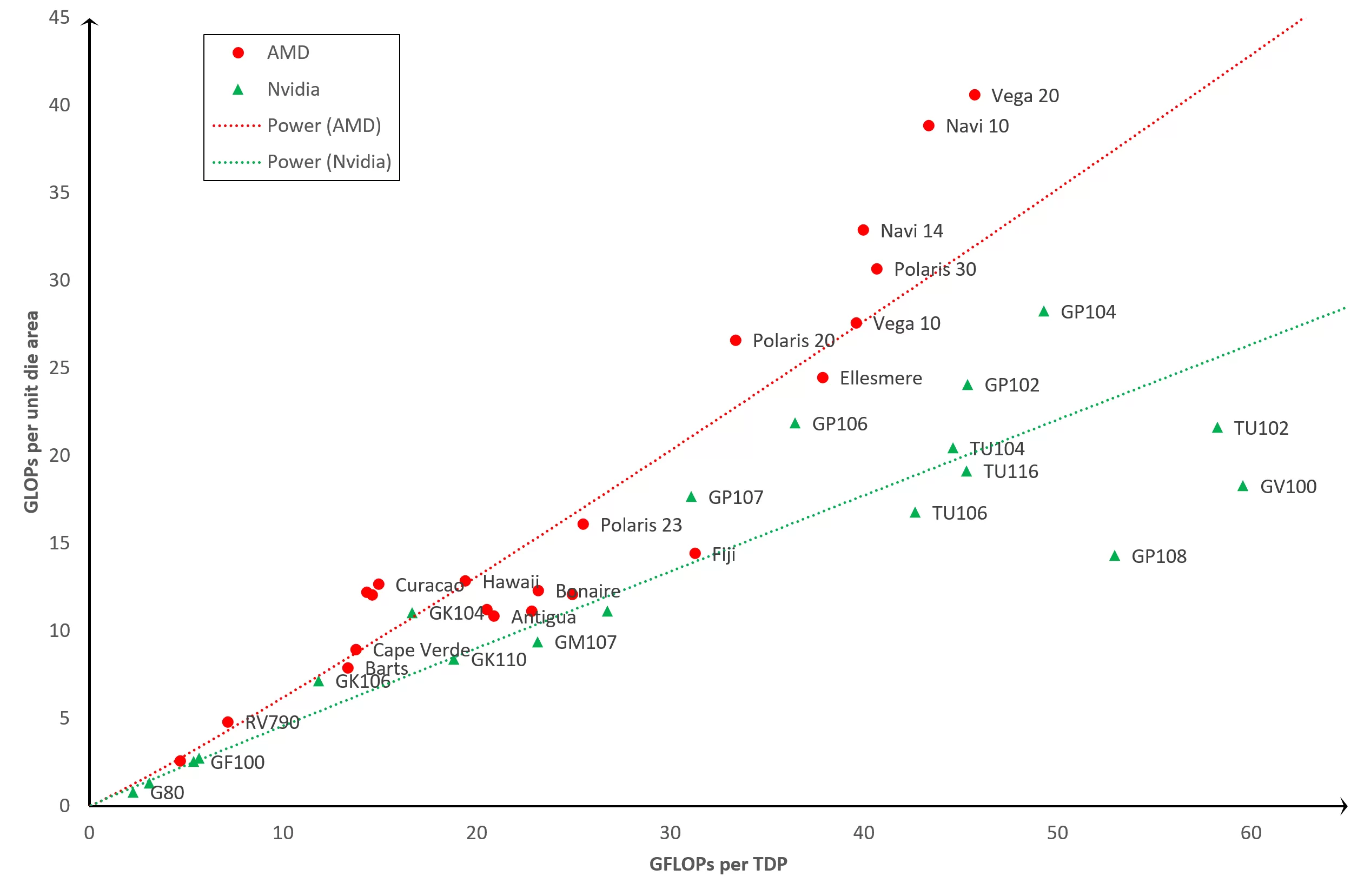

For GPUs it is way more than 15%, and we already know that this next gen it's an 80-110% increase on the top cards. RDNA2 and Ampere weren't 15% either. CPUs are also gaining more than 15% now, and the gap will increase by 2025. Both AMD and Intel are claiming ~75% by 2025, which means 30%+ gen-on-gen.
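To show where the 30%+ comes from, here is a rough sketch of the compounding. The number of generations before 2025 is my assumption (two), and `per_gen_gain` is just a made-up helper name, not anything AMD or Intel published.

```python
def per_gen_gain(total_gain: float, generations: int) -> float:
    """Per-generation gain that compounds to total_gain over `generations` steps."""
    return (1.0 + total_gain) ** (1.0 / generations) - 1.0


# Assumption: the claimed ~75% total uplift is spread over two generations.
gain = per_gen_gain(0.75, 2)
print(f"{gain:.1%} per generation")
```

With three generations instead of two, the same total works out to a smaller per-gen figure, so the 30%+ depends on that assumption.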

You do realise you don't have to run a next gen card at full power. A 50% increase in performance per watt means you can stay at the same power as you currently have and average 50% more fps. Say a 6700XT can run the game you want, with the settings you want, at 60fps in 1440p. Then with a 7700XT you can run it at 90fps for the same power, rather than 120fps for 33% more power. Obviously it's hard to set up direct comparisons with architectural changes etc, but the point stands.
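The arithmetic behind those numbers, as a minimal sketch. It assumes fps scales linearly with power at a fixed efficiency, which real cards don't do exactly, and both function names are hypothetical helpers for illustration only.

```python
def fps_at_power(base_fps: float, perf_per_watt_gain: float,
                 power_ratio: float = 1.0) -> float:
    """fps on the new card at power_ratio times the old card's power.

    Assumption: fps scales linearly with power (a simplification).
    """
    return base_fps * (1.0 + perf_per_watt_gain) * power_ratio


def power_ratio_for_fps(base_fps: float, perf_per_watt_gain: float,
                        target_fps: float) -> float:
    """Power needed, relative to the old card, to reach target_fps."""
    return target_fps / (base_fps * (1.0 + perf_per_watt_gain))


# Old card: 60 fps. New card: 50% better performance per watt.
same_power_fps = fps_at_power(60, 0.5)        # same power as before
extra = power_ratio_for_fps(60, 0.5, 120) - 1  # extra power for 120 fps
print(f"{same_power_fps:.0f} fps at the same power")
print(f"{extra:.0%} more power for 120 fps")
```

In practice the curve flattens at the top end, so the last few fps cost more power than this linear model suggests, which only strengthens the case for running the card below its limit.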