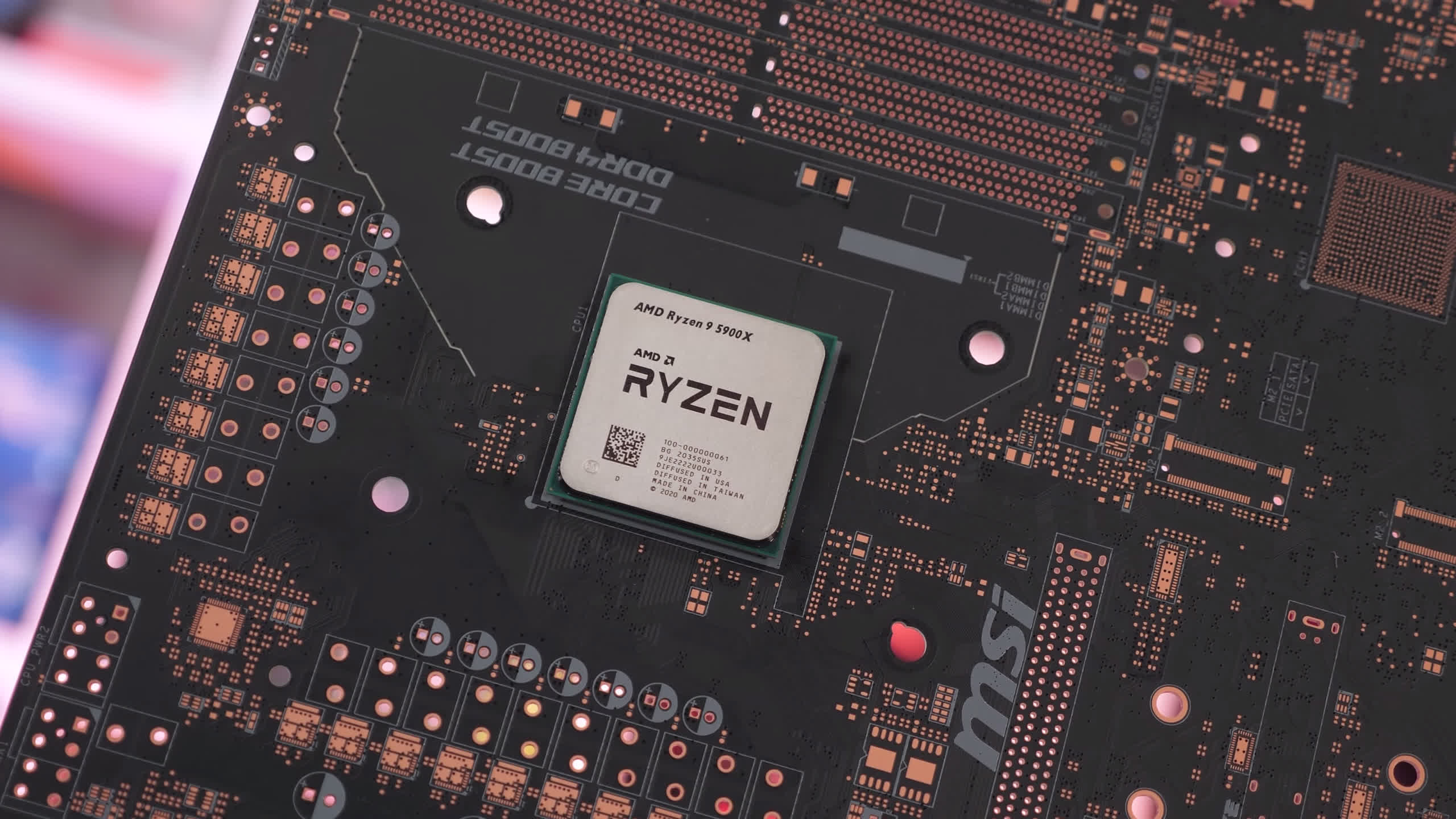

Why it matters: A handful of new AMD processors are due for release soon, rounding out the rollout of the Zen 3 architecture. Included are the Ryzen 7 5800 and Ryzen 9 5900, low-power models based on existing hardware, and the Ryzen 7 5700G, an octa-core APU.

A reliable leaker has posted the key specifications of the Ryzen 7 5800 and Ryzen 9 5900. The two CPUs will target the same 65 W TDP that previous non-X and non-XT models did, down roughly two-fifths from the 105 W granted to the 5800X and 5900X. At 4.6 GHz and 4.7 GHz, respectively, their boost clocks are 100 MHz lower than those of the 5800X and 5900X.
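To put those figures in proportion, here is a minimal sketch of the arithmetic. The helper name `percent_drop` is purely illustrative, and the X-model boost clocks of 4.7 GHz and 4.8 GHz are inferred from the 100 MHz gap stated above rather than quoted from the leak.

```python
def percent_drop(old: float, new: float) -> float:
    """Return the percentage reduction going from `old` to `new`."""
    return (old - new) / old * 100


# TDP: 105 W on the X models versus 65 W on the non-X models.
print(f"TDP reduction: {percent_drop(105, 65):.1f}%")          # 38.1%

# Boost clocks in GHz; the X-model values are inferred from the 100 MHz gap.
print(f"5800 boost reduction: {percent_drop(4.7, 4.6):.1f}%")  # 2.1%
print(f"5900 boost reduction: {percent_drop(4.8, 4.7):.1f}%")  # 2.1%
```

In other words, the power budget falls by about 38% while the peak boost clock gives up only about 2%, which is why base clocks and sustained boost duration are where the non-X parts would be expected to lose more ground.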

It's also expected that the vanilla models will have lower base clocks and will sustain their maximum clocks for shorter periods. That kind of downgrade is usually counterbalanced by a sizable discount, though pricing may be irrelevant for the 5800 and 5900; rumor has it they'll be exclusive to OEMs, at least at first.

Meanwhile, in China, a leaker's screenshots of a Ryzen 7 5700G have exposed the APU's specifications and performance bracket. In short, it's a slightly crippled 5800X octa-core processor paired with Vega graphics.

A shot of a 100-1000000263 engineering sample. It's being referred to as a 5700G in accordance with previous generations' naming schemes, but it doesn’t have a confirmed name.

CPU-Z shows that the processor belongs to the Cezanne family, which also includes some of the upcoming mobile APUs. The 5700G uses a fully equipped Cezanne chip, containing 8 cores and 16 threads built on TSMC's 7 nm node. The software registers a 4.4 GHz clock speed.

In the CPU-Z benchmark, the 5700G scored 613.6 points in the single-threaded test and 6292.2 points in the multi-threaded test, making it about 5% slower than the 5800X. Part of the difference can be explained by the lower clock speed, and part by the smaller cache: while the 5800X contains a pricey 32 MB L3 cache, the 5700G has just 16 MB of L3 to work with.

Nevertheless, that's an incredible showing for an APU. It's only a shame that the leaker chose not to test the integrated GPU, too. They did, however, experiment with overclocking, and reportedly achieved a stable 4.7 GHz that put performance almost on par with the 5800X.

As with the 5800 and 5900, there's a good chance that the 5700G will be exclusive to OEMs. Given how unconventional the chip is, that would be a disappointing outcome. If any of the above are coming to shelves, they'll probably be announced on Tuesday during the AMD CES 2021 keynote.

https://www.techspot.com/news/88224-amd-ryzen-7-5700g-apu-leaks-alongside-5900.html