The big picture: AMD has finally revealed the specifications, pricing, and performance details of the Radeon RX 9070 and Radeon RX 9070 XT graphics cards, with full reviews expected in the coming days. While we wait for those, we've already covered the potential performance implications and FSR 4 upscaling. Now we want to add some context on the improved encoding quality of the new Radeons, an often overlooked aspect of new GPUs (including by us).

Update (Mar 5): TechSpot's Radeon RX 9070 XT review is now live.

AMD's GPU encoders have long been criticized for poor video quality at the formats and bitrates commonly used for game streaming, leaving Nvidia as the clear choice for anyone who relies on hardware video encoding.

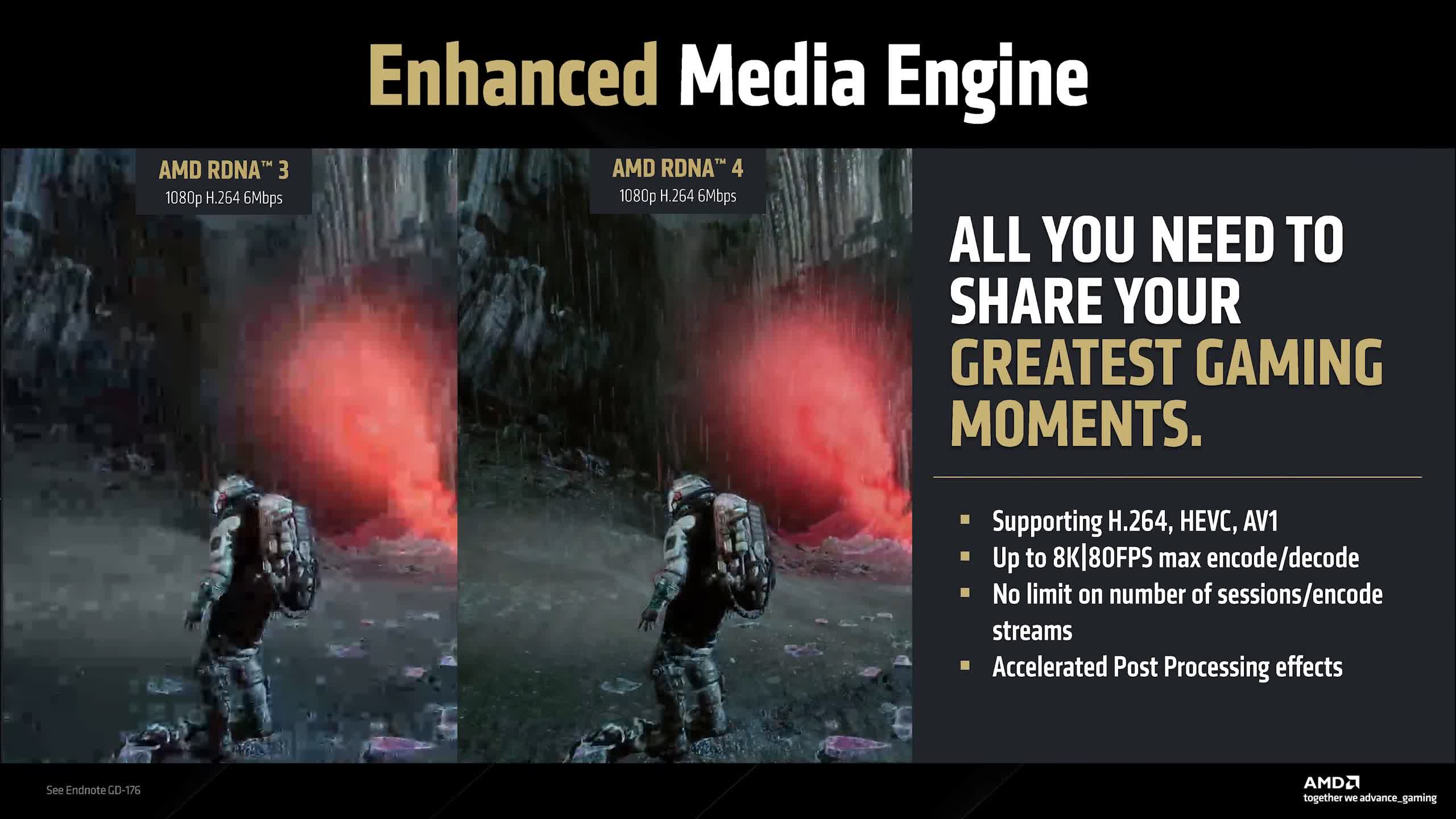

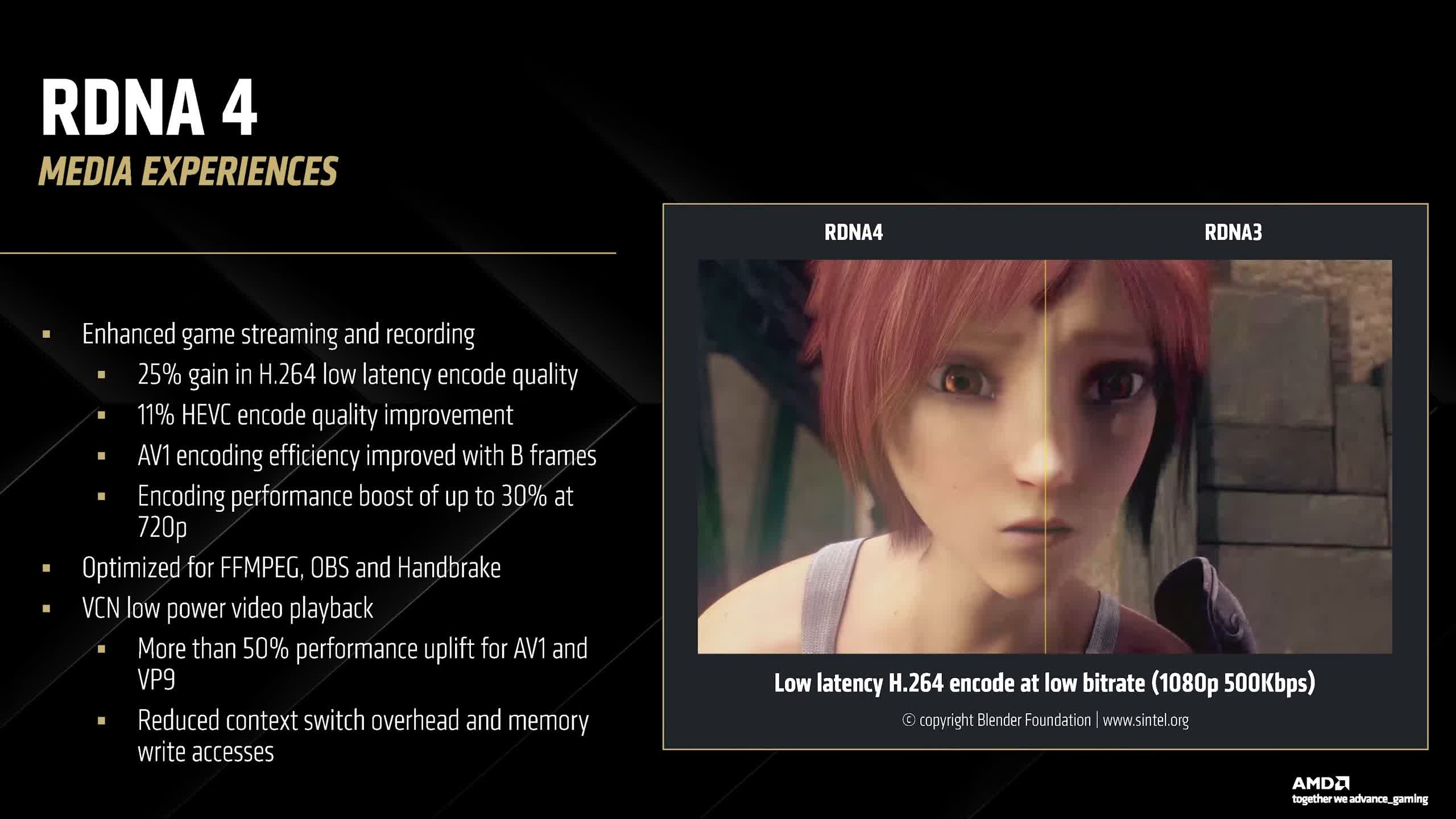

With RDNA 4, AMD claims encoding quality is significantly improved, and the examples they showcased were certainly attention-grabbing. AMD is specifically highlighting 1080p H.264 and HEVC at 6 megabits per second – one of the most commonly used setups – demonstrating a substantial increase in visual quality.
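To see why 6 Mbps at 1080p is a demanding target, it helps to work out the bit budget per frame and per pixel. The sketch below assumes a 60 fps stream (the frame rate is our assumption, not something AMD specified) and a constant bitrate; the function names are purely illustrative.

```python
# Average bit budget for a constant-bitrate video stream.
# Assumption: 60 fps; AMD's example only specifies 1080p at 6 Mbps.

def frame_budget_bits(bitrate_bps: float, fps: float) -> float:
    """Average number of bits available to encode one frame."""
    return bitrate_bps / fps


def bits_per_pixel(bitrate_bps: float, width: int, height: int, fps: float) -> float:
    """Average number of bits available per pixel, per frame."""
    return frame_budget_bits(bitrate_bps, fps) / (width * height)


if __name__ == "__main__":
    bitrate = 6_000_000  # 6 Mbps
    per_frame = frame_budget_bits(bitrate, 60)
    bpp = bits_per_pixel(bitrate, 1920, 1080, 60)
    print(f"{per_frame / 8 / 1000:.1f} KB per frame")  # 12.5 KB per frame
    print(f"{bpp:.3f} bits per pixel")  # 0.048 bits per pixel
```

For comparison, uncompressed 8-bit 4:2:0 video uses 12 bits per pixel, so the encoder has to compress by roughly 250:1, which is where differences in encoder quality become most visible.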

Whether this will hold true across a broad variety of scenarios remains to be seen, but historically, AMD's discussions on encoding quality have revolved around supporting new formats like AV1. With RDNA 4, AMD is focusing on tangible improvements in real-world use cases, suggesting they are far more confident in their encoder's quality.

AMD is touting a 25% gain in H.264 low-latency encode quality, an 11% improvement in HEVC, better AV1 encoding with B-frame support, and a 30% boost in encoding performance at 720p. The quality figures likely refer to VMAF scores.

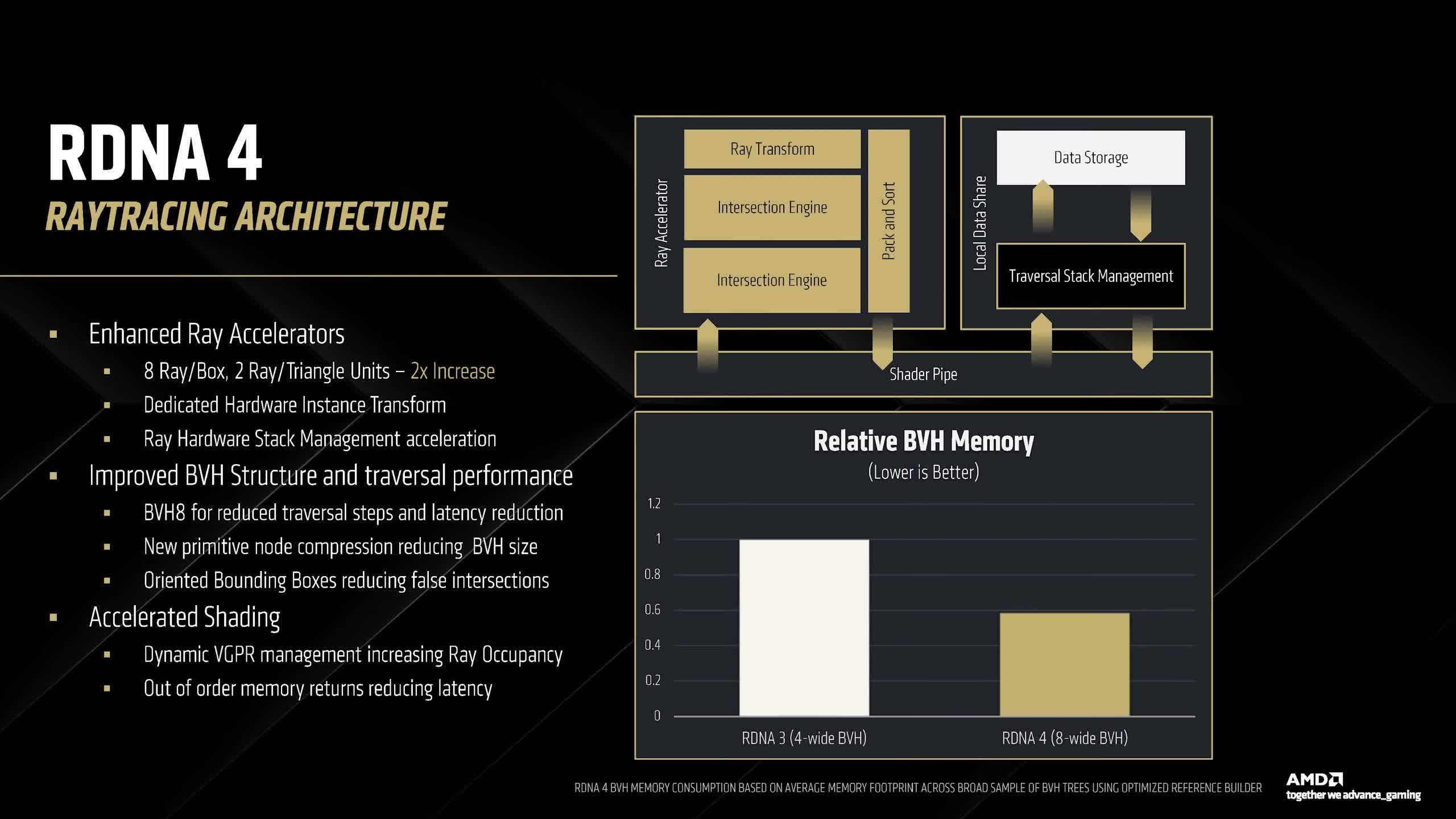

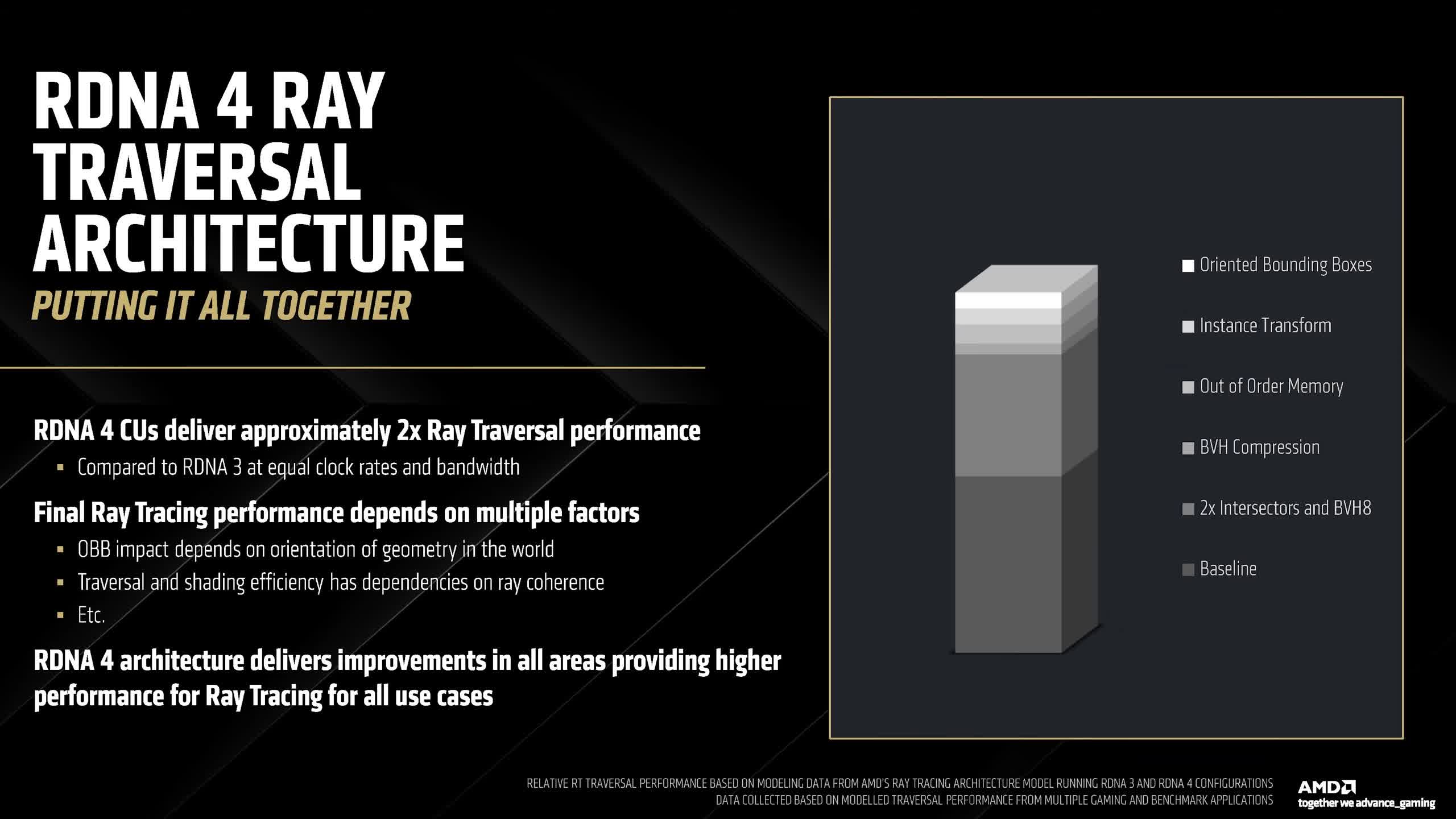

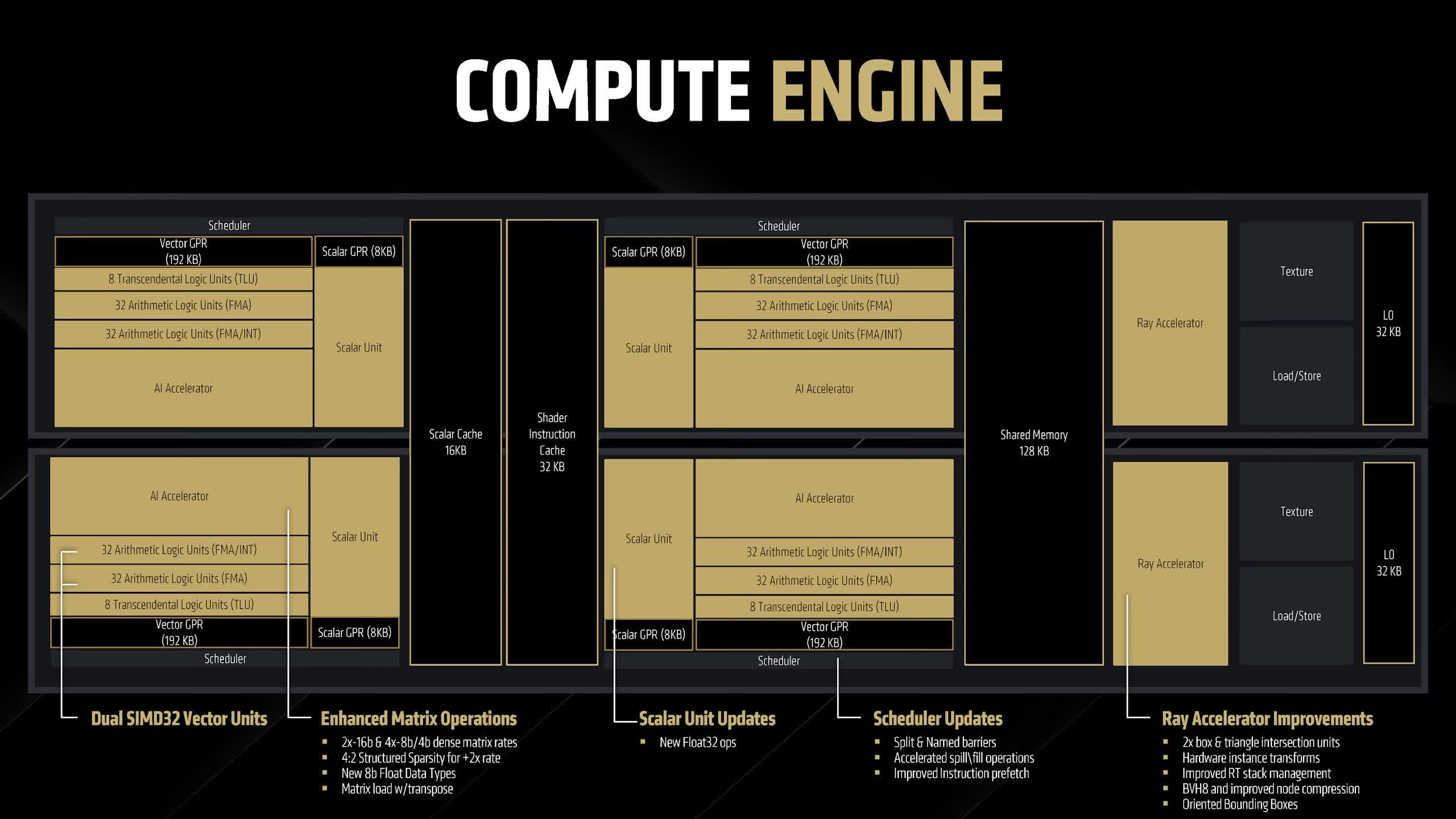

Beyond encoding, there are several other notable improvements. The ray tracing core now features two intersection engines instead of one, doubling throughput for ray-box and ray-triangle intersections. A new ray transform block has been introduced, offloading certain aspects of ray tracing from shaders to the RT core.
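For readers unfamiliar with what an intersection engine actually computes, here is the textbook "slab" test for a ray against an axis-aligned bounding box, the kind of check performed at every BVH node. This is a generic software sketch, not AMD's hardware implementation, and it assumes every direction component is non-zero to keep things short.

```python
from typing import Sequence

Vec3 = Sequence[float]


def ray_hits_box(origin: Vec3, direction: Vec3, box_min: Vec3, box_max: Vec3) -> bool:
    """Slab test: does the ray origin + t * direction (t >= 0) hit the box?

    Assumes every direction component is non-zero (no divide-by-zero handling).
    """
    t_near, t_far = 0.0, float("inf")
    for axis in range(3):
        inv = 1.0 / direction[axis]
        # Distances along the ray where it enters and leaves this axis's slab
        t1 = (box_min[axis] - origin[axis]) * inv
        t2 = (box_max[axis] - origin[axis]) * inv
        # Keep only the overlap of all slab intervals seen so far
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
        if t_near > t_far:
            return False  # intervals don't overlap: the ray misses the box
    return True


if __name__ == "__main__":
    print(ray_hits_box((0, 0, 0), (1, 1, 1), (1, 1, 1), (2, 2, 2)))  # True
    print(ray_hits_box((0, 0, 0), (1, 1, 1), (1, 5, 1), (2, 6, 2)))  # False
```

A ray-triangle test (such as Möller-Trumbore) is the other half of the workload; doubling the number of engines lets more of these tests run per clock.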

The BVH (Bounding Volume Hierarchy) is now twice as wide, and numerous other enhancements have been made to the ray tracing implementation – one reason why RDNA 4's ray tracing gains exceed its rasterization improvements.
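A rough way to see what a wider BVH buys is to count tree levels. The sketch below assumes, for illustration, a move from 4-wide to 8-wide nodes and an idealized, perfectly balanced tree; real BVHs built by drivers are neither perfectly balanced nor uniform, so treat the numbers as a back-of-the-envelope estimate.

```python
def bvh_levels(num_primitives: int, width: int) -> int:
    """Levels of internal nodes in an idealized, perfectly balanced BVH.

    Each level groups up to `width` children under one parent, so the
    node count shrinks by a factor of `width` per level.
    """
    levels, nodes = 0, num_primitives
    while nodes > 1:
        nodes = -(-nodes // width)  # ceiling division
        levels += 1
    return levels


if __name__ == "__main__":
    for width in (4, 8):
        print(width, bvh_levels(1_000_000, width))
    # 4 10
    # 8 7
```

Fewer levels means fewer traversal steps per ray, but each node visit now requires testing more child boxes, which is plausibly why the wider BVH pairs with extra intersection throughput.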

Compute, Memory, and Display Enhancements

The compute engine also receives several optimizations, and the cards use a PCIe 5.0 x16 interface and a 256-bit GDDR6 memory bus. AMD claims enhanced memory compression, and the GPUs are equipped with 16GB of VRAM, which should be sufficient for most modern games.
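Peak bandwidth for both interfaces follows from simple arithmetic. The sketch below assumes 20 Gbps GDDR6 (the memory speed isn't stated here) and uses PCIe 5.0's 32 GT/s per-lane rate with 128b/130b encoding; both results are theoretical peaks before protocol overhead.

```python
def gddr_bandwidth_gbs(bus_width_bits: int, data_rate_gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s: bus width x per-pin rate / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps_per_pin / 8


def pcie_bandwidth_gbs(gt_per_s: float, lanes: int) -> float:
    """Approximate one-direction PCIe bandwidth in GB/s (128b/130b encoding)."""
    return gt_per_s * lanes * (128 / 130) / 8


if __name__ == "__main__":
    # Assumption: 20 Gbps GDDR6 on a 256-bit bus.
    print(f"{gddr_bandwidth_gbs(256, 20):.0f} GB/s")  # 640 GB/s
    # PCIe 5.0 runs at 32 GT/s per lane; x16 link.
    print(f"{pcie_bandwidth_gbs(32, 16):.1f} GB/s")  # 63.0 GB/s
```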

The display engine, however, is a mixed bag. While it supports DisplayPort 2.1, its capabilities remain unchanged from RDNA 3, with a maximum bandwidth of UHBR 13.5 instead of the full UHBR 20 now used on some 4K 240Hz displays and supported by Nvidia's Blackwell architecture.
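The practical difference between UHBR 13.5 and UHBR 20 shows up when you compare link payload against what a 4K 240Hz signal needs. This sketch assumes a four-lane link with DisplayPort 2.x's 128b/132b channel coding and 10-bit RGB (30 bits per pixel), and it ignores blanking intervals, so the real requirement is somewhat higher than shown.

```python
def dp_payload_gbps(lane_rate_gbps: float, lanes: int = 4) -> float:
    """DisplayPort 2.x payload rate after 128b/132b channel coding."""
    return lane_rate_gbps * lanes * 128 / 132


def video_gbps(width: int, height: int, refresh_hz: int, bits_per_pixel: int) -> float:
    """Uncompressed active-pixel data rate in Gbps (ignores blanking intervals)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


if __name__ == "__main__":
    need = video_gbps(3840, 2160, 240, 30)  # 4K 240Hz, 10-bit RGB
    for name, rate in (("UHBR 13.5", 13.5), ("UHBR 20", 20.0)):
        have = dp_payload_gbps(rate)
        print(f"{name}: {have:.1f} Gbps available, {need:.1f} needed, fits={need <= have}")
```

Without compression, the signal fits within UHBR 20 but not UHBR 13.5; in practice, Display Stream Compression (DSC) is typically used to drive such modes over lower-bandwidth links.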

HDMI 2.1b is also included. On a positive note, AMD claims lower idle power consumption for multi-monitor setups, and video frame scheduling can now be offloaded to the GPU.

The Navi 48 die is 357mm² of TSMC 4nm silicon, featuring 53.9 billion transistors. This makes it 5% smaller than Nvidia's Blackwell GB203 used in the RTX 5080 and 5070 Ti, yet it contains 18% more transistors, meaning the design is more densely packed.
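The density comparison is easy to reproduce. The Navi 48 figures come from the article; the GB203 figures (45.6 billion transistors, 378mm²) are an assumption taken from Nvidia's published specifications rather than this article.

```python
def density_mtr_per_mm2(transistors_billion: float, area_mm2: float) -> float:
    """Transistor density in millions of transistors per mm²."""
    return transistors_billion * 1000 / area_mm2


if __name__ == "__main__":
    navi48 = (53.9, 357)  # billions of transistors, mm² (from the article)
    gb203 = (45.6, 378)   # assumption: Nvidia's published GB203 figures
    print(f"Navi 48: {density_mtr_per_mm2(*navi48):.0f} MTr/mm²")  # 151 MTr/mm²
    print(f"GB203:   {density_mtr_per_mm2(*gb203):.0f} MTr/mm²")   # 121 MTr/mm²
    print(f"Area:        {1 - navi48[1] / gb203[1]:.1%} smaller")
    print(f"Transistors: {navi48[0] / gb203[0] - 1:.1%} more")
```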

However, Nvidia still holds the edge in performance per transistor and per unit of die area: based on AMD's own RX 9070 XT claims, the RTX 5080 should be about 15% faster in rasterization and possibly over 50% faster in ray tracing, all while using fewer transistors and a smaller die. That said, the RTX 5080 is rated at 360W TDP compared to 304W for the 9070 XT, about 18% higher, though actual power consumption in games may vary. The 9070 XT's closer counterpart is the RTX 5070 Ti, with its 300W TDP.
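Combining those ratios gives a rough perf-per-watt comparison. This is only a sketch: it uses rated TDP rather than measured power, and the performance ratios are the projections above (with "over 50%" treated as exactly 50%), not benchmark results.

```python
def relative_perf_per_watt(rel_perf: float, rel_power: float) -> float:
    """Perf-per-watt of card A relative to card B, from A/B performance and power ratios."""
    return rel_perf / rel_power


if __name__ == "__main__":
    power_ratio = 360 / 304  # RTX 5080 TDP vs RX 9070 XT TDP
    print(f"TDP difference: {power_ratio - 1:.0%}")  # 18%
    for workload, perf_ratio in (("raster", 1.15), ("ray tracing", 1.50)):
        ratio = relative_perf_per_watt(perf_ratio, power_ratio)
        print(f"{workload}: RTX 5080 perf/W = {ratio:.2f}x RX 9070 XT")
```

On these assumptions, the two cards land close in rasterization efficiency (about 0.97x), while the RTX 5080 would lead in ray tracing efficiency (about 1.27x).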

A couple of additional details to round things out: AMD is not producing reference models for the RX 9070 XT or RX 9070, meaning all designs will come from board partners. These partners include ASUS, Gigabyte, PowerColor, Sapphire, XFX, and other familiar names.

Availability is expected to be strong on March 6th, as these cards have reportedly been ready since early January.

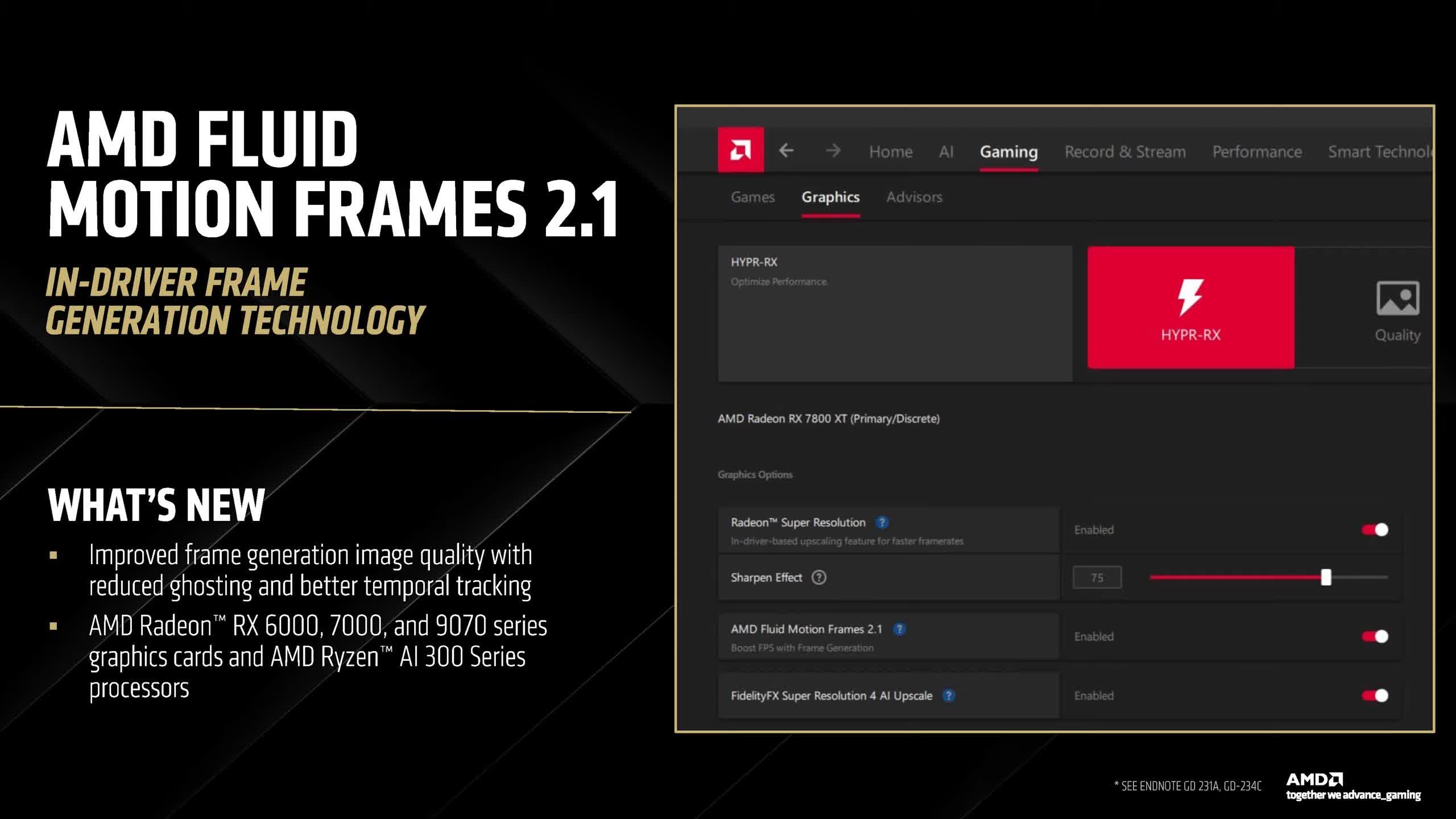

Additionally, AMD is releasing a new version of its driver-based frame generation technology, AFMF 2.1, which will be available for the Radeon RX 6000 series and newer GPUs.

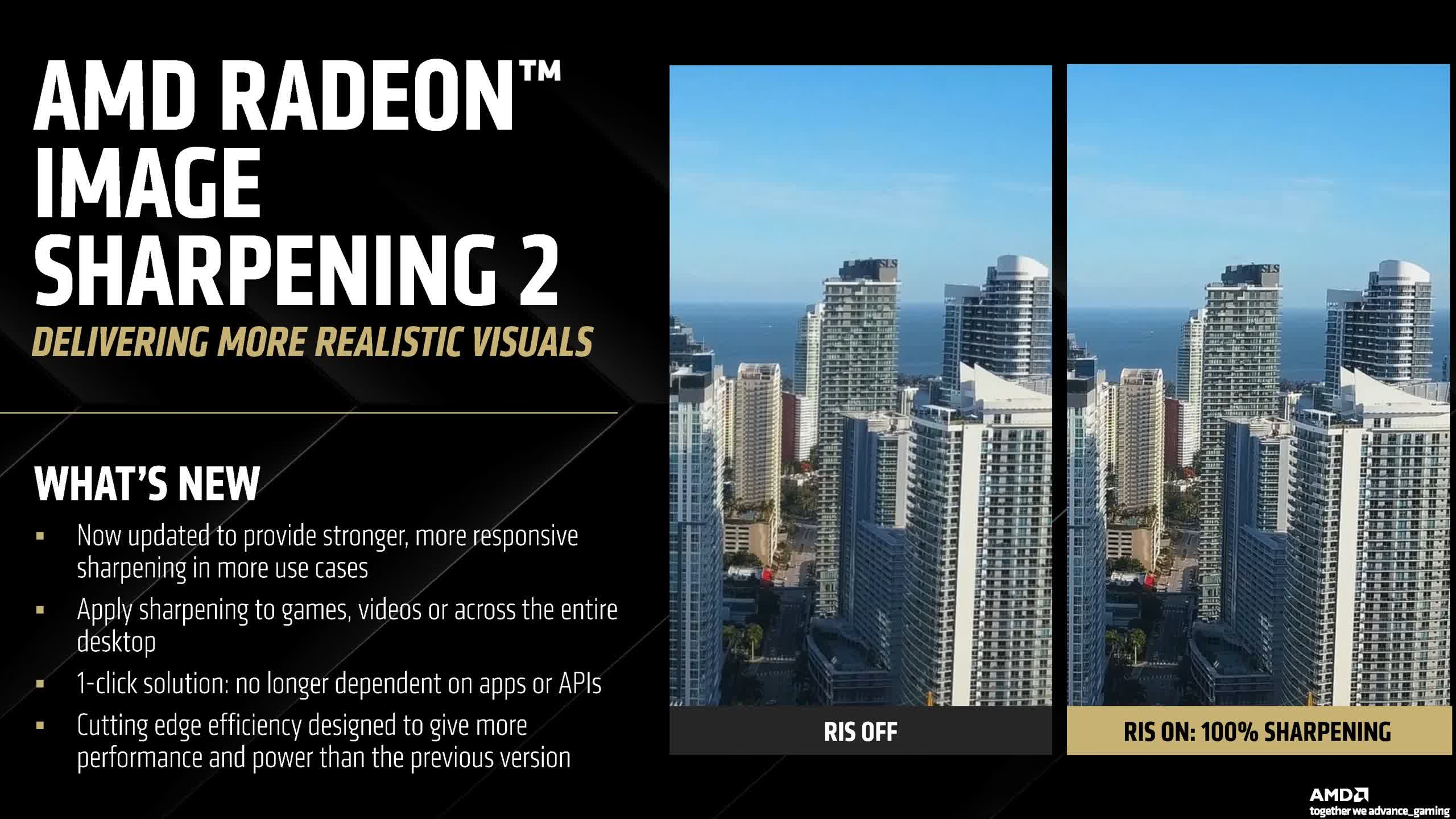

This version promises superior image quality and includes Radeon Image Sharpening, which can be applied to any game, video, or application at the driver level. AMD also claims improved quality for this feature. There are also some AI-related features, though nothing particularly groundbreaking.

AMD's RDNA 4 GPUs bring major encoding and ray tracing upgrades