Something to look forward to: Nvidia's Deep Learning Super Sampling (DLSS) 2.0 offers some fantastic results for those who like to play games at high resolutions with high-quality settings without sacrificing a huge amount of performance, and it could soon make titles look even prettier with the addition of a new "Ultra Quality" mode.

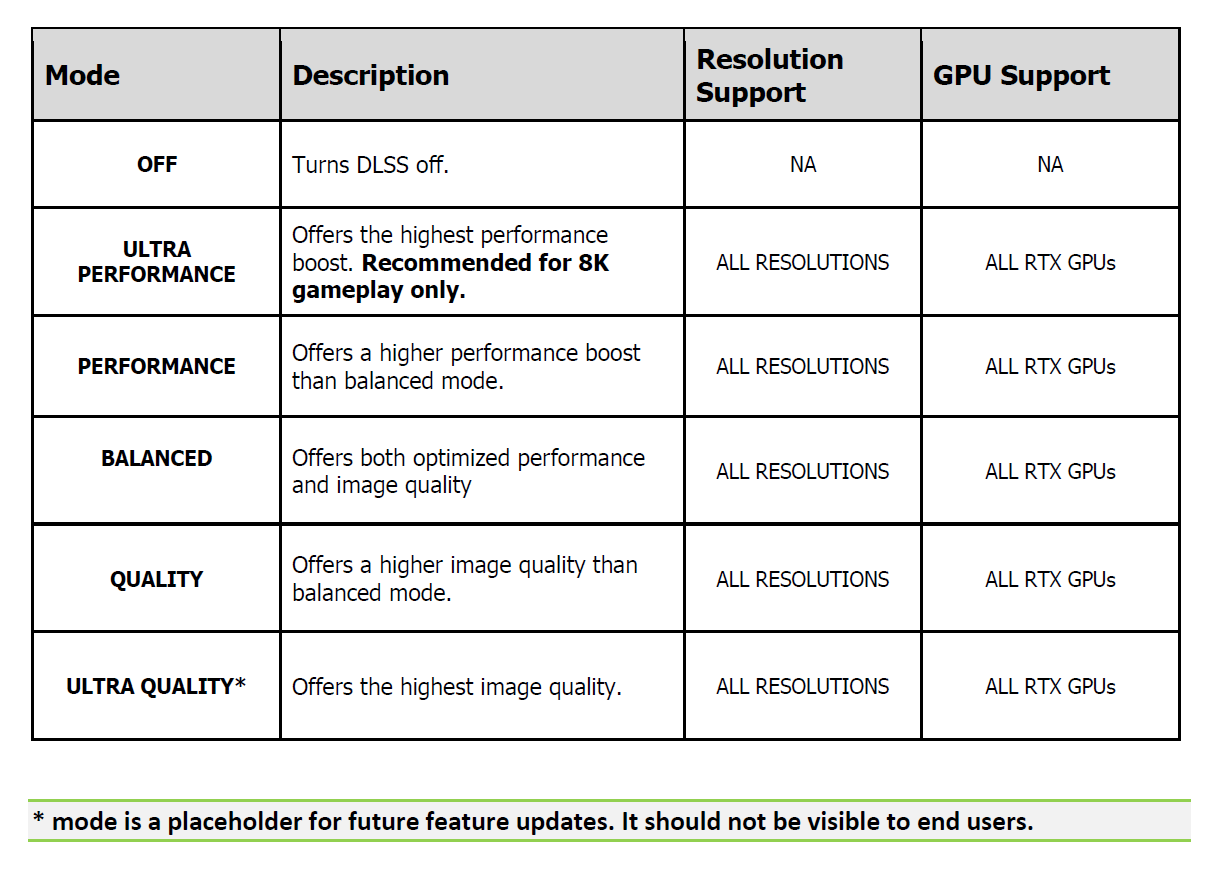

DLSS currently has four presets: Ultra Performance, Performance, Balanced, and Quality, each one altering the balance between picture fidelity and a game's performance. But Redditor u/Reinhardovich has spotted what appears to be a new Ultra Quality preset.

The new option is listed as a placeholder in Unreal Engine 5's DLSS documentation PDF. The preset comes with a note that reads: "mode is a placeholder for feature updates. It should not be visible to end users," suggesting it's still in development.

Currently, the DLSS 2.0 setting that offers the best image is Quality, which renders a game at roughly 66.7% of the native resolution on each axis, a 1.5x upscale. The new Ultra Quality setting could be Nvidia's response to AMD's FidelityFX Super Resolution (FSR). Team Red's DLSS rival already has an Ultra Quality mode that renders games at around 77% of the native resolution per axis, roughly a 1.3x upscale, so Nvidia may follow suit with its own UQ option.
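To put those scale factors in concrete terms, here is a short Python sketch that converts them into internal render resolutions. It assumes, as is usual for these presets, that the percentages apply to each axis, so the share of pixels actually rendered is the square of the per-axis percentage. The preset table and function names are illustrative only, not part of any Nvidia or AMD SDK, and real implementations may round differently.

```python
from typing import Tuple

# Hypothetical preset table: per-axis upscale factors taken from the figures above.
PRESETS = {
    "DLSS Quality": 1.5,       # ~66.7% of native resolution per axis
    "FSR Ultra Quality": 1.3,  # ~77% of native resolution per axis
}


def render_resolution(out_w: int, out_h: int, scale: float) -> Tuple[int, int]:
    """Internal render size for a per-axis upscale factor, rounded to the nearest pixel."""
    return round(out_w / scale), round(out_h / scale)


def pixel_fraction(scale: float) -> float:
    """Share of native pixels actually rendered; the factor applies to both axes."""
    return 1.0 / (scale * scale)


if __name__ == "__main__":
    # Assumed example: a 3840x2160 (4K) output resolution.
    for name, scale in PRESETS.items():
        w, h = render_resolution(3840, 2160, scale)
        print(f"{name}: {w}x{h} ({pixel_fraction(scale):.0%} of native pixels)")
```

At a 4K output this works out to 2560x1440 for DLSS Quality (about 44% of native pixels) versus 2954x1662 for FSR Ultra Quality (about 59%), so a 1.3x mode starts from roughly a third more rendered pixels than a 1.5x one.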

The latest version of DLSS is 2.2.6.6, which is supported by games including Rainbow Six Siege and Lego Builder's Journey. However, Alexander Battaglia from Digital Foundry notes that you can modify Doom Eternal to use the new DLSS version 2.2.9 found in the Unreal Engine 5 plugin. He shared a comparison on Twitter showing the differences between the versions, though the Ultra Quality mode in 2.2.9 isn't available to users just yet.

Specifically 2.2.6 - not 2.2.9 interestingly enough :) https://t.co/Uy6brIn1oz pic.twitter.com/BV8M1JmIeX

— Alexander Battaglia (@Dachsjaeger) June 30, 2021

It'll certainly be interesting to see how FSR compares directly to DLSS 2.0, though we're still waiting for a game that supports both technologies. Check out Hardware Unboxed's analysis of AMD's FidelityFX Super Resolution in the video below.

https://www.techspot.com/news/90281-ultra-quality-mode-coming-nvidia-dlss-20.html