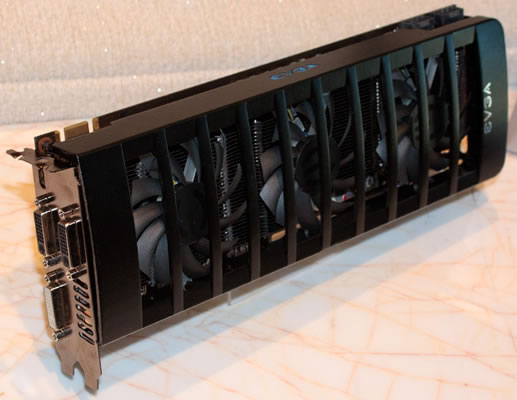

Nvidia-exclusive add-in card maker EVGA used this year's CES to show off some of its new and upcoming products. Among them is one that is bound to give AMD a run for its money: a new dual-GPU board that will presumably debut as part of the GeForce 500 family. The upcoming card has one SLI connector for quad-SLI setups, a dual-slot cooler with three fans, two 8-pin PCIe power connectors, and three DVI outputs.

EVGA shared few specifications. Based on the card's apparent power and cooling requirements, Tech Report speculates that it may house a pair of GF110 GPUs clocked somewhat lower than on the GTX 580, but Hexus claims to have confirmed that it will be based on two GF104 chips (the GPU found on the GTX 460). Hexus also reports that the card will carry 2GB of memory, support three-monitor Surround Vision gaming out of the box, and cost less than a flagship GTX 580.

We'll have to wait for official confirmation, but if Hexus is on the money, this isn't the card expected to go up against AMD's upcoming dual-GPU Radeon HD 6990 (Antilles). Still, we know what a pair of GeForce GTX 460 cards in SLI is capable of, so if EVGA gets the pricing right, this could be a real winner.

https://www.techspot.com/news/41879-evga-teases-dual-gpu-geforce.html