A hot potato: Facebook is continuing to deny a recent report that claims it knows Instagram is toxic to teenage girls. It says the assessment of its internal research was "not accurate" and that the majority of girls in this age group found Instagram either made their wellbeing issues better or had no impact.

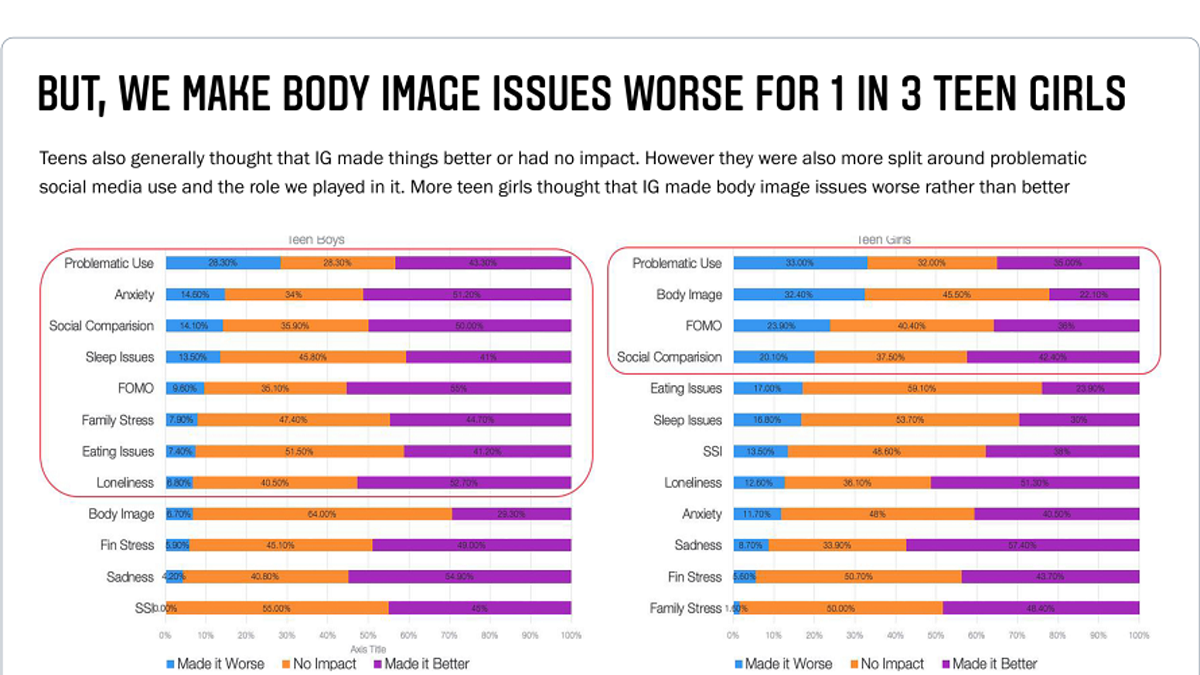

Earlier this month, the Wall Street Journal published a damning report that claimed Facebook was aware of just how damaging Instagram can be to teenagers. According to the social media giant's own internal research, the photo- and video-sharing app makes body image issues worse for one in three teen girls. "Thirty-two per cent of teen girls said that when they felt bad about their bodies, Instagram made them feel worse," researchers said in a 2019 presentation.

"Teens blame Instagram for increases in the rate of anxiety and depression. This reaction was unprompted and consistent across all groups."

Facebook denied the accuracy of the report, saying it focused on a limited set of findings and cast them in a negative light. Now, Pratiti Raychoudhury, Vice President, Head of Research at Facebook, has published a new response.

According to Raychoudhury, Facebook's research shows that on 11 of 12 wellbeing issues, including loneliness, anxiety, sadness, and eating disorders, teenagers said that Instagram made those issues better rather than worse. Body image was the only issue Instagram worsened.

"While the headline in the internal slide does not explicitly state it, the research shows one in three of those teenage girls who told us they were experiencing body image issues reported that using Instagram made them feel worse — not one in three of all teenage girls," writes Raychoudhury.

Raychoudhury also notes that some of the research relied on input from only 40 teens and was designed to inform internal conversations about teens' most negative perceptions of Instagram.

This is all just Facebook's PR machine in motion, of course. Numerous studies over the last few years have shown just how damaging social media can be to our mental wellbeing and the ways it can exacerbate existing issues. It also seems strange that Facebook isn't releasing the full internal report that the WSJ piece is based on, which would surely settle the debate.

Facebook recently rolled out the ability to hide the Like count on both Facebook and Instagram after experimenting with the option for several years. It's hoped that the move will ease the pressure on people, particularly younger users, who equate social media popularity with self-worth.

Facebook's response comes ahead of global head of safety Antigone Davis' expected appearance before the Senate Commerce Subcommittee to answer questions regarding the WSJ report and the company's plans for an Instagram for under 13s. "The Wall Street Journal's reporting reveals Facebook's leadership to be focused on a growth-at-all-costs mindset that valued profits over the health and lives of children and teens," said lawmakers.

https://www.techspot.com/news/91438-facebook-hits-back-report-calling-instagram-toxic-teens.html