Facepalm: This week, the administrator that handles HDMI licensing confirmed to TFT Central that the HDMI 2.1 label no longer strictly requires features like 4K at 120Hz, dynamic HDR, or variable refresh rate (VRR). Shoppers may want to read spec sheets more carefully from now on.

Monitor-focused tech site TFT Central noticed a Xiaomi monitor listing “HDMI 2.1*” in its specs even though it is only a 1080p (240Hz) display. The fine print explains that its bandwidth only matches the HDMI 2.0 specification.

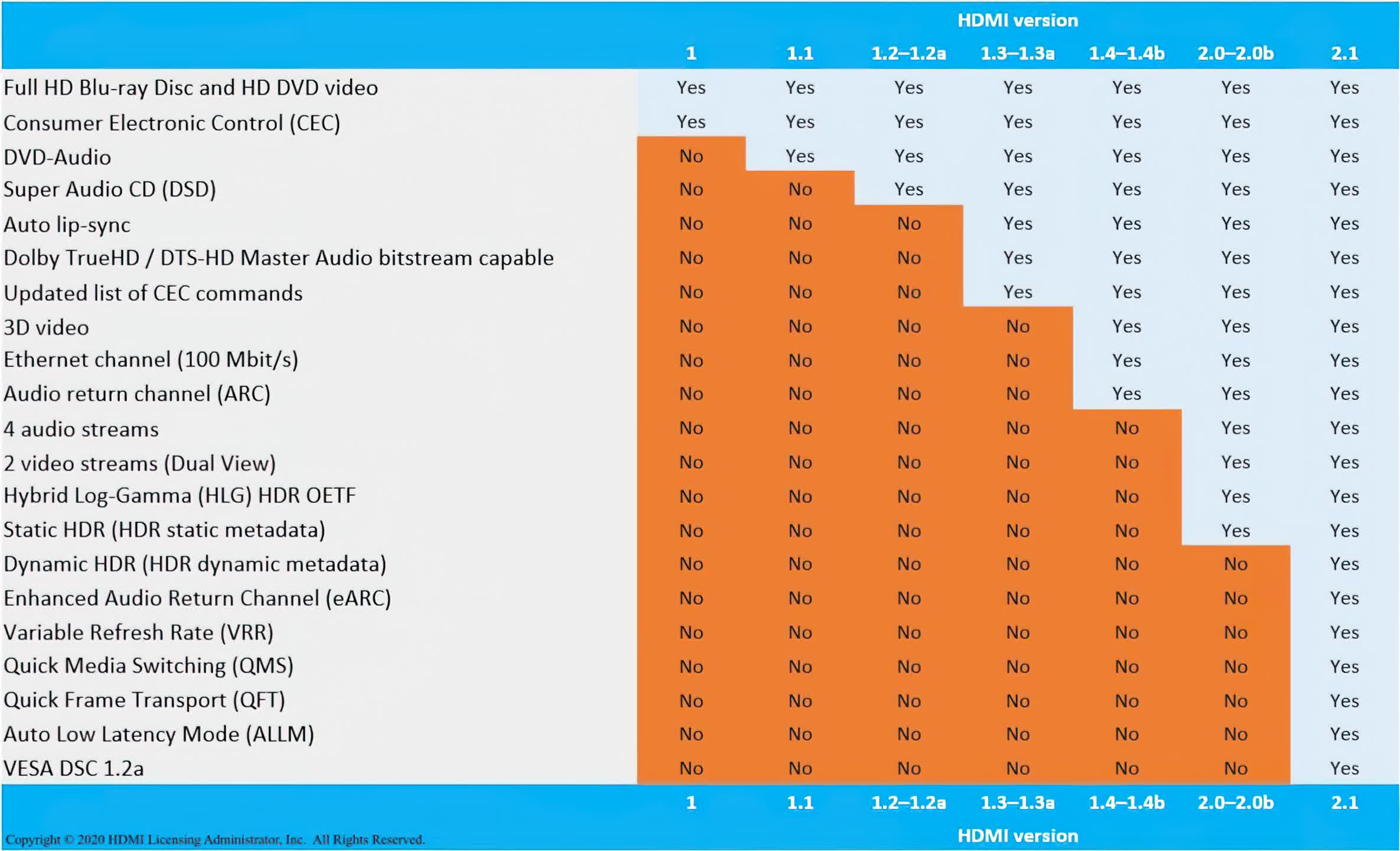
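
As a rough sanity check on that fine print, the sketch below compares the uncompressed data rate of the two video modes against each version's usable link rate. It counts active pixels only at 24 bits per pixel and ignores blanking intervals, so real requirements are somewhat higher. The function names are illustrative, and the link figures assume the commonly cited 18 Gbit/s (8b/10b encoded) for HDMI 2.0 and 48 Gbit/s (16b/18b encoded) for HDMI 2.1.

```python
# Back-of-the-envelope bandwidth check (active pixels only, no blanking).
# Assumed usable data rates after line-encoding overhead:
HDMI_2_0_DATA_GBPS = 18 * 8 / 10    # 18 Gbit/s TMDS, 8b/10b  -> 14.4
HDMI_2_1_DATA_GBPS = 48 * 16 / 18   # 48 Gbit/s FRL, 16b/18b  -> ~42.67


def active_pixel_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Uncompressed data rate in Gbit/s for the visible pixels of a mode."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def fits(width, height, refresh_hz, link_gbps):
    """True if the mode's active-pixel rate is within the link's data rate."""
    return active_pixel_gbps(width, height, refresh_hz) <= link_gbps


modes = {
    "1080p at 240Hz": (1920, 1080, 240),
    "4K at 120Hz": (3840, 2160, 120),
}

for name, (w, h, hz) in modes.items():
    rate = active_pixel_gbps(w, h, hz)
    print(
        f"{name}: {rate:.2f} Gbit/s | "
        f"fits HDMI 2.0: {fits(w, h, hz, HDMI_2_0_DATA_GBPS)} | "
        f"fits HDMI 2.1: {fits(w, h, hz, HDMI_2_1_DATA_GBPS)}"
    )
```

Under these assumptions, 1080p at 240Hz stays within HDMI 2.0's data rate, while uncompressed 4K at 120Hz does not, which is why the latter is treated as an HDMI 2.1 feature.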

When the HDMI Forum announced the release of 2.1 in 2017, it highlighted improvements over 2.0, including 8K at 60Hz, 4K at 120Hz, VRR, Quick Media Switching, and Quick Frame Transport. Previously, the main changes from the HDMI 1.4 standard to 2.0 were higher bandwidth, enough for 4K at 60Hz, and the addition of static HDR in the 2.0a revision.

The HDMI Forum's stance on the Xiaomi monitor's labeling is confusing. It said devices carrying the HDMI 2.1 label need not support any of those added features. According to the Forum, HDMI 2.0 is now just a subset of 2.1, so any device with functionality associated with either 2.0 or 2.1 can carry the HDMI 2.1 label.

This arbitrary labeling of the standard may confuse customers shopping for new displays, cables, and devices with features like 4K at 120Hz, dynamic HDR, eARC, or VRR. Seeing “HDMI 2.1” on the box no longer guarantees any of those features. Consumers will now have to read specification sheets carefully to make sure the display they buy actually has the advanced HDMI 2.1 functionality they want.

https://www.techspot.com/news/92606-fake-hdmi-21-doesnt-really-bother-hdmi-licensing.html