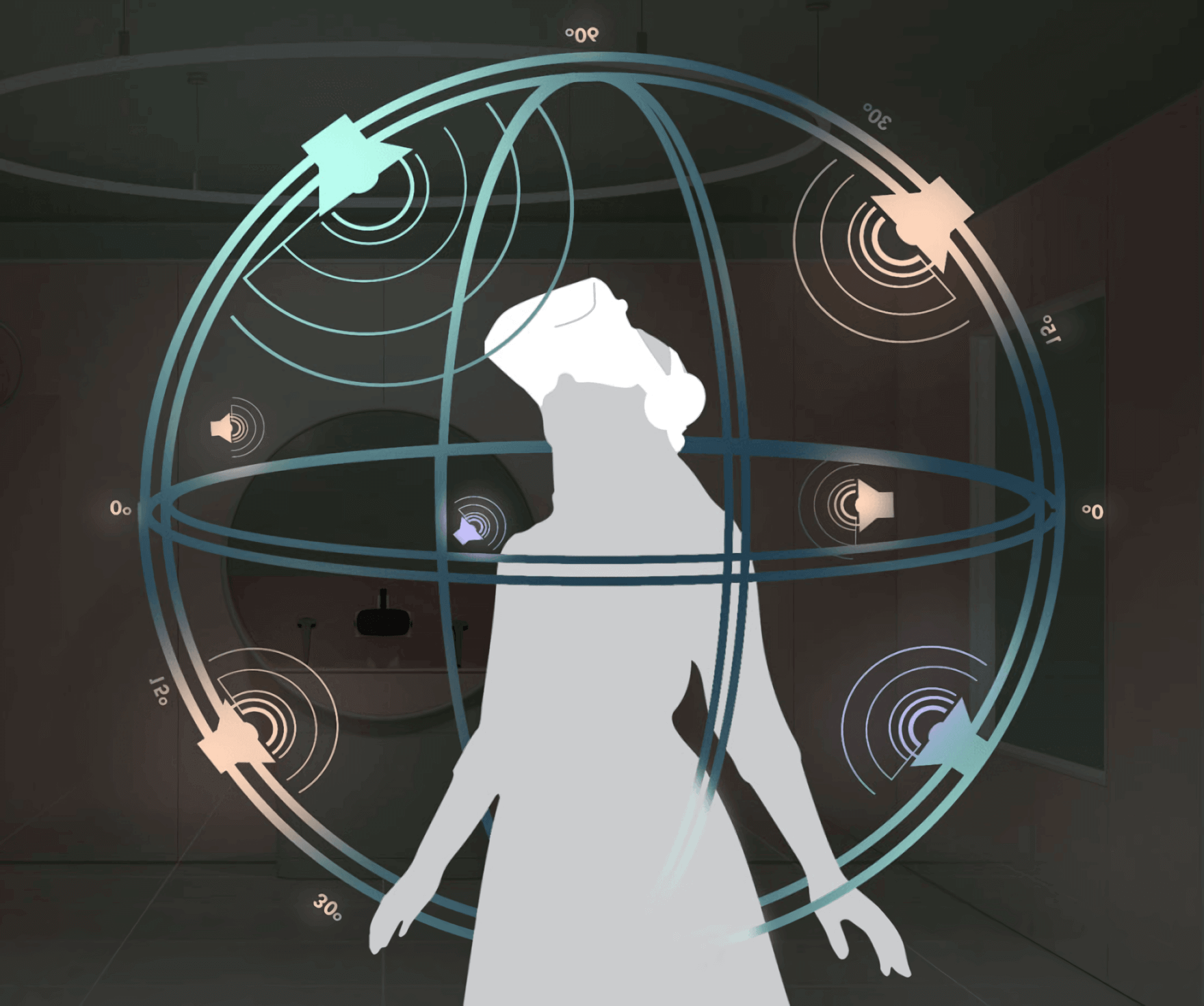

In context: Modeling sounds in a VR environment is extremely complex. For example, striking a wine glass at different points will produce different sounds. Likewise, rendering a falling plate that shatters means modeling not only the noise of it breaking but also the sound of the fragments bouncing on the floor. Then there are the added complexities of directional audio.

Traditional sound propagation modeling can take hours or even days of computation, which makes it impractical for virtual reality, where events are unscripted. However, researchers at Stanford University have developed an algorithm that can perform the necessary calculations in seconds. That is still not fast enough to produce sounds in real time as they happen in VR, but it could be used to pre-render sounds far more quickly.

“Making it easier to create models makes it practical to build interactive environments with realistic sound effects,” said Stanford Professor of Computer Science Doug James.

Until now, sound propagation models have relied on algorithms based on the work of 19th-century scientist Hermann von Helmholtz. Traditional modeling uses the Helmholtz equation and the boundary element method (BEM), which is very slow to compute. James and one of his graduate students, Jui-Hsien Wang, set the old Helmholtz approach aside and instead drew inspiration from the 20th-century Austrian composer Fritz Heinrich Klein.
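For readers curious about the math: the Helmholtz equation is the frequency-domain form of the acoustic wave equation for a pressure field $p$ with speed of sound $c$. Assuming a time-harmonic field $p(\mathbf{x}, t) = \operatorname{Re}\{P(\mathbf{x})\, e^{i\omega t}\}$ at angular frequency $\omega$ (a standard textbook step, not something specific to this research), the time derivative turns into a factor of $-\omega^2$:

$$
\frac{\partial^2 p}{\partial t^2} = c^2 \nabla^2 p
\quad\Longrightarrow\quad
\nabla^2 P + k^2 P = 0, \qquad k = \frac{\omega}{c}.
$$

Because the wavenumber $k$ depends on the frequency, a frequency-domain solver such as BEM has to handle each vibration mode's frequency as a separate problem, which, roughly speaking, is why precomputing sound for an object with many modes adds up so quickly.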

Klein combined all twelve notes of the chromatic scale into a single chord, which he called the Mutterakkord (Mother chord), for his 1921 composition Die Maschine. James and Wang applied a similar approach in their algorithm, which they dubbed KleinPAT.

“[The] Mother chord is arranged so that it contains one instance of each interval within the octave,” James and Wang wrote in their paper, KleinPAT: Optimal Mode Conflation For Time-Domain Precomputation Of Acoustic Transfer. “Our KleinPAT algorithm optimally arranges different modal tones of a vibrating 3D object into chords, which are then played together by a time-domain vector wavesolver in order to efficiently estimate all acoustic transfer fields.”
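KleinPAT's own chord arrangement and its wavesolver are far more involved than anything that fits here. As a rough, hypothetical illustration of the underlying idea, the Python sketch below sums three sinusoidal "modes" into one signal, standing in for what a single time-domain simulation might record at one point in space, and then separates them again. The function names `conflate` and `demix`, the whole-cycle frequencies, and the projection-based separation are all assumptions made for this toy; the real method has to cope with an object's actual modal frequencies and, per the paper, arranges which tones share a chord so that all transfer fields can be estimated.

```python
import math


def conflate(modes, n_samples):
    """Sum several steady-state tones into one sampled signal (toy example).

    `modes` is a list of (bins, amplitude, phase) tuples. Each tone completes
    exactly `bins` cycles over the window, which makes the tones mutually
    orthogonal as long as 0 < bins < n_samples / 2.
    """
    signal = []
    for n in range(n_samples):
        x = 0.0
        for k, amp, phase in modes:
            x += amp * math.cos(2 * math.pi * k * n / n_samples + phase)
        signal.append(x)
    return signal


def demix(signal, bins):
    """Recover (amplitude, phase) for each tone by projecting onto cos/sin."""
    n_samples = len(signal)
    recovered = {}
    for k in bins:
        c = s = 0.0
        for n, x in enumerate(signal):
            theta = 2 * math.pi * k * n / n_samples
            c += x * math.cos(theta)
            s += x * math.sin(theta)
        # For x = a*cos(theta + phi): c -> a*cos(phi), s -> -a*sin(phi).
        c *= 2 / n_samples
        s *= 2 / n_samples
        recovered[k] = (math.hypot(c, s), math.atan2(-s, c))
    return recovered


# Three hypothetical "modes" played together as one chord.
modes = [(5, 1.0, 0.3), (12, 0.5, -1.2), (31, 0.25, 2.0)]
chord = conflate(modes, n_samples=256)
for k, (amp, phase) in sorted(demix(chord, [k for k, _, _ in modes]).items()):
    print(f"bin {k}: amplitude {amp:.3f}, phase {phase:.3f}")
```

Choosing frequencies that each complete a whole number of cycles in the window makes the tones exactly orthogonal, so a simple projection recovers each one from the combined signal. Tones that sit too close together in frequency would be much harder to pull apart from a short recording, which hints at why the way modes are grouped into chords matters.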

The research is still at an early stage and requires specialized equipment, so it could be some time before we see it in commercial VR rigs. Even so, Stanford's GPU-accelerated system can compute the same sounds as BEM modeling hundreds or even thousands of times faster.

Image credit: The Reality of Design