The base clock for the 10875H is 2.3 GHz. You're also missing some information here: a lower TDP doesn't mean a lower CPU temperature, because temperature also depends on the combination of voltage and frequency. If you load only 1-2 cores at 1.4 V, which the 10875H will do if you leave it alone, the CPU will reach 85°C almost instantly even with a low TDP cap. That causes the fan, which is tied to CPU temperature, to spin up to 5000 rpm at 52 dBA just doing normal tasks.
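
To put a rough number on the voltage and frequency point, here is a minimal sketch using the textbook approximation that dynamic power scales with V² × f. The 1.4 V and 5 GHz figures are the ones mentioned in this thread; the 1.0 V / 3.5 GHz reference point is purely an assumption for illustration, not a measured value from my laptop, and the model ignores leakage and everything else.

```python
def relative_dynamic_power(voltage, freq_ghz, ref_voltage, ref_freq_ghz):
    """Per-core dynamic power relative to a reference point.

    Uses the common approximation P_dyn ~ C * V^2 * f, so the
    capacitance term C cancels out in the ratio.
    """
    return (voltage / ref_voltage) ** 2 * (freq_ghz / ref_freq_ghz)


# 1.4 V and 5.0 GHz come from the post; the reference point
# (1.0 V at 3.5 GHz) is a hypothetical, illustrative value.
ratio = relative_dynamic_power(1.4, 5.0, ref_voltage=1.0, ref_freq_ghz=3.5)
print(f"Boosted core draws about {ratio:.2f}x the dynamic power of the reference")
```

Under those assumptions a single boosted core burns close to three times the dynamic power of the reference point, all concentrated in one small area of the die, which is why the temperature can spike even when total package power is capped.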

Now, for everyday tasks there is no need for the CPU to run at 5 GHz; that's just the way it is. For gaming I actually gain performance thanks to Nvidia's Dynamic Boost feature, which allows the GPU to draw more power when the CPU is not fully utilized. The 2070 Super Max-Q in my laptop is in fact drawing 105 W during gaming here, which would make it faster than a 2080 Super Max-Q at 80 W.

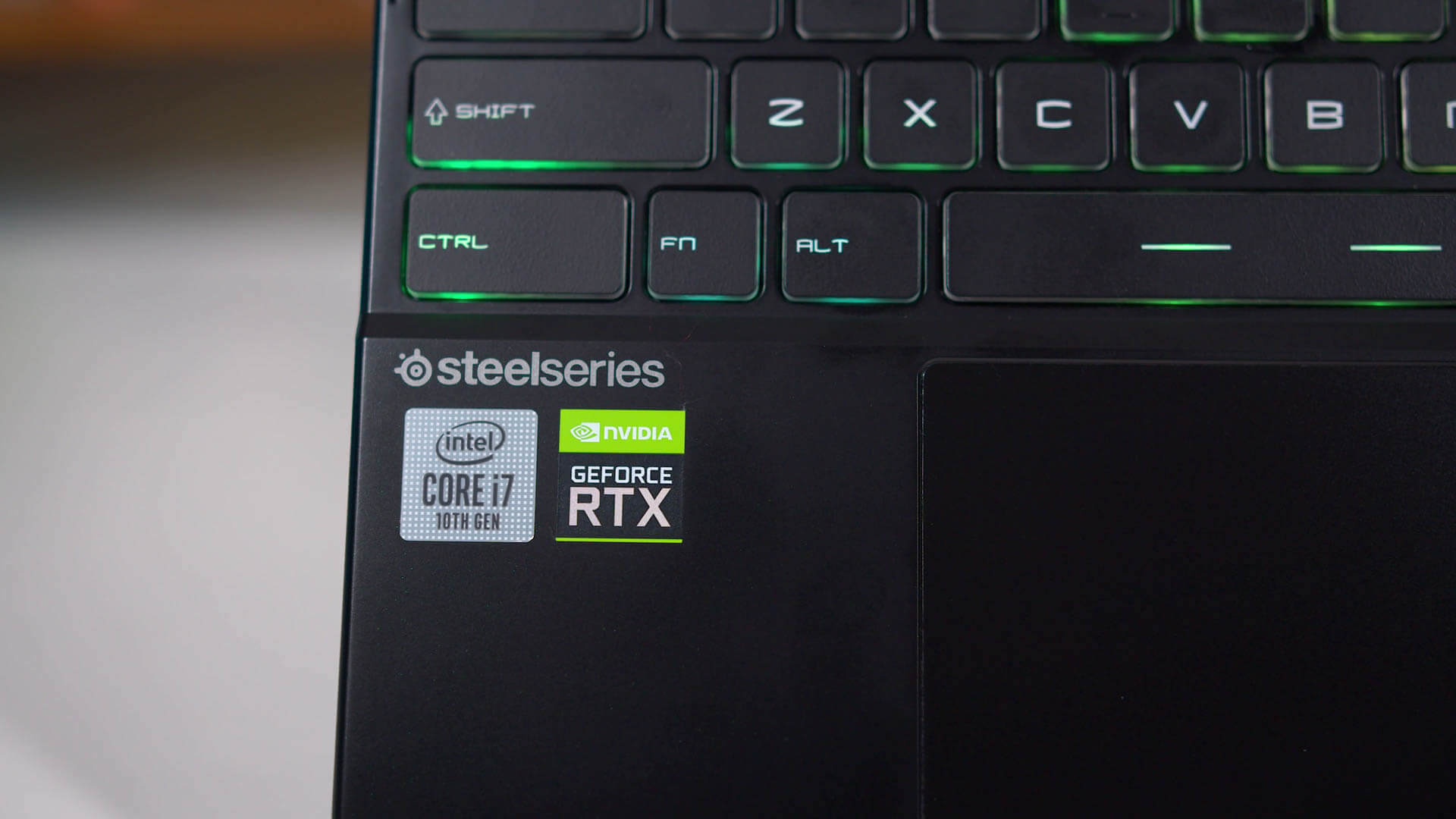

Here is a review of my laptop, but with a different config: mine is a 10875H + 2070 Super, while this one is a 10750H + 2080 Super.

The Triton 500 series is Acer's premium performance and gaming ultraportable, a direct-tier competitor for the ROG Zephyrus, Stealths, and Blades out there.

www.ultrabookreview.com

Here is my Time Spy score with the lowered CPU clocks (the stock score is 9.2k). With a CPU score of 8.6k, which is already faster than a desktop 9700K, there is really no point in going for faster clocks besides damaging my eardrums.

Yeah, it's really confusing. My 2070 Super Max-Q is 80 W at stock; activating the "Extreme" profile in Acer's software increases the TDP to 90 W, and Nvidia Dynamic Boost (which is only available on select models) allows an additional 15 W for the GPU. With 25 W more power, performance can easily improve by 10%...
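
The power numbers above add up like this. It is just a quick sketch of the arithmetic; the wattages are the ones quoted in this post, and the function and constant names are made up for illustration (they are not part of any Acer or Nvidia tool).

```python
STOCK_TGP_W = 80            # 2070 Super Max-Q at stock (from the post)
EXTREME_PROFILE_TGP_W = 90  # with the "Extreme" profile enabled (from the post)
DYNAMIC_BOOST_W = 15        # extra headroom from Nvidia Dynamic Boost (from the post)


def gpu_power_budget(extreme_profile: bool, dynamic_boost: bool) -> int:
    """Return the GPU power budget in watts for a given combination of settings."""
    budget = EXTREME_PROFILE_TGP_W if extreme_profile else STOCK_TGP_W
    if dynamic_boost:
        budget += DYNAMIC_BOOST_W
    return budget


total = gpu_power_budget(extreme_profile=True, dynamic_boost=True)
extra = total - STOCK_TGP_W
print(f"{total} W total, {extra} W over stock ({extra / STOCK_TGP_W:.0%} more power)")
```

That works out to 105 W, which matches what I see during gaming. Performance does not scale linearly with power, so the roughly 10% gain is an estimate rather than something this arithmetic proves.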