The big picture: Bringing wireless products to market requires filing a lot of regulatory paperwork. Google has been working on Soli, a small radar module for gesture detection, and has now been granted FCC approval to use the device with far fewer restrictions.

Started in 2015 as part of Google's Advanced Technology and Projects group, Soli has grown into a 3D hand-sensing technology that uses radar to quickly detect intricate hand gestures. In a recent FCC ruling, Google was granted permission to operate Soli at higher power levels and on aircraft.

Pressing your thumb and index finger together can simulate a virtual button push. Rubbing your fingers together can replicate turning a dial. Sliding your thumb along your index finger acts as a virtual slide control.

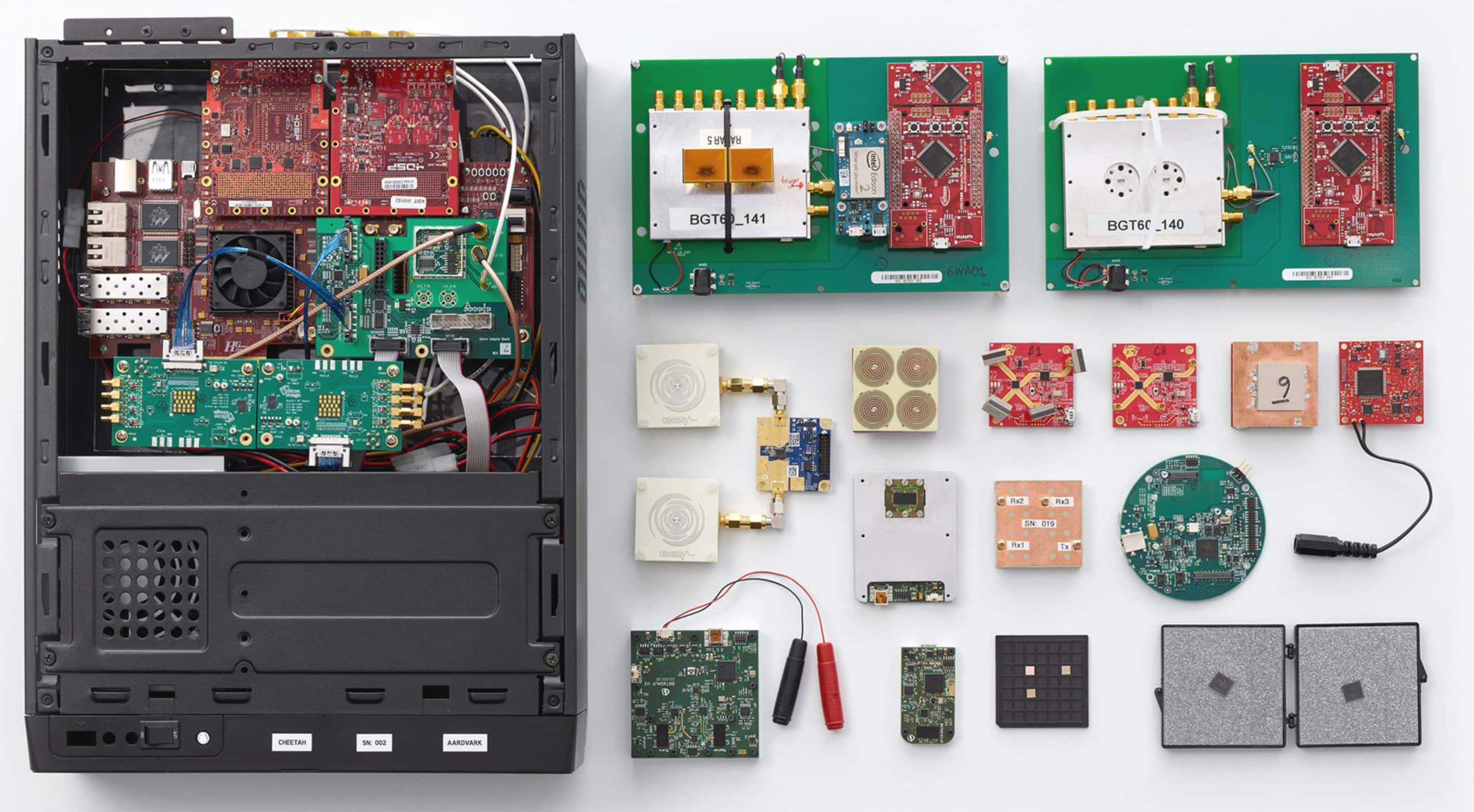

Soli started off as a box full of off-the-shelf components. Google engineers went through numerous iterations before condensing the system down to an 8mm by 10mm chip, complete with integrated antennas, that operates in the 60GHz ISM band.

Now that regulatory restrictions on output power have been relaxed, Google has a bit more freedom to get some real-world testing done. Applications include wearables, smartphones, automotive use, and IoT devices.

For tracking small motions at relatively close range, one would expect a high-resolution radar to be required. However, Google has actually implemented a fairly coarse spatial resolution. Instead of directly measuring where the hand is, Soli detects movements by looking at changes in the received signal, which can be correlated to recognized actions. A group of students even used Soli to determine the composition of objects.
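To make the idea of matching signal changes to known actions concrete, here is a minimal Python sketch. It is not the Soli SDK or Google's actual pipeline: the function names, the toy two-channel "frames", and the gesture templates are all hypothetical, and plain cosine similarity stands in for whatever recognition method Soli really uses.

```python
import math

def frame_deltas(frames):
    """Return the change between each pair of consecutive signal frames."""
    return [
        [cur - prev for prev, cur in zip(a, b)]
        for a, b in zip(frames, frames[1:])
    ]

def flatten(rows):
    """Flatten a list of per-frame deltas into one feature vector."""
    return [x for row in rows for x in row]

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def classify(frames, templates):
    """Pick the template whose signal-change pattern best matches the observed frames."""
    feature = flatten(frame_deltas(frames))
    scores = {name: cosine_similarity(feature, t) for name, t in templates.items()}
    return max(scores, key=scores.get)

# Hypothetical templates: each is a flattened sequence of frame-to-frame changes.
TEMPLATES = {
    "button_tap": [1.0, 0.0, -1.0, 0.0],  # channel 0 rises, then falls back
    "slide": [0.0, 1.0, 0.0, 1.0],        # channel 1 rises steadily
}

# Made-up noisy observation: three frames of two received-signal channels.
observed = [[0.1, 0.0], [0.9, 0.1], [0.2, 0.1]]
print(classify(observed, TEMPLATES))
```

Note that the classifier never estimates where the fingers are. It only compares how the signal changed over time against stored patterns, which is why a coarse spatial resolution can still be enough.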

Assuming that a device has enough processing power, the Soli SDK allows for effective frame rates between 100 and 10,000 FPS. This truly real-time tracking has the potential to create more natural interactions across a variety of Google's intended applications.
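To put the processing-power caveat in perspective, the quoted frame rates translate directly into a time budget per frame. The short Python calculation below is simple arithmetic on the 100 and 10,000 FPS figures; the helper name is hypothetical and not part of the Soli SDK.

```python
def frame_budget_us(fps):
    """Time available to process one frame, in microseconds."""
    return 1_000_000 / fps

for fps in (100, 10_000):
    print(f"{fps} FPS -> {frame_budget_us(fps):.0f} microseconds per frame")
```

At the low end, a device has 10,000 microseconds (10 ms) to handle each frame. At the high end, it has only 100 microseconds.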

https://www.techspot.com/news/78073-google-gets-permission-operate-soli-gesture-detection-radar.html