What just happened? IBM Research recently published a paper detailing the development of an analog chip that promises GPU-level performance for AI inference tasks while markedly improving power efficiency. GPUs are the workhorse of AI processing, but their high power consumption drives up operating costs.

The analog AI chip, which is still in development, can both compute and store data in the same location. This design is loosely modeled on the way the human brain works, and it is the main source of the chip's power efficiency. It contrasts with current solutions, which must constantly shuttle data between memory and processing units, a bottleneck that slows computation and increases power usage.
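To make the data-movement argument concrete, here is a deliberately simplified accounting sketch. It assumes a single fully connected layer computing y = W·x, counts only values that cross the memory/compute boundary, and ignores caching, batching and weight reuse, all of which real GPUs rely on to soften the problem. The function name and the 8-bit value size are illustrative assumptions, not figures from IBM.

```python
def bytes_moved(n_in: int, n_out: int, bytes_per_value: int = 1,
                weights_stationary: bool = False) -> int:
    """Bytes crossing the memory/compute boundary for one y = W x pass.

    Toy model (assumption): inputs are read and outputs written in both
    designs; the n_in x n_out weight matrix must also be fetched unless it
    already sits where the computation happens (in-memory computing).
    No caching, batching or weight reuse is modelled.
    """
    activations = (n_in + n_out) * bytes_per_value
    weights = 0 if weights_stationary else n_in * n_out * bytes_per_value
    return activations + weights


if __name__ == "__main__":
    # A 256 x 256 layer, the same size as one crossbar tile on IBM's chip.
    fetched = bytes_moved(256, 256)                             # weights fetched every pass
    in_place = bytes_moved(256, 256, weights_stationary=True)   # weights stay put
    print(fetched, in_place, fetched / in_place)
```

Under these toy assumptions the weight traffic dominates, which is the cost in-memory computing is designed to avoid; real-world savings depend heavily on workload and memory hierarchy.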

In IBM's internal tests evaluating the compute precision of analog in-memory computing, the new chip achieved 92.81 percent accuracy on the CIFAR-10 image dataset. IBM claims this accuracy is on par with any existing chip employing similar technology. The chip was also notably energy-efficient during testing, consuming only 1.51 microjoules per input.
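The 1.51-microjoule figure is easier to interpret with a little arithmetic. The sketch below assumes that each CIFAR-10 image counts as one input and that the per-input energy stays constant; both are simplifying assumptions, and the helper names are hypothetical.

```python
ENERGY_PER_INPUT_J = 1.51e-6  # 1.51 microjoules per input, as reported in the article
CIFAR10_TEST_IMAGES = 10_000  # size of the standard CIFAR-10 test split


def energy_joules(n_inputs: int, energy_per_input: float = ENERGY_PER_INPUT_J) -> float:
    """Total energy to process n_inputs, assuming a fixed cost per input."""
    return n_inputs * energy_per_input


def inputs_per_joule(energy_per_input: float = ENERGY_PER_INPUT_J) -> float:
    """How many inputs one joule covers at a fixed per-input cost."""
    return 1.0 / energy_per_input


if __name__ == "__main__":
    print(f"{energy_joules(CIFAR10_TEST_IMAGES):.4f} J for the CIFAR-10 test split")
    print(f"{inputs_per_joule():,.0f} inputs per joule")
```

At this rate the full 10,000-image test split would take roughly 0.015 joules, or about 662,000 inputs per joule, ignoring any host-side or data-loading overhead.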

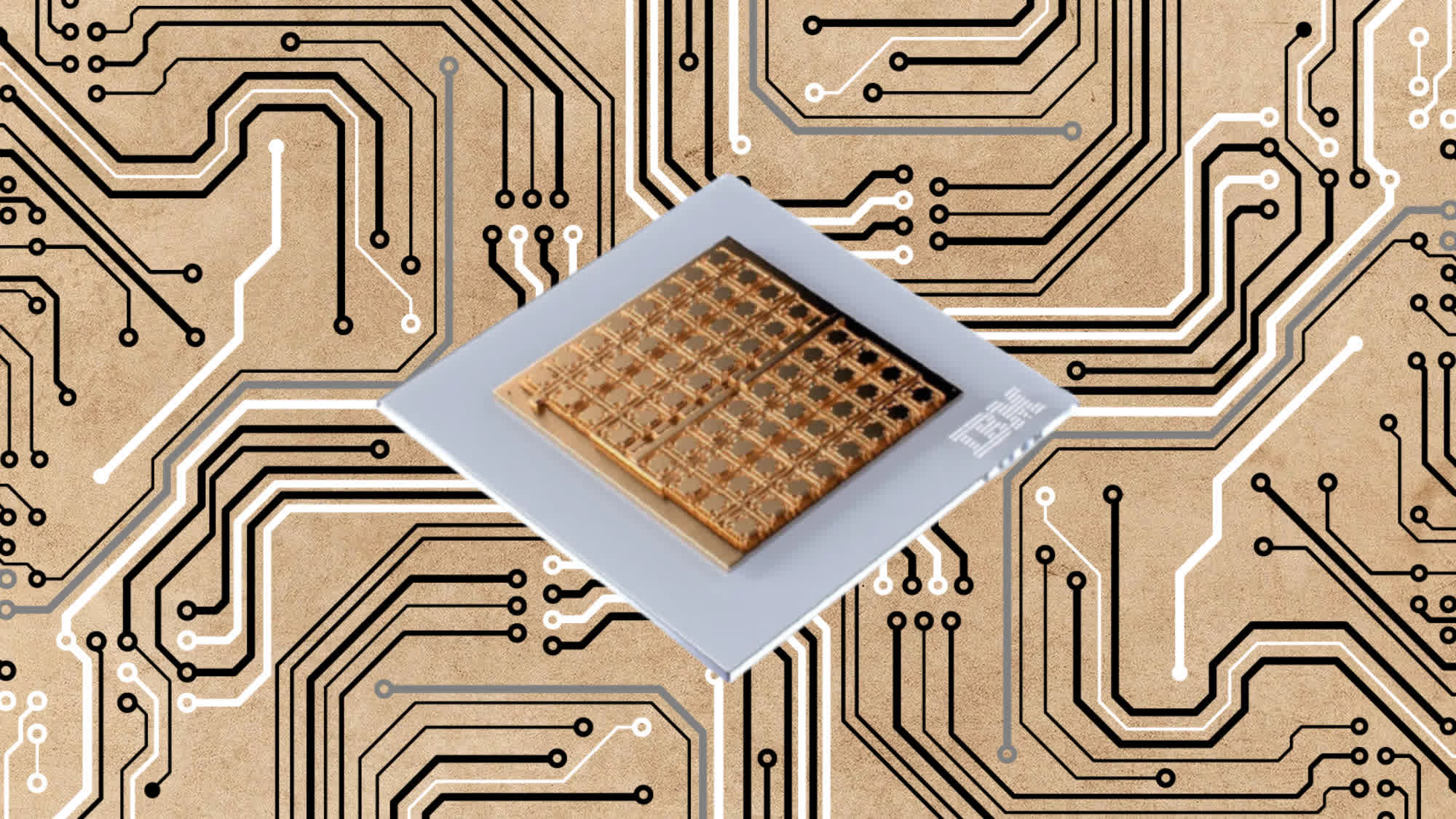

The research paper, recently published in Nature Electronics, goes into more detail on the chip's construction. It is built on a 14 nm complementary metal-oxide-semiconductor (CMOS) process and incorporates 64 analog in-memory compute cores (or tiles). Each core contains a 256-by-256 crossbar array of synaptic unit cells, allowing it to perform the computations associated with a layer of a deep neural network (DNN). The chip also includes a global digital processing unit that handles the more complex operations required by certain types of neural networks.
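Each crossbar tile performs a layer's matrix-vector multiplication in place: inputs are applied as voltages, weights are stored as device conductances, and the currents summed along each column are the outputs. The Python sketch below models an ideal, noise-free tile under assumptions that are not taken from the paper: signed weights are split across a differential pair of conductances, G_MAX is an arbitrary illustrative value, and helper names such as `crossbar_read` and `tiles_needed` are hypothetical. Real analog hardware must also cope with device noise, drift and limited-precision converters, which this sketch ignores.

```python
import math

TILE_SIZE = 256   # each core holds a 256 x 256 crossbar (from the article)
NUM_TILES = 64    # cores on the chip (from the article)
G_MAX = 25e-6     # hypothetical maximum device conductance in siemens


def to_conductances(weights):
    """Map a signed weight matrix onto differential conductance pairs.

    weights[i][j] links input row i to output column j. Positive values go on
    G_plus, negative values on G_minus, scaled so the largest |w| uses G_MAX.
    """
    w_max = max(abs(w) for row in weights for w in row) or 1.0
    scale = G_MAX / w_max
    g_plus = [[max(w, 0.0) * scale for w in row] for row in weights]
    g_minus = [[max(-w, 0.0) * scale for w in row] for row in weights]
    return g_plus, g_minus, scale


def crossbar_read(g_plus, g_minus, voltages):
    """Ideal crossbar: column current I_j = sum_i V_i * (G+_ij - G-_ij).

    Ohm's law gives each cell's current; Kirchhoff's current law sums them
    along the column, so the matrix-vector product happens where weights live.
    """
    n_cols = len(g_plus[0])
    return [
        sum(v * (gp[j] - gm[j]) for v, gp, gm in zip(voltages, g_plus, g_minus))
        for j in range(n_cols)
    ]


def tiles_needed(n_in, n_out, tile=TILE_SIZE):
    """Number of tile x tile crossbars needed to hold an n_in x n_out layer."""
    return math.ceil(n_in / tile) * math.ceil(n_out / tile)


if __name__ == "__main__":
    weights = [[1.0, -2.0],
               [0.5, 3.0]]          # 2 inputs x 2 outputs
    x = [2.0, 1.0]                  # inputs applied as voltages (assumed 1 V per unit)
    g_plus, g_minus, scale = to_conductances(weights)
    currents = crossbar_read(g_plus, g_minus, x)
    y = [i / scale for i in currents]  # rescale currents back to weight units
    print(y)                           # approximately [2.5, -1.0]
    print(tiles_needed(784, 300), NUM_TILES * TILE_SIZE * TILE_SIZE)
```

The `tiles_needed` helper illustrates why a large layer may be spread across several 256-by-256 tiles: under this naive partitioning, a 784-input, 300-output layer would occupy eight of the chip's 64 tiles.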

The new IBM chip is undeniably an intriguing advancement, especially given the sharp rise in power consumption seen in AI processing systems. Reports indicate that AI inferencing racks can consume up to ten times the power of standard server racks. This surge drives up AI processing costs and raises environmental concerns. In this context, any efficiency improvement is likely to be embraced by the industry.

An added advantage is that a specialized, power-efficient AI chip might reduce demand for GPUs, possibly lowering their prices, which would benefit gamers. However, this is speculative at this stage, as the IBM chip is still under development. The timeline for its commercial production remains unknown, and until the chip reaches the market, GPUs will continue to dominate AI processing, making it unlikely that they will become more affordable in the near future.

https://www.techspot.com/news/99859-ibm-new-analog-ai-chip-more-efficient-than.html