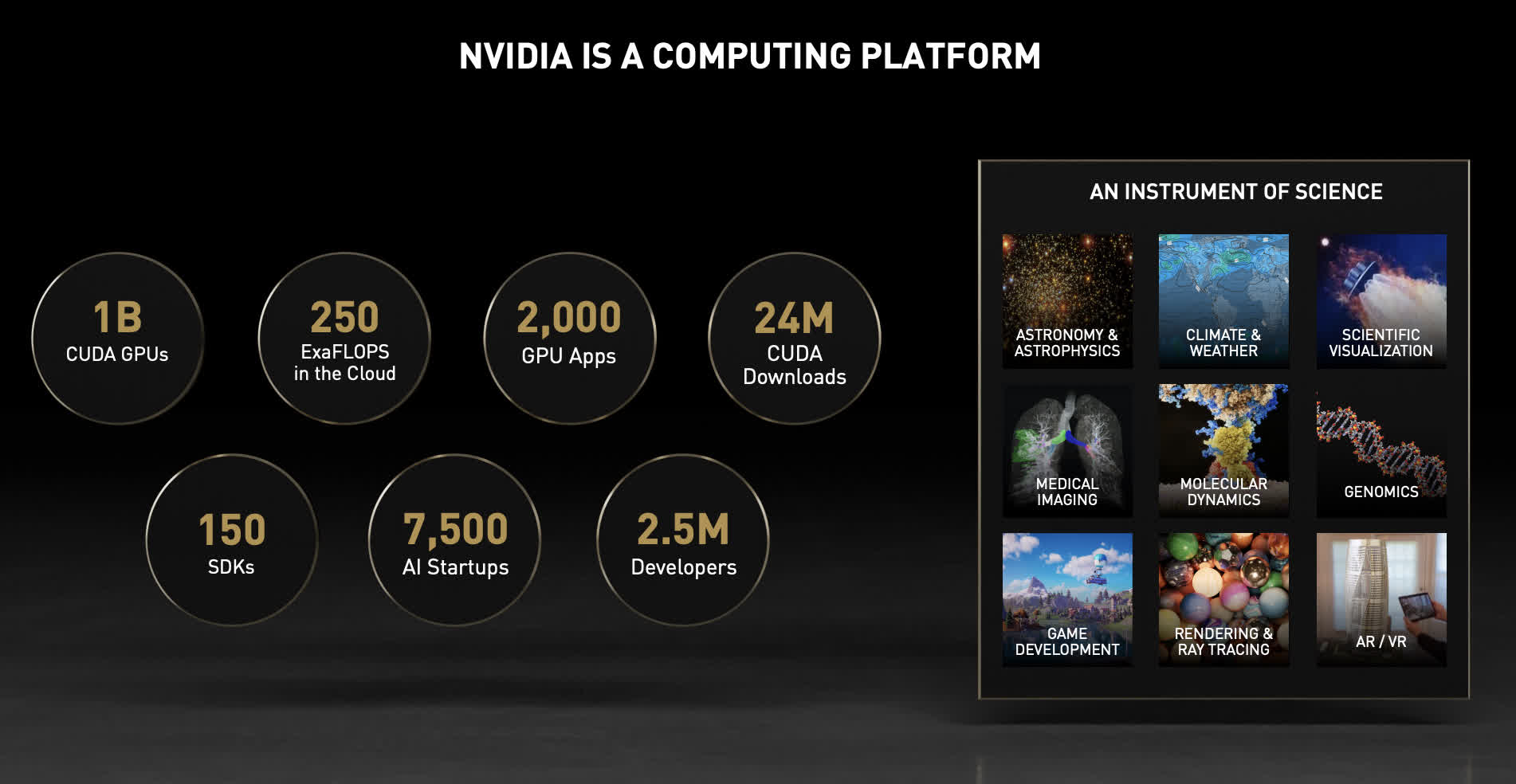

The big picture: For those of you who haven’t been paying close attention, Nvidia is no longer a gaming company. Oh, sure, they continue to make some of the best-performing graphics chips for gaming and host a global cloud-based gaming service called GeForce Now. But if you take a step back and survey the full range of the company’s offerings, it’s significantly broader than it has ever been—a point CEO Jensen Huang hammered home during his GTC keynote this week. In his words, “Nvidia is now a full-stack computing company.”

Reflecting how much wider Nvidia’s scope has become over the past few years, there was, ironically, probably as much news about non-GPU chips as about GPUs at GTC 2021 (the GPU Technology Conference).

Between a new 5nm Arm-based CPU codenamed “Grace,” a broadening of the DPU line created from the Mellanox acquisition, new additions to its automotive chips and platforms, and discussions of quantum computing, 5G data processing and more, the company is reaching into ever broader portions of the computing landscape.

GPUs weren’t left out, of course. There were new GPU and GPU-related announcements, including an impressive array of new cloud-based, AI-powered GPU software and services.

To its credit, Nvidia has been broadening the range of applications for GPUs for several years now. Its impact on machine learning, deep neural networks and other sophisticated AI models has been well documented, and at this year’s show, the company continued to extend that reach. In particular, Nvidia highlighted its enterprise efforts with a collection of pre-built AI models that companies can more easily deploy for many different applications. The previously announced Jarvis conversational AI tool, for example, is now generally available and can be used by businesses to build automated customer service tools.

The newly unveiled Maxine project is designed to improve video quality over low-bandwidth connections and to perform automatic transcription and real-time translation. These are timely and practical capabilities that many collaboration tools already offer, but they could likely be enhanced through integration with Nvidia’s cloud-based, AI-powered tools. The company also made an important announcement with VMware, noting that Nvidia’s AI tools and platforms can now run in virtualized VMware environments in addition to on dedicated bare-metal hardware. While seemingly mundane at first glance, this is actually a critically important development for the many businesses that run a good portion of their workloads on VMware.

As impressive as the GPU-focused applications for the enterprise are, the big news splash (and some of the biggest confusion) out of GTC came from the company’s strategic shift to three different types of chips: GPUs, DPUs and CPUs. CEO Huang neatly summarized the approach with a slide that showed the roadmaps for the three different chip lines out through 2025, highlighting how each line will get updates every few years, but with different start points, allowing the company to have one (and sometimes two) major architectural advances every year.

The DPU line, codenamed BlueField, builds on the high-speed networking technology Nvidia acquired when it purchased Mellanox last April. Specifically targeted at data centers, HPC (high-performance computing) and cloud computing applications, BlueField chips are well suited to accelerating modern web-based applications.

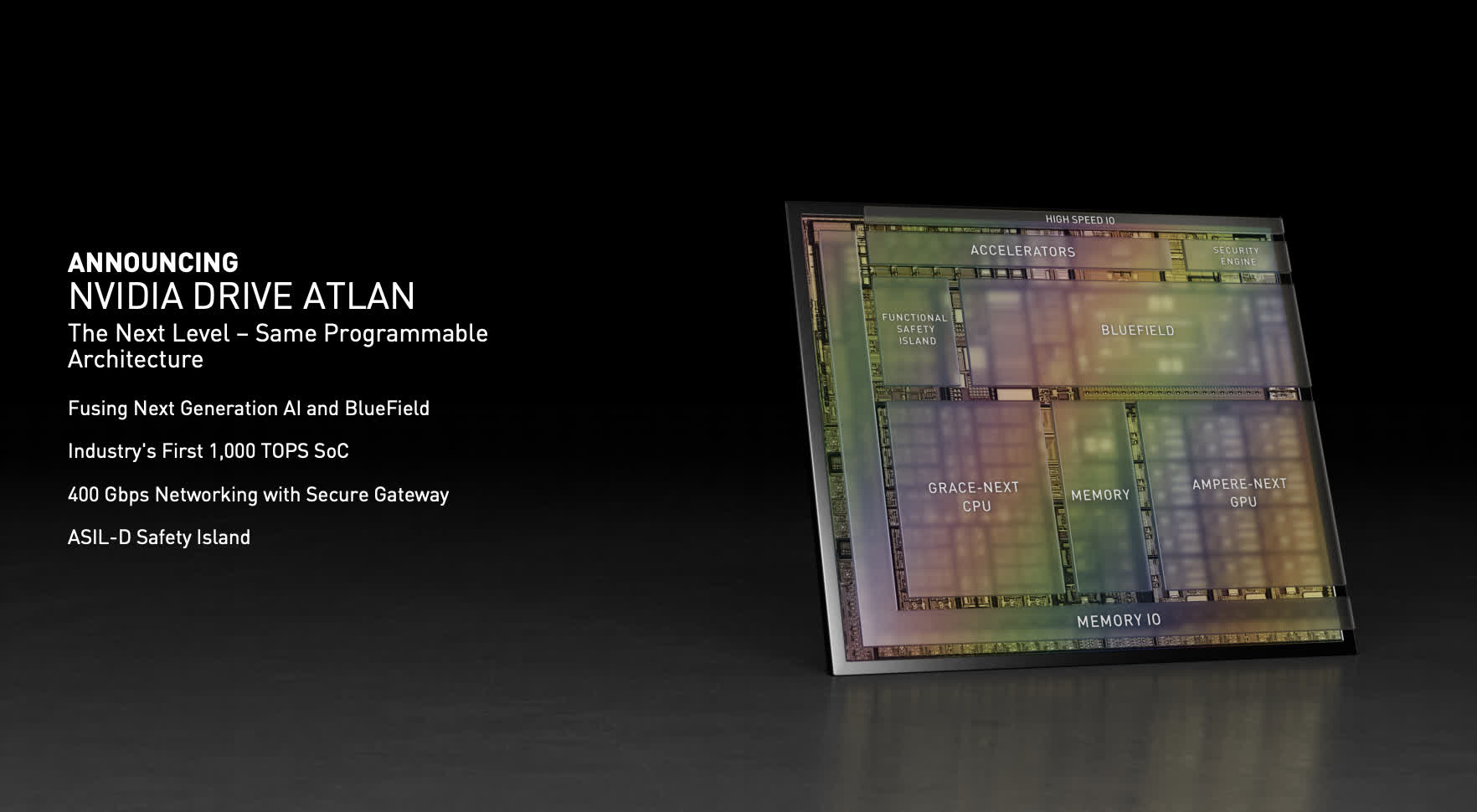

Because these applications are split into numerous smaller containers that often run on multiple physical servers, they are highly dependent on what’s commonly called “east-west” traffic between computing racks in a data center. Importantly, however, these same software development principles are being used for an increasingly wide range of applications, including automotive, which helps explain why the latest generation of automotive SoC (codenamed Atlan and discussed below) includes a BlueField core in its design.

The new CPU line, which unquestionably generated the most buzz, is an Arm-based design codenamed Grace (after computing pioneer Grace Hopper, a classy move on Nvidia’s part). Though many initial reports suggested it was a competitor to Intel and AMD x86 server CPUs, Grace is initially aimed only at HPC and other workloads built on huge AI models. It is not a general-purpose CPU design. Still, in the kinds of advanced, very demanding and memory-intensive AI applications that Nvidia is initially targeting with Grace, it solves the critical problem of connecting GPUs to system memory at significantly faster speeds than traditional x86 architectures can provide. Clearly, not every organization can take advantage of this, but for the growing number of organizations building large AI models, it’s still very important.

Of course, part of the reason for the confusion is that Nvidia is currently trying to purchase Arm, so any connection between the two is bound to get inflated into a larger issue. Plus, Nvidia did demonstrate a range of different applications where it’s working to combine its IP with Arm-based products, including cloud computing with Amazon’s AWS Graviton, scientific computing in conjunction with Ampere’s general purpose Altra server CPUs, 5G network infrastructure and edge computing with Marvell’s Octeon, and PCs with MediaTek’s MT819x SoCs.

Alongside a next-generation BlueField DPU core, the diagram of the new Atlan automotive SoC includes a “Grace Next” CPU core, generating even more speculation. Speaking of which, Nvidia also highlighted a number of automotive-related announcements at GTC.

The company’s next-generation automotive platform is codenamed Orin and is expected to show up in vehicles from big players like Mercedes-Benz, Volvo, Hyundai and Audi starting next year. The company also announced the Orin central computer, in which a single chip can be virtualized to run four different applications: the instrument cluster, the infotainment system, passenger interaction and monitoring, and autonomous and assisted driving features with confidence view (a visual display of what the car’s computers are seeing, designed to reassure passengers that the system is functioning properly). The company also debuted its eighth-generation Hyperion autonomous vehicle (AV) platform, which incorporates multiple Orin chips, image sensors, radar, lidar and the company’s latest AV software.

A new chip, the aforementioned Atlan, is scheduled to arrive in 2025. While many may find a multi-year pre-announcement to be overkill, it’s relatively standard practice in the automotive industry, where carmakers typically start working on vehicles about three years before their introduction.

Atlan is intriguing on many levels, not the least of which is the fact that it’s expected to quadruple the computing power of Orin (which comes out in 2022) and reach a rate of 1,000 TOPS (tera operations per second). As hinted at earlier, Atlan is also the first Nvidia product to include all three of the company’s core chip architectures—GPU, DPU and CPU—in a single semiconductor design.

The details remain vague, but the next generation of each of the current architectures is expected to be part of Atlan, potentially making it a poster child for the company’s expanded opportunities, as well as a great example of the technical sophistication of the automobiles released in that era. Either way, Atlan will definitely be something to watch.

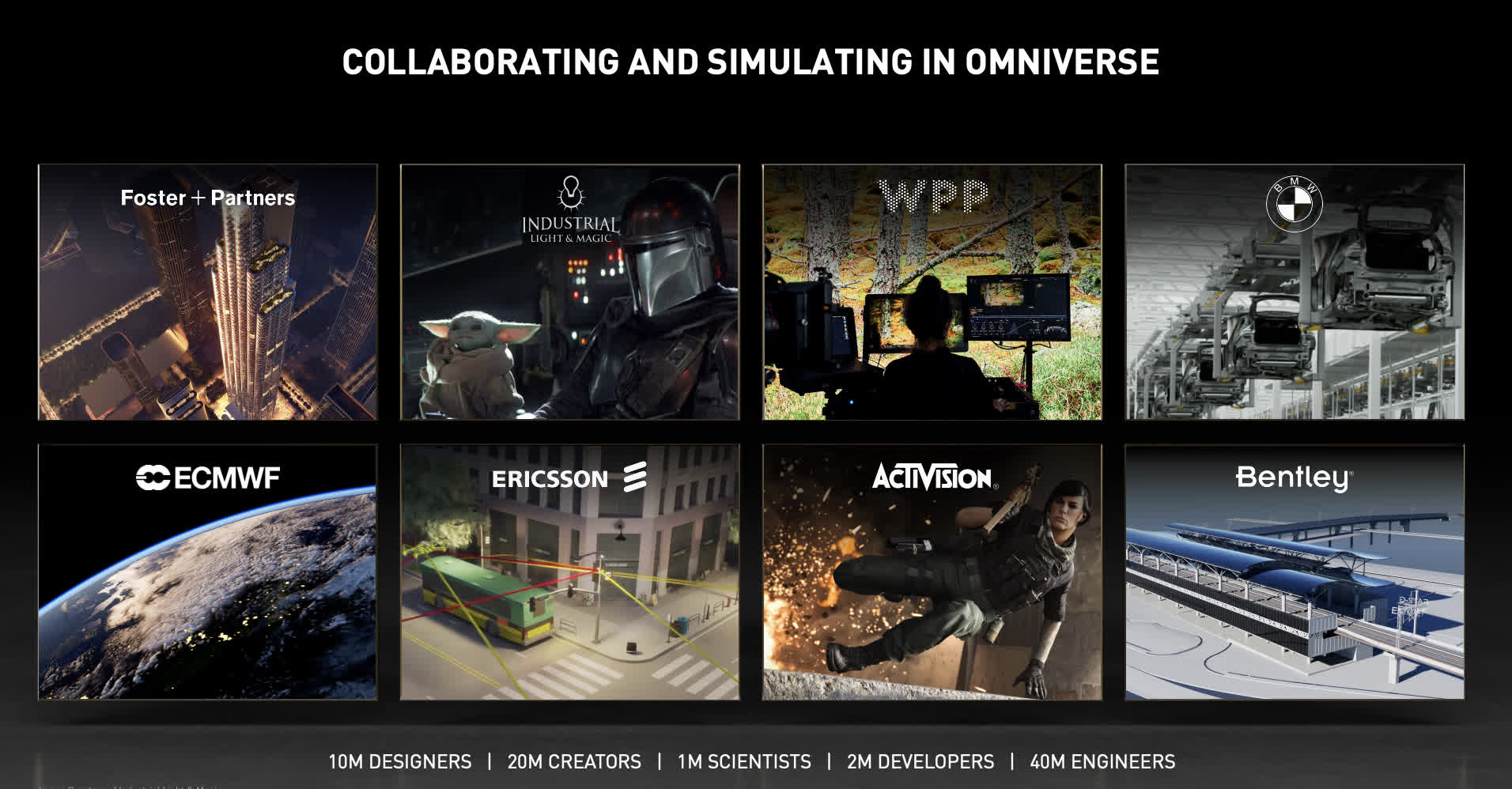

All told, Nvidia showcased a wide-ranging and impressive story at GTC. I haven’t even mentioned, until now, its Omniverse platform for 3D collaboration, its DGX supercomputer-like hardware platforms, and a host of other announcements the company made. There was simply too much to cover in a single column. However, this much is apparent: Nvidia is focused on a much broader range of opportunities than ever before.

Even though gaming fans may have been disappointed by the lack of news for them, anyone thinking about the future of computing can’t help but be impressed by the depth of what Nvidia unveiled. Gaming and GPUs, it seems, are just the start.

Bob O’Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on Twitter @bobodtech.

https://www.techspot.com/news/89280-if-you-havent-paying-attention-nvidia-no-longer.html