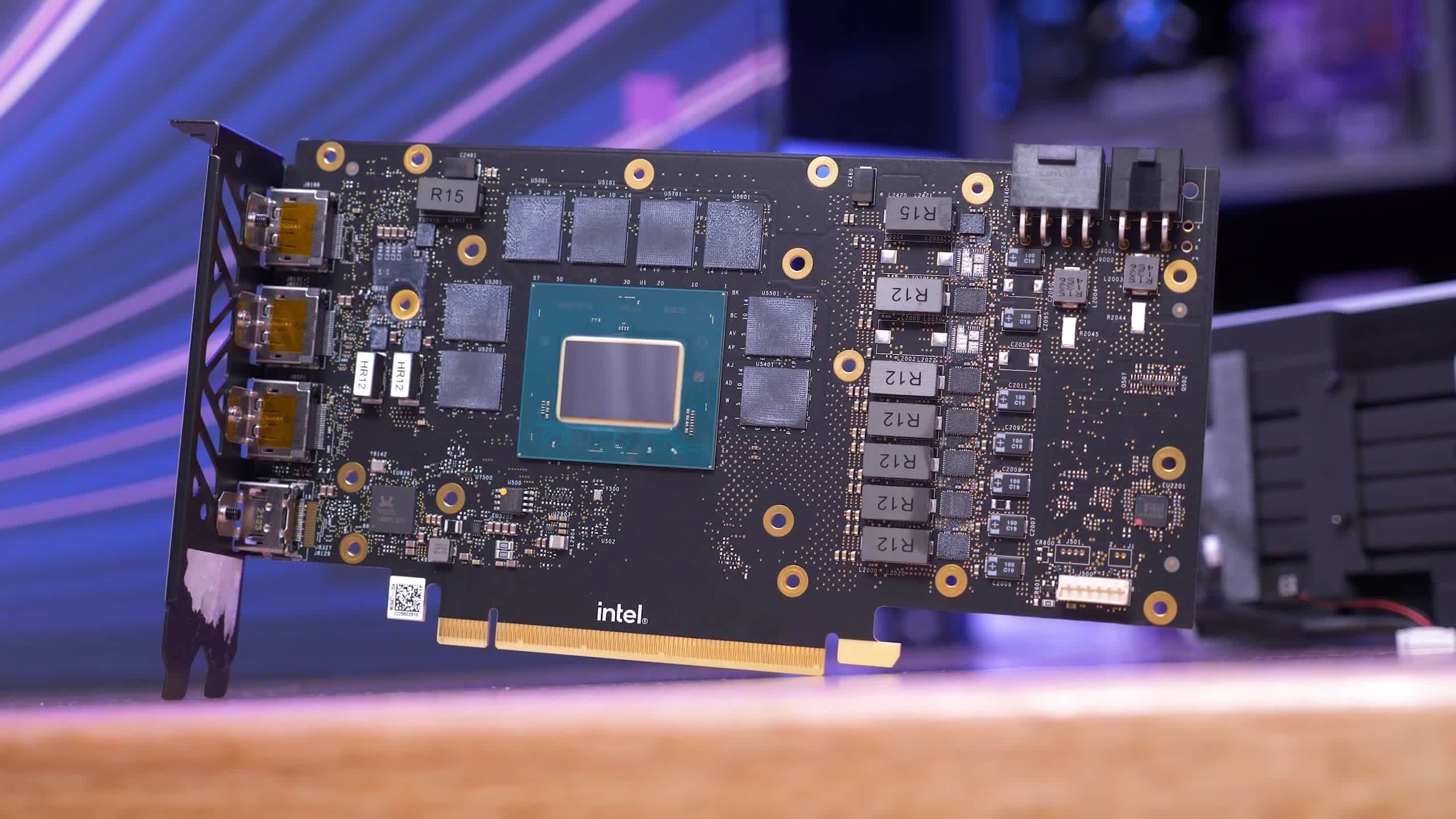

Why it matters: After a troubled launch and several big driver updates, Intel's Arc Alchemist GPUs aren't exactly on all PC gamers' wishlists. However, the company is taking the criticism in stride by incorporating the lessons learned from architectural and software mistakes into upcoming Battlemage discrete graphics solutions. There is no release date and there are no confirmed specs to get excited about, but we now know that Team Blue is looking to push both AMD and Nvidia to rethink their pricing strategies moving forward.

Intel's foray into the discrete graphics market has been marred by problems, from the unfortunate timing of Arc Alchemist's launch to the various performance and stability issues experienced by early adopters. That said, Team Blue appears committed to the long, arduous process needed to make its products appealing to more gamers.

Tom Petersen, ex-Nvidia veteran turned Intel Fellow, confirmed as much during a recent podcast with PC World's Gordon Ung and Brad Chacos. Petersen candidly agreed with the public sentiment that the first generation of Arc hardware isn't exactly a viable option for people building a new PC or looking for an upgrade over an aging graphics card from Nvidia or AMD, especially if you're looking to play any of the noteworthy VR titles out there.

The Arc Control Center is notorious for being unreliable

One of the biggest reasons for that is Intel's software stack, which lags behind that of the other two companies. However, Petersen pointed to great strides made in the driver department since launch and explained it's only a matter of time before Intel will be able to squeeze all possible performance out of Arc silicon, particularly in DirectX 9, 10, and 11 titles.

Of course, most people may well avoid the first-generation Arc hardware due to the disappointing cost per frame in most regions. Petersen won't say how well Arc Alchemist is selling so far, but he insists that Intel is focused on growing market share and mind share through aggressive pricing at a time when Team Green and Team Red are even reducing supply to protect their profit margins. The end goal isn't to bring back sub-$200 graphics cards, but to offer better value for the majority of PC gamers out there.

With the leaked desktop graphics roadmap from last month, it's no surprise the hosts of the podcast popped some questions about the upcoming Battlemage GPUs. Unfortunately, Intel is staying tight-lipped on this outside of hinting that development is progressing as expected. If the leak is accurate, that means we should see the first products from that family as soon as next year.

Petersen did say that Intel learned a great deal from its architectural mistakes with first-gen Arc silicon, which is informing the design of Battlemage. This could mean a lot of things but, if anything, Intel is pooling most of its resources from the reorganized graphics division into making sure Battlemage doesn't suffer the same launch problems as Alchemist. And while the company did rush the first generation of its discrete GPUs out the door to gather user feedback, this won't be the case with future generations.

One thing is for sure: Intel's efforts in the short term are more focused on chipping away at AMD's market share and building "cool new open technologies" and less on challenging Nvidia at the high end. The company also wants to get better at DirectX 11 and 12 performance scaling as well as ray tracing and resolution upscaling, and expects these to evolve at a "discreet rhythm."

https://www.techspot.com/news/97720-intel-confirms-battlemage-promises-great-value-gpus-better.html