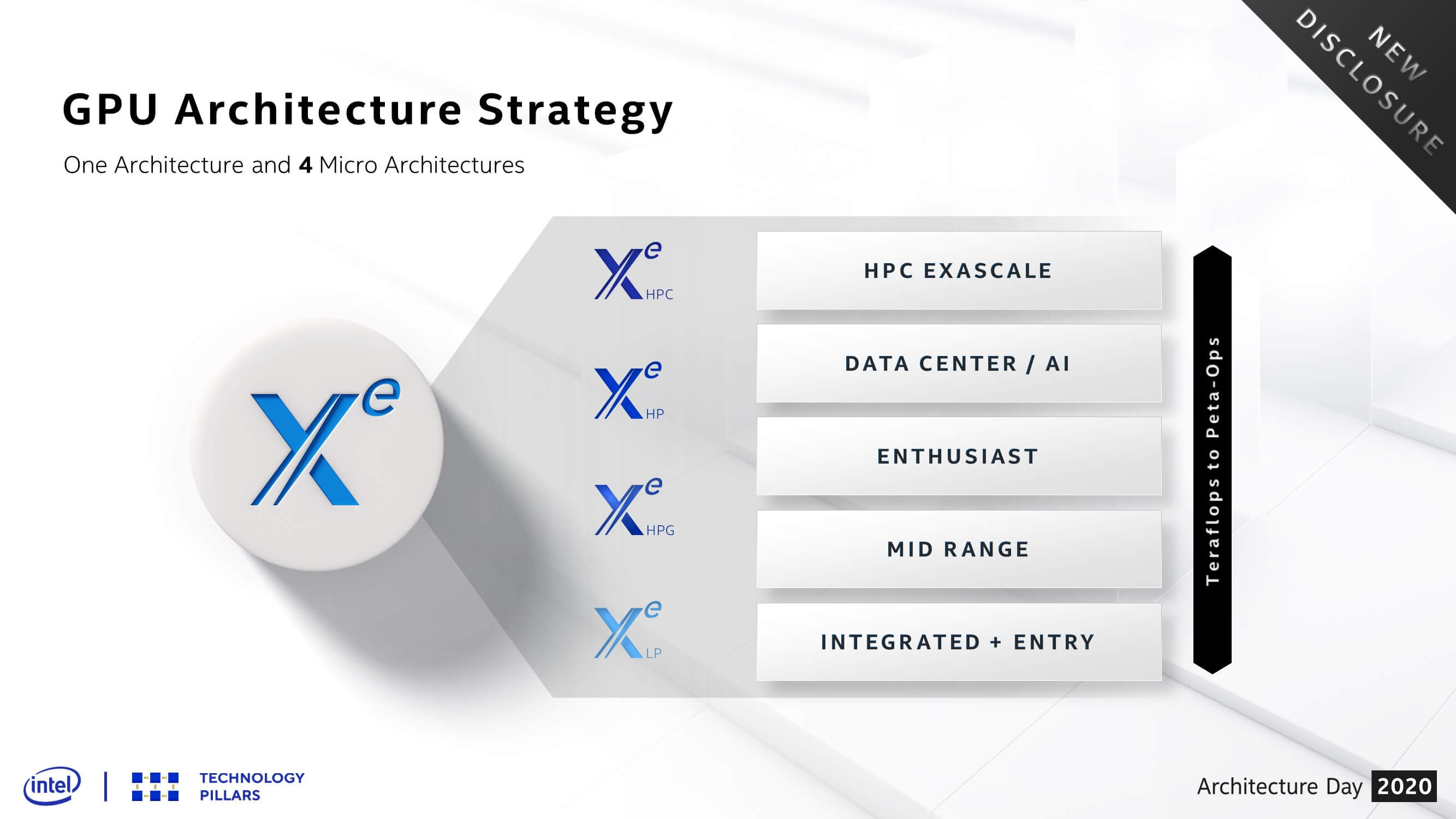

In a nutshell: Intel hasn't forgotten about hardcore gamers with its upcoming Xe graphics architecture. During its Architecture Day presentation on Thursday, the chipmaker revealed a fourth microarchitecture in the Xe graphics family that's purpose-built for serious gamers. Unfortunately, we'll have to wait until next year to see how it stacks up against offerings from competitors AMD and Nvidia.

In development since 2018, the Xe HPG microarchitecture is said to combine the best aspects of the three designs already in progress (the graphics efficiency of Xe LP, the scalability of Xe HP and the compute efficiency of Xe HPC) into a design optimized specifically for enthusiast gamers.

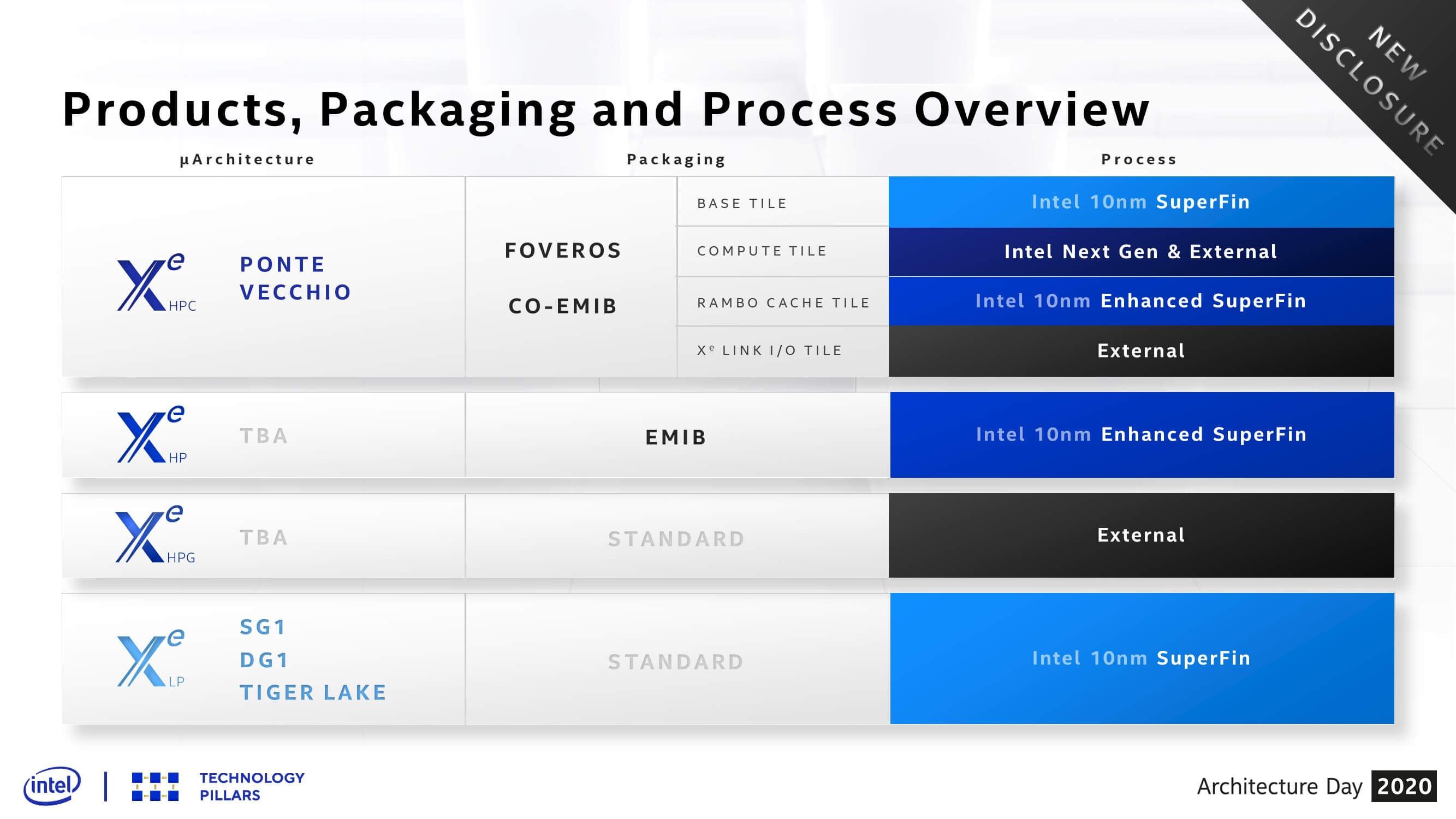

According to Intel, it’ll come with several new graphics features, including ray tracing support. Xe HPG will also use a GDDR6-based memory subsystem to keep overall cost down. By comparison, Xe HP and Xe HPC rely on HBM, which is more expensive.

Raja Koduri, Intel’s chief GPU architect, joked that he still has the scars on his back from trying to bring expensive memory subsystems like HBM to gaming, a subtle nod to his time at former employer AMD, whose Radeon Fury and Vega cards used HBM.

Tom’s Hardware further notes that Intel is removing FP64 (64-bit floating point) support from Xe HPG, or at the very least scaling it back significantly. Tensor cores could also get the boot, which would give the chipmaker more die space for gaming-specific features.

Koduri said the key to developing Xe HPG in parallel with Intel's other projects was leveraging IP from the external foundry ecosystem. This means Xe HPG won’t slow down other projects that rely on Intel's limited 10nm SuperFin process capacity.

Early Xe HPG silicon is already being tested in Intel’s labs, we’re told, with plans to ship to gamers sometime in 2021.

https://www.techspot.com/news/86369-intel-introduces-gamer-focused-xe-hpg-microarchitecture.html