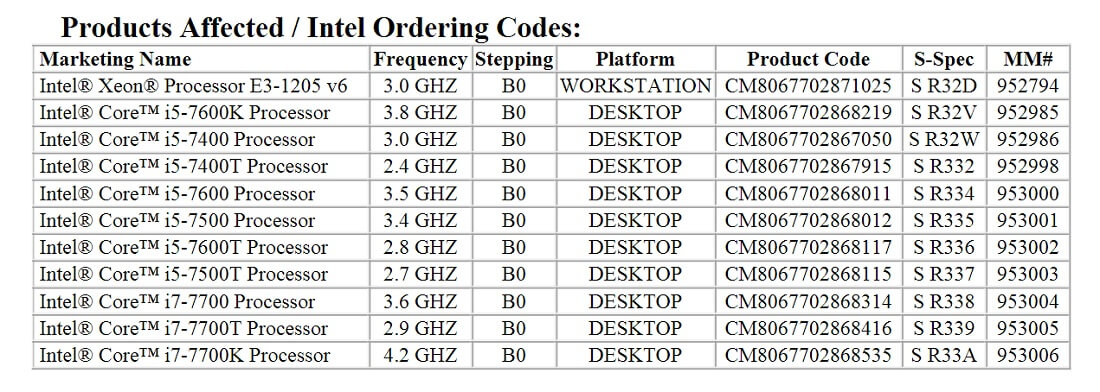

Intel said at IDF several months ago that it wouldn't officially reveal its line-up of Kaby Lake desktop processors until early next year. However, the company has essentially leaked details of its own unannounced CPUs through a product change notification (PCN) document, which was intended for manufacturing partners but was published publicly.

In the PCN document, Intel lists ten desktop-class Kaby Lake Core processors and one Xeon product, along with their base clock speeds and product codes. As expected, Intel is using a similar naming scheme to Skylake: K-designators for unlocked 95W parts, T-designators for low-power 35W parts, and no suffix for regular 65W parts.

We can also expect to see a similar core configuration here, with Core i7 products receiving four cores and eight threads, while Core i5 CPUs get four cores and four threads. Cheaper Core i3 parts will be dual-core with four threads.
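As a rough illustration of the naming scheme and core configurations described above, here is a minimal Python sketch that maps a model string to its expected power class and core count. The wattages and core/thread counts are taken from the text of this article only. The `describe` helper, the `PartInfo` type, and the `1234` model numbers are hypothetical placeholders, not names from Intel's PCN document.

```python
import re
from dataclasses import dataclass

# Suffix -> (segment, TDP in watts), as stated in the article text.
SUFFIX_CLASSES = {
    "K": ("unlocked", 95),
    "T": ("low-power", 35),
    "": ("regular", 65),
}

# Family -> (cores, threads), as stated in the article text.
CORE_CONFIG = {
    "i7": (4, 8),
    "i5": (4, 4),
    "i3": (2, 4),
}

# Assumed model format: family, four-digit number, optional K/T suffix.
MODEL_RE = re.compile(r"^(i[357])-(\d{4})([KT]?)$")


@dataclass(frozen=True)
class PartInfo:
    family: str
    number: str
    segment: str
    tdp_watts: int
    cores: int
    threads: int


def describe(model: str) -> PartInfo:
    """Split a model string and look up its expected class (hypothetical helper)."""
    match = MODEL_RE.match(model.strip())
    if match is None:
        raise ValueError(f"unrecognised model string: {model!r}")
    family, number, suffix = match.groups()
    segment, tdp = SUFFIX_CLASSES[suffix]
    cores, threads = CORE_CONFIG[family]
    return PartInfo(family, number, segment, tdp, cores, threads)


if __name__ == "__main__":
    # "1234" is a made-up model number used purely for illustration.
    for name in ("i7-1234K", "i5-1234T", "i3-1234"):
        print(describe(name))
```

The lookup tables keep the article's claims in one place, so they are easy to adjust if the final specifications differ from the leak.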

The main change moving from Skylake to Kaby Lake appears to be a slight increase in base clock speeds, somewhere in the 100 to 300 MHz range depending on the model. Intel's refined 14nm+ manufacturing process allows these clock speed gains at no cost to power consumption, and users can also look forward to minor Speed Shift improvements.

A separate document has revealed Intel's upcoming chipset names, and again they don't come as a massive surprise.

At the top end we're looking at the Z270 chipset, while we can also expect H270, B250, Q270 and Q250. Additionally, Intel revealed the C422 and X299 chipsets, which could be next-generation products for server and enthusiast processors respectively.

https://www.techspot.com/news/66873-intel-leaks-desktop-kaby-lake-processor-chipset-details.html