What just happened? Intel says its more powerful Arc Alchemist A700 cards are arriving very soon, though we still don't know precisely when. With the launch seemingly just around the corner, Chipzilla has now published specifications for the entire A-series lineup.

We already know about Intel's entry-level A380 (see Steve Walton's review of that underwhelming desktop graphics card), so most of the interest will be focused on the higher-end A770 and A750.

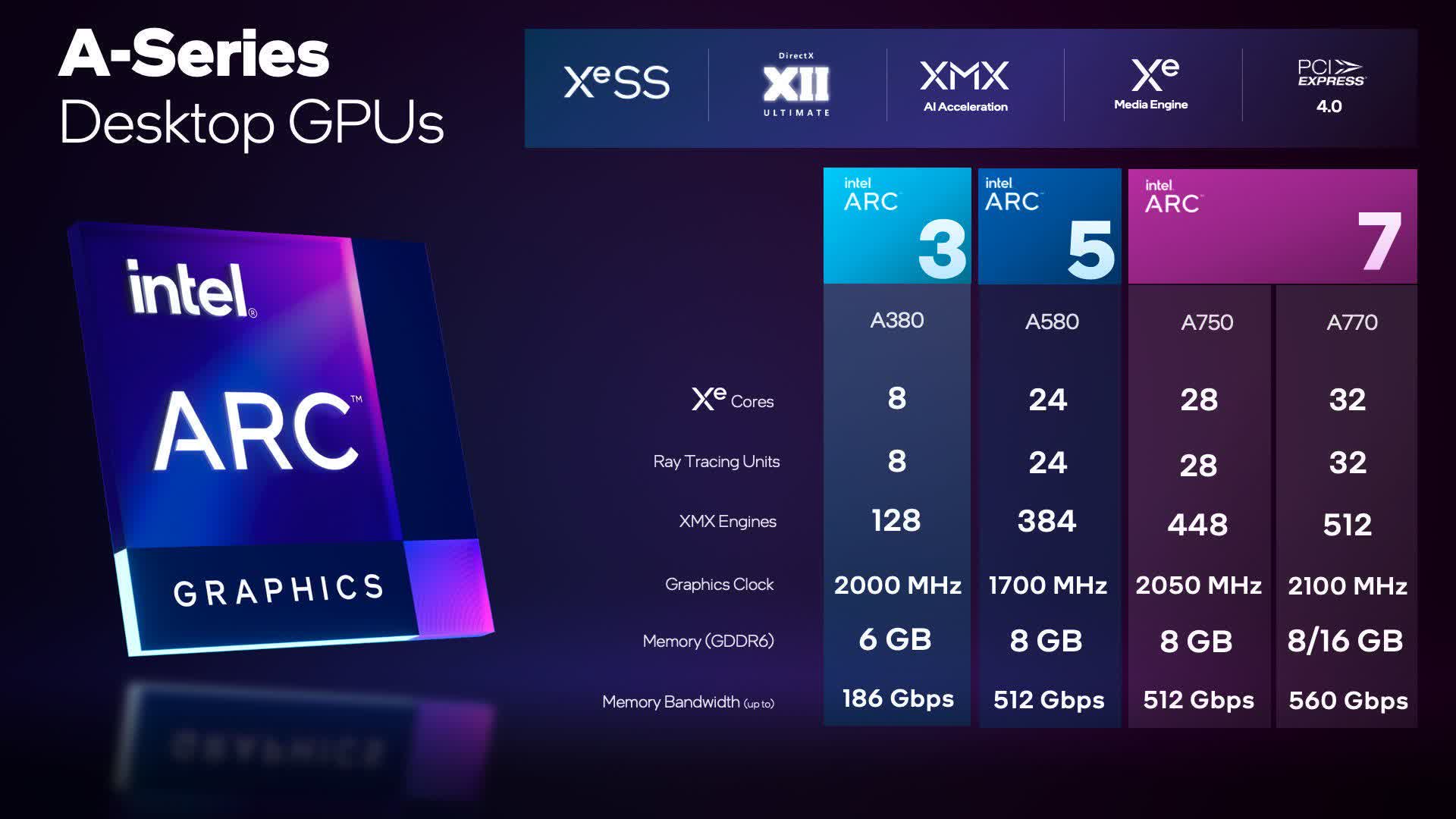

Intel has confirmed that the A770 flagship will use the full-fat ACM-G10 GPU with 32 Xe cores and a 2,100 MHz clock speed. It has a total board power (TBP) of 225W, comes with either 8GB or 16GB of GDDR6, and offers up to 560 GB/s of memory bandwidth. Intel recently said that AIB partner cards would offer both memory sizes, while its Limited Edition (reference design) will only be available as a 16GB model.

Next in the A-series hierarchy is the A750. The card uses a cut-down version of the ACM-G10 GPU featuring 28 Xe cores, a 2,050 MHz clock speed, 8GB of GDDR6, and 512 GB/s of total bandwidth. As with the A770, the TBP is rated at 225W.

Intel has been positioning the Arc 7 A770 as a card that can outperform the RTX 3060 in 1080p ray tracing benchmarks. That suggests its performance will be closer to the RTX 3060 Ti, leaving the A750 as more of a rival to the non-Ti RTX 3060.

Next up is the A580, which has 24 Xe cores, a 1,700 MHz clock speed, 8GB of GDDR6 memory, and up to 512 GB/s of bandwidth. This presumably cheaper option will sit between the A380 and the A750.

Intel said it would launch its own branded cards on "day 1" in key countries, but it still hasn't revealed the most important detail: how much they will cost. You can currently find an Arc A380 for about $140. For comparison with the higher-end models, the cheapest RTX 3060 on Newegg is $349, while the lowest-priced RTX 3060 Ti is $449, so Intel could be looking to price the A750 and A770 below their Nvidia competitors. We should find out for sure any day now.

https://www.techspot.com/news/95920-intel-reveals-full-spec-list-four-arc-alchemist.html