In context: As robotics and artificial intelligence advance, certain jobs become displaced. It's just the natural progression of things. One only has to look at the history of the auto industry to see automation in play. For certain tasks, machines are simply more efficient than humans.

Most of the jobs displaced by computers or robots involve menial labor that requires little or no education. However, now that machine learning algorithms are becoming more sophisticated, even positions that demand extensive education could be automated.

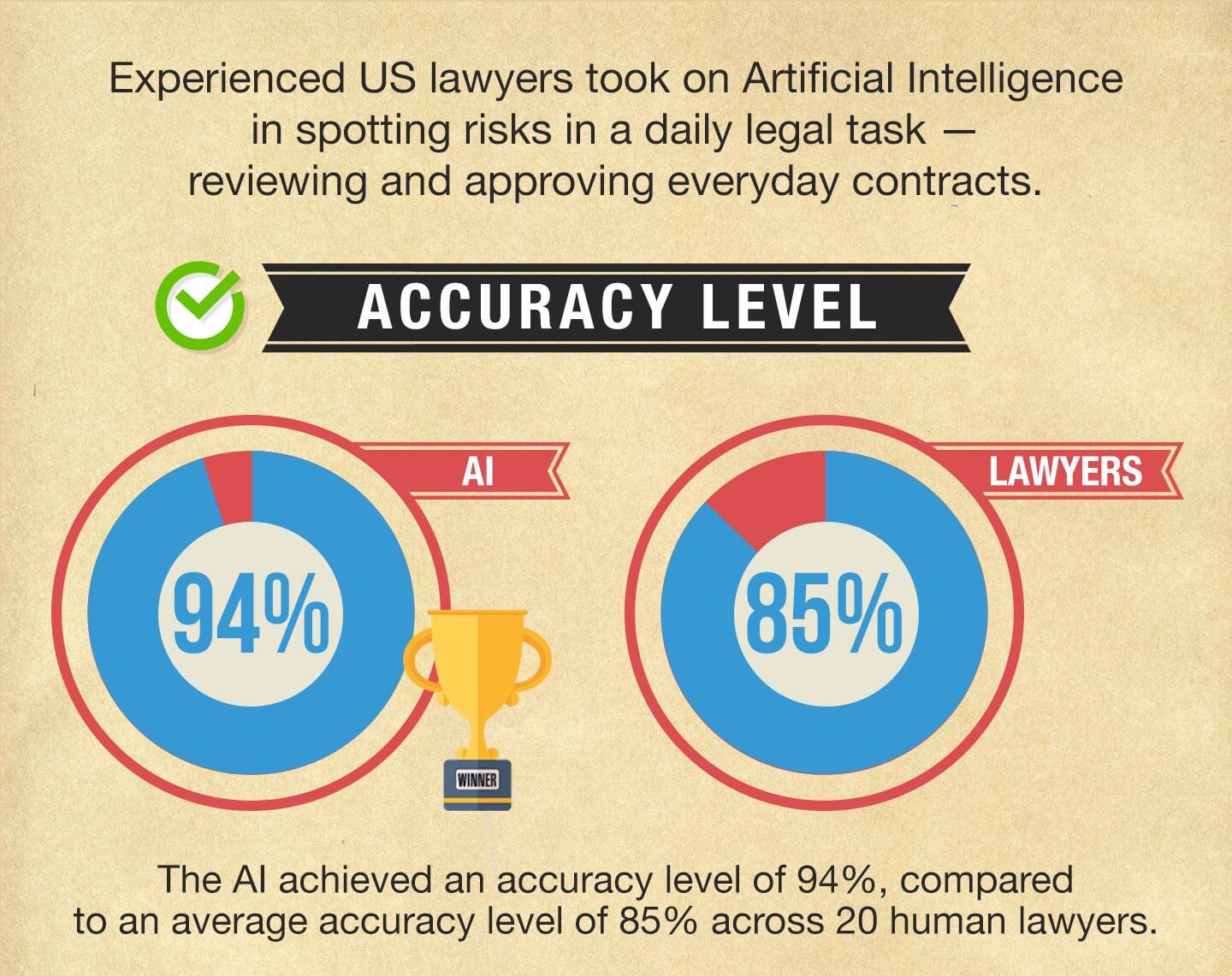

A recent study by LawGeex pitted its machine-learning AI against 20 human lawyers to see how it would fare at reviewing contracts. Each lawyer and the LawGeex AI were given five nondisclosure agreements to review for risks. The humans were given up to four hours to study the contracts. The results were pretty remarkable.

The lawyers took an average of 92 minutes to complete the task and achieved a mean accuracy of 85 percent. LawGeex took only 26 seconds to review all five contracts and was 94 percent accurate. In terms of accuracy, the AI tied with the highest-scoring lawyer in the group.

To be clear, these were not first-year grad students or freshly minted lawyers with no experience. The group consisted of law firm associates, sole practitioners, in-house lawyers, and general counsel. Some of them worked at organizations such as Goldman Sachs, Cisco, Alston & Bird, and K&L Gates. All of them had extensive experience reviewing contracts.

An independent panel of law professors from Stanford, Duke, and USC judged the accuracy of the test. The study notes, "There is less than a 0.7-percent chance that the results were a product of random chance."

So what does this mean? Are lawyers at risk of being replaced? Probably not, at least not for tasks such as arguing cases. However, some lawyers and paralegals study contracts all day, every day. Algorithms like the LawGeex AI could replace these positions. On the bright side, displaced legal workers likely have skills that can be put to use in more challenging areas.

It could also mean lower-cost legal services for consumers down the road. Having a machine do the drudge work means lawyers can work more efficiently, and the time savings could be passed on to the client. Oh, but who are we kidding? We're talking about lawyers passing on savings. I'm not betting on that horse.

Images via LawGeex

https://www.techspot.com/news/77189-machine-learning-algorithm-beats-20-lawyers-nda-legal.html