In context: Nvidia is expected to unveil the next-generation RTX 5000 graphics cards next month, but leaks have uncovered most of the new lineup's technical details. The latest reports offer a close-up of the flagship GPU and answer lingering questions regarding the mid-range options.

Leakers on the Chiphell forums recently provided two close-up images of the upcoming GeForce RTX 5090. Meanwhile, established tipster @kopite7kimi has provided near-complete specs for the 5070 Ti and 5070.

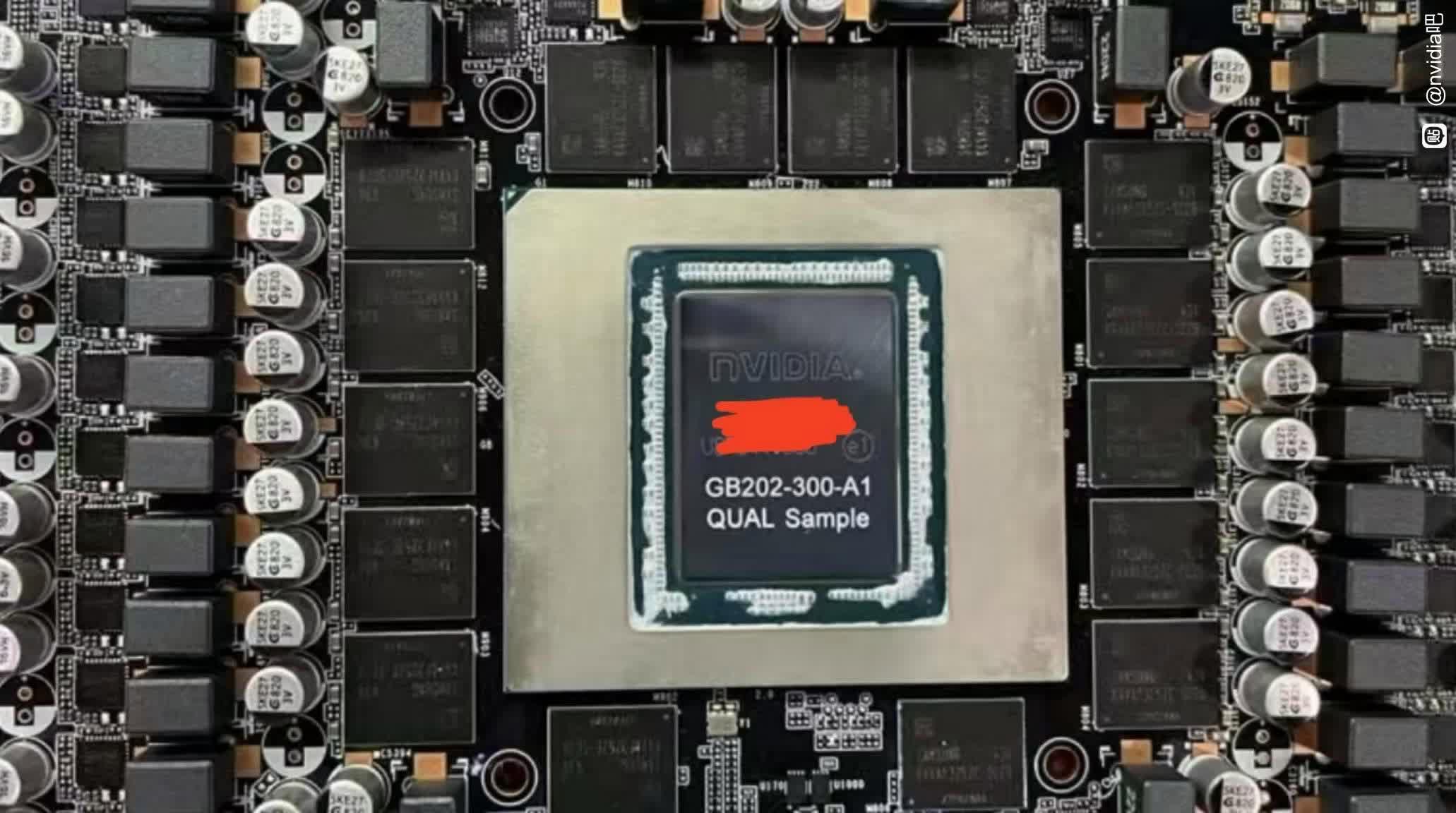

The first RTX 5090 photo, which appears to show the bare PCB of a custom PNY variant, confirms prior rumors that the card will be big. A PCIe 5.0 interface, a single 12V-2x6 power connector, 16 memory modules, and over 40 capacitors are visible. A second image shows the populated board, including the GB202-300-A1 GPU. At 24 mm x 31 mm, it is said to be Nvidia's largest consumer GPU die in six years.

Based on prior reports, the RTX 5090 should substantially outperform the 4090, with 21,760 CUDA cores and 32 GB of GDDR7 VRAM running at 28 Gbps on a 512-bit bus. Its TDP is rumored to be 600W, though the final product's actual power draw might be considerably lower.

Meanwhile, two recent Twitter posts from Kopite corroborate prior leaks regarding the RTX 5070 Ti and 5070. Notably, the 5070's count of 6,144 CUDA cores confirms that its GPU is slightly cut down from the GB205's maximum of 6,400. The card features 12 GB of VRAM on a 192-bit bus and draws 250W, a 50W increase over the 4070's 200W.

Customers who are disappointed with the RTX 5070's modest VRAM pool but unwilling to pay the 5080's likely four-figure price tag should consider the 5070 Ti. This card boosts memory to 16 GB and packs 8,960 CUDA cores while drawing 300W. All RTX 5000 graphics cards reportedly use GDDR7 VRAM.

Clock speeds and pricing are the only critical details that remain unknown. Pricing probably won't leak before Nvidia's expected unveiling at its January 6 CES keynote, but the new images of the RTX 5090's monstrous PCB will likely stoke fears that the card will break the $2,000 mark.

The upcoming Radeon RX 9000 series from AMD is also expected to debut at the trade show. Together with Intel's recently launched Arc Battlemage lineup, it could pose a serious challenge to the RTX 5060, which Nvidia might introduce later in the first quarter of 2025.

New leaks reveal Nvidia RTX 5090's massive PCB and full specs for 5070 Ti, 5070