The big picture: The two GPU rivals may argue over who has the more powerful AI accelerator, but there's no doubt about whose hardware is more expensive. Nvidia has been selling its high-powered AI chips at ridiculous margins for months, and new estimates put AMD's prices at a fraction of Nvidia's.

Citigroup estimates that AMD sells its MI300 AI accelerator GPUs for $10,000 to $15,000 apiece, depending on the customer. Neither AMD nor Nvidia publicly discloses this pricing, so estimates are hard to pin down, but the available evidence suggests Nvidia's H100 cards might cost three or four times as much as AMD's.

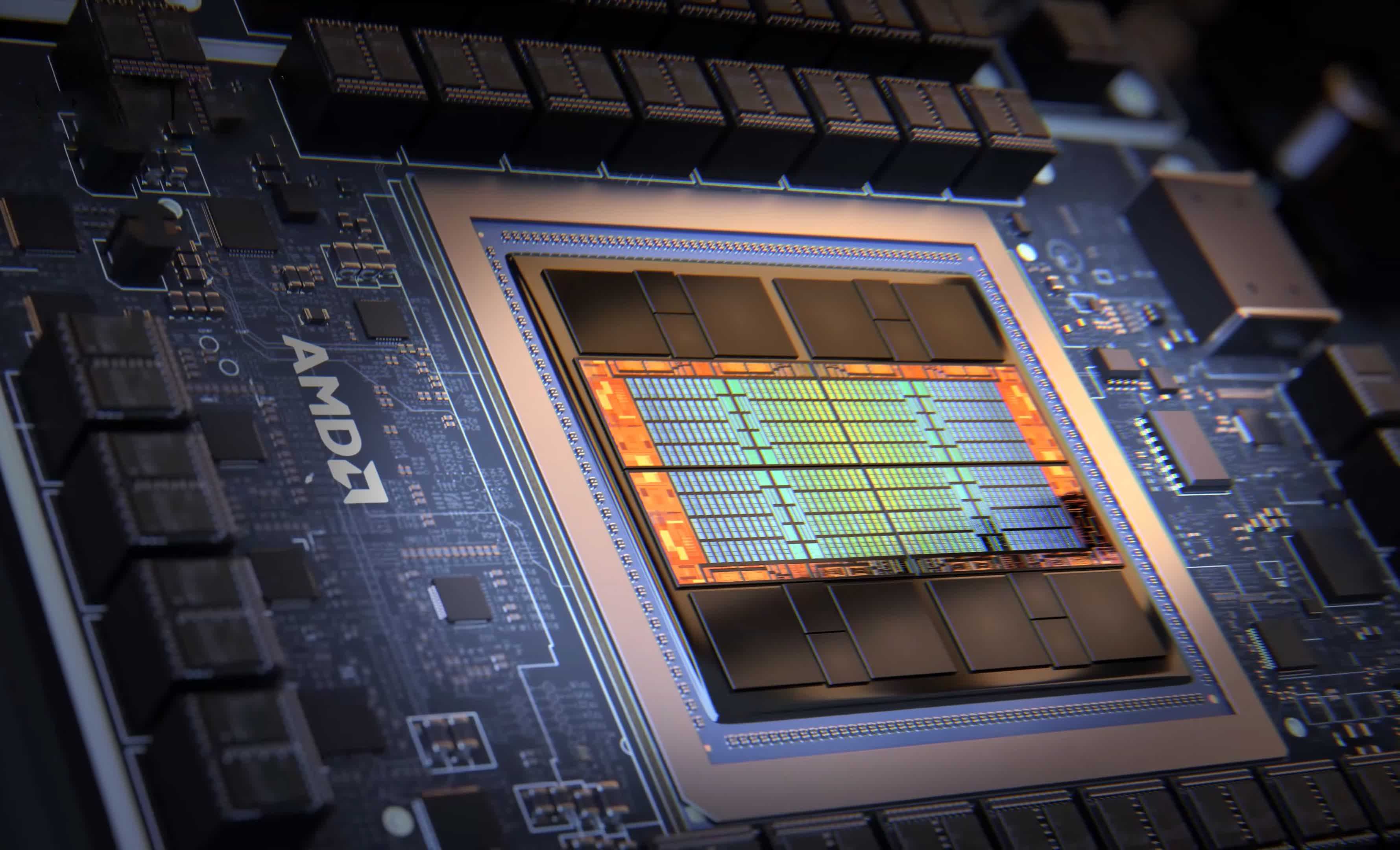

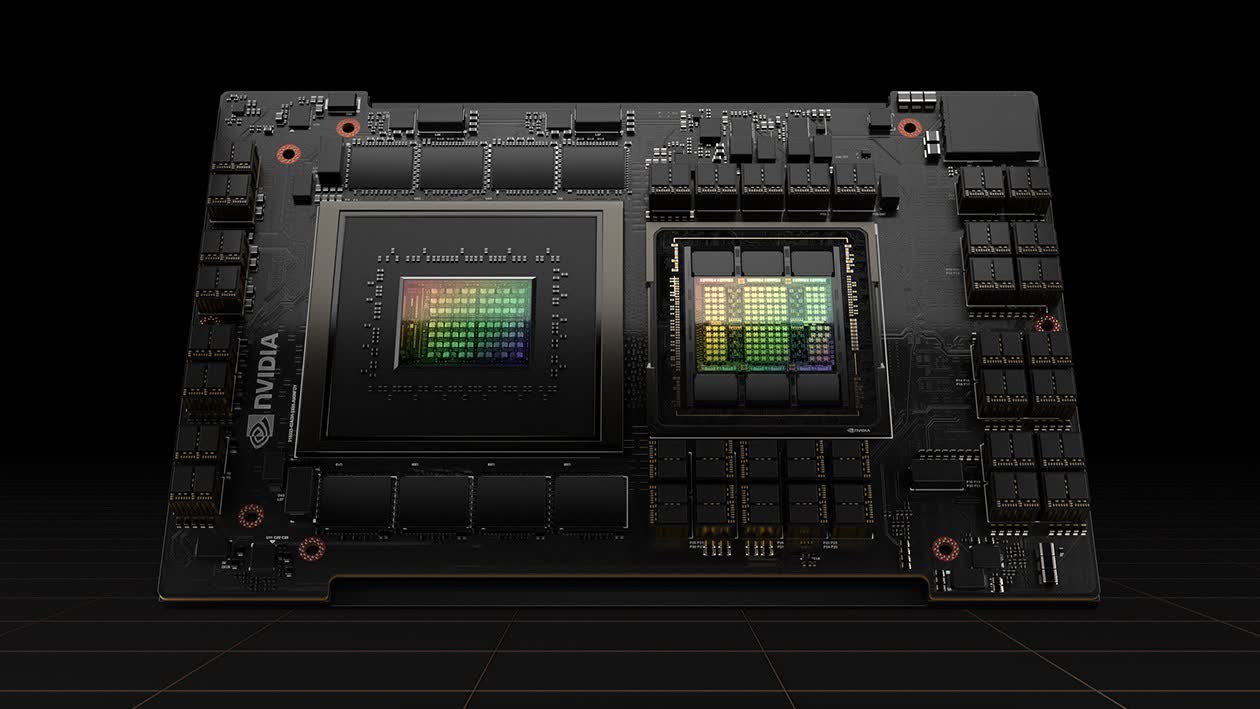

After Team Red introduced the MI300 series last year, it clashed with Nvidia over whether the MI300X or the H100 was faster, with each company measuring performance using different software and metrics. Even if the H100 is superior, its performance advantage alone likely doesn't explain its estimated price of $30,000 per unit. eBay listings and investor comments put the H100 closer to $60,000, possibly five times what AMD charges its biggest clients, Microsoft and Meta.
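Because every figure here is an estimate, the price gap is better read as a range than a single number. The short sketch below divides each quoted H100 price by both ends of Citigroup's MI300 range. The dictionary labels and the helper name `price_multiple_range` are illustrative only, and the inputs are the article's estimates, not official list prices.

```python
# Illustrative sketch: compare the article's estimated H100 prices with
# Citigroup's estimated MI300 price range. All figures are estimates quoted
# in the article, not official list prices.

MI300_PRICE_RANGE = (10_000, 15_000)  # Citigroup estimate, USD per unit
H100_PRICE_ESTIMATES = {
    "estimated unit price": 30_000,
    "eBay / investor reports": 60_000,
}


def price_multiple_range(h100_price, mi300_range):
    """Return (low, high) multiples of an H100 price over the MI300 range."""
    low_mi300, high_mi300 = mi300_range
    # Dividing by the higher MI300 price gives the smaller multiple.
    return h100_price / high_mi300, h100_price / low_mi300


for label, price in H100_PRICE_ESTIMATES.items():
    low, high = price_multiple_range(price, MI300_PRICE_RANGE)
    print(f"{label}: ${price:,} is {low:.1f}x to {high:.1f}x an MI300")
```

On these inputs, the $30,000 estimate works out to roughly two to three times an MI300, while the $60,000 figure lands at four to six times, which brackets the "five times" comparison.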

Previous estimates suggested Nvidia makes a 1,000% profit on each H100, but the company's actual costs remain unclear. Last year, Barron's reported that manufacturing might account for only one-tenth of the data center GPU's price, but other factors to consider include memory, labor costs, bulk versus individual purchases, arrangements with specific buyers, and IP royalty fees for CUDA. Nevertheless, Nvidia is undoubtedly leveraging its dominant market position and the extremely tight supply of its AI chips.
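The "1,000% profit" and "one-tenth of the price" figures describe roughly the same situation. The minimal sketch below shows this, assuming "profit" means markup over cost (the article doesn't define it) and treating the one-tenth manufacturing share as the entire cost, which ignores the other factors listed above. The $30,000 price and both helper names are illustrative assumptions.

```python
# Illustrative sketch, assuming "profit %" means markup over cost and that
# manufacturing (one-tenth of price) is the only cost, which it is not.


def markup_percent(price, cost):
    """Profit as a percentage of cost: (price - cost) / cost * 100."""
    return (price - cost) / cost * 100


def cost_share_for_markup(markup_pct):
    """Fraction of the selling price that cost represents at a given markup."""
    # price = cost * (1 + markup), so cost / price = 1 / (1 + markup).
    return 1 / (1 + markup_pct / 100)


price = 30_000          # hypothetical H100 price from the estimate above
cost = price / 10       # Barron's: manufacturing ~ one-tenth of the price
print(f"Markup at a one-tenth cost share: {markup_percent(price, cost):.0f}%")
print(f"Cost share implied by a 1,000% markup: {cost_share_for_markup(1000):.1%}")
```

A one-tenth cost share implies about a 900% markup, and a 1,000% markup implies cost is about 9.1% of the price. Under this simplified reading, the two estimates are close.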


Meanwhile, Nvidia's dominance gives AMD an incentive to price MI300 cards lower and accept a smaller margin. The strategy certainly hasn't hurt AMD's stock price, which the AI boom has driven to an all-time high in recent weeks.

In AMD's Q4 2023 earnings call, CEO Lisa Su raised this year's AI-related revenue target from $2 billion to $3.5 billion. However, Citi believes AMD is deliberately lowballing the figure, projecting that MI300 sales will come closer to $5 billion in 2024 and $8 billion in 2025.

Team Green hasn't released its fiscal 2024 results yet, but previous quarters show its revenue skyrocketing on demand for its AI chips. Nvidia's data center revenue, which includes its AI business, reached $14.51 billion in the third quarter of its 2024 fiscal year, which ended in October 2023. Furthermore, Gartner analysts estimate that the company's 2023 semiconductor revenue was $23.98 billion.

https://www.techspot.com/news/101748-enormous-price-difference-develops-between-amd-nvidia-hpc.html