Rumor mill: Following the disappointing launches of Nvidia's RTX 5090, 5080, 5070 Ti, and 5070 graphics cards, the mainstream and entry-level members of the Blackwell lineup are expected to arrive soon. Unsurprisingly, they feature slightly more CUDA cores and higher power draw, and they move to GDDR7 VRAM without increasing capacity. The exception is the RTX 5050, which closely resembles the RTX 3050.

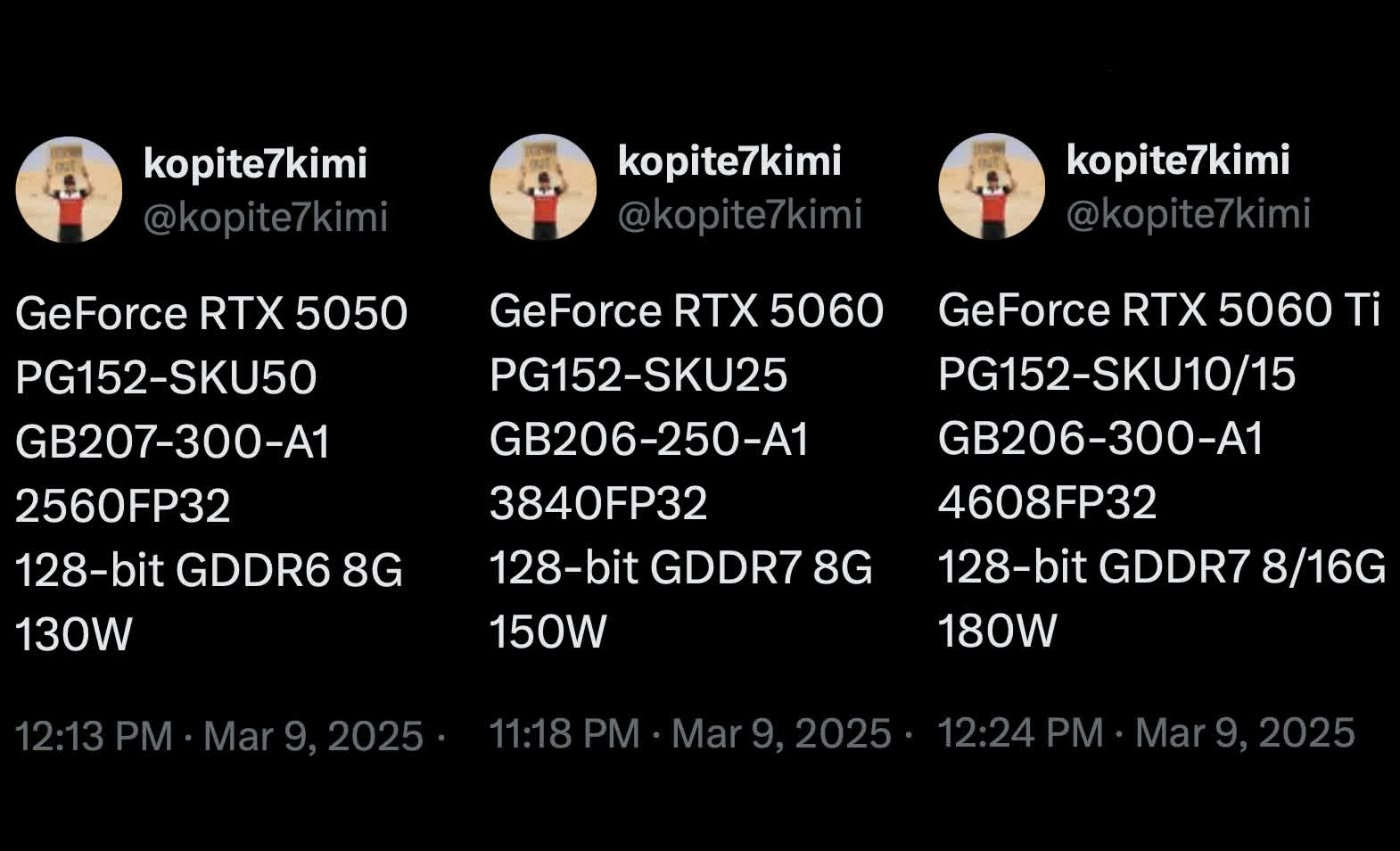

Prominent leaker "Kopite7kimi" recently shared specifications for Nvidia's upcoming RTX 5050, 5060, and 5060 Ti graphics cards, which the company may announce this week. Like the other Blackwell cards, the three lower-end GPUs appear to be, at best, minor upgrades over their predecessors.

The most glaring sign is that the RTX 5060 and 5050 will still have only 8GB of VRAM. Come on, Nvidia. It's 2025. The 5060 Ti will again offer a 16GB option, which would leave the flagship 5090 as the only Blackwell GPU with more VRAM than its predecessor.

Nvidia may promote its new mainstream products as budget-oriented options for multi-frame generation on 460Hz and 500Hz 1080p monitors. However, even at mainstream price levels, some users might find three consecutive generations of 8GB graphics cards hard to swallow, especially considering that competing options, like Intel's Arc B580, include 12GB.

The RTX 5060 and 5060 Ti each feature a few hundred more CUDA cores than their RTX 40 series counterparts: the 5060 rises from 3072 to 3840 cores, while the 5060 Ti goes from 4352 to 4608. They are also more power-hungry, with TDPs climbing from 115W and 165W to 150W and 180W, respectively.

Meanwhile, the RTX 5050 looks nearly identical on paper to the RTX 3050, which launched in 2022. It reportedly keeps the same 2560 CUDA cores, 130W power draw, and 8GB of GDDR6 VRAM, whereas every other Blackwell GPU moves to GDDR7. Clock speeds for all three upcoming cards remain unknown, however.

Each Blackwell graphics card released so far has delivered smaller generational gains than the model ranked above it. The RTX 5070 performs almost identically to, and sometimes worse than, the RTX 4070 Super. If the trend holds, the mainstream and entry-level variants could show even smaller improvements, or none at all.

Their unimpressive specs aside, history suggests that the RTX 5060 and 5060 Ti could become the most popular Blackwell products, as 60-class GPUs usually dominate the Steam Hardware Survey. Still, the new models may only appeal to buyers upgrading from cards several generations old, and only if AMD's upcoming Radeon RX 9060 and 9050 don't offer better value.

According to earlier reports, Nvidia could announce the RTX 5060 and 5060 Ti this Thursday, March 13, though availability isn't expected until next month.

Nvidia RTX 5050, 5060, and 5060 Ti specs leaked, slight core and TDP boosts expected