LemmingOverlrd

Forward-looking: Nvidia CEO Jen-Hsun Huang has demoed Turing, bringing ray-traced graphics processing into real time. Turing is Nvidia's new GPU architecture for AI, Deep Learning and Ray Tracing.

SIGGRAPH has long since shed its exclusively "cinematic arts" roots and evolved into a mix of industry, prosumer and consumer technologies. While last year AMD made all the noise, this year... well, this year the trophy seems to go to Nvidia, if CEO Jen-Hsun Huang is to be believed.

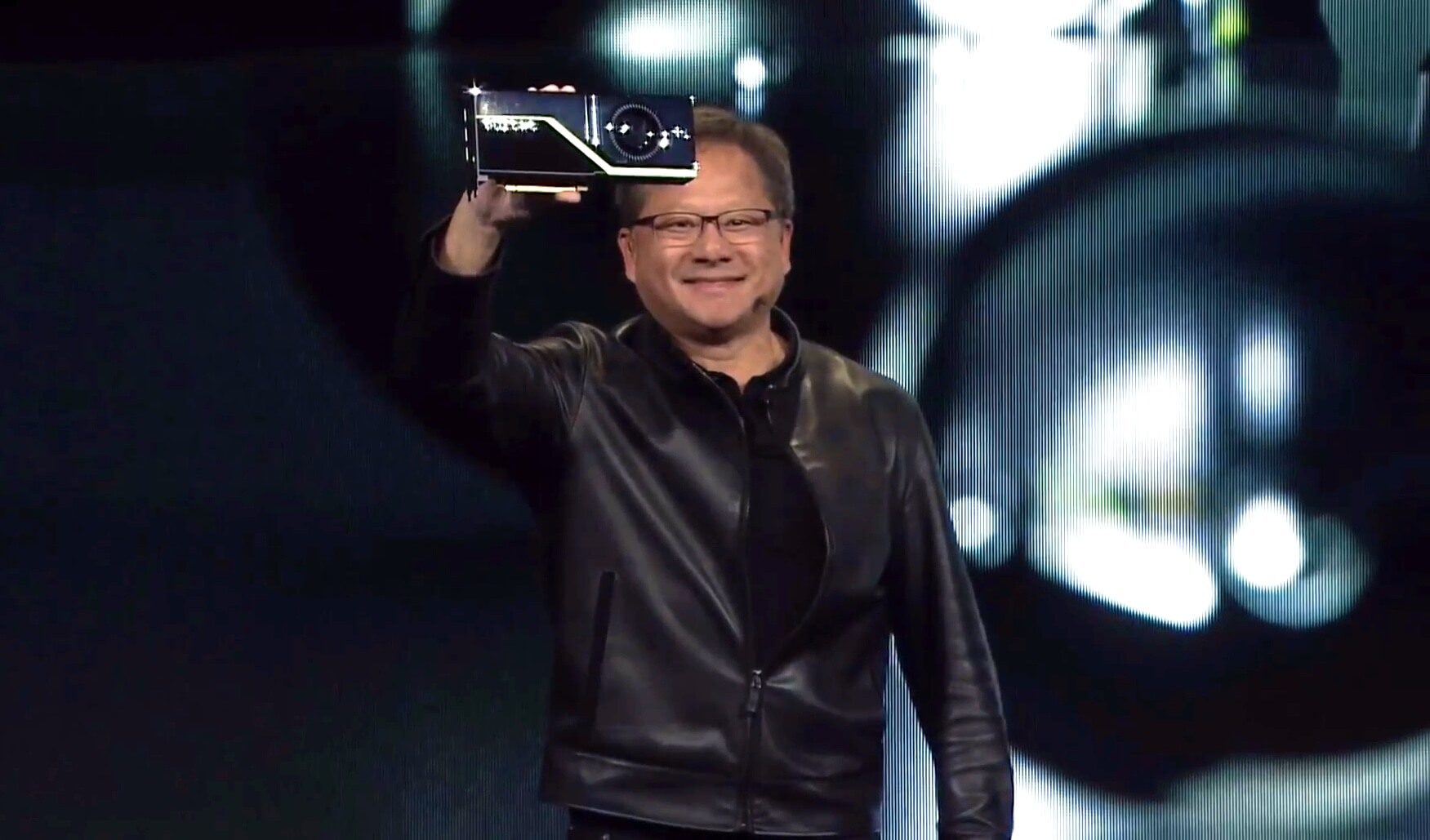

Taking to the stage in his traditional garb (i.e. a leather jacket), Jen-Hsun casually introduced the world to Nvidia's next generation of Quadro graphics cards, the Quadro RTX, powered by the company's next-gen GPU architecture, Turing. It took him just a few minutes to build up the narrative that lighting is key to photorealism, running from J. Turner Whitted's early attempts at ray tracing on a VAX-11/780 to the holy grail, which Nvidia calls real-time Global Illumination: the ability to replicate lighting effects in an environment accurately enough to be photorealistic.

Until today, this was considered server-farm material, to be processed over hours or even days. Then the Nvidia CEO whipped out the goods.

Nvidia formally introduced three new Quadro cards, the Quadro RTX 5000, RTX 6000 and RTX 8000, summarised below:

Jen-Hsun hailed it as the biggest achievement since the introduction of CUDA ten years ago, and possibly with good reason: it is the first commercially available real-time ray tracing graphics processor.

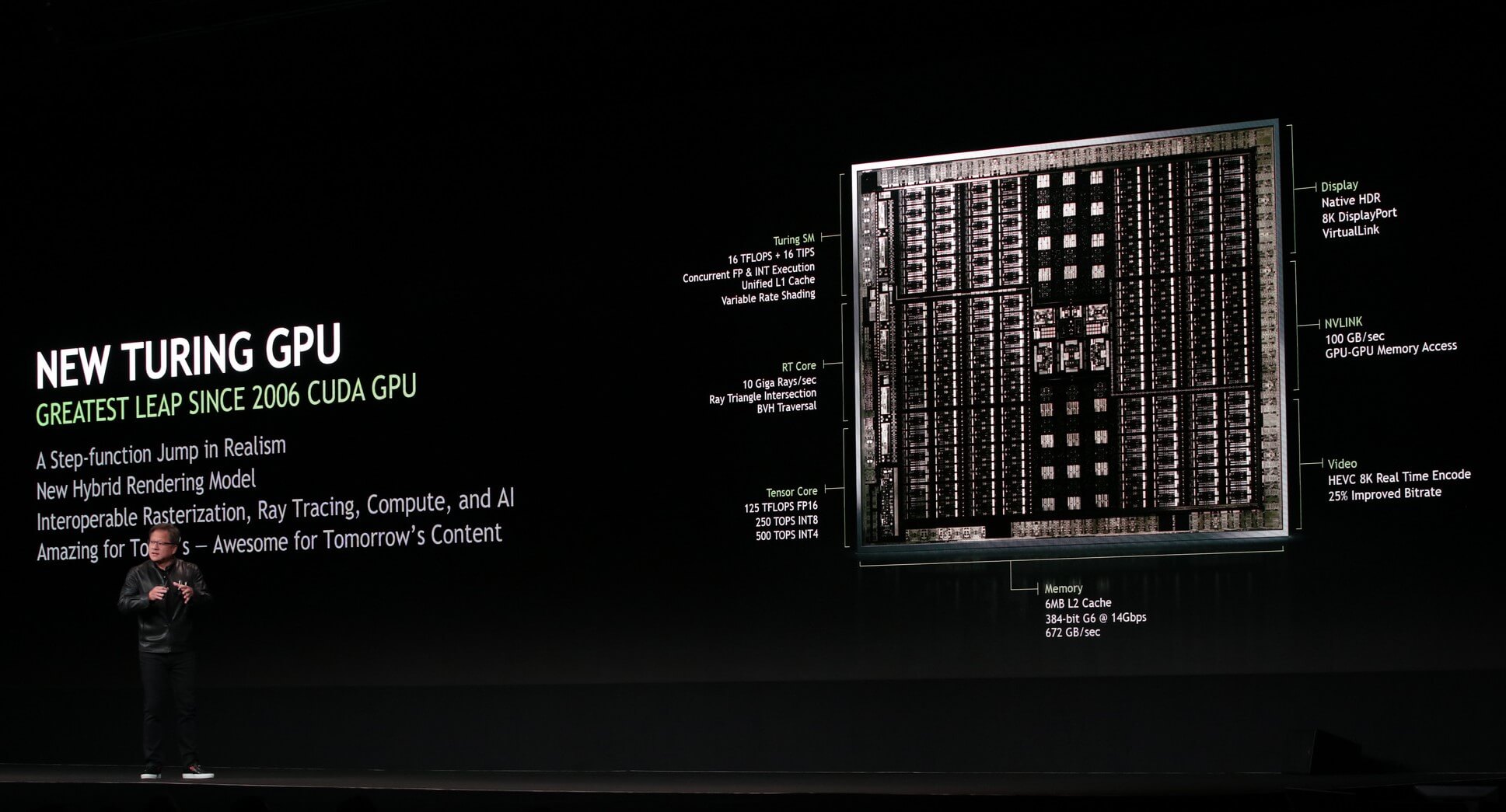

At the core of these cards, Jen-Hsun explained, is the Turing GPU, which consists of:

- The Streaming Multiprocessor (SM) core which provides compute and shading power, all in one;

- The Real-Time Ray Tracing (RTRT) core, which provides, well... real-time ray tracing;

- The Tensor Core for Deep Learning and AI;

- Video subsystem, which provides 8K HEVC encoding;

- Memory subsystem, with a 384-bit bus and GDDR6 running at 14Gb/sec per pin;

- NVLink subsystem, which now shares the framebuffer across linked cards at 100GB/sec;

- Display subsystem, which drives four displays plus VirtualLink.
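The memory figures above translate into a peak bandwidth number with simple arithmetic. The sketch below assumes the 14Gb/sec figure is the per-pin data rate (the usual way GDDR6 speeds are quoted) and ignores real-world overheads; the function name is ours, purely for illustration.

```python
def peak_bandwidth_gb_per_s(bus_width_bits: int, data_rate_gbps_per_pin: float) -> float:
    """Peak theoretical memory bandwidth in GB/s.

    Assumes every data pin on the bus moves `data_rate_gbps_per_pin`
    gigabits per second; dividing by 8 converts gigabits to gigabytes.
    """
    return bus_width_bits * data_rate_gbps_per_pin / 8


# Figures quoted for Turing: 384-bit bus, GDDR6 at 14 Gb/s (assumed per pin).
turing_bw = peak_bandwidth_gb_per_s(384, 14.0)
print(f"Peak bandwidth: {turing_bw:.0f} GB/s")  # Peak bandwidth: 672 GB/s
```

This is a theoretical ceiling; sustained bandwidth in real workloads will be lower.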

So what does Turing bring to the table? Well, if you know Volta, some parts will look familiar, but one major architectural change stands out: what Nvidia calls the Real-Time Ray Tracing (RTRT) core.

Very little is known about it thus far -- except perhaps a metric Nvidia is trying to establish, 'Gigarays' (billions of rays per second) -- but we expect a lot more to emerge over the coming days. Nvidia did discuss some of the ray tracing techniques it uses, and how it is leveraging Deep Learning to teach the GPU to produce better lighting effects. Jen-Hsun claims Turing's real-time ray tracing performance is 6x that of Pascal (although we'd hardly use Pascal for comparison).
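'Gigarays' is a throughput figure: billions of rays cast per second. One rough way to make it tangible is to divide it by the number of pixels a display needs each second. In the sketch below, the 10 Gigarays/s input is a hypothetical value chosen for illustration, not a quoted spec, and real renderers spend rays unevenly across a frame rather than as a flat per-pixel average.

```python
def rays_per_pixel_per_frame(gigarays_per_s: float, width: int, height: int, fps: float) -> float:
    """Average ray budget per pixel per frame for a given ray throughput."""
    rays_per_s = gigarays_per_s * 1e9      # 1 Gigaray = one billion rays
    pixels_per_s = width * height * fps    # pixels to be produced each second
    return rays_per_s / pixels_per_s


# Hypothetical example: a 10 Gigarays/s budget at 4K (3840x2160) and 60 fps.
budget = rays_per_pixel_per_frame(10.0, 3840, 2160, 60)
print(f"{budget:.1f} rays per pixel per frame")  # 20.1 rays per pixel per frame
```

Halving the frame rate or the resolution doubles the per-pixel budget, which is why the same Gigaray figure can look generous or tight depending on the target.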

As for actual CUDA performance, we won't risk estimating much about the Streaming Multiprocessor, as it is a re-engineered pipeline of floating-point (FP) and integer (INT) units. Beyond this, Turing also introduces a new NVLink, which blows away the long-standing limitation of framebuffer sharing.

Yes: with the new NVLink, framebuffers are now cumulative instead of mirrored. This means two RTX 8000 cards (48GB each) effectively share 96GB of GDDR6.
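The difference between mirrored and cumulative framebuffers comes down to whether capacities are duplicated or added. Below is a simplified model, assuming each card's full memory is usable; it ignores how NVLink actually distributes data and the extra latency of reaching another card's memory. The function name is hypothetical.

```python
from typing import Sequence


def effective_framebuffer_gb(card_memory_gb: Sequence[float], pooled: bool) -> float:
    """Usable framebuffer across linked cards (simplified model).

    Mirrored: every card holds a full copy of the data, so usable
    capacity is capped by the smallest card.
    Pooled: cards contribute their memory to one space, so capacities add.
    """
    return sum(card_memory_gb) if pooled else min(card_memory_gb)


# Two RTX 8000 cards at 48GB each.
cards = [48.0, 48.0]
mirrored = effective_framebuffer_gb(cards, pooled=False)
pooled = effective_framebuffer_gb(cards, pooled=True)
print(f"mirrored: {mirrored:.0f}GB, pooled: {pooled:.0f}GB")  # mirrored: 48GB, pooled: 96GB
```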

The Tensor cores are rated at 500 trillion operations per second. However, we've noticed that Nvidia seems to have 'dumbed them down' from Volta: Jen-Hsun made no reference to single-precision (FP32) or double-precision (FP64) operations, focusing only on FP16 (125 TFLOPS), INT8 (250 TOPS) and INT4 (500 TOPS). This may prove controversial, and we're sure Nvidia will take flak for the design.
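The three quoted figures follow a simple pattern: each halving of operand width doubles throughput, which is how the headline 500 trillion operations per second is reached with INT4. The sketch below checks that pattern against the quoted numbers; the inverse-scaling rule is our reading of the figures, not a documented Nvidia formula.

```python
# Tensor core throughput quoted at SIGGRAPH, in trillions of operations per second.
quoted = {"FP16": 125, "INT8": 250, "INT4": 500}
bits = {"FP16": 16, "INT8": 8, "INT4": 4}


def scaled_throughput(base_ops: float, base_bits: int, target_bits: int) -> float:
    """Throughput if it scales inversely with operand width (an assumption)."""
    return base_ops * base_bits / target_bits


for fmt in quoted:
    predicted = scaled_throughput(quoted["FP16"], bits["FP16"], bits[fmt])
    print(f"{fmt}: quoted {quoted[fmt]}, predicted {predicted:.0f}")
```

All three predictions match the quoted values, which suggests the headline number is a precision-scaling result rather than a separate measurement.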

Out of curiosity, and for comparison, Turing has a massive die size of 741mm² (Volta was 815mm²) and packs 18.6 billion transistors under the hood (Volta had 21.1 billion). These remain massive GPUs, and we can't help but wonder what happens to defective dies.
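The die figures above give transistor density directly, and a textbook Poisson yield model (Y = exp(-A × D0)) shows why die size matters for defects. The defect density D0 below is a hypothetical value, not a published TSMC or Nvidia figure, and the model ignores the common practice of selling partially defective dies with some units disabled.

```python
import math


def density_mtr_per_mm2(transistors: float, die_mm2: float) -> float:
    """Transistor density in millions of transistors per mm²."""
    return transistors / die_mm2 / 1e6


def poisson_yield(die_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of defect-free dies under a simple Poisson model: Y = exp(-A * D0)."""
    area_cm2 = die_mm2 / 100.0  # 1 cm² = 100 mm²
    return math.exp(-area_cm2 * defects_per_cm2)


D0 = 0.1  # hypothetical defect density (defects per cm²), for illustration only

for name, transistors, area in [("Turing", 18.6e9, 741), ("Volta", 21.1e9, 815)]:
    print(f"{name}: {density_mtr_per_mm2(transistors, area):.1f} MTr/mm², "
          f"defect-free yield ~{poisson_yield(area, D0):.0%}")
```

Under these assumptions the two chips have similar density (roughly 25 to 26 million transistors per mm²), and the larger die loses a bigger share of candidates to defects.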

Turing will be available in limited quantities during Q4 2018, with general availability expected going into 2019.

https://www.techspot.com/news/75952-nvidia-turing-here-next-gen-architecture-first-real.html