In brief: It looks increasingly likely that Nvidia has been preparing an RTX 3080 Ti with a healthier 20 gigabytes of video memory, but it's not clear when you'll be able to buy one, even if you can afford to pay an inflated price. More worrying is that we won't have to wait for next-gen graphics cards to see single GPUs pushing past 400 watts -- the mid-generation hardware refreshes look hotter and more power-hungry than ever.

When our own Steve Walton tested Nvidia's GeForce RTX 3080 Ti graphics card, he couldn't help but ask himself who the intended audience for this product was. The RTX 3080 Ti has proven to be a powerful member of the Ampere family, but it was also an expensive, poorly timed release with only half as much VRAM as the RTX 3090.

Rumors had been circulating for months about an RTX 3080 refresh with 20 gigabytes of VRAM, but when the RTX 3080 Ti arrived, it left Nvidia fans disappointed with a mere 12 gigabytes, less than any of AMD's high-end Radeon 6000 series cards. Still, both Asus and Gigabyte had slip-ups last year in which they revealed unreleased RTX 3080 Ti SKUs with 20 gigabytes of video memory, so it was only a matter of time before those cards surfaced again.

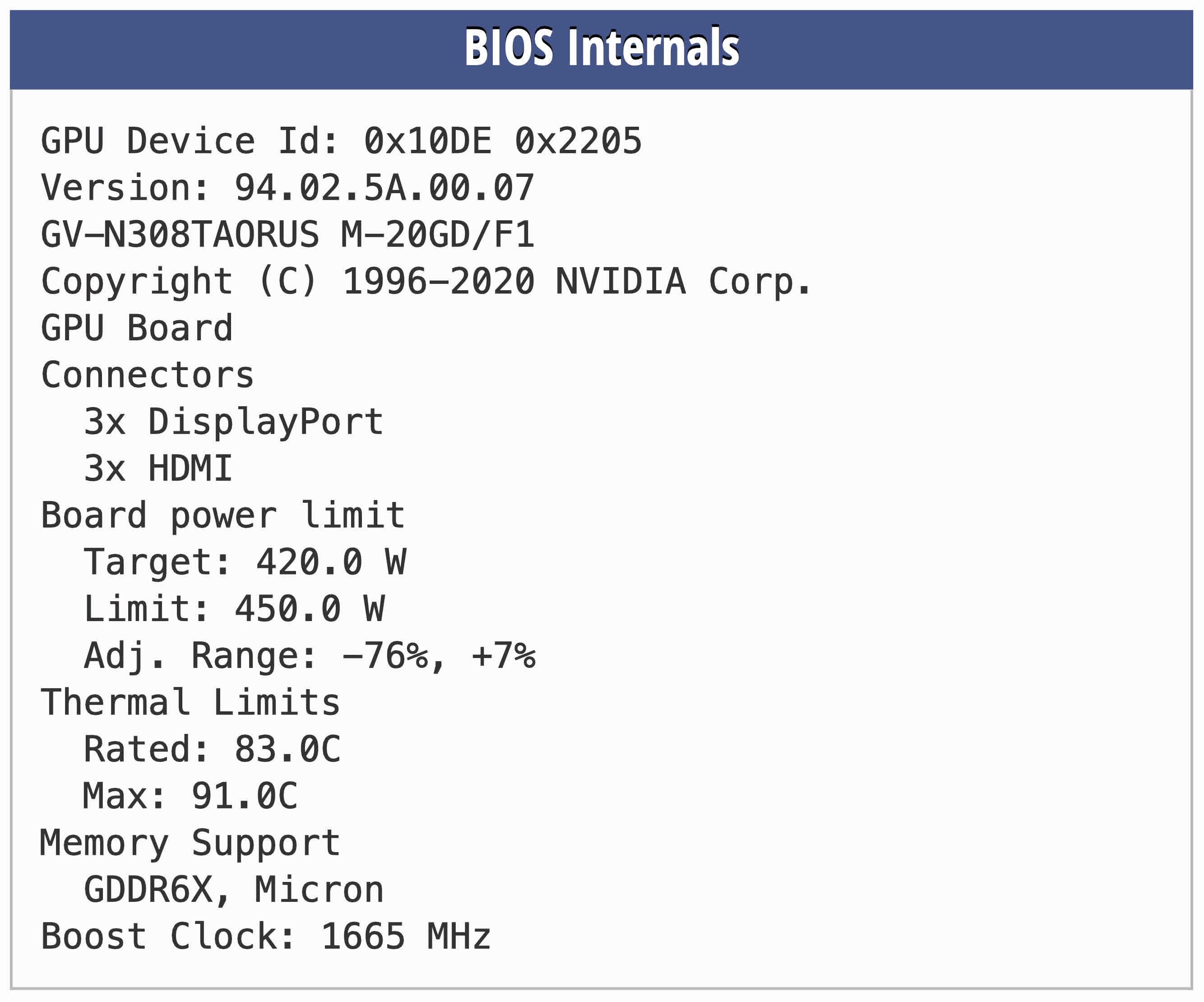

This week, an anonymous user uploaded what appears to be the firmware for a Gigabyte Aorus GeForce RTX 3080 Ti Xtreme (GV-N308TAORUS X-20GD) graphics card, which was spotted by @momomo_us. The device ID also appears to be different from that of the existing 12 gigabyte model released in June, indicating this could indeed turn out to be the long-awaited 20 gigabyte model.

Other than that, the new model looks to be equipped with the same 19 Gbps GDDR6X memory, but on a narrower 320-bit memory bus. If true, this would mean the new RTX 3080 Ti will have roughly 17 percent less memory bandwidth than the current model. Be that as it may, a more worrying aspect is the power target of 420 watts, which could be a troubling sign of things to come.
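For readers who want to check the bandwidth math, here is a quick back-of-the-envelope sketch. It assumes the current 12 gigabyte RTX 3080 Ti uses a 384-bit bus (that figure comes from Nvidia's published specifications, not from the leaked firmware), and the function name is ours, purely for illustration.

```python
def memory_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s.

    Per-pin data rate (Gbps) times bus width (bits), divided by 8 bits per byte.
    """
    return data_rate_gbps * bus_width_bits / 8


# Assumption: the existing 12 GB model uses a 384-bit bus (Nvidia's public spec).
current = memory_bandwidth_gbs(19, 384)

# Rumored 20 GB model: same 19 Gbps GDDR6X on a 320-bit bus, per the leak.
rumored = memory_bandwidth_gbs(19, 320)

# Relative shortfall of the rumored card versus the current one, in percent.
deficit_pct = (1 - rumored / current) * 100

print(f"Current 12GB model: {current:.0f} GB/s")
print(f"Rumored 20GB model: {rumored:.0f} GB/s")
print(f"Bandwidth deficit:  {deficit_pct:.1f}%")
```

Under those assumptions the rumored card lands at 760 GB/s against 912 GB/s for the current model, a gap of about 16.7 percent, which rounds to the 17 percent cited above.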

(A Russian YouTuber is already exploring the card's crypto mining capabilities.)

If this doesn't convince you, a Russian retailer called HARDVAR is apparently already selling this new graphics card in Saint Petersburg for 225,000 rubles -- the equivalent of $3,071. Another retailer, ZSCOM, is also getting in on the action. As usual, cryptocurrency miners have already gotten their hands on the card to test its ability to turn watts into millions of hashes that certify transactions on the Ethereum network.

One such miner shared his results on YouTube, and by the looks of it, the card doesn't appear to have a hash rate limiter. After some tweaking, he was able to get 94 MH per second, which is lower than an RTX 3090 but significantly better than the existing RTX 3080 Ti, which is only able to reach around 65-66 MH per second. The YouTuber also explained that he was able to get close to 98 MH per second, but the card was less stable at that speed.

Given that one Russian retailer is already selling the RTX 3080 Ti 20GB and another is listing the new model on its website, we likely won't have to wait much longer before it pops up in more places. As for the price, it's possible the MSRP will land somewhere between those of the RTX 3080 Ti 12GB and the RTX 3090, which carry MSRPs of $1,200 and $1,500, respectively -- not that you can expect to find this new RTX 3080 Ti model at anywhere near its recommended price.

Rumors are also picking up about a monstrous RTX 3090 Super being in the works, and that too is said to have an inflated TGP of over 400 watts. If anything, it looks like the era of single GPUs demanding in excess of 400 watts is already here, despite earlier indications that it would be ushered in by next-generation models from Nvidia and AMD.

In the meantime, Intel is working hard on its Alchemist GPUs, but they're far from ready to battle the beasts coming out of the dungeons of the other two manufacturers.

https://www.techspot.com/news/91151-nvidia-geforce-rtx-3080-ti-20gb-graphics-card.html