Periscope, the Twitter-owned livestreaming app, is taking a crowdsourced approach to moderating potentially abusive comments.

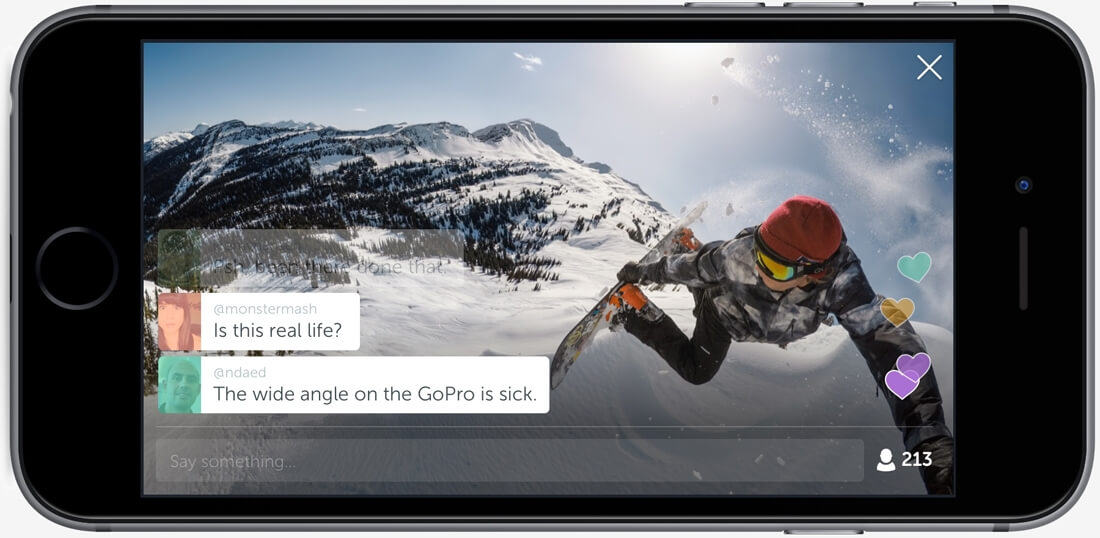

Announcing the new feature earlier today on Medium, Periscope said its service has helped forge real-life friendships and online communities because it’s live, unfiltered and open. Unfortunately, that openness also leaves Periscope and its users more exposed to abuse and spam.

Spam is relatively easy to tackle, but the same tactics don’t work for abusive comments, because whether a comment is acceptable depends on context. For example, a comment that’s fine during a comedy broadcast might be out of place in a more serious one.

The best approach, it would seem, is to let other viewers determine what is and isn’t acceptable in the context of the broadcast they’re watching.

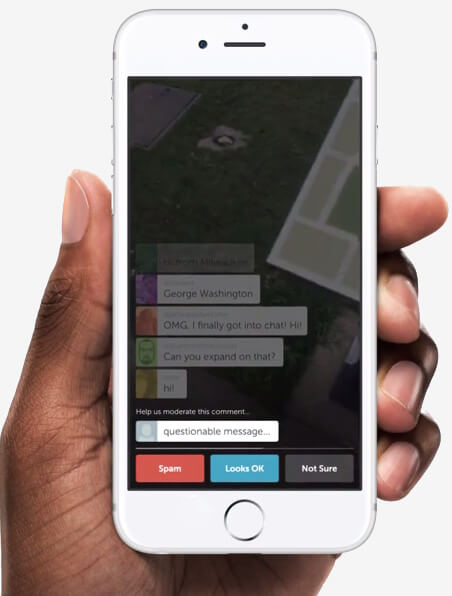

During a live broadcast, viewers can report a comment as abuse or spam. Once a viewer has flagged a message, they will no longer see comments from that user for the remainder of the broadcast. The flagged comment is then shown to a few randomly selected viewers, who are asked to vote on whether it is indeed spam or abuse. Voters can also select “not sure” if they don’t know or weren’t paying attention.

If a jury of peers deems the comment abuse or spam, the commenter’s ability to chat is temporarily suspended. Repeat offenders are banned from chatting for the rest of the broadcast.

It’s worth noting that participation is entirely optional: broadcasters can disable comment moderation, and viewers can opt out of voting from the settings menu.
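To make the flag-then-vote flow concrete, here is a minimal Python sketch of how such a jury system could work. It is not Periscope’s code, and Periscope has not published its rules. The jury size (3), the simple-majority verdict, the “second strike means a ban” rule, and every name below (`Broadcast`, `flag`, `resolve`, `Vote`) are hypothetical assumptions chosen for illustration. Suspension timing is not modeled.

```python
from __future__ import annotations

import random
from dataclasses import dataclass, field
from enum import Enum

# Hypothetical parameters: the article only says "a few" viewers vote and
# that "repeat offenders" are banned; these exact values are assumptions.
JURY_SIZE = 3
STRIKES_BEFORE_BAN = 2


class Vote(Enum):
    ABUSE_OR_SPAM = "abuse_or_spam"
    NOT_ABUSE = "not_abuse"
    NOT_SURE = "not_sure"  # abstention; ignored when counting


@dataclass
class Broadcast:
    viewers: set[str]
    moderation_enabled: bool = True
    opted_out: set[str] = field(default_factory=set)  # viewers who turned voting off
    muted: dict[str, set[str]] = field(default_factory=dict)  # reporter -> hidden commenters
    strikes: dict[str, int] = field(default_factory=dict)
    banned: set[str] = field(default_factory=set)

    def flag(self, reporter: str, commenter: str, rng=random) -> list[str]:
        """Hide the commenter from the reporter, then pick a random jury."""
        self.muted.setdefault(reporter, set()).add(commenter)
        if not self.moderation_enabled:
            return []  # assumption: no jury when the broadcaster disabled moderation
        # Exclude the reporter, the accused commenter, and opted-out viewers.
        eligible = sorted(self.viewers - self.opted_out - {reporter, commenter})
        return rng.sample(eligible, min(JURY_SIZE, len(eligible)))

    def resolve(self, commenter: str, votes: list[Vote]) -> str:
        """Apply an assumed simple-majority verdict to the jury's votes."""
        decided = [v for v in votes if v is not Vote.NOT_SURE]
        guilty = sum(v is Vote.ABUSE_OR_SPAM for v in decided)
        if guilty * 2 <= len(decided):  # also covers "everyone was not sure"
            return "no_action"
        self.strikes[commenter] = self.strikes.get(commenter, 0) + 1
        if self.strikes[commenter] >= STRIKES_BEFORE_BAN:
            self.banned.add(commenter)
            return "banned_for_broadcast"
        return "temporarily_suspended"
```

In this sketch, “not sure” votes are treated as abstentions, so a jury that mostly wasn’t paying attention cannot convict on a single vote. Whether Periscope counts abstentions this way is an assumption.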

https://www.techspot.com/news/65047-periscope-taking-crowdsourced-approach-comment-moderation.html